Advances in Medical Education and Practice, Volume 11

Receiving Real-Time Clinical Feedback: A Workshop and OSTE Assessment for Medical Students

Authors: Matthews A, Hall M, Parra JM, Hayes MM, Beltran CP, Ranchoff BL, Sullivan AM, William JH

Received 10 July 2020

Accepted for publication 1 October 2020

Published 12 November 2020 Volume 2020:11 Pages 861–867

DOI https://doi.org/10.2147/AMEP.S271623

Checked for plagiarism Yes

Review by Single anonymous peer review

Peer reviewer comments 2

Editor who approved publication: Dr Md Anwarul Azim Majumder

Andrew Matthews,¹ Matthew Hall,² Jose M Parra,³ Margaret M Hayes,⁴ Christine P Beltran,³ Brittany L Ranchoff,⁵ Amy M Sullivan,⁴ Jeffrey H William²

¹Perelman School of Medicine, University of Pennsylvania Health Systems, Department of Medicine, Philadelphia, PA, USA; ²Harvard Medical School, Beth Israel Deaconess Medical Center, Boston, MA, USA; ³Carl J. Shapiro Institute for Education and Research, Beth Israel Deaconess Medical Center, Boston, MA, USA; ⁴Harvard Medical School; Carl J. Shapiro Institute for Education and Research, Beth Israel Deaconess Medical Center, Boston, MA, USA; ⁵Department of Health Promotion and Policy, University of Massachusetts Amherst School of Public Health and Health Sciences, Amherst, MA, USA

Correspondence: Jeffrey H William

Harvard Medical School, Beth Israel Deaconess Medical Center, Boston, MA 02215, USA


Background: Many programs designed to improve feedback to students focus on faculty’s ability to provide a safe learning environment and specific, actionable suggestions for improvement. Little attention has been paid to improving students’ attitudes and skills in accepting and responding to feedback effectively. Effective “real-time” feedback in the clinical setting depends on both the skill of the teacher and the learner’s ability to receive the feedback. Medical students entering their clinical clerkships are not formally trained in receiving feedback, despite the substantial amount of feedback they receive during this time.

Methods: We developed and implemented a one-hour workshop to teach medical students strategies for effectively receiving and responding to “real-time” (formative) feedback in the clinical environment. Subjective confidence and skill in receiving real-time feedback were assessed in pre- and post-workshop surveys. Objective performance in receiving feedback was evaluated before and after the workshop using an objective structured teaching exercise (OSTE), a simulated feedback encounter designed to re-create common clinical and cognitive pitfalls for medical students.

Results: After a single workshop, students self-reported increased confidence (mean 6.0 to 7.4 out of 10, P<0.01) and skill (mean 6.0 to 7.0 out of 10, P=0.10). Post-workshop OSTE scores, an objective measure of skill in receiving feedback, were also significantly higher than pre-workshop OSTE scores (mean 28.8 to 34.5 out of 40, P=0.0131).

Conclusion: A one-hour workshop dedicated to strategies in receiving real-time feedback may improve effective feedback reception as well as self-perceived skill and confidence in receiving feedback. Providing strategies to trainees to improve their ability to effectively receive feedback may be a high-yield approach to both strengthen the power of feedback in the clinical environment and enrich the clinical experience of the medical student.

Keywords: feedback, OSTE, medical student, learning environment

A Letter to the Editor has been published for this article.

A Response to Letter has been published for this article.

Introduction

As “an informed, non-evaluative, and objective appraisal of performance intended to improve clinical skills,” feedback is crucial to medical education.1 When structured and delivered appropriately, feedback can improve clinical performance by encouraging learner self-reflection, reinforcing positive behaviors, correcting harmful behaviors, and stimulating personal and professional growth.2 Poorly structured and/or delivered feedback can demotivate learners and lead to a deterioration of performance.3

“Real-time” feedback is defined as positive or constructive statements about clinical performance shared between trainees and supervisors shortly after an observed behavior in a patient care setting (ie, at the bedside). Real-time feedback is formative and requires setting expectations so that the learner is prepared to receive feedback in this manner.4 It is often viewed as “lower stakes” and should occur more frequently than summative feedback, which is inherently more evaluative and is often delivered at the end of a clinical rotation, not necessarily by the individuals who observed the behavior. Effective real-time feedback depends both on the teacher’s delivery and on the learner’s ability to receive the feedback.

As receivers of feedback, learners bring attitudes and behaviors that are just as important as those of the feedback providers. Learners may find feedback ineffective for a variety of reasons unrelated to faculty delivery, including their own inability to self-assess, lack of metacognitive abilities, and defensiveness toward corrective feedback.5,6 Cultivating learner ownership and self-assessment helps initiate the behavior changes that feedback is meant to impart.7,8 Receiving feedback in the clinical setting is a teachable skill, and its acquisition can be practiced and evaluated with an objective structured teaching exercise (OSTE).6,9,10 Previously published studies describing workshops targeted to the feedback recipient have reported subjective measures of improvement, including increased confidence and frequency of feedback-seeking behaviors, but did not include objective measures of performance in effectively receiving feedback.11–15

While most of the published literature has focused on faculty development for feedback providers,16–18 we directed our attention to the learner (the feedback recipient). Our workshop addressed simple strategies for effectively receiving feedback and was targeted to medical students just prior to the start of their first clinical clerkship. Our primary hypothesis was that this training session dedicated to feedback-receiving best practices would improve performance during an OSTE, a simulated feedback encounter similar in style and format to an objective structured clinical examination (OSCE). Our secondary hypothesis was that this training session would increase students’ self-perceived confidence in their ability to receive feedback.

Methods

Participants

All second-year medical students starting their clinical rotations at our large academic teaching hospital were required to attend a one-hour live workshop on feedback as part of their orientation. Students were emailed prior to the session and asked to complete a pre-workshop survey and an OSTE. At the conclusion of the workshop, all participants, whether or not they had completed the initial survey or OSTE, were invited to complete an optional post-workshop survey and OSTE. The Beth Israel Deaconess Medical Center (BIDMC) Institutional Review Board determined that the study fell under the category of educational quality improvement and was not subject to review as human subjects research.

OSTEs to Assess Performance

Objective structured teaching exercises (OSTEs) are increasingly used to assess the acquisition of clinical and communication skills in faculty and learners.19–23 The OSTE, an adaptation of the standardized patient model of assessment originally developed in the 1960s, provides an innovative way to teach and enhance educational skills and, importantly, also enables assessment of performance.10,21 Participants in OSTEs have reported enjoying the experience and feeling that the technique improved the skills being evaluated.22 While the literature does not necessarily demonstrate that OSTEs improve teaching behaviors, they have been shown to be helpful assessment tools.23,24 In our study, the OSTE was used as an assessment tool for learners, specifically designed to measure their skills in receiving feedback.

Pre-Workshop Survey

Surveys were created iteratively by the study authors according to best practices in survey design.25 Pre-testing, including cognitive interviewing, was carried out with four learners who were not part of the study population. Questions were revised and tested again. Pilot testing occurred on a subset of trainees who were not participants in the study. Survey domains covered self-reported comfort in receiving feedback, as well as skills in receiving feedback. Second-year medical students voluntarily completed a survey detailing their current perceptions of feedback during prior non-clerkship inpatient rotations. All participants were then invited to practice their feedback-receiving skills in a pre-workshop OSTE.

Pre-Workshop OSTE

We created OSTE scenarios and rubrics to objectively score performance in receiving feedback. One-page scenarios for standardized faculty and students were developed prior to each session based on examples from a variety of specialties within medicine. A standardized Likert-scale rubric was created to measure performance across eight domains, each scored from 1 (minimum) to 5 (maximum), for a total possible score of 40 (Supplementary Material – OSTE rubric).
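As a minimal illustration of the rubric arithmetic described above (eight domains, each scored 1 to 5, summed to a total between 8 and 40), the Python sketch below validates and totals one encounter's domain scores. The function and constant names are hypothetical, and the example ratings are invented for illustration; they are not study data.

```python
from typing import Sequence

# Hypothetical constants mirroring the rubric described in the text:
# eight domains, each rated on a 1-5 Likert scale.
NUM_DOMAINS = 8
MIN_SCORE = 1
MAX_SCORE = 5


def oste_total(domain_scores: Sequence[int]) -> int:
    """Validate eight 1-5 domain ratings and return their sum (8 to 40)."""
    if len(domain_scores) != NUM_DOMAINS:
        raise ValueError(
            f"expected {NUM_DOMAINS} domain scores, got {len(domain_scores)}"
        )
    for score in domain_scores:
        if not MIN_SCORE <= score <= MAX_SCORE:
            raise ValueError(f"domain score {score} is outside {MIN_SCORE}-{MAX_SCORE}")
    return sum(domain_scores)


if __name__ == "__main__":
    # Invented ratings for one hypothetical encounter (not study data).
    example = [4, 5, 3, 4, 4, 5, 4, 3]
    print(oste_total(example))  # 32
```

Rejecting out-of-range or missing ratings up front keeps every total within the 8 to 40 range the rubric allows.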

During brief 10-minute OSTE sessions, faculty and students received the standardized scenarios, after which faculty gave the student participants at most five minutes of feedback focused on the areas highlighted in the rubric. All study authors were trained on the materials and scored each OSTE encounter using the rubrics.

Workshop (Intervention)

During a day-long required orientation for their upcoming year of clinical clerkships, medical students attended a one-hour interactive workshop facilitated by one of the study authors (JHW). The workshop, entitled “Receiving Feedback in the Clinical Setting,” included a brief literature review of medical student comfort and skill in receiving feedback, along with specific examples of how feedback recipients often deflect and dismiss constructive feedback. Approximately half of the workshop was devoted to role play, in which pairs of medical students practiced a set of strategies for receiving real-time feedback offered by the facilitator in response to a relatable clinical scenario (Supplementary Material – Presentation). In brief, the strategies were: Listen, Clarify, Accept, Be Proactive, and Express Gratitude/Say “Thank You”. The session closed with a structured debrief of the feedback-receiving experience and an offer to practice these new skills further in future study-related OSTEs.

Post-Workshop Survey

At the conclusion of the workshop, medical students were invited to complete a voluntary online survey detailing their current perceptions of feedback during inpatient rotations. These participants were again invited to practice their feedback-receiving skills in a post-workshop OSTE.

Post-Workshop OSTE

We created OSTE scenarios that were different from the scenarios presented in the pre-workshop OSTEs and followed the same protocol to measure performance. These OSTEs occurred within one to two months of the workshop.

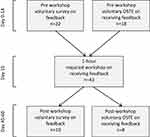

A flow chart of the study design is shown in Figure 1.

Figure 1 Flow chart for workshop delivery and assessment at a single hospital clerkship site.

Analysis

Outcomes were (1) self-perceived confidence in the ability to receive feedback and skill in incorporating feedback into behavior, as measured by the post-workshop survey, and (2) objective learner performance on the post-workshop OSTE rubric. Data were analyzed using Excel software (Microsoft, Redmond, WA), and basic descriptive statistics were compiled. We conducted paired t-tests to compare pre- and post-workshop survey reports of confidence and skill in receiving feedback, and used the Mann–Whitney U (Wilcoxon rank-sum) test to assess differences in OSTE performance before and after the workshop. We set a pre-determined alpha of 0.05. Effect sizes for mean differences are reported as Cohen’s d (mean difference divided by the pre-workshop standard deviation; 0.5–0.7 = moderate, 0.8 and higher = large).
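The effect-size definition above can be written as d = (mean_post - mean_pre) / SD_pre. Dividing by the baseline standard deviation alone, rather than a pooled standard deviation, is a variant sometimes called Glass's delta. The Python sketch below, using only the standard library, computes this quantity, labels it with the thresholds stated above, and computes a paired t statistic from within-person differences. It assumes sample standard deviations (n - 1 denominator); the function names are hypothetical, the input ratings are invented, and this is not the authors' analysis, which was performed in Excel.

```python
import math
import statistics
from typing import Sequence


def effect_size_d(pre: Sequence[float], post: Sequence[float]) -> float:
    """Mean difference (post - pre) divided by the pre-workshop sample SD."""
    mean_diff = statistics.mean(post) - statistics.mean(pre)
    return mean_diff / statistics.stdev(pre)  # stdev uses the n - 1 denominator


def label_effect(d: float) -> str:
    """Label |d| with the thresholds stated in the text.

    Assumption: values between 0.7 and 0.8, which the text leaves
    unassigned, are treated here as moderate.
    """
    size = abs(d)
    if size >= 0.8:
        return "large"
    if size >= 0.5:
        return "moderate"
    return "below moderate"


def paired_t_statistic(pre: Sequence[float], post: Sequence[float]) -> float:
    """Paired t: mean within-person difference divided by its standard error."""
    if len(pre) != len(post):
        raise ValueError("paired samples must have the same length")
    diffs = [after - before for before, after in zip(pre, post)]
    standard_error = statistics.stdev(diffs) / math.sqrt(len(diffs))
    return statistics.mean(diffs) / standard_error


if __name__ == "__main__":
    # Invented 1-10 ratings for three hypothetical students (not study data).
    pre_scores = [5, 6, 7]
    post_scores = [6, 8, 8]
    d = effect_size_d(pre_scores, post_scores)
    print(round(d, 2), label_effect(d))  # 1.33 large
    print(round(paired_t_statistic(pre_scores, post_scores), 2))  # 4.0
```

Converting the t statistic to a P value requires the t distribution with n - 1 degrees of freedom; a statistics package such as `scipy.stats.ttest_rel` returns both.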

Results

Twenty-two second-year medical students, 55% of whom identified as female, completed the pre-workshop survey, for a response rate of 51% (22/43). All workshop attendees reported that each of the four workshop objectives was “mostly” or “completely” met. As shown in Figure 2, all students reported receiving real-time feedback in the three months prior to the survey, and all but two students had received delayed feedback. More than half of the students reported three or more instances of each kind of feedback in the prior three months.

Figure 2 Medical students’ (n=22) perceived learning environment: self-reported frequency of real-time and delayed feedback per week on the pre-intervention survey.

Thirty-two percent of students believed they could be more skilled in receiving and incorporating feedback. While 68% (n=15) of students were “very” or “extremely” comfortable receiving feedback, only a little more than one-third (n=8) reported being “very” or “extremely” skilled in incorporating feedback into behavior changes (Figure 3).

Figure 3 Medical students’ (n=22) perceived learning environment: self-reported skill and confidence in feedback reception on the pre-intervention survey.

After a single workshop, students reported increases in both confidence (mean 6.0 to 7.4, P<0.01, Cohen’s d=1.4) and skill (mean 6.0 to 7.0, P=0.10, Cohen’s d=1.3) on a scale of 1 to 10 (10 being the most skilled and/or confident). The OSTE was completed by 18 students before the workshop and by a separate group of 8 students after it (no overlap between the groups); mean OSTE scores were significantly higher in the post-workshop group than in the pre-workshop group (34.5 vs 28.8 out of 40 points; P=0.013 by Mann–Whitney U test, Cohen’s d=1.14).
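Because the pre- and post-workshop OSTE groups above did not overlap (18 and 8 students), the comparison calls for a test for two independent samples, such as the Mann–Whitney U test, rather than a paired test. The Python sketch below computes the U statistic from pooled ranks, giving tied scores the average of their ranks. It reports U only; a P value would come from a statistics package (for example, `scipy.stats.mannwhitneyu`). Function names and example scores are hypothetical and are not study data.

```python
from typing import List, Sequence, Tuple


def average_ranks(values: Sequence[float]) -> List[float]:
    """Rank values 1..n in ascending order; tied values share their mean rank."""
    order = sorted(range(len(values)), key=lambda idx: values[idx])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend `end` across the run of values tied with values[order[start]].
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        # Sorted positions start..end correspond to ranks start+1..end+1.
        shared_rank = (start + end) / 2 + 1
        for pos in range(start, end + 1):
            ranks[order[pos]] = shared_rank
        start = end + 1
    return ranks


def mann_whitney_u(
    group_a: Sequence[float], group_b: Sequence[float]
) -> Tuple[float, float]:
    """Return (U_a, U_b) for two independent samples; U_a + U_b = n_a * n_b."""
    n_a, n_b = len(group_a), len(group_b)
    ranks = average_ranks(list(group_a) + list(group_b))
    rank_sum_a = sum(ranks[:n_a])
    u_a = rank_sum_a - n_a * (n_a + 1) / 2
    u_b = n_a * n_b - u_a
    return u_a, u_b


if __name__ == "__main__":
    # Invented OSTE totals (out of 40) for two small, non-overlapping groups.
    pre_group = [27, 29, 30, 29]
    post_group = [33, 35, 29]
    print(mann_whitney_u(pre_group, post_group))  # (2.0, 10.0)
```

U_a counts, over all cross-group pairs, how often a score in the first group exceeds one in the second (ties count as one half), so a small U_a relative to n_a * n_b indicates that the second group tends to score higher.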

Discussion

The purpose of this study was to assess the effectiveness of a one-hour workshop on receiving feedback for second-year medical students through both subjective (survey) and objective (performance on pre- and post-workshop OSTEs) measures. This short intervention, which included both didactic instruction and opportunities for active learning through role play, was associated with increases in self-perceived confidence and skill in accepting and acting on feedback, as well as with higher post-workshop OSTE scores, all with large effect sizes. The opportunity to participate in peer-to-peer deliberate practice of these simple skills and strategies likely contributed to the self-perceived increase in confidence and skill, as well as to the objectively measured ability to apply these skills. By combining active learning, clear and concise content, and opportunities to apply this content, even a short, targeted session devoted entirely to receiving feedback may be enough to increase confidence and skill in receiving clinical feedback.

This intervention is novel in that it targets students receiving feedback rather than faculty giving feedback. Historically, although students desire extensive feedback in the clinical realm to improve their performance, there has been little instruction on how to receive or incorporate real-time feedback into clinical practice.6 A single one-hour workshop may improve the adoption of easily applicable skills and strategies for receiving feedback. Given evidence that physicians are generally poor self-assessors,26,27 providing early trainees with strategies to graciously and effectively receive feedback may be a high-yield approach to strengthening the power of feedback. All of our students reported in their surveys that they had received at least some real-time feedback in the prior three months. If real-time feedback provided at the point of patient care becomes more commonplace (eg, through the increased use of bedside rounding), learners must be equipped with tools to receive it effectively.

We evaluated the effectiveness of the workshop both subjectively and objectively through surveys and OSTEs. After the workshop, at which they practiced the learned strategies, students reported improvements, and students who completed post-workshop OSTEs scored higher in their ability to receive feedback than those who completed pre-workshop OSTEs. Integrating novel curricula and strategies that prepare medical students to effectively receive and use feedback may enhance the training of the most novice trainees. Though not specifically evaluated in this study, post-graduate trainees (ie, interns and residents), who have more clinical experience and exposure to real-time feedback, may derive similar benefits from dedicated training in receiving feedback.

Our study had several limitations. The small sample size meant that statistical power was low, although this cohort was an appropriate cross-section of a class of nearly 200 students. Additionally, participants were recruited from one medical school, limiting the generalizability of our findings to medical students at other institutions or to trainees at different levels of graduate medical education. Because participation in the pre- and post-workshop OSTEs and surveys was voluntary, the study may have preferentially enrolled individuals with a special interest in the topic or the behavior change addressed. We attempted to minimize this bias by providing all students with easy access to the survey materials and by offering multiple opportunities on different days and at different times to participate in the OSTE. Furthermore, because we wished to provide the same learning resources to all students and this was a required orientation session, our study could not ethically include a separate control arm without the workshop. Without a control or comparison group, we cannot infer that the observed changes in learning and behavior were caused by the workshop rather than by other clinical activities or maturation. Additionally, because of low enrollment in the post-workshop OSTE, we could not pair pre- and post-workshop OSTE performance for enough participants to generate robust data, and so could not measure within-person change between the two time points. Given our study constraints, we were also unable to determine whether the increase in confidence in receiving feedback measured shortly after the workshop and OSTE sessions was durable over the clinical year. OSTE results are also prone to observer bias (the Hawthorne effect), as participants know they are being evaluated and may act differently than in an unmonitored setting. Despite this, OSTEs have been used in numerous studies and are considered useful tools for the objective assessment of performance.

Despite its limitations, our study also had several strengths. The simplicity and timing of the workshop (just before the beginning of clerkships) likely meant that students were cognitively primed to retain the information and had increased interest in improving their feedback-receiving skills. The use of distinct pre- and post-workshop OSTE scenarios helped ensure that participants learned approaches and behaviors applicable to any feedback context, rather than simply learning to perform better in a particular OSTE scenario. To our knowledge, while several studies have reported subjective outcomes regarding how students believe they receive feedback, this is the only study that has also included objective measures of performance through an OSTE.

Conclusion

Our findings support the use of a one-hour workshop dedicated to strategies for receiving real-time feedback to improve effective feedback reception as well as self-perceived skill and confidence in receiving feedback. Further research should extend these promising initial results to additional groups of trainees in post-graduate training and across specialties. We believe further attention should be paid to feedback recipients in order to improve the learning environment in the clinical setting.

Acknowledgments

The study authors acknowledge the Carl J. Shapiro Institute for Medical Education and Research at the Beth Israel Deaconess Medical Center for its support of this work.

Disclosure

No potential conflict of interest was reported by the authors.

References

1. Ende J. Feedback in clinical medical education. JAMA. 1983;250(6):777–781. doi:10.1001/jama.1983.03340060055026

2. Cantillon P, Sargeant J. Giving feedback in clinical settings. BMJ. 2008;337:a1961. doi:10.1136/bmj.a1961

3. Kluger AN, Denisi A. The effects of feedback interventions on performance: a historical review, a meta-analysis, and a preliminary feedback intervention theory. Psychol Bull. 1996;119(2):254–284. doi:10.1037/0033-2909.119.2.254

4. Kritek P. Strategies for effective feedback. Ann Am Thorac Soc. 2015;12(4):557–560. doi:10.1513/AnnalsATS.201411-524FR

5. Dunning D, Heath C, Suls JM. Flawed self-assessment: implications for health, education, and the workplace. Psychol Sci Public Interest. 2004;5(3):69–106. doi:10.1111/j.1529-1006.2004.00018.x

6. Bing-You RG, Trowbridge RL. Why medical educators may be failing at feedback. JAMA. 2009;302(12):1330. doi:10.1001/jama.2009.1393

7. Sharma R, Jain A, Gupta N, Garg S, Batta M, Dhir SK. Impact of self-assessment by students on their learning. Int J Appl Basic Med Res. 2016;6(3):226–229. doi:10.4103/2229-516X.186961

8. Wynia MK. The role of professionalism and self-regulation in detecting impaired or incompetent physicians. JAMA. 2010;304(2):210–212. doi:10.1001/jama.2010.945

9. Algiraigri AH. Ten tips for receiving feedback effectively in clinical practice. Med Educ Online. 2014;19:25141. doi:10.3402/meo.v19.25141

10. Sturpe DA, Schaivone KA. A primer for objective structured teaching exercises. Am J Pharm Educ. 2014;78(5):104. doi:10.5688/ajpe785104

11. Noble C, Billett S, Armit L, et al. “It’s yours to take”: generating learner feedback literacy in the workplace. Adv Health Sci Educ Theory Pract. 2020;25(1):55–74. doi:10.1007/s10459-019-09905-5

12. Yau BN, Chen AS, Ownby AR, Hsieh P, Ford CD. Soliciting feedback on the wards: a peer-to-peer workshop. Clin Teach. 2020;17(3):280–285. doi:10.1111/tct.13069

13. Bing-You RG, Bertsch T, Thompson JA. Coaching medical students in receiving effective feedback. Teach Learn Med. 1998;10(4):228–231. doi:10.1207/S15328015TLM1004_6

14. Milan FB, Dyche L, Fletcher J. “How am I doing?” Teaching medical students to elicit feedback during their clerkships. Med Teach. 2011;33(11):904–910. doi:10.3109/0142159X.2011.588732

15. McGinness HT, Caldwell PHY, Gunasekera H, Scott KM. An educational intervention to increase student engagement in feedback. Med Teach. 2020;1–9. doi:10.1080/0142159X.2020.1804055

16. Aagaard E, Czernik Z, Rossi C, Guiton G. Giving effective feedback: a faculty development online module and workshop. MedEdPORTAL Publ. 2010;6(6). doi:10.15766/mep_2374-8265.8119

17. Sargeant J, Armson H, Driessen E, et al. Evidence-informed facilitated feedback: the R2C2 feedback model. MedEdPORTAL Publ. 2016;12(12). doi:10.15766/mep_2374-8265.10387

18. Schlair S, Dyche L, Milan F. Longitudinal faculty development program to promote effective observation and feedback skills in direct clinical observation. MedEdPORTAL J Teach Learn Resour. 2017;13(13):10648. doi:10.15766/mep_2374-8265.10648

19. Tucker CR, Choby BA, Moore A, et al. Speaking up: using OSTEs to understand how medical students address professionalism lapses. Med Educ Online. 2016;21:32610. doi:10.3402/meo.v21.32610

20. Cerrone SA, Adelman P, Akbar S, Yacht AC, Fornari A. Using Objective Structured Teaching Encounters (OSTEs) to prepare chief residents to be emotionally intelligent leaders. Med Educ Online. 2017;22(1):1320186. doi:10.1080/10872981.2017.1320186

21. Barrows HS, Abrahamson S. The programmed patient: a technique for appraising student performance in clinical neurology. J Med Educ. 1964;39:802–805.

22. Trowbridge RL, Snydman LK, Skolfield J, Hafler J, Bing-You RG. A systematic review of the use and effectiveness of the Objective Structured Teaching Encounter. Med Teach. 2011;33(11):893–903. doi:10.3109/0142159X.2011.577463

23. Julian K, Appelle N, O’Sullivan P, Morrison EH, Wamsley M. The impact of an objective structured teaching evaluation on faculty teaching skills. Teach Learn Med. 2012;24(1):3–7. doi:10.1080/10401334.2012.641476

24. Morrison EH, Rucker L, Boker JR, et al. The effect of a 13-hour curriculum to improve residents’ teaching skills: a randomized trial. Ann Intern Med. 2004;141(4):257–263. doi:10.7326/0003-4819-141-4-200408170-00005

25. Fowler Jr FJ. Improving Survey Questions: Design and Evaluation.

26. Eva KW, Regehr G. “I’ll never play professional football” and other fallacies of self-assessment. J Contin Educ Health Prof. 2008;28(1):14–19. doi:10.1002/chp.150

27. Davis DA, Mazmanian PE, Fordis M, Van Harrison R, Thorpe KE, Perrier L. Accuracy of physician self-assessment compared with observed measures of competence: a systematic review. JAMA. 2006;296(9):1094–1102. doi:10.1001/jama.296.9.1094

© 2020 The Author(s). This work is published and licensed by Dove Medical Press Limited. The full terms of this license are available at https://www.dovepress.com/terms.php and incorporate the Creative Commons Attribution - Non Commercial (unported, v3.0) License.

By accessing the work you hereby accept the Terms. Non-commercial uses of the work are permitted without any further permission from Dove Medical Press Limited, provided the work is properly attributed. For permission for commercial use of this work, please see paragraphs 4.2 and 5 of our Terms.
