Advances in Medical Education and Practice, Volume 14

Medical Students’ Perspective on Assessment Mechanism During Problem-Based Learning at Debre Tabor University: An Explanatory Mixed Study

Authors Animaw Z, Asaminew T

Received 13 April 2023

Accepted for publication 2 August 2023

Published 8 August 2023 Volume 2023:14 Pages 859–873

DOI https://doi.org/10.2147/AMEP.S386124

Checked for plagiarism Yes

Review by Single anonymous peer review

Peer reviewer comments 2

Editor who approved publication: Prof. Dr. Balakrishnan Nair

Zelalem Animaw,1,2 Tsedeke Asaminew3

1Department of Biomedical Sciences, College Health Sciences, Debre Tabor University, Debre Tabor, Ethiopia; 2Department of Health Professional Education, College Health Sciences, Jimma University, Jimma, Ethiopia; 3Department of Ophthalmology, Saint Paul’s Hospital Millennium Medical College, Addis Ababa, Ethiopia

Correspondence: Zelalem Animaw, Tel +251913122352, Email [email protected]

Background: Assessment in problem-based learning should aim to improve students’ active learning. In due course, significant student involvement in any assessment process may aid them in meeting the curriculum’s objectives.

Purpose: The primary goal of this study is to assess medical students’ attitudes towards the assessment method used during PBL tutorials at Debre Tabor College of Health Sciences.

Methods: An explanatory mixed-methods study was conducted at Debre Tabor University. Cross-sectional survey and phenomenological study designs were used for the quantitative and qualitative data, respectively. A self-administered questionnaire with a 5-point Likert scale was used to collect quantitative data, while focus group discussions (FGDs) were used to collect qualitative data.

Results: The current study included 192 out of 195 medical students. Of the study participants, 40%, 57.2%, and 43.2% felt that the tutor did not provide constructive feedback, facilitate self-assessment/self-reflection, or encourage peer assessment, respectively. A statistically significant difference in mean agreement was observed regarding the role of tutors in facilitating self-assessment and peer assessment. Students perceived that their PBL assessment did not take into account punctuality or contribution to the discussion. They also perceived it as biased, because tutors were influenced by factors such as first impressions and students’ academic rank. In addition, they stated that they did not receive sufficient information about the assessment in PBL.

Conclusion: According to the findings of this study, medical students believed they were not fairly assessed during their PBL tutorial. Because of uncertainty about the evaluation process, a neutral perspective on the assessment of comprehension skills was observed. The students also perceived that the tutors’ ability to assess students, poor feedback experience, and limited information about the assessment mechanism influenced their PBL assessment.

Keywords: problem based learning, medicine, assessment, perception

Introduction

The central concepts of Problem Based Learning (PBL), self-directed learning, and lifelong learning are supported by assumptions from various learning theories, particularly social constructivism and andragogy, in the sense that adult learners are autonomous, self-directed, and goal-oriented.1 Assessment has always been a critical component of education, particularly when the curriculum or intentions are based on adult principles such as student-centered learning and lifelong learning.2 Assessment methods that are aligned with objectives and teaching/learning methods have a significant impact on curriculum implementation and success.3

Traditional assessment methods were primarily concerned with the breadth of content knowledge. As a result, they failed to assess students’ ability to learn independently, think critically, and communicate effectively.4 A newly customized assessment method, particularly in PBL, is expected to instill questions such as “what is important for students to learn?” “Does it encourage self-directed learning and improve instructional practice?”5

Students’ perceptions and levels of satisfaction with their learning environment must be given the same consideration as the design of the assessment method,6 because students are the primary customers of education, and the ultimate success of education can be measured by their satisfaction.7

PBL assessment should aim to improve students’ active learning processes. This necessitates evaluating students’ perspectives on the assessment’s method, process, and outcomes. In due course, significant student involvement in any assessment process may aid them in meeting the curriculum’s objectives.8 As a result, assessment methods designed to measure expected PBL competencies must take into account how students perceived it, as this will affect their learning.9

Following the introduction of New Innovative Medical Education in 2012, PBL was introduced as an innovative teaching method at ten universities and three hospitals in Ethiopia.10 Consequently, Debre Tabor University (DTU) implemented PBL as a significant learning technique as part of its “Hybrid Innovative Curriculum” in 2013, notably within the medical and midwifery departments. PBL adoption at DTU began with training sessions for both current and newly hired academic personnel. Following curriculum orientation, the program covered PBL case building, PBL session tutoring, and PBL assessment. Multidisciplinary teams of academic personnel from clinical, biomedical, and public health disciplines were established to generate and review all PBL cases in advance of each semester. However, staff turnover remains a major challenge.11

PBL lessons at DTU are conducted in small groups of 6 to 8 students who meet twice a week for around 2 hours each. Learners identify problems, propose theories, and reveal underlying mechanisms during the initial meeting. During this phase, information is gradually revealed to aid in the learning process. The days between the two sessions are then set aside for independent or self-directed study on the identified learning challenges. During the second meeting, students participate in active discussions to apply their knowledge to the problem at hand and synthesize the learning agendas addressed. Moreover, self-reflection, peer reflection, and tutor reflection on learners’ performance during each session are strongly promoted as formative assessment to enhance the students’ learning experience.11

Learner performance during PBL sessions accounts for 20% of the entire module assessment. The assessment rubric was adapted from the University of Malaya’s Faculty of Medicine in Kuala Lumpur, Malaysia.12 Participation and communication, cooperation/team building, comprehension/reasoning, and knowledge/information collection are the four competencies (skills) covered by the rubric. Each skill has its own set of criteria to minimize inter-rater variation.11

Since the introduction of PBL, various scholars have attempted to describe their experiences with PBL implementations, opportunities, and challenges at various academic institutions/medical schools across the country.11,13,14 The most relevant finding from these studies concerns students’ perceptions of the assessment modality in PBL. However, the studies did not describe this issue in adequate depth.

Therefore, the findings of this study will provide critical information about medical students’ actual perceptions of and experiences with the assessment method in PBL, using a more focused mixed-methods approach.

Materials and Methods

Study Design

A sequential explanatory mixed-methods design was employed, in which the quantitative phase was followed by the qualitative phase. The quantitative phase used a cross-sectional survey design, while the qualitative phase was guided by a phenomenological study design.

Study Participants for Quantitative

All medical students (census) from the second to fifth year at the College of Health Sciences, DTU, were included in the study because they had been exposed to PBL tutorial sessions for more than a year.

Sample Size and Sampling for Qualitative

A total of 24 purposively selected study participants were included in the qualitative phase. The number of FGDs and study participants was determined based on data saturation during data collection and analysis. As a result, three FGDs of eight students each were conducted, with six students drawn from each cohort.

Data Collection

A self-administered questionnaire with a five-point Likert scale scored in ascending order (1 = Strongly Disagree, 2 = Disagree, 3 = Neutral, 4 = Agree, 5 = Strongly Agree) was adapted from published literature11,15–17 and the assessment tool currently used at DTU.12 The qualitative data were collected using focus group discussions (FGDs). The FGD guide was designed based on the findings from the quantitative data analysis.
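For readers who wish to reproduce this kind of summary, the following is a minimal sketch of how responses coded on the scale above could be collapsed into disagree/neutral/agree percentages. It assumes, as a common convention that this article does not state explicitly, that codes 1 and 2 are pooled as disagreement and codes 4 and 5 as agreement. The function name `summarize_item` and the example responses are hypothetical and are not study data.

```python
from collections import Counter

# Coding scheme stated in the text: 1 = Strongly Disagree ... 5 = Strongly Agree.
LIKERT_LABELS = {
    1: "Strongly Disagree",
    2: "Disagree",
    3: "Neutral",
    4: "Agree",
    5: "Strongly Agree",
}


def summarize_item(responses):
    """Return the percentage of responses that disagree, are neutral, or agree.

    Assumption: codes 1-2 are pooled as "disagree" and codes 4-5 as "agree".
    """
    if not responses:
        raise ValueError("no responses to summarize")
    for code in responses:
        if code not in LIKERT_LABELS:
            raise ValueError(f"invalid Likert code: {code!r}")
    counts = Counter(responses)
    n = len(responses)
    return {
        "disagree": 100 * (counts[1] + counts[2]) / n,
        "neutral": 100 * counts[3] / n,
        "agree": 100 * (counts[4] + counts[5]) / n,
    }


# Hypothetical responses from ten students to a single item (not study data).
example_item = [1, 2, 2, 3, 3, 4, 4, 4, 5, 2]
summary = summarize_item(example_item)
print(summary)
```

Pooling the two agreement and two disagreement categories gives one percentage per direction for each item, which is the form in which such figures are usually reported.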

Data Quality Assurance

A pre-test of the quantitative data collection tool was conducted with 100 students from the Midwifery department. Cronbach’s alpha coefficient was used as a measure of tool reliability and was found to be 0.83 (83% internal consistency). The FGD guide was reviewed and commented on by two experts before the FGDs to ensure its validity. Double coding was performed by the evaluators as a quality measure for the qualitative data.
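As an illustration of the reliability measure named above, the sketch below computes Cronbach’s alpha from a respondents-by-items score matrix using the standard formula α = k/(k − 1) × (1 − Σ item variances / variance of total scores). The function name `cronbach_alpha` and the pilot scores are hypothetical. The study itself used SPSS, so this is not the authors’ procedure.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    if len(scores) < 2:
        raise ValueError("need at least two respondents")
    k = len(scores[0])
    if k < 2:
        raise ValueError("need at least two items")
    if any(len(row) != k for row in scores):
        raise ValueError("every respondent must answer the same number of items")
    # Sample variance of each item across respondents.
    item_vars = [variance([row[i] for row in scores]) for i in range(k)]
    # Sample variance of each respondent's total score.
    total_var = variance([sum(row) for row in scores])
    if total_var == 0:
        raise ValueError("total scores have zero variance; alpha is undefined")
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical pilot data: five respondents, three Likert items (not study data).
pilot = [
    [4, 5, 4],
    [2, 3, 2],
    [3, 3, 4],
    [5, 4, 5],
    [1, 2, 2],
]
alpha = cronbach_alpha(pilot)
print(round(alpha, 2))
```

When every item gives each respondent the same score, the formula returns exactly 1, which is a quick sanity check on any implementation.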

Data Analysis

The data were entered and processed using IBM SPSS version 20. ANOVA was employed to investigate whether mean scores differed between the academic years of the study participants. A P-value <0.05 was considered statistically significant. The qualitative data were transcribed, coded, and thematically analyzed by the main author and three interviewers using the six-step thematic analysis described by Jack Caulfield: familiarization, coding, generating themes, reviewing themes, defining and naming themes, and writing up. Coding was finalized once the coders reached agreement.18
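The comparison of mean scores across academic years can be sketched as a one-way ANOVA. The code below assumes a one-way design with one group per year of study, which the text implies but does not state, and computes only the F statistic and its degrees of freedom. The P-value comes from the F distribution and would be reported by a statistics package such as the SPSS software used in the study. The function name `one_way_anova` and the scores are hypothetical.

```python
from statistics import mean


def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of groups of scores."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    if k < 2 or any(len(g) == 0 for g in groups) or n_total <= k:
        raise ValueError("need at least two non-empty groups and more scores than groups")
    grand_mean = mean([x for g in groups for x in g])
    group_means = [mean(g) for g in groups]
    # Variation of the group means around the grand mean, weighted by group size.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Variation of individual scores around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    if ss_within == 0:
        raise ValueError("no within-group variation; F is undefined")
    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical agreement scores for one item in three year groups (not study data).
year_groups = [[1, 2, 3], [2, 3, 4], [5, 6, 7]]
f_stat, df_between, df_within = one_way_anova(year_groups)
print(f_stat, df_between, df_within)
```

A large F value indicates that the means differ between groups by more than the spread within groups would suggest. A post hoc comparison is then needed to identify which years differ.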

Ethical Considerations

Ethical clearance was obtained from the Institutional Review Board (IRB) of Jimma University, Institute of Health. Permission to conduct the study was gained from Debre Tabor University, College of Health Sciences. Study participants were informed about the purpose and objective of the study. Verbal informed consent was taken prior to data collection as per the approval of the IRB. Confidentiality was maintained as the data collection was anonymous.

Results

Quantitative results

As illustrated in Table 1, a total of 192 of 195 medical students participated in the current study, a response rate of 98.5%.

Table 1 General Characteristics of Study Participants

Perception Towards Tutors in the Context of PBL Assessment

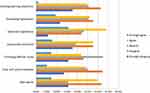

As shown in Figure 1, 70.8% of medical students felt that their PBL assessment was unfair, and 44.8% felt that it did not take into account their progress over time. During the PBL sessions, 40%, 57.2%, and 43.2% of study participants perceived that the tutor did not provide constructive feedback, facilitate self-assessment/self-reflection, or encourage peer assessment, respectively.

Figure 1 Medical students’ perception towards tutor’s experience during PBL assessment.

There is a statistically significant difference in the mean score of students’ perception across their year of study towards tutors facilitating self-assessment during a PBL session and their role in encouraging peer assessment (Table 2).

Table 2 Comparison of Students’ Perception Towards Tutors in the Context of PBL Assessment by Their Year of Study

Knowledge Acquisition

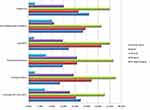

According to Figure 2, 57.3% and 44.2% of students disagreed that their PBL assessment takes into account their role in bringing new information and correct information, respectively. However, 51.1% and 46.4% of students thought their assessment reflected their ability to use appropriate scientific language and integrate newly acquired knowledge with prior knowledge, respectively.

Figure 2 Medical students’ perception towards knowledge acquisition component of their PBL assessment.

Table 3 compares the mean agreement scores, across academic years, for items measuring students’ perceptions of the knowledge acquisition component of PBL assessment. There was a statistically significant difference between academic years in responses to questions about whether PBL assessment reflects their role in bringing relevant information to each session and considers their use of appropriate scientific language. On these items, the mean agreement score of second-year medical students was significantly lower than that of third-year students.

Table 3 Comparison of Students’ Perception Towards Knowledge Acquisition Component of PBL Assessment by Their Year of Study

Comprehension

As shown in Figure 3, 47.4% and 45.8% of respondents disagreed that their assessment reflects their ability to report information in their own words and to present information, respectively. Similarly, 45.3% of students disagreed that their role as a facilitator of discussion is taken into account in their evaluation. However, 46.5% of students agreed that their grade reflects their ability to generate hypotheses to explain the problems under consideration. Table 4 also shows that students’ perceptions of PBL assessment in terms of comprehension skill did not differ significantly across academic years.

Table 4 Comparison of Students’ Perception of PBL Assessment with Respect to Comprehension Skill by Their Year of Study

Figure 3 Medical students’ perception towards PBL assessment in line with comprehension skill.

Communication

Almost half of the respondents disagreed that their PBL assessment took into account how they communicated, while 34% of students remained neutral in this regard. Meanwhile, only 15.6% of medical students agreed that their assessment considered their punctuality, while 62% disagreed (Figure 4). Table 5 shows that there was no statistically significant difference across academic years in perception of PBL assessment in relation to communication skills.

Table 5 Comparison of Students’ Perception of PBL Assessment with Respect to Communication Skill by Their Year of Study

Figure 4 Medical students’ perception towards PBL assessment with respect to communication skill.

Cooperation

Almost half of the students in this study disagreed that their PBL assessment takes into account their ability to interrupt others with comments, solicit feedback from the group, and organize the group, or their level of sympathy and leadership skills. However, about one-third of them chose the neutral option for these items (Figure 5). Table 6 shows that there was no significant difference across academic years in the items asking medical students about their perceptions of PBL assessment regarding cooperation skill.

Table 6 Comparison of Students’ Perception Towards Cooperation Skill Component of PBL Assessment by Their Year of Study

Figure 5 Medical students’ perception towards cooperation component of their PBL assessment.

Qualitative results

The FGDs included 24 participants drawn from all years of study. Four of them were female. Table 7 shows the results of the FGD analysis, which revealed three emerging themes and nine subthemes.

Table 7 Emerged Themes, Subthemes and Quotations from FGD Analysis

Tutors Related

Students who took part in the focus groups raised the issue of biased assessment, with tutors influenced by factors such as first impressions and academic rank. This is supported by the opinions of a middle-scoring third-year student and a low-scoring fourth-year student.

Participant #3 from FGD No#2:

Some tutors tend to give high marks if they are impressed by a student’s performance during their first encountered PBL session irrespective of the student’s performance in the subsequent sessions

Participant #6 from FGD No#1:

Tutors usually tend to give a higher mark for those students who are known for their higher cumulative GPA despite their actual performance during the PBL session.

Another issue raised by the participants was the consistency of the assessments. In this regard, tutors failed to conduct PBL assessment on a regular and timely basis during PBL sessions, which exposed it to recall bias and rendered it subjective and unreliable. This point is emphasised by the following quotes from second-year medical students:

Participant #7 from FGD No#3:

We were not usually assessed based on our performance during each PBL session rather at the end of each module. I believe this scenario affects our PBL evaluation since tutors have a high probability of forgetting our performance in each session.

Participant #4 from FGD No#2:

I remember that one of my tutors told me that he hasn’t evaluated my performance in every session when I appealed to show me my evaluation.

Students believed that PBL assessment did not accurately reflect their performance and progress. Furthermore, students believed that tutors’ ability to facilitate a PBL session influenced their assessment. The following excerpts shared similar ideas.

Participant #8 from FGD No#1:

I believe that most of my evaluation from PBL didn’t consider my improvement over time.

Participant #1 from FGD No#3:

I doubt my knowledge in the discussion might not be assessed by those tutors who didn’t know the topic of discussion.

Feedback Related

Under this theme, study participants agreed that constructive feedback, as a part of formative assessment, was not regularly provided by the tutor. This made it difficult for students to recognize their actual gaps during PBL sessions. In line with this, a third-year medical student from one of the FGDs said the following:

Participant #2 from FGD No#2:

Tutors didn’t usually provide us regular feedback on our performance during the discussion. This makes it difficult to identify which areas of our performance to improve so that we can score higher.

Surprisingly, the tutors are sometimes not interested in giving feedback to the students about their performance during the PBL session. A 5th year student shared his experience as follows:

Participant #8 from FGD No#3:

I have encountered some tutors who left the session without saying a word about our performance.

Another critical issue raised by the participants was the consistency of the feedback provided and the final PBL assessment result. Although a few tutors attempted to provide constructive feedback, some of them failed to align their feedback with the students’ final summative assessment. The following excerpt from a third-year student clearly demonstrated a similar point of view.

Participant #5 from FGD No#2:

I remember in PBL of the nervous system module that the tutor was giving me appreciation during the majority of the sessions. Surprisingly, he gave me 12 out of 20 at the end of the module.

There was also inappropriate and discouraging feedback from tutors that undermined students’ motivation to improve their performance in the PBL sessions. A fourth-year student reported that:

Participant #4 from FGD No#3:

There were tutors who humiliated actively participating students, which made us shy and frustrated … let alone affecting our evaluation.

Educational Administration Related

During PBL orientation, FGD participants perceived a significant gap from the department side. Students did not receive adequate information about the entire assessment process. As a result, they had no idea how their evaluation was conducted. This concern was expressed in the following two excerpts from a second-year high-scoring student.

Participant #2 from FGD No#1:

Even though there was a brief orientation about the purpose of PBL, we didn’t obtain information on how we are going to be assessed.

Participant #6 from FGD No#3:

It is my first time to know that there is a checklist for assessing our performance of PBL.

Students also believed that their department and other educational administrators did not assist them in dealing with their concerns, particularly those concerning PBL assessment. As a result of this, a student concluded:

Participant #3 from FGD No#1:

Our department is not usually helpful while we appeal regarding our PBL assessment result.

Discussion

According to the current study, students perceived their PBL evaluation as unfair and as failing to take into account their progress over time. A similar concern was raised in the qualitative data, where students described tutors as highly biased in evaluating students during PBL sessions. Studies conducted at Debre Tabor University revealed significant dissatisfaction among students from various disciplines (medicine, midwifery, anaesthesia, nursing, and medical laboratory) with their PBL assessment.11,13 Similar studies in Ethiopia also reported that students were not happy with their PBL assessment.14,19 Likewise, a study done in Korea reported that medical students labeled unfair assessment as a drawback of their PBL experience.20 This could be attributed to tutors’ lack of experience in assessing students during PBL, because it is a new method. Tutors may use the traditional assessment method in this case, resulting in a misalignment between learning outcomes, teaching method, and assessment.4 Constructive alignment, by contrast, is a pillar of curriculum success in the sense that teaching and learning activities, as well as assessment tasks, must be in line with the intended learning outcomes.21

According to additional explanations obtained during the FGDs, tutors were reluctant to evaluate students on a regular and timely basis during each PBL session. This introduced recall bias and made the assessment overly subjective. However, a conflicting report from Spain showed a higher level of student satisfaction with PBL assessment.22 This might be due to the longer and more robust experience of PBL implementation at those schools.

According to our findings, students reported that their tutors did not provide constructive feedback. Tutors also rarely encouraged peer assessment and rarely facilitated self-assessment/self-reflection during PBL sessions. Wondie et al reported a similar finding, namely that tutors failed to provide and receive feedback during the PBL session.11 This suggests that tutors must promote focused and constructive feedback in order for students to identify their gaps and improve in the future, as the very essence of PBL is to encourage self-directed and lifelong learning. More importantly, because students are adults, the perceived relevance of their learning is an important consideration in the context of andragogy.23

Similarly, our FGDs revealed that tutors were unable to provide constructive and respectful feedback on a regular basis. This could be due to tutors’ limited ability to provide feedback, as many of them have come from traditional teaching methods and are struggling to adapt to PBL.24 Furthermore, due to high faculty turnover, tutors were not facilitating PBL sessions in a specific group for an extended period of time. Educators, particularly constructivists, nevertheless agree that feedback, self-assessment, and peer assessment serve as the foundation for subsequent professional development and self-directed learning.4,25

Despite the fact that students’ overall perception of tutors’ practice in facilitating self-assessment and peer assessment during a PBL session is negative, second-year medical students perceived their tutors to facilitate self-assessment and encourage peer assessment more than tutors of third, fourth, and fifth-year medical students. This could be due to the fact that inter-rater variation is common among PBL tutors.26

Although knowledge acquisition continues to play an important role in medical education, our research found that the majority of students disagreed that their PBL assessment result reflected their knowledge acquisition skill in bringing new and correct information to the session. Furthermore, the mean scores of items attributed to knowledge acquisition differed significantly between batches. This may be related to the tutors’ ability to objectively assess students’ knowledge acquisition skills during PBL sessions. A similar point was raised during the qualitative data collection, where students believed that assessment by tutors who had poor facilitation skills or were not content experts was unfair.27

The current study revealed that similar proportions of study participants agreed, remained neutral, or disagreed on items asking whether they perceived that their comprehension skill is taken into account during PBL assessment. This may be explained by the absence of adequate information on the assessment method, as a concordant perspective was reflected in the qualitative data, where students were not well aware of their assessment modality.

The current study revealed that a higher proportion of participating medical students perceived that their PBL assessment did not take into account their punctuality, contribution to the discussion, and ability to address psychosocial issues, which are components of communication and cooperation skills. This is concerning, given that PBL is considered far superior to traditional teaching methods in eliciting these generic skills. The possible causes of this perception include students’ own biased viewpoints, the quality of PBL cases, and tutors’ facilitation skills that fail to foster discussion.28

This study also found that students were dissatisfied with educational administrators, particularly those in their department. They claimed that they were not given adequate and timely information about how they would be evaluated during the PBL tutorial. As a result, they had a hazy view of the components of their evaluation. This departs from a main assumption of adult learning theories, namely that learners must know how they will be assessed, because assessment is critical in guiding them to identify and work on their own learning needs.29

Limitation of the Study

This study evaluated only the perception of medical students regarding their assessment during PBL tutorial sessions. Hence, it does not encompass the faculty’s perspective, observation of the assessment practice, or document review.

Conclusions

According to the findings of this study, students believed that they were not fairly assessed during their PBL tutorial. They also perceived that their PBL assessment did not consider their knowledge acquisition competency of bringing new and relevant information. Because of uncertainty about the evaluation process, a neutral perspective on the assessment of comprehension skills was observed. Punctuality, contribution to the discussion, and dealing with psychosocial issues were components of communication and cooperation skills that students did not believe their PBL assessment took into account. The students also perceived that the tutors’ ability to assess students, poor feedback experience, and limited information about the assessment mechanism influenced their PBL assessment. Therefore, it is strongly advised to review the evaluation process during PBL sessions by investigating and ensuring that the required competencies are assessed in a way that stimulates students’ learning experience. Furthermore, other assessment systems should be considered.

Acknowledgments

The authors would like to express their gratitude to the students who participated in the study and to the FGD conductors.

Disclosure

The authors report no conflicts of interest in this work.

References

1. Gewurtz RE, Coman L, Dhillon S, Jung B, Solomon P. Problem-based learning and theories of teaching and learning in health professional education. J Perspect Appl Acad Pract. 2016;4(1):59–70.

2. Organisation for Economic Co-operation and Development, Centre for Educational Research and Innovation (OECD/CERI). Assessment for learning: formative assessment. In:

3. Penuel W, Fishman BJ, Gallagher LP, Korbak C, Lopez-Prado B. Is alignment enough? Investigating the effects of state policies and professional development on science curriculum implementation. Sci Educ. 2009;93(4):656–677. doi:10.1002/sce.20321

4. Macdonald R. Assessment strategies for enquiry and problem-based learning. In: Handbook of Enquiry & Problem Based Learning. Sheffield, United Kingdom: CELT, NUI Galway; 2005:85–93.

5. Waters R. Assessment and evaluation in problem-based learning. In:

6. Duque LC, Weeks JR. Towards a model and methodology for assessing student learning outcomes and satisfaction. Qual Assur Educ. 2010;18(2):84–105. doi:10.1108/09684881011035321

7. Kotzé TG, Plessis PJ. Students as “co-producers” of education: a proposed model of student socialisation and participation at tertiary institutions. Qual Assur Educ. 2003;11(4):186–201. doi:10.1108/09684880310501377

8. Karpiak CP. Assessment of problem-based learning in the undergraduate statistics course. Teach Psychol. 2011;38(4):251–254. doi:10.1177/0098628311421322

9. Kolmos A, Holgaard JE. Alignment of PBL and assessment. In:

10. Abraham Y, Azaje A. The new innovative medical education system in Ethiopia: background and development. Ethiop J Health Dev. 2013;27(Specialissue1):36–40.

11. Wondie A, Yigzaw T, Worku S. Effectiveness and key success factors for implementation of problem-based learning in Debre Tabor University: a mixed methods study. Ethiop J Health Sci. 2020;30(5):803–816. doi:10.4314/ejhs.v30i5.21

12. Sim SM, Azila NMA, Lian LH, Tan CPL, Tan NH. A simple instrument for the assessment of student performance in problem-based learning tutorials. Ann Acad Med Singapore. 2006;35:634–641. doi:10.47102/annals-acadmedsg.V35N9p634

13. Kibret S, Teshome D, Fenta E, et al. Medical and health science students’ perception towards a problem-based learning method: a case of Debre Tabor University. Adv Med Educ Pract. 2021;12:781–786. doi:10.2147/AMEP.S316905

14. Semu A. Challanges in Problem Based Learning Implimentation in Debreberhan University, College of Medicine, Debrebirhan, Ethiopia. Addis Ababa University; 2016.

15. Allareddy V, Havens AM, Howell TH, Karimbux NY. Evaluation of a new assessment tool in problem-based learning tutorials in dental education. J Dent Educ. 2011;75(5):665–671. doi:10.1002/j.0022-0337.2011.75.5.tb05092.x

16. Valle R, Petra I, Martínez-González A, Rojas-Ramirez JA, Morales-Lopez S, Piña-Garza B. Assessment of student performance in problem-based learning tutorial sessions. Med Educ. 1999;33(11):818–822. doi:10.1046/j.1365-2923.1999.00526.x

17. Elizondo-montemayor LL. Formative and summative assessment of the problem- based learning tutorial session using a criterion- referenced system. J Int Assoc Med Sci. 2004;14:8–14.

18. Braun V, Clarke V. Using thematic analysis in psychology. Qual Res Psychol. 2006;3(2):77–101. doi:10.1191/1478088706qp063oa

19. Kidane HH, Roebertsen H, Van Der Vleuten CPM. Students’ perceptions towards self-directed learning in Ethiopian medical schools with new innovative curriculum: a mixed-method study. BMC Med Educ. 2020;20(7):1–10. doi:10.1186/s12909-019-1924-0

20. Kim MJ. Students’ satisfaction and perception of problem based learning evaluated by questionnaire. Kosin Med J. 2015;30(2):149–157. doi:10.7180/kmj.2015.30.2.149

21. Autoridad Nacional del Servicio Civil. Applying constructive alignment to outcomes-based teaching and learning. Angew Chem Int Ed. 2021;6(11):951–952.

22. Hernando G, Martín C, Ángel M, Ortega L, Villamor M, Professor A. Nursing students’ satisfaction in problem-based learning. Enferm Glob. 2014;13(3):97–103.

23. Milligan F. Beyond the rhetoric of problem-based learning: emancipatory limits and links with andragogy. Nurse Educ Today. 1999;19(7):548–555. doi:10.1054/nedt.1999.0368

24. Azer SA. Challenges facing PBL tutors: 12 tips for successful group facilitation. Med Teach. 2005;27(8):676–681. doi:10.1080/01421590500313001

25. de Graaff E, Kolmos A. Characteristics of problem-based learning. Int J Eng Educ. 2003;19(5):657–662.

26. Sa B, Ezenwaka C, Singh K, Vuma S, Majumder MAA. Tutor assessment of PBL process: does tutor variability affect objectivity and reliability? BMC Med Educ. 2019;19(1):1–8. doi:10.1186/s12909-019-1508-z

27. Aldayel AA, Alali AO, Altuwaim AA, et al. Problem-based learning: medical students’ perception toward their educational environment at al-imam mohammad ibn Saud Islamic university. Adv Med Educ Pract. 2019;10:95–104. doi:10.2147/AMEP.S189062

28. Des Marchais JE, Vu NV. Developing and evaluating the student assessment system in the preclinical problem-based curriculum at Sherbrooke. Acad Med. 1996;71(3):274–283. doi:10.1097/00001888-199603000-00021

29. Epstein RM, Cox M, Irby DM. Assessment in medical education. N Engl J Med. 2007;356(4):387–396. doi:10.1056/NEJMra054784

© 2023 The Author(s). This work is published and licensed by Dove Medical Press Limited. The full terms of this license are available at https://www.dovepress.com/terms.php and incorporate the Creative Commons Attribution - Non Commercial (unported, v3.0) License.

By accessing the work you hereby accept the Terms. Non-commercial uses of the work are permitted without any further permission from Dove Medical Press Limited, provided the work is properly attributed. For permission for commercial use of this work, please see paragraphs 4.2 and 5 of our Terms.
