Advances in Medical Education and Practice, Volume 8

Assessment test before the reporting phase of tutorial session in problem-based learning

Authors Bestetti RB, Couto LB, Restini CBA, Faria Jr M, Romão GS

Received 20 October 2016

Accepted for publication 17 January 2017

Published 27 February 2017 Volume 2017:8 Pages 181–187

DOI https://doi.org/10.2147/AMEP.S125247

Checked for plagiarism Yes

Review by Single anonymous peer review


Editor who approved publication: Dr Md Anwarul Azim Majumder

Reinaldo B Bestetti, Lucélio B Couto, Carolina BA Restini, Milton Faria Jr, Gustavo S Romão

Department of Medicine, UNAERP Medical School, University of Ribeirão Preto, Ribeirão Preto, Brazil

Purpose: In our context, problem-based learning is not used in the preuniversity environment. Consequently, students have a great deal of difficulty adapting to this method, particularly regarding self-study before the reporting phase of a tutorial session. Accordingly, the aim of this study was to assess if the application of an assessment test (multiple choice questions) before the reporting phase of a tutorial session would improve the academic achievement of students at the preclinical stage of our medical course.

Methods: A test consisting of five multiple choice questions, prepared by tutors of the module at hand and related to the problem-solving process of each tutorial session, was applied following the self-study phase and immediately before the reporting phase of all tutorial sessions. The questions were based on the previously established student learning goals. The assessment was applied to all modules from the fifth to the eighth semesters. The final scores achieved by students in the end-of-module tests were compared.

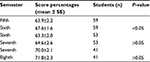

Results: Overall, the mean test score was 65.2±0.7% before and 68.0±0.7% after the introduction of an assessment test before the reporting phase (P<0.05). Students in the sixth semester scored 67.6±1.6% compared to 63.9±2.2% when they were in the fifth semester (P<0.05). Students in the seventh semester achieved a similar score to their sixth semester score (64.6±2.6% vs 63.3±2%, respectively, P>0.05). Students in the eighth semester scored 71.8±2.3% compared to 70±2% when they were in the seventh semester (P>0.05).

Conclusion: In our medical course, the application of an assessment test (a multiple choice test) before the reporting phase of the problem-based learning tutorial process increases the overall academic achievement of students, especially of those in the sixth semester in comparison with when they were in the fifth semester.

Keywords: problem-based learning, self-directed learning, medical education, undergraduates, assessment

Introduction

Problem-based learning (PBL) was developed at McMaster University in Canada in 1969; subsequently, PBL was introduced in Europe at the University of Limburg in Maastricht in the 1970s and spread throughout the world thereafter.1 However, currently, its use in medical courses is not standard.

In PBL, a problem is discussed by a small group of students, usually no more than 10, under the guidance of a tutor, who encourages the students to solve the problem by allowing them to activate previous knowledge about the topic under discussion in a process called the tutorial session. In addition, because of ample discussion among peers, the students have the opportunity to acquire new information about the topic at hand from their colleagues, which promotes retention in long-term memory. However, such knowledge is not usually enough to solve the problem, and the students perceive that they must learn more about the topic under discussion.

Thus, students identify gaps in their own knowledge and establish their own goals to be studied at home (the analyzing phase). They spend a defined period in self-directed study. Following this period, usually on another day, they meet again to finalize the problem-solving process (the reporting phase). Therefore, this is an active learning process in which the tutor does not try to transfer knowledge; rather, the tutor helps students to construct their own knowledge.2 Figure 1 illustrates and summarizes the PBL process.

Figure 1 Development of the tutorial session and the intervention moment. Note: Data adapted from Schmidt.2

PBL, therefore, is a didactic method that uses the following modern insights on learning: constructivism, self-directed learning, collaborative process, and contextual process. Self-directed learning means that the learners, under the guidance of a tutor during the tutorial process, are able to recognize gaps in their own existing knowledge, plan how to fill such gaps by selecting appropriate strategies, and monitor the pace at which their own learning process runs.3

Self-directed learning is partially reliant on both intrinsic motivation and extrinsic motivation. Intrinsic motivation means learning as a result of discovering something, particularly from coping with and solving problems.4 Self-directed learning skills are of utmost importance for the PBL process to be successful, permitting students to be aware of their learning needs and the use of appropriate information resources.3

Self-study, the manner in which self-directed learning works, ultimately allows the students to fill gaps in knowledge detected during the tutorial process and prepare them for the collaborative learning that occurs during the reporting phase of tutoring.2 Self-study is fundamental for physicians practicing medicine in our time and potentially makes the student a lifelong learner.

Assessment, defined as the “quality measures used to determine performance of an individual medical student”, is an integral component of a PBL medical curriculum and is believed to drive learning.5 If performed in the field of problem solving, assessment fulfills a requirement of a PBL medical curriculum.6 Several methods of written assessment have been used in the context of the PBL medical teaching approach. These consist of outcome-oriented instruments, which assess student performance, and process-oriented instruments, which assess and reflect on how the educational goals are attained by students. Multiple choice questions belong to the outcome-oriented group of assessment instruments.7

Written assessment has been used worldwide as a means to monitor student acquisition of the core curriculum knowledge. In particular, multiple choice questions have largely been used because they are extremely reliable, easy to use, easy to design, can test more than mere facts,8 and can reliably identify poor students.9 Furthermore, multiple choice tests have been shown to be useful in the development of problem-solving skills.7 More importantly, tests have been found to play an important role in positively influencing self-directed learning.10 Collectively, such findings suggest that assessment can be an extrinsic motivation to learn.

In our region, the PBL method is not used in preuniversity education, there are several deficiencies in elementary and high school education, and students are immature because they enter the medical course at an average age of 17 years. Therefore, students have some difficulty adapting to the medical course.11 Consequently, we have observed several dysfunctional groups during the tutorial process because of a low level of self-study before the reporting phase of a tutorial session. Based on the facts already outlined, and in an attempt to stimulate more self-study, we introduced an assessment test (a multiple choice test) before the reporting phase.

Accordingly, the purpose of this study was to determine if such a strategy would improve academic achievement, as measured by the mean of scores obtained at the end-of-module test of the three modules run each semester at the preclinical stage of the medical course. This article shows the results of such a strategy.

Methods

Setting and subjects

Details of our PBL curriculum have been described elsewhere.11 Briefly, to become a doctor, the student has to successfully complete 12 semesters. Each semester has ~60 students. From the first to the eighth semesters, there are three curricular units: tutoring, medical skills, and primary care. From the ninth to the 12th semesters, the student completes an internship, which, according to the Brazilian Guidelines for Medical Courses, is divided into pediatrics, internal medicine, surgery, obstetrics and gynecology, family medicine, and emergency medicine.

Regarding the tutoring curricular unit, three modules run each semester. Each module is composed of five problems, which are solved in the tutorial sessions, and lasts ~6 weeks. The first 2-hour tutorial session consists of problem analysis by students (usually 10 students) under the guidance of a subject-matter expert tutor; this is when students establish the learning goals for self-directed study. The second 2-hour tutorial session occurs on another day in the following week and consists of the reporting phase. Together, the two sessions follow the seven steps developed by Schmidt.2 In each module, two subject-matter expert tutors guide the learning process of 20 students.

From the first to the fourth semesters, students are guided by tutors to develop cognitive skills in solving problems related to morphophysiological aspects of medical knowledge. From the fifth to the eighth semesters, cognitive activities are developed to acquire skills for problem-solving clinical cases related to the most common diseases in our region. All tutors have been trained at our institution on how to run a tutorial session before guiding the tutorial process, as all of them have graduated in a discipline-based curriculum, thus needing to gain experience with the PBL method.12

All tutors are specialists in the topic at hand. Because the problems solved in the tutorial sessions from the first to the fourth semesters are not related to human diseases, tutors in those semesters are not necessarily medical doctors. However, as the problems discussed from the fifth to the eighth semesters are related to the most prevalent diseases occurring in our region, all tutors in those semesters are specialist physicians in the subject under discussion in the tutorial session.13 This means, for instance, that tutors for the cardiovascular system diseases module are clinical cardiologists.

The tutorial sessions run in parallel to real examinations, either at an infirmary service or at an outpatient service, of patients with similar problems to those discussed in the tutorial. In an attempt to increase the amount of time spent in self-directed learning, the planning group recommended the introduction of assessment tests (multiple choice questions) immediately before the reporting phase of the tutorial session. Such tests were included in the first semester of 2012.

The tutors who run the tutorial session design the assessment tests in line with the learning goals established by the students in their tutorial group. Each question has five alternatives with only one correct answer. The questions are of the key feature type, which is appropriate for assessing problem-solving skills; occasionally, factual recall questions (in which no problem-solving skill is required) are given to students. Since the tutors who guide the learning process prepare the tests, they are aware of the learning goals established by students. They prepare the tests together. All students of a given module undergo the same tests.

At the end of each module, the students undergo a final examination consisting of 12 multiple choice questions. This final examination is important because it determines whether the student passes or fails the module. Therefore, we analyzed the mean scores obtained from three end-of-module tests across two semesters. Figure 2 illustrates and summarizes the assessment process in our medical course. Three different groups of students across two semesters, first semester of 2012 and second semester of 2012, were included in the study. The introduction of the assessment test before the reporting phase of the PBL tutorial process was made between these semesters; therefore, the final scores achieved by the fifth semester students in the first semester of 2012 were compared with the final scores achieved by the same students in the second semester of 2012 as the sixth semester students, and so on for the second group (sixth semester in the first semester of 2012 and seventh semester in the second semester of 2012) and third group (seventh semester in the first semester of 2012 and eighth semester in the second semester of 2012). In this study, therefore, students were used as their own controls.

One hundred fifty-six student scores were initially screened for the study; three students from the eighth semester (the second semester of 2012) presented incomplete paired scores and were removed from the study. Therefore, 153 student scores are presented in the study.
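The pairing and exclusion step described above can be illustrated with a short sketch. This is only an assumption about how such pairing might be coded, not the authors' procedure: the function name `pair_scores`, the student identifiers, and the scores are all hypothetical placeholders.

```python
def pair_scores(first_term, second_term):
    """Pair each student's scores across two terms and drop incomplete pairs.

    Both arguments map a (hypothetical) student identifier to a score,
    or to None when the score is missing.
    Returns (pairs, excluded_ids).
    """
    pairs = {}
    excluded = []
    for student_id in sorted(set(first_term) | set(second_term)):
        before = first_term.get(student_id)
        after = second_term.get(student_id)
        if before is None or after is None:
            # Incomplete pair: the student cannot serve as his/her own control.
            excluded.append(student_id)
        else:
            pairs[student_id] = (before, after)
    return pairs, excluded


# Invented data: identifiers and scores are placeholders, not study data.
first_term = {"s01": 63.0, "s02": 70.5, "s03": 58.0}
second_term = {"s01": 67.0, "s02": 71.0}  # s03 has no second score

pairs, excluded = pair_scores(first_term, second_term)
```

Applied to the study, the same logic accounts for the 156 − 3 = 153 retained students, which matches the group sizes reported in the Results (59 + 53 + 41 = 153).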

Ethics statement

Anonymization was achieved as follows: the main investigator had access to the full data; he/she extracted the scores of students from a given semester and paired them with the scores of the same students when they were in the subsequent semester. In doing so, the students were anonymized and the data obtained were protected. The study was previously approved by the Ethics Committee of the National Ministry of Health/University of Ribeirão Preto (CAAE: 42348815.7.0000.5498). All students participated on a voluntary basis and provided written informed consent.

Statistical analysis

Data are presented as mean (M) ± standard error of the mean. The paired Student’s t-test was used to compare the end-of-module test scores between each group of students according to their semester. The unpaired Student’s t-test was used to compare the total scores for global analysis before and after the introduction of the assessment test before the reporting phase of the PBL tutorial process. The 95% confidence interval (CI95) was established for each variable. A P-value of <0.05 was considered statistically significant. For the statistical analysis, the software GraphPad Prism (5.0) was used.
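The paired comparison described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' analysis (which was run in GraphPad Prism): the scores are invented, and the function names `mean_sem` and `paired_t` are hypothetical. The sketch computes the mean ± standard error of the mean and the paired Student's t statistic from per-student differences.

```python
import math
import statistics


def mean_sem(values):
    """Return (mean, standard error of the mean) for a list of scores."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two values")
    mean = statistics.fmean(values)
    sem = statistics.stdev(values) / math.sqrt(n)  # sample SD / sqrt(n)
    return mean, sem


def paired_t(before, after):
    """Return (t statistic, degrees of freedom) for a paired Student's t-test."""
    if len(before) != len(after):
        raise ValueError("paired samples must have equal length")
    # The paired test works on each student's own change in score.
    diffs = [a - b for a, b in zip(after, before)]
    mean_diff, sem_diff = mean_sem(diffs)
    if sem_diff == 0:
        raise ValueError("all differences are identical; t is undefined")
    return mean_diff / sem_diff, len(diffs) - 1


# Invented end-of-module scores (%) for five hypothetical students.
before = [60.0, 65.0, 70.0, 55.0, 62.0]
after = [64.0, 66.0, 75.0, 58.0, 66.0]

t_stat, df = paired_t(before, after)
```

The P-value is then obtained by comparing the t statistic with Student's t distribution for the given degrees of freedom; with 4 degrees of freedom, the two-sided critical value at the 0.05 level is about 2.776.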

Results

Overall, the mean score was 65.2±0.7% before and 67.6±0.7% after the introduction of the assessment test before the reporting phase (P<0.05). Students in the sixth semester scored 67.6±1.6%, whereas in the fifth semester, they scored 63.9±2.2% (P<0.05, n=59). However, the students in the seventh semester maintained similar end-of-module test scores compared to their previous, ie, sixth, semester scores (64.6±2.6% vs 63.3±2.0%, P>0.05, n=53). Similarly, the end-of-module test scores achieved by students from the eighth semester were not significantly different compared to their previous, ie, seventh, semester scores (71.8±2.3% vs 70.0±2.1%, P>0.05, n=41). The data are summarized in Table 1.

Discussion

This study shows that an assessment test before the reporting phase of the PBL tutorial process is associated with improved student achievement, by the same group of students, in the sixth semester compared with the fifth semester of the medical course. However, the same improvement was not observed in students in the other two groups, ie, students in the seventh semester compared with the sixth semester and students in the eighth semester compared with the seventh semester. The reasons for this are not apparent from our study.

Learning is a cumulative process in a PBL context. This means that problem analysis, self-study, and the reporting phase of the tutorial session are interdependent in the learning process; each step prepares students for learning in the next step. The problem analysis phase is important to activate previous knowledge,3 which, if provided in a contextual manner, facilitates memory retention.14 Furthermore, the problem analysis phase should particularly activate previous metacognitive knowledge, ie, the ability to use effective strategies within a given domain, because it facilitates self-learning.15

Self-study is perhaps the most important part of the tutorial process in terms of cognitive achievement. In a study of 218 students in the second year, Yew et al16 observed that the number of concepts recalled following self-study and the reporting phase (collaborative learning) was higher than the number of concepts known at the initial phase. Importantly, the number of new concepts that emerged following self-study was higher than that found following the reporting phase. Self-study is also important for the learning process because it improves the acquisition of problem-solving skills. In clinical practice, students will face clinical problems similar to those seen in the tutorial process. Therefore, they will recall how to solve the clinical problem via their context-dependent memory,17 ie, the contextual process (contextualized learning). Thus, self-study is important in all phases of learning in the tutorial process.

If, on the one hand, self-study is important for the learning process, on the other hand, its absence impairs knowledge acquisition. Considering the collaborative nature of the learning process in small groups of students, the preparation achieved through self-study can affect the depth of knowledge within the student group. In this respect, Dolmans and Schmidt18 have shown that an unprepared student negatively interferes with the learning of the group by breaking up the process of mutual dependence observed during the discussion of the study subject. Therefore, requiring each member of the group to accept some kind of conceptual assessment can enrich the learning of the whole group.

Taking into account that all the topics related to a module must be studied by all students, it is necessary for all students in the group to achieve the same learning goals for self-study. In this regard, the introduction of assessment tests containing questions related to the learning goals, previously defined in a given module, ensures that the content of the module would have been covered. Furthermore, since these tests are designed by tutors, who guided the tutorial session, they are directly related to learning goals defined on a weekly basis in the tutorial sessions. These tests allow, therefore, the monitoring of students’ cognitive acquisition in each unit.

Student assessment enriches the teaching–learning process in diverse ways, but it must be in line with curriculum objectives.19 For the student, it provides feedback about the level of the acquired learning, new knowledge by contact with the correct information, and motivation to reach the learning goals for the subject. For the faculty, it allows evaluation of the effectiveness of teaching activity and indicates the efficacy of application of curricular aims.6,20

In this study, we used assessment testing before the reporting phase of the tutorial session in an attempt to stimulate student self-study based on the learning goals established by themselves. We expected that these assessment tests could ultimately improve the end-of-module test outcomes for several reasons. 1) Assessment testing following a learning experience (eg, self-study) improves performance in a subsequent test.21 2) Assessment tests were based on learning goals established by students, thereby facilitating retention of the material tested.21 3) As each module has five problems to be solved, students would have the opportunity to undergo several assessment tests, practice that would facilitate achieving a high score in the end-of-module test.21

It is difficult, therefore, to account for why only the students in the sixth semester benefited from testing before the reporting phase. These students are introduced to the pathological and clinical aspects of the most prevalent diseases that affect the population in our region. It is conceivable that, in this regard, they are not knowledgeable enough to solve problems related to human diseases. In other words, they do not have, at this stage, the necessary cognitive skills to self-regulate and to reflect on their learning process.22 Since the tutors responsible for guiding their tutorial session are subject-matter experts, it is possible that students in the fifth semester rely on tutor expertise to guide their learning process.23 By contrast, our other students, in the seventh and eighth semesters, could be more experienced learners in the clinical setting. This could make them less dependent on tutors for success in cognitive activities and academic achievement. In addition, they might have more skills to plan, monitor, and reflect on the learning process, thus becoming more expert learners. Hence, they probably have more intrinsic motivation to learn at this stage of the medical course.

Another potential explanation for the results of this study is related to the assessment test format. We used multiple choice questions. We did not control the type of multiple choice question, meaning that such tests might have been used for factual recall rather than solving problems (the so-called key feature test).8 Since student performance is better with the latter than with the former,21 the tests used would not influence the outcomes of more experienced students, who rely on well-functioning tutorial groups for cognitive achievement. Furthermore, we did not account for students guessing the answers to multiple choice questions, meaning that the examination grade may not accurately reflect the students’ knowledge. If the possibility of correctly guessing multiple choice answers had been taken into account, perhaps we would be able to identify poorly performing students from the sixth to eighth semesters,9 making the test discriminative.
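The text does not state how guessing would have been taken into account. One conventional option, shown here purely as an assumption and not as the authors' method, is formula scoring: the number of correct answers minus the number of wrong answers divided by the number of alternatives minus one, with omitted items neither rewarded nor penalized. The function name `corrected_score` is hypothetical; the default of five alternatives follows the test format described in the Methods.

```python
def corrected_score(right, wrong, n_options=5):
    """Formula-scoring correction for guessing: R - W / (k - 1).

    right     -- number of correctly answered questions
    wrong     -- number of incorrectly answered questions (omissions excluded)
    n_options -- alternatives per question (five in the tests described here)
    """
    if n_options < 2:
        raise ValueError("a multiple choice question needs at least two options")
    if right < 0 or wrong < 0:
        raise ValueError("counts must be non-negative")
    return right - wrong / (n_options - 1)


# Hypothetical student on a 12-question test: 8 right, 4 wrong, none omitted.
score = corrected_score(right=8, wrong=4)
```

With five alternatives, each wrong answer costs a quarter of a point, so a student who guesses blindly on every item has an expected corrected score of zero.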

This study has limitations. 1) It is retrospective. Therefore, the data obtained in this study should be interpreted with caution. 2) We used only multiple choice questions in the assessment testing. Moreover, such tests were not always of “key feature” format, which might have precluded the assessment of clinical problem-solving skills. It would be interesting to use other types of testing to stimulate self-directed study.24 3) The difference between the results obtained by the students when they were in the sixth semester and the results when they were in the fifth semester was small. Nonetheless, the statistical analysis of this controlled study indicated that the difference is unlikely to have occurred by chance alone. Thus, our data suggest that the introduction of assessment tests before the reporting phase of the PBL tutorial session might have a positive impact on academic achievement. A strength of the study is its within-subjects design, in which each student was used as his/her own control, which reduces the influence of differences between students on the results.

Conclusion

In summary, this study showed that the introduction of multiple choice assessment tests before the reporting phase of the tutorial process increases the overall academic achievement in the end-of-module tests, particularly for students in the sixth semester when compared to their fifth semester academic achievement. However, this strategy did not improve the academic achievement of students in the seventh and eighth semesters.

Acknowledgment

The authors are indebted to Kevin Walsh for correcting the English text.

Disclosure

The authors alone are responsible for the content and writing of the article. The authors declare no financial competing interests and report no conflicts of interest in this work.

References

1. Hillen H, Scherpbier A, Wijnen W. History of problem-based learning in medical education. In: Van Berkel H, Scherpbier A, Hillen H, van der Vleuten C, editors. Lessons from Problem-Based Learning. Auckland, Cape Town, Dar es Salaam, Hong Kong, Karachi, Kuala Lumpur, Madrid, Melbourne, Mexico City, Nairobi, New Delhi, Shanghai, Taipei, Toronto: Oxford University Press; 2010:5–11.

2. Schmidt HG. Problem-based learning: rationale and description. Med Educ. 1983;17:11–16.

3. Dolmans DHJ, De Grave W, Wolfhagen IHAP, Van der Vleuten CPM. Problem-based learning: future challenges for educational practice and research. Med Educ. 2005;39(7):732–741.

4. Bruner JS. The act of discovery. Harvard Ed Rev. 1961;31:21–32.

5. Wilkes M, Bligh J. Evaluating educational interventions. Br Med J. 1999;318:1269–1272.

6. Barrows HS. A taxonomy of problem-based learning methods. Med Educ. 1986;20(6):481–486.

7. Nendaz MR, Tekian A. Assessment in problem-based learning in medical schools: a literature review. Teach Learn Med. 1999;11:232–243.

8. Schuwirth LWT, van der Vleuten CPM. Written assessment. Br Med J. 2003;326:643–645.

9. Eijsvogels TMH, van den Brand TL, Hopman MTE. Multiple choice questions are superior to extended matching questions to identify medicine and biomedical sciences students who perform poorly. Perspect Med Educ. 2013;2(5–6):252–263.

10. Lee M, Mann KV, Frank BW. What drives self-directed learning in a hybrid PBL curriculum. Adv Health Sci Educ. 2010;15:425–437.

11. Bestetti RB, Couto LB, Romão GS, Araújo GT, Restini CBA. Contextual considerations in implementing problem-based learning approaches in a Brazilian medical curriculum: the UNAERP experience. Med Educ Online. 2014;19:24366.

12. Couto LB, Romão GS, Bestetti RB. Good teacher, good tutor. Adv Med Educ Pract. 2016;7:377–380.

13. Couto LB, Bestetti RB, Restini CBA, Faria-Jr M, Romão GS. Brazilian medical students’ perceptions of expert versus non-expert facilitators in a (non) problem-based learning (PBL) environment. Med Educ Online. 2015;20:26893.

14. Bransford JD, Johnson MK. Contextual prerequisites for understanding: some investigations of comprehension and recall. J Verbal Learn Verbal Behav. 1972;11:717–726.

15. Boekaerts M. Self-regulated learning: a new concept embraced by researchers, policy makers, educators, teachers, and students. Learn Instr. 1997;7:161–186.

16. Yew EHJ, Chng E, Schmidt HG. Is learning in problem-based learning cumulative? Adv Health Sci Educ Theory Pract. 2011;16(4):449–464.

17. Godden DR, Baddeley AD. Context-dependent memory in two natural environments: on land and underwater. Br J Psychol. 1975;66:325–331.

18. Dolmans DH, Schmidt HG. What do we know about cognitive and motivational effects of small group tutorials in problem-based learning? Adv Health Sci Educ Theory Pract. 2006;11(4):321–336.

19. Al Kadri HMF, Al-Moamary M, van der Vleuten C. Students and teachers’ perceptions of clinical assessment program: a qualitative study in a PBL curriculum. BMC Res Notes. 2009;2:263–269.

20. Epstein RM. Assessment in medical education. N Engl J Med. 2007;356:387–396.

21. Frederiksen N. The real test bias. The influence of testing on teaching and learning. Am Psychol. 1984;39:193–202.

22. Ertmer PA, Newby TJ. The expert learner: strategic, self-regulated, and reflective. Instr Sci. 1996;24:1–24.

23. Schmidt HG, der Arend AV, Moust JHC, Kokk I, Boon L. Influence of tutors subject matter expertise on student effort and achievement in problem-based learning. Acad Med. 1993;68:784–791.

24. Scouller K. The influence of assessment method on students’ learning approaches: multiple choice question examination versus assignment essay. Higher Educ. 1998;35:453–472.

© 2017 The Author(s). This work is published and licensed by Dove Medical Press Limited. The full terms of this license are available at https://www.dovepress.com/terms.php and incorporate the Creative Commons Attribution - Non Commercial (unported, v3.0) License.

By accessing the work you hereby accept the Terms. Non-commercial uses of the work are permitted without any further permission from Dove Medical Press Limited, provided the work is properly attributed. For permission for commercial use of this work, please see paragraphs 4.2 and 5 of our Terms.
