Advances in Medical Education and Practice, Volume 14

“We Need More Practice”: Evaluating the Role of Virtual Mock OSCES in the Undergraduate Programme During the COVID Pandemic

Authors Lim GHT, Gera RD, Hany Kamel F, Thirupathirajan VAR, Albani S, Chakrabarti R

Received 5 November 2022

Accepted for publication 7 February 2023

Published 28 February 2023 Volume 2023:14 Pages 157–166

DOI https://doi.org/10.2147/AMEP.S381139

Checked for plagiarism Yes

Review by Single anonymous peer review

Peer reviewer comments 3

Editor who approved publication: Dr Md Anwarul Azim Majumder

Guan Hui Tricia Lim, Ritika Devendra Gera, Fady Hany Kamel, Vikram Ajit Rajan Thirupathirajan, Somar Albani, Rima Chakrabarti

UCL Medical School, University College London, London, UK

Correspondence: Rima Chakrabarti, Email [email protected]

Background: Feedback collated at University College London Medical School (UCLMS) during the COVID pandemic identified that many students felt unprepared for their summative Objective Structured Clinical Examinations (OSCEs) despite attending mock face-to-face OSCEs. The aim of this study was to explore the role of virtual mock OSCEs in improving students’ sense of preparedness and confidence levels for their summative OSCEs.

Methods: All Year 5 students (n=354) were eligible to participate in the virtual mock OSCEs and were sent a pre- and post-survey for completion. Hosted on Zoom in June 2021, each circuit comprised six stations, assessing history taking and communication skills only, in Care of the Older Person, Dermatology, Gynaecology, Paediatrics, Psychiatry and Urology.

Results: Two hundred and sixty-six of the 354 Year 5 students participated in the virtual mock OSCEs, with 84 (32%) students completing both surveys. While a statistically significant increase in preparedness was demonstrated, there was no difference in overall confidence levels. In contrast, when analysed by specialty, a statistically significant increase in confidence levels was seen in all specialties barring Psychiatry. Despite half of the participants highlighting that the format did not sufficiently represent the summative OSCEs, all expressed interest in having virtual mock OSCEs incorporated into the undergraduate programme.

Conclusion: The findings of this study suggest that virtual mock OSCEs have a role in preparing medical students for their summative exams. While this was not reflected in their overall confidence levels, this may be due to a lack of clinical exposure and higher anxiety levels among this cohort of students. Although virtual OSCEs cannot replicate the “in-person” experience, considering the logistical advantages, further research is required on how these sessions can be developed, to support the traditional format of face-to-face mock OSCEs within the undergraduate programme.

Keywords: virtual mock OSCEs, undergraduate medical education, history taking & communication skills

A Letter to the Editor has been published for this article.

A Response to Letter by Miss Kelly has been published for this article.

Plain Language Summary

Objective Structured Clinical Examinations (OSCEs) form a core component of the assessment process for medical students. While most medical schools offer students the chance to participate in face-to-face mock OSCEs, these require large venues and significant faculty input for their delivery. Such opportunities were limited during the COVID pandemic, and with many students highlighting how unprepared they felt, the aim of this study was to look at the role of virtual mock OSCEs in preparing Year 5 students at University College London Medical School (UCLMS) for their final clinical examinations. Each session assessed students on their history taking and communication skills across six specialties: Care of the Older Person, Dermatology, Gynaecology, Paediatrics, Psychiatry, and Urology. The key findings of the study were that while students felt more prepared following the virtual mock OSCEs, a statistically significant improvement in overall confidence levels was not seen. Although students identified the main limitation of the virtual sessions as their inability to replicate the “in-person” experience, they all expressed interest in having these sessions incorporated into the undergraduate programme. However, more research is needed to explore how these sessions can be developed to incorporate clinical skills and, in particular, to support the traditional face-to-face format of mock OSCEs.

Background

Introduced in the 1970s, OSCEs (Objective Structured Clinical Examinations) form an integral part of competency assessments within undergraduate and postgraduate medical education.1 Based on Miller’s conceptual framework,2 OSCEs are designed to test candidates’ history-taking, communication, and clinical skills as they rotate through a series of structured stations.3,4 However, despite their widespread incorporation in the undergraduate medical curriculum,5,6 they remain an anxiety-inducing experience for many students.7,8 While undertaking mock OSCEs has not been shown to reduce anxiety levels, their role in enabling preparedness among medical students has been associated with improved performance, leading to their incorporation in the undergraduate curriculum.9,10

Impact of Covid-19 on OSCEs and OSCE Preparation

For several institutions, though, the provision of mock OSCEs was significantly disrupted as a result of the Covid-19 pandemic. The difficulty medical students faced in developing their skills was further compounded by the subsequent halt to student assistantships and reduced patient interaction during this period. While studies exploring the impact of Covid-19 on undergraduate training are currently limited, a national survey undertaken in 2020 found that 38% of final-year UK-based medical students had their summative OSCEs cancelled, with 60% reporting feeling less prepared and 23% less confident in starting their role as a junior doctor.11

University College London Medical School (UCLMS)

The undergraduate (MBBS) curriculum at UCLMS consists of a six-year programme divided between the pre-clinical years (Years 1 and 2), an integrated Bachelor of Science in Year 3, followed by the clinical years (Years 4–6). All Year 4–6 UCLMS students have OSCE examinations included as part of their competency assessments, with the exception of 2019–2020, when only final-year (Year 6) students underwent their summative OSCE assessments. Consisting of 18 sessions, these were hosted virtually to circumvent the imposed COVID restrictions and included the assessment of both practical and communication skills.12 All summative OSCEs at UCLMS returned to their face-to-face format for 2020–2021.

Despite UCLMS reinstating mock face-to-face OSCEs in 2020–2021 for all Year 4–6 students, feedback from an online survey conducted by the Royal Free, University College, and Middlesex Medical Students’ Association (RUMS) suggested that many of these students felt unprepared for their summative OSCEs. However, this survey was unique to 2020–2021 and so was limited in identifying if this lack of preparedness was different to previous years. It was clear that there was a high level of anxiety, especially among Year 5 students and this appeared to be further affected by ongoing Covid restrictions limiting clinical exposure and subsequent patient interaction on the wards.

While virtually hosting summative OSCEs has been described by several institutions,13–15 reports within the literature of online platforms being used to conduct mock OSCEs remain limited. Therefore, a study was undertaken in June 2021 to explore the role of virtual mock OSCEs in facilitating OSCE preparation and to identify if, and how, they could support student learning alongside the more traditional format of face-to-face mock OSCEs.

The aims of the study, conducted at UCLMS, were:

- to assess if virtual mock OSCEs improved students’ sense of preparedness and confidence levels for undertaking their summative OSCEs.

- to understand the strengths and limitations of virtual mock OSCEs compared to the traditional face-to-face format of conducting mock OSCEs.

Supervised by an Associate Lecturer, this student-led study was undertaken by five UCLMS students, three of whom were also affiliated with RUMS and two with the UCL Medical and Surgical Society at UCLMS.

Methods

This research study was undertaken by five UCLMS students, four of whom were in Year 5 and one in their Integrated Bachelor of Science (BSc) year. Overseen by a Clinical Associate Lecturer at UCLMS, they were responsible for gaining ethical approval, recruitment, the OSCE design and set up, data collection and analysis. Ethical approval for this study was gained from the UCL Research Ethics Committee (Project ID: 20567/001). Consent for participation was gained from all participants, and all responses were anonymised. The research study was awarded a £500 grant from the Student Quality Improvement and Development fund (SQUID) at UCLMS. This was evenly distributed among the students/doctors acting as OSCE examiners for the virtual mock sessions.

Study Sample

All Year 5 students (n=354) were eligible for participation in the virtual mock OSCEs. While OSCEs are undertaken by all Year 4–6 UCLMS students, the 2020–2021 Year 5 cohort was specifically targeted because their summative OSCEs had been cancelled the previous academic year. Information regarding the virtual mock OSCEs and the details of the research study were disseminated by email (Appendix A-Participant Information Sheet). All students were required to complete an online consent form prior to completing both the pre- and post-survey (Appendix B-Consent Form). Any student declining consent was still able to participate in the virtual mock OSCEs, but their data were not included in the data collection or analysis.

Study Design

OSCE Station Design

Currently, Year 5 clinical placements at UCLMS cover 11 specialties ranging in duration from 1 to 5 weeks. All of these specialties are assessed in the summative examinations over 2 days, with day 1 consisting of eight “five-minute” stations and day 2 of four “ten-minute” stations assessing clinical and communication skills. Due to the narrow window for hosting the virtual sessions and anticipated difficulties in recruiting examiners, the three specialty placements lasting less than a week, namely Ophthalmology, Breast surgery, and HIV and Genitourinary Medicine (GUM), were excluded from assessment (Figure 1). It was also recognised that assessing clinical skills would be difficult using an online platform, and while several institutions have highlighted how they have overcome this by sending “clinical kits” to each student,13–15 this was beyond the scope of the proposed research study. Therefore, only communication and/or history-taking skills were assessed in the virtual mock sessions. All stations followed the same 5-minute format and template as a “communication skills station” in the summative examinations.

Figure 1 Year 5 clinical placements and summary of OSCE stations included in virtual mock OSCEs.

An invitation to write a 5-minute OSCE station based on one of the eight specialties was sent by email to all Year 6 UCLMS students who had recently passed their final examinations and to UCLMS alumni working as foundation year doctors. To ensure content validity, all stations were subsequently checked by a Clinical Teaching Fellow in the relevant specialty before being approved by the Assessment Unit at UCLMS. While the objective had been to include a station for each of the eight specialties being assessed, the oncology station was not deemed to be of a sufficient standard by the Assessment Unit and was excluded. With all stations being based in a General Practice setting, and to allow sufficient time to provide candidate feedback, General Practice was not assessed as an individual station. Altogether, six stations, one for each remaining specialty (Urology, Paediatrics, Gynaecology, Dermatology, Care of the Older Person (COOP) and Psychiatry), were included in each circuit for assessment in the virtual mock OSCEs (Figure 1).

With the benefits of near-peer facilitators well recognised in the literature,16,17 an invitation to be an examiner for the virtual mock OSCEs was sent by email to all UCLMS Clinical Teaching Fellows, Year 6 students and UCLMS alumni presently working as doctors. All examiners were sent an information sheet outlining the purpose of the virtual mock OSCEs and were aware that they would be acting as both the examiner and role-player in the stations. All examiners were provided with a certificate of attendance and were awarded a £15 Amazon voucher.

OSCE Set-Up

The virtual mock sessions were held over 2 days on the weekend of the 12th and 13th of June 2021. Hosted on the online platform Zoom, each day was split into a morning and an afternoon session, with four circuits per session, each lasting 60 min (Figure 2). Three students were assigned to a breakout room where they completed all six stations, through which the examiners rotated. By having three students per station, the aim was that each student would rotate between the role of main candidate and observer. This element of peer-debriefing has been well recognised within the literature for aiding development and was incorporated to further augment the examiner feedback given to each student.18

Figure 2 OSCE set-up.

Each station lasted 10 min: 5 min for the completion of the task and the remainder to provide feedback. In anticipation of delays, a 15-minute interval was built in between consecutive circuits. To ensure an even distribution of students across both days, all students were given the option to select their top three preferred circuit dates and times. Students were assigned to the appropriate circuit depending on their preference and randomly allocated to breakout rooms. Due to last-minute examiner cancellations on day 2, the third student acted as the examiner in the Dermatology station in the morning session, and in the COOP and Paediatrics stations in the afternoon session.
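The rotation described above can be sketched as a small timetable generator. This is only an illustration under stated assumptions: the article does not report the number of breakout rooms per circuit, the order in which examiners rotated, or the start times, so the six rooms, the cyclic rotation and the 09:00 start below are hypothetical.

```python
from datetime import datetime, timedelta

# Stations and timings taken from the text; everything else is assumed.
STATIONS = ["Urology", "Paediatrics", "Gynaecology",
            "Dermatology", "COOP", "Psychiatry"]
STATION_MINUTES = 10      # 5 min task + 5 min feedback
STUDENTS_PER_ROOM = 3     # one candidate, two observers per station


def circuit_timetable(start, n_rooms=6):
    """Return (slot_start, room, station, candidate) rows for one circuit.

    Assumption: examiners move through the rooms cyclically, so room r
    hosts station (r + slot) mod 6, and the candidate role cycles through
    the three students in each room.
    """
    rows = []
    for slot in range(len(STATIONS)):
        slot_start = start + timedelta(minutes=slot * STATION_MINUTES)
        for room in range(n_rooms):
            station = STATIONS[(room + slot) % len(STATIONS)]
            candidate = slot % STUDENTS_PER_ROOM  # index of the main candidate
            rows.append((slot_start, room, station, candidate))
    return rows


if __name__ == "__main__":
    # Hypothetical start time for a morning circuit on day 1.
    for when, room, station, candidate in circuit_timetable(datetime(2021, 6, 12, 9, 0)):
        print(f"{when:%H:%M} room {room + 1}: {station} (candidate: student {candidate + 1})")
```

Under these assumptions each room meets every station once within the 60-minute circuit, and each student acts as the main candidate for two of the six stations and as an observer for the other four.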

Data Collection

All Year 5 students attending the virtual mock OSCEs were invited by email to complete a pre- and post-survey hosted on Google Forms (Appendix C- Pre Mock OSCE Survey & Appendix D- Post Mock OSCE Survey). Submitted surveys were assigned a unique code linking the pre- and post-survey responses to enable individual comparison. This also ensured that duplicate entries were avoided and that all responses were anonymised. Quantitative data were principally collected using a 5-point Likert scale, with two common domains, preparedness and confidence, covered in both surveys. Additional questions in each survey included:

- Pre mock OSCE survey

  - Reasons for attendance

  - Experience of previous mock OSCEs

- Post mock OSCE survey

  - How the virtual mock OSCE experience compared with the face-to-face mock OSCE

  - Feedback on the mock OSCE session with a free-text response on areas of improvement
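Linking the two surveys by a unique code, as described above, can be sketched as follows. The row layout and the rule of keeping only the first submission per code are assumptions made for illustration; the article does not describe how the linkage was implemented.

```python
def pair_responses(pre_rows, post_rows):
    """Pair pre- and post-survey Likert scores by respondent code.

    Each row is a (code, score) tuple. Assumption: only the first
    submission per code is kept, and codes that appear in just one of
    the two surveys are dropped from the paired comparison.
    """
    pre, post = {}, {}
    for code, score in pre_rows:
        pre.setdefault(code, score)    # ignore later duplicates
    for code, score in post_rows:
        post.setdefault(code, score)
    return {code: (pre[code], post[code]) for code in pre if code in post}


if __name__ == "__main__":
    # Hypothetical codes and 5-point Likert scores, not study data.
    pre = [("A1", 2), ("B2", 3), ("A1", 5), ("C3", 4)]
    post = [("A1", 4), ("C3", 4), ("D4", 1)]
    print(pair_responses(pre, post))
```

Only respondents present in both surveys contribute a pair, which mirrors the restriction of the analysis to students who completed both the pre- and post-survey.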

Statistical Analysis

Statistical analysis was conducted using GraphPad Prism 8. Two-tailed paired t-tests were used to compare preparedness and confidence levels between the pre- and post-OSCE survey responses, and a p value of ≤0.05 was considered statistically significant. It was estimated that 75% of the Year 5 cohort would participate in the study, and it was determined that a 45% response rate, equating to 160 responses, would be required to achieve a confidence level of 95% with a ±5% margin of error. Given the wide variation in survey response rates reported in the literature19 and, especially, with this cohort of students so close to their examinations, the authors anticipated that a response rate of 20% (78 responses) would be more realistic, generating a confidence level of 75% with a ±5% margin of error.
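As a rough illustration of the two calculations mentioned above, the sketch below computes a paired t statistic and a survey sample size using only the Python standard library. It is not the authors' workflow (they used GraphPad Prism 8), the Likert scores are hypothetical, and the sample-size formula (Cochran's formula with a finite population correction) is an assumption, since the article does not state which formula was used. Its output therefore need not reproduce the reported figures.

```python
import math
from statistics import NormalDist, mean, stdev


def paired_t(pre, post):
    """Return (t statistic, degrees of freedom) for paired observations.

    The two-tailed p value would come from a t distribution with these
    degrees of freedom (for example via scipy.stats.ttest_rel, which is
    not used here). Fails if every difference is identical (zero variance).
    """
    if len(pre) != len(post):
        raise ValueError("pre and post must have the same length")
    diffs = [b - a for a, b in zip(pre, post)]
    n = len(diffs)
    sem = stdev(diffs) / math.sqrt(n)   # standard error of the mean difference
    return mean(diffs) / sem, n - 1


def required_sample(population, confidence=0.95, margin=0.05, p=0.5):
    """Cochran's sample size with finite population correction (assumed method)."""
    z = NormalDist().inv_cdf(0.5 + confidence / 2)   # two-sided critical value
    n0 = z ** 2 * p * (1 - p) / margin ** 2          # infinite-population size
    return math.ceil(n0 / (1 + (n0 - 1) / population))


if __name__ == "__main__":
    # Hypothetical 5-point Likert scores for five paired respondents.
    t, df = paired_t([2, 3, 3, 2, 4], [3, 4, 3, 4, 4])
    print(f"t = {t:.3f}, df = {df}")
    # 266 is roughly 75% of the 354 eligible students.
    print(required_sample(266))
```

For a population of 266 at a 95% confidence level and a ±5% margin of error, this formula gives 158, which is in the region of the 160 responses cited above.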

Findings

Two hundred and sixty-six of the 354 Year 5 UCLMS students participated in the virtual mock OSCE, of whom 84 (84/266, 32%) completed both the pre- and post-survey. This met the anticipated sample size, corresponding to a confidence level of 75%±5%.

Of the 84 participants in the study, 58 (58/84, 69%) had prior experience of completing a virtual mock OSCE. All students cited “wanting more practice” as their main reason for participation in the virtual mock OSCEs. This was also the key expectation for 45% (38/84) of participants attending the sessions, followed by wanting to gain more feedback and advice from examiners on their performance (Figure 3 - Expectations of a virtual mock OSCE).

Figure 3 Expectations from a virtual mock OSCE.

Preparedness and Confidence Following Virtual Mock OSCEs

Following the virtual mock OSCE session, a statistically significant increase in preparedness levels for the summative OSCEs was demonstrated among participants (Figure 4). In contrast, no statistically significant increase in overall confidence levels was demonstrated (Figure 4).

Figure 4 Comparison of preparedness and overall confidence levels pre- and post-virtual mock OSCEs. Data is shown as Mean ± SEM (n=84). ****p≤0.0001.

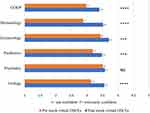

Confidence levels were also analysed by specialty, and a statistically significant increase was demonstrated in five out of the six specialties: COOP, Dermatology, Gynaecology, Paediatrics and Urology (Figure 5). No statistically significant difference in confidence levels was demonstrated in Psychiatry.

Figure 5 Comparison of confidence levels by specialty pre- and post-virtual mock OSCEs. Data is shown as Mean ± SEM (n=84). ***p≤0.001, ****p≤0.0001. Abbreviation: NS, not significant.

Virtual versus Face-to-Face Mock OSCEs

Overall, 90% (80/84) of participants enjoyed the experience offered by the virtual mock OSCEs, with 87% (73/84) highlighting how it had helped them prepare for the summative OSCEs. In addition, 56% (47/84) of participants felt that virtual mock OSCEs would be helpful in preparing them for foundation year training.

However, only 50% (42/84) of participants felt that the virtual mock OSCE format was sufficiently representative of the summative OSCEs, with 38% (32/84) highlighting that they felt that the face-to-face mock OSCEs were more useful than the virtual format, while 23% felt that they were both equally useful.

Despite limited feedback from the free-text responses, the main theme related to the quality of the examiner feedback, with participants citing significant variations. Despite adequate time allocated, participants felt that there was a tendency among examiners to focus on the content of the station rather than providing feedback on the student’s performance. There was no specific feedback in relation to students having to act as examiners themselves.

Discussion

In this study, it was clear that despite the virtual mock OSCEs not sufficiently representing the summative format, the majority of participants welcomed the opportunity to practise and felt that the virtual sessions had helped them prepare for the summative OSCEs. Interestingly, while the virtual sessions were associated with an increase in participants’ preparedness levels, this was not reflected in their overall confidence levels for undertaking the summative OSCEs. This was in contrast to the findings for the individual specialties assessed in the virtual sessions, where a statistically significant increase in participants’ confidence levels was demonstrated in five out of the six specialties.

Although the findings from this study demonstrate similar improvements in preparedness levels compared to face-to-face mock OSCEs,20–22 the relationship with medical students’ confidence levels appears less clear. It also has to be acknowledged that this study did not compare students’ self-reported preparedness and confidence levels before and after the virtual sessions with the outcome of the summative OSCEs. While it was appreciated that the implementation of a new learning opportunity within the undergraduate programme would require “evidence” of its usefulness, especially in terms of improving exam results, obtaining such data would have been difficult due to participants remaining anonymous. However, studies looking at performance and pass marks in the summative OSCE following participation in face-to-face mock OSCEs have demonstrated conflicting results.23–25 Indeed, it has been suggested that student performance typically improves for identical stations assessed in both the formative and summative OSCEs and in specialty placements that were completed closer to the summative OSCEs.24

Specific limitations in regard to the OSCE set-up in this study also have to be acknowledged. First, only history-taking and communication skills were assessed in the virtual sessions. While it would have been ideal to include clinical skills in virtual stations, this would have required additional equipment and resources beyond the scope of this study. Nevertheless, exploring whether, and how, these virtual sessions could incorporate the assessment of clinical skills in a cost-effective manner is a key area for future consideration. Second, with only half of the Year 5 specialty placements being assessed virtually, there were limitations on the content that was tested in this study. While the number of stations per circuit was reduced to accommodate the large number of students who had registered for the sessions, it was recognised that incorporating stations from all of their specialty placements would have improved the experience. However, this had to be balanced with the time available to host these sessions whilst also ensuring that we were able to provide this resource to as many students as possible. This may explain why a key area of improvement highlighted in this study was related to the quality of examiner feedback. It is well recognised that examiner feedback is critical in mock OSCEs and that clear guidance and training should be provided to all examiners.13–15 While providing training prior to the sessions would have been difficult, it was clear that the written guidance provided needed to be more concise in detailing the scope and focus of the feedback provided to participants.
Ensuring that there is an adequate pool of examiners is also crucial. While peer debriefing has been shown to be beneficial, providing “true” feedback can be challenging, and it was recognised that having some students act as examiners on day 2, due to last-minute cancellations, may have introduced an element of bias and affected the feedback given. Although cancellations are unavoidable, incorporating these sessions into the weekday and main timetable in the future may alleviate these pressures.

Despite the insight gained from this study, it is important to acknowledge that data were gathered from 32% of participating students at a single UK medical school, and so there may be an element of selection bias. Interestingly, despite limited data being available on the role of virtual mock OSCEs, 69% of participants in this study had prior experience of such sessions and, as such, may have had preconceived notions of what to expect compared to those who were completely new to the experience. However, the number of participants who were new to virtual mock OSCEs would have been too small to generate meaningful data. Moreover, this study was restricted to Year 5 students, as Years 4 and 6 had already completed their examinations and this cohort had already been identified as having higher anxiety levels due to the pandemic limiting their clinical exposure and the cancellation of their previous year’s formative and summative OSCEs. Finally, it has to be acknowledged that while the results were statistically significant, gathering data across all clinical years, and even beyond UCLMS, would enable a higher confidence level and greater awareness of how virtual mock OSCEs can be developed and sustained within the undergraduate curriculum.

Although this study identifies how virtual mock OSCEs provide an alternative platform for students to gain practice prior to their summative examinations, they cannot replicate the settings offered by face-to-face mock sessions. The latter format remains an essential component of OSCE practice, as it gives students exposure to the “in-house” experience and the opportunity to test their clinical skills. However, given how keen students are to practise, and the advantages virtual mock OSCEs confer in avoiding the need to secure large venues and removing geographical restrictions on recruiting examiners, focusing on how they can be developed to augment learning within the undergraduate programme is vital. While this needs to be balanced with the digital infrastructure required to host such sessions, the authors believe that virtual mock OSCEs have a role, particularly in aiding communication skills practice among students.

Conclusion

The COVID-19 pandemic necessitated the adaptation of existing teaching and learning methods. While virtual mock OSCEs appear to improve preparedness but not overall confidence levels among medical students, their key limitations, compared to face-to-face OSCEs, relate to replicating exam conditions and assessing clinical skills. Although such sessions cannot, and should not, replace the more traditional format of face-to-face mock OSCEs, focusing on how these virtual sessions can be developed to complement the resources already offered in the undergraduate medical curriculum is vital. Crucially, this needs to explore if, and how, clinical skills can be incorporated into these virtual sessions, or if they are better suited for “assessing” communication skills. With medical students keen for any opportunity to practise, and given the logistical and financial advantages of hosting mock OSCEs virtually, this resource could be tailored to developing specific OSCE skills alongside the traditional face-to-face format.

Datasets

All data will be available upon reasonable request from the corresponding author.

Ethical Approval

Ethical Approval was gained from the UCL Research Ethics Committee ID: 20567/001.

Acknowledgments

We would like to thank all those who volunteered as OSCE station writers and examiners for the virtual sessions. We are also immensely grateful to the Clinical Teaching Fellows for checking the OSCE stations and to the Assessment Unit at UCLMS for their help in verifying the stations prior to their incorporation.

Author Contributions

All authors made a significant contribution to the work reported, whether that is in the conception, study design, execution, acquisition of data, analysis and interpretation, or in all these areas; took part in drafting, revising or critically reviewing the article; gave final approval of the version to be published; have agreed on the journal to which the article has been submitted; and agree to be accountable for all aspects of the work.

Funding

The research study was awarded a £500 grant from the Student Quality Improvement and Development fund (SQUID) at UCLMS. This was evenly distributed among the students/doctors acting as OSCE examiners for the virtual mock sessions.

Disclosure

There are no competing interests to declare.

References

1. Patrício MF, Julião M, Fareleira F, Carneiro AV. Is the OSCE a feasible tool to assess competencies in undergraduate medical education? Med Teach. 2013;35(6):503–514. doi:10.3109/0142159X.2013.774330

2. Miller GE. The assessment of clinical skills/competence/performance. Acad Med. 1990;65:S63–7. doi:10.1097/00001888-199009000-00045

3. Gormley G. Summative OSCEs in undergraduate medical education. Ulster Med J. 2011;80(3):127.

4. Harden RMG, Downie WW, Stevenson M, Wilson GM. Assessment of clinical competence using objective structured examination. Br Med J. 1975;1(5955):447–451. doi:10.1136/bmj.1.5955.447

5. Jefferies A, Simmons B, Tabak D, et al. Using an objective structured clinical examination (OSCE) to assess multiple physician competencies in postgraduate training. Med Teach. 2007;29(2–3):183–191. doi:10.1080/01421590701302290

6. General Medical Council. How are students assessed at medical schools across the UK? 2013. Available from: https://www.gmc-uk.org/-/media/documents/overarching_Assesment_audit_report_FINAL_pdf.pdf_59752384.pdf_60906546.pdf.

7. Majumder MAA, Kumar A, Krishnamurthy K, Ojeh N, Adams OP, Sa B. An evaluative study of objective structured clinical examination (OSCE): students and examiners perspectives. Adv Med Educ Pract. 2019;10:387–397. doi:10.2147/AMEP.S197275

8. Troncon LE. Clinical skills assessment: limitations to the introduction of an “OSCE” (objective structured clinical examination) in a traditional Brazilian medical school. Sao Paulo Med J. 2004;112:12–17. doi:10.1590/S1516-31802004000100004

9. Race P, Pickford R. Making Teaching Work: Teaching Smarter in Post-Compulsory Education. London: SAGE Publications; 2007.

10. Blamoun J, Hakemi A, Armstead T. A guide for medical students and residents preparing for formative, summative, and virtual objective structured clinical examination (OSCE): twenty tips and pointers. Adv Med Educ Pract. 2021;12:973. doi:10.2147/AMEP.S326488

11. Choi B, Jegatheeswaran L, Minocha A, Alhilani M, Nakhoul M, Mutengesa E. The impact of the COVID-19 pandemic on final year medical students in the United Kingdom: a national survey. BMC Med Educ. 2020;20:206. doi:10.1186/s12909-020-02117-1

12. Hopwood J, Myers G, Sturrock A. Twelve tips for conducting a virtual OSCE. Med Teach. 2021;43(6):633–636. doi:10.1080/0142159X.2020.1830961

13. Hytönen H, Näpänkangas R, Karaharju-Suvanto T, et al. Modification of national OSCE due to COVID-19 - Implementation and students’ feedback. Eur J Dent Educ. 2021;25(4):679–688. doi:10.1111/eje.12646

14. Blythe J, Patel NSA, Spiring W, et al. Undertaking a high stakes virtual OSCE (“VOSCE”) during Covid-19. BMC Med Educ. 2021;21(1). doi:10.1186/s12909-021-02660-5

15. Gulati RR, McCaffrey D, Bailie J, Warnock E. Virtually prepared! Student-led online clinical assessment. Educ Prim Care. 2021;32(4):245–246. doi:10.1080/14739879.2021.1908173

16. Kim KJ, Kim G. The efficacy of peer assessment in objective structured clinical examinations for formative feedback: a preliminary study. Korean J Med Educ. 2020;32(1):59. doi:10.3946/kjme.2020.153

17. Rashid MS, Sobowale O, Gore D. A near-peer teaching program designed, developed and delivered exclusively by recent medical graduates for final year medical students sitting the final objective structured clinical examination (OSCE). BMC Med Educ. 2011;11:11. doi:10.1186/1472-6920-11-11

18. Bevan J, Russell B, Marshall B. A new approach to OSCE preparation - PrOSCEs. BMC Med Educ. 2019;19:126. doi:10.1186/s12909-019-1571-5

19. Baruch Y, Holtom BC. Survey response rate levels and trends in organizational research. Human Relations. 2008;61(8):1139–1160. doi:10.1177/0018726708094863

20. O’Donoghue D, Davison G, Hanna LJ, et al. Calibration of confidence and assessed clinical skills competence in undergraduate paediatric OSCE scenarios: a mixed methods study. BMC Med Educ. 2018;18:211. doi:10.1186/s12909-018-1318-8

21. Chisnall B, Vince T, Hall S, Tribe R. Evaluation of outcomes of a formative objective structured clinical examination for second-year UK medical students. Int J Med Educ. 2015;6:76–83. doi:10.5116/ijme.5572.a534

22. Brinkman DJ, Tichelaar J, van Agtmael MA, de Vries TP, Richir MC. Self‐reported confidence in prescribing skills correlates poorly with assessed competence in fourth‐year medical students. J Clin Pharmacol. 2015;55:825–830. doi:10.1002/jcph.474

23. Nackman GB, Sutyak J, Lowry SF, Rettie C. Predictors of educational outcome: factors impacting performance on a standardized clinical evaluation. J Surg Res. 2002;106(2):314–318. doi:10.1006/jsre.2002.6477

24. Alkhateeb NE, Al-Dabbagh A, Ibrahim M, Al-Tawil NG. Effect of a formative objective structured clinical examination on the clinical performance of undergraduate medical students in a summative examination: a randomized controlled trial. Indian Pediatr. 2019;56(9):745–748. doi:10.1007/s13312-019-1641-0

25. Al Rushood M, Al-Eisa A. Factors predicting students’ performance in the final pediatrics OSCE. PLoS One. 2020;15(9):e0236484. doi:10.1371/journal.pone.0236484

© 2023 The Author(s). This work is published and licensed by Dove Medical Press Limited. The full terms of this license are available at https://www.dovepress.com/terms.php and incorporate the Creative Commons Attribution - Non Commercial (unported, v3.0) License.

By accessing the work you hereby accept the Terms. Non-commercial uses of the work are permitted without any further permission from Dove Medical Press Limited, provided the work is properly attributed. For permission for commercial use of this work, please see paragraphs 4.2 and 5 of our Terms.
