Advances in Medical Education and Practice, Volume 13

Teaching Undergraduate Medical Students Non-Technical Skills: An Evaluation Study of a Simulated Ward Experience

Received 13 October 2021

Accepted for publication 8 April 2022

Published 11 May 2022 Volume 2022:13 Pages 485–494

DOI https://doi.org/10.2147/AMEP.S344301

Checked for plagiarism Yes

Review by Single anonymous peer review

Editor who approved publication: Dr Md Anwarul Azim Majumder

Jennifer Pollard,1 Michal Tombs2

1Highland Medical Education Centre, University of Aberdeen, Inverness, UK; 2Centre for Medical Education, Cardiff University, Cardiff, UK

Correspondence: Michal Tombs, Centre for Medical Education, Cardiff University, Cardiff, UK

Purpose: Research suggests that medical students in the UK report a need to be better prepared for the non-technical skills required for the role of a junior doctor. A Simulated Ward Experience was developed in an attempt to address this need. The purpose of this study was (1) to evaluate the effectiveness of the Simulated Ward Experience by examining students’ reactions regarding the quality of teaching and (2) to examine the main drivers of learning and the extent to which students felt it helped them prepare for their future training.

Methods: A mixed-methods evaluation study was conducted using a questionnaire and focus groups. Final year students who participated in the Simulated Ward Experience were invited to contribute to the evaluation, of whom 25 completed the questionnaire and 13 took part in focus group interviews. Data analysis was conducted by means of descriptive statistics for questionnaire data and thematic analysis of focus group transcripts.

Results: The median Likert scores for quality of teaching Non-Technical Skills were either very good or excellent, demonstrating that students were highly satisfied with the way in which these were taught. Qualitative data provided further insights into the aspects of the intervention that promoted learning, and these were categorised into four themes: realism of the simulation; relevance for the role and responsibilities of the Foundation Year 1 Doctor (including Non-Technical Skills); learning from and with others; and supportive learning environment.

Conclusion: This evaluation study provides further evidence of the value of learning in a simulated setting, particularly when it is closely aligned to the real clinical context and creates opportunities to practise skills that are perceived to be relevant by the learner. Study limitations are recognised and suggestions for further studies are provided.

Keywords: evaluation, simulation, non-technical skills, preparedness

Introduction

Originating in the aviation and high-risk industries,1–3 Non-Technical Skills (NTS) are described as “the cognitive, social and personal resource skills that complement technical skills and contribute to safe and efficient task performance”.4 NTS often encompass skills such as communication, situational awareness, decision-making, prioritisation, and leadership, all of which are key domains within the General Medical Council’s “Generic Professional Capabilities Framework”.5 Based on the assumption that such skills are best taught in the workplace, most medical schools in the UK rely on clinical placements for the learning of NTS. This follows the claim that students are not fully aware of the need for the range of skills required and therefore take time to acquire them once they begin working, known as the “apprenticeship gap”.6 One example is the “assistantship” in the final year,7–10 during which students shadow a junior doctor and undertake supervised practice. However, given the reduction in teaching within the clinical environment,8,11–16 fewer opportunities to develop these essential skills have been noted.17,18 In addition, evidence suggests there is a great deal of inconsistency in learning experiences, with students often reporting inadequate supervision,8,19 and feeling underprepared for Foundation Training,7,19–21 particularly in NTS.6,7,14,17,22 Given the existing constraints medical educators face,16 simulation has provided an alternative educational solution.18,19

Educational interventions based on sound simulation principles have the potential of bridging a gap and addressing the need of students to be better prepared for Foundation Training. This is because simulation allows acquisition and practise of skills in a low-stress environment without risk to real patients.11,17,23 It also provides exposure to clinical situations not frequently encountered on the ward to increase the preparedness of trainees.8,14,24–26 Ward round simulations for undergraduate medical students provide the opportunity to develop skills in diagnosis and management, as well as decision-making, communication and teamwork.14,27 Behaviours demonstrated in the simulation environment have been shown to predict professional behaviours in the clinical environment28 and the literature supports simulation as a tool for preparing for clinical practice.7,8,15,17,23,29

In the face of the growing need to improve the teaching of generalised NTS to undergraduate medical students at our institution, a Simulated Ward Experience (SWE) was designed to encompass more generic skills.20,21,30 The SWE aimed to provide a realistic representation of the ward environment and a true reflection of the role of a junior doctor, with appropriate tasks representing those students will be expected to undertake once qualified. The research question of this evaluation study was set to explore students’ perceptions of their educational experience regarding the teaching and learning of NTS. It aimed at examining students’ perceptions regarding specific aspects of the intervention that drove learning and the extent to which these helped them prepare for their role as junior doctors.

Methods

The Simulated Ward Experience

The Simulated Ward Experience was originally developed by clinical teaching staff at a UK Medical School who recognised that one of the greatest challenges to patient safety that students face when transitioning to work as junior doctors is distraction management.31 After initial piloting, feedback from students highlighted a further need to embed the teaching of NTS relevant to the work of junior doctors and the SWE was revised to meet students’ identified needs.

Developed and taught by experienced clinical teaching staff who have been trained in simulation delivery and debriefing, SWE was delivered to final year medical students rotating through the campus. Designed as a half-day group teaching exercise, it was an additional learning opportunity to the regular programme of simulation-based clinical sessions included within the curriculum. The learning objectives and scenarios, summarised in Table 1, focused on NTS rather than clinical knowledge and included prioritisation, handover, communication with patients and other healthcare professionals, team working, and development of decision-making skills. Simulated patients were utilised to enhance the realism and were trained to provide students with constructive feedback.

Table 1 Summary of Simulation Scenarios and Learning Objectives

The session was delivered in a purpose-built training ward for a maximum of 6 students at a time. Students were briefed at the start of the session about the setup and learning objectives and were required to work in pairs. Each simulated scenario lasted 25 minutes and each pair of students participated in one of the three scenarios (see Supplementary data for an example simulation overview). Distraction elements were retained throughout the simulations to give participants the opportunity to experience their impact on concentration. These included pagers going off in the middle of tasks, a cleaner vacuuming during the ward round and a confused wandering patient. Students were expected to hand over to the next “shift” at the start of each subsequent simulation, ensuring all students got the opportunity to practise handover. The SWE then culminated in a 30-minute group debrief with an experienced facilitator. During debriefing, faculty supported students to identify strengths and challenges of each scenario, before choosing one or two NTS for in-depth discussion. This approach was adapted from techniques learned in the “Introduction to Simulation: Making it work” course at the Scottish Simulation Centre. Students were encouraged to lead the discussion, with input from faculty where appropriate. Tutors also took the opportunity to teach any pressing clinical issues that arose during the simulations, for example highlighting safe sedation practice.

Design and Sample

A mixed-methods approach using both a questionnaire and focus groups was utilised to explore students’ perceptions of SWE. This is because interpretation of questionnaire data from a relatively small sample is likely to yield meaningless results unless accompanied by rich contextual data. Final year students were notified about the study via email in advance of their SWE session and provided with a participant information sheet. At the end of the SWE they were invited to complete an anonymous questionnaire and asked to provide a contact email address if they wished to participate in a focus group interview. A total of 25 students completed the questionnaire (13 male, 12 female), equating to an 83% return rate, and 13 volunteers participated in focus group interviews (7 male, 6 female). The median age of participants was 23 years. Formal ethical approval was granted by the School of Medicine at Cardiff University prior to commencing this evaluation study and participants provided informed consent for publication of anonymised responses.

Materials and Procedure

This was a small-scale study designed to provide evaluation data regarding the quality of an educational intervention in a local context. Stakeholders were particularly keen to evaluate issues pertinent to them, including quality of teaching, drivers of learning and usefulness in preparing students for Foundation Training. The questionnaire and focus group interview schedule were designed after consulting the wider evaluation literature.32–34 Evaluations of educational experiences typically address questions regarding student-rated perceptions of confidence, satisfaction and enjoyment,32,33 primarily using a combination of Likert rating scales and free-text comments.35–37 This approach informed the development of the questionnaire for our evaluation. Face and content validity of the questionnaire and the interview schedule were established via consultation with tutors, inviting them to comment and contribute towards the final version, ensuring the questions tapped into quality of teaching of all components of SWE. The tools were piloted, and no changes were necessary.

Using a 5-point Likert scale, with 1 being poor and 5 being excellent, students were asked to rate the quality of teaching in relation to each of the seven NTS taught: prioritisation; handover; documenting in patients’ notes; managing the unwell patient; working as part of the ward team; discussing patients with other healthcare professionals; and communicating with patients. This was complemented by nine open-ended questions that aimed to expand upon the rating and tap into students’ perceptions of what they felt they learned from each aspect of the teaching experience (ie, the simulation, observing others, and debriefing). They were invited to comment about the set-up of the session, what they felt about the use of simulated patients and their thoughts about the scenarios. They were also asked about what aspects they enjoyed most, as well as what they enjoyed least. As a final question, they were invited to provide any suggestions on what could have been done differently and how the session could be improved.

Focus group interviews were conducted 1–2 weeks after each simulation, aiming to unearth a different way of understanding the SWE, which may have otherwise remained undiscovered if using the questionnaire alone.38 An interview schedule was developed to ensure questions focused on similar themes that emerged from the open-ended questions and provided an opportunity to probe into how students felt, what they thought about each aspect of SWE, and what they found particularly useful. We decided not to use the questionnaire results to inform the questions as the aim of the focus group was not to check our understanding of the answers but to try and elicit new data. The interview also provided an opportunity to explore whether, and what, students were able to practise in SWE that they were unable to practise on the real ward, and whether they translated their learning to the clinical environment.

Focus group volunteers were emailed interview details to arrange a mutually suitable date and time to conduct the interview. Three separate focus group interviews took place with 4, 5 and 4 participants in each group, respectively, lasting up to 60 minutes. The list of questions was emailed to participants ahead of their scheduled interview to ensure transparency and that the focus was on learning, not recall.

Data Analysis

Likert scales regarding quality of teaching were evaluated for descriptive statistics including median scores and overall ratings of each NTS. Median was chosen, rather than mean, because the data were skewed. Responses to open-ended questions were transcribed electronically and collated under each question heading. Manual complete coding of collated questionnaire data was undertaken independently by two researchers to generate key concepts, which were then reviewed and revised into candidate themes. A consensus was reached by discussion if there was disagreement over themes, which then informed the content of focus group questions.
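The choice of median over mean for skewed ratings can be illustrated with a minimal Python sketch. The ratings below are hypothetical values invented for illustration on the same 1 (poor) to 5 (excellent) scale; they are not the study's data, and the function name `summarise` is likewise an assumption.

```python
from statistics import mean, median

# Hypothetical 5-point Likert ratings (1 = poor, 5 = excellent) for one skill.
# These values are invented for illustration; they are NOT the study's data.
ratings = [5, 5, 5, 5, 4, 5, 4, 5, 5, 2]


def summarise(scores):
    """Return (median, mean) for a list of ordinal ratings."""
    return median(scores), round(mean(scores), 2)


med, avg = summarise(ratings)
print(f"median = {med}, mean = {avg}")  # median = 5.0, mean = 4.5
```

In this invented example a single low rating pulls the mean down to 4.5, while the median stays at the most typical response, which is why the median tends to be the more robust summary for ordinal, skewed ratings.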

Focus group interview transcripts were coded manually and themes created by grouping similar codes together. The first review yielded approximately 10–15 central concepts. Transcripts were reviewed and compared with questionnaire responses to ensure no themes were overlooked and used to identify any new emerging themes, and the four final themes were defined.39 Triangulation of the data by ascertaining facilitator perspectives in relation to their experiences of delivering the SWE was considered; however, this was not possible due to time constraints.40–44

Results

Evaluation of Teaching

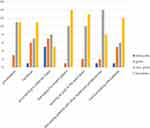

Students rated quality of teaching associated with all NTS as either very good (4) or excellent (5). “Documenting in the notes” was the only skill rated adequate (2) by more than one student (see Figure 1). Further exploration of median scores revealed that all NTS were rated on average as very good (4), with team working and managing the unwell patient identified as excellent (5).

Figure 1 Overall quality of teaching ratings for each NTS.

Answers to the open-ended questions highlighted that each aspect of the simulation appeared to provide a slightly different kind of learning. Students commented that participating in the simulation provided them with an opportunity to practise NTS, such as “the importance of prioritisation; how easy it is to become distracted by interruption”. Watching other students served as an opportunity to learn from others because they were “able to put yourself in their shoes and appreciate different ways of doing things”, as well as reflect on their own performance, as reported by one student: “[it] helped to watch as [we were] able to compare what was going on to what I might do differently”. Group debriefing enabled students to pull together learning experiences and discuss elements, as well as participate in peer feedback, for example: “it helps us to learn from what we have seen and raises good talking points”.

Drivers for Learning

Thematic analysis yielded four main themes reflecting participants’ perceptions of aspects of SWE that enabled learning to take place. For students, what drove learning was (1) the realism of the learning experience, (2) its relevance to their future role as FY1 doctors, (3) learning with and from others, and (4) having a supportive learning environment.

Theme 1: Realism of the Learning Experience

Defined as a true representation of the environment, expectations and encounters of a junior doctor, this was a common theme throughout the data, and several aspects were identified as contributing to the realism associated with SWE. One student noted: “I think similarity to the actual ward was what was quite useful”. Whilst scenarios and set-up were generally accepted to be realistic, the most prominent aspect enhancing realism and contributing to a positive learning experience was the use of simulated patients (SPs; lay people who role-play patients according to a specific script). Students overwhelmingly preferred interacting with SPs as this provided an opportunity to practise communication skills in a way that is superior to using manikins,51–53 as stated by one student: “[it was] good to have a real person to interact with. With manikins, you end up just going through the motions”.

Theme 2: Relevance to the Role of the FY1

This theme is defined as the way in which students perceived the experience to contribute to their understanding of the roles and responsibilities of an FY1 doctor. For example, “I don’t think you really get any better experience … than actually being in the role that you are going to be expected to be doing”. Comments such as “I think it was only until we did this that it dawned on me that … FY1 is about organisational skills” highlighted that SWE seemed to be useful in developing an appreciation of aspects of FY1 that may not be evident to students whilst undertaking real-life ward experience.

SWE provided an opportunity for students to practise having responsibility for patients and making decisions in a safe environment. It also appeared to give students “permission” to take a more proactive approach in the ward, by understanding the FY1 role. As noted by one student: “Writing in the notes. I feel a lot more comfortable … before I put the notes away, I will just double check if I am unsure. Whereas before I’d maybe just put it away and think “oh someone else will get it””.

Focus group data further reinforced questionnaire findings, illustrating that learning about prioritisation and how to do it well was a key objective for students, as noted by one student: “I learned mostly about prioritisation because you’re never really in a situation on the ward where you’re [the one] making the decisions”.

Students appreciated the opportunity to consider important attributes of team working throughout all aspects of SWE, not just participating in the simulation. This supports findings from questionnaire data, for example, one student commented it was “really useful to watch how people interact with colleagues and patients”. In particular, students identified that working alongside nurses was valuable, highlighting additional skills and attitudes that SWE is teaching our undergraduates. One student noted: “you are siloed into “oh as a medical student I only chat to doctors and nursing students only chat to nurses”. Whereas that ward simulation … in your mind you are like “ah … we have to do things together””. Students also welcomed the opportunity to experience a stressful working environment similar to the one they will be exposed to as qualified doctors:

I think it’s really good to be put under that stress. I think it will be just such a “thing” when we are an FY[1], that level of stress that we’ve never had before.

Theme 3: Learning with and from Others

Defined as the understanding of how to learn from and with others, including how to give peer feedback, this theme captures the way SWE gave students an opportunity to develop that understanding. Some of the richest data came from discussions surrounding learning from others, which was achieved in SWE primarily during peer observation and group debriefing. One student remarked: “I learned a lot from watching everybody else. It made you more aware of what other people were doing and made me reflect on what I had done”.

The observation aspect of SWE enabled students to actively participate in discussion and provide feedback to each other during debriefing, as suggested by one student: “It’s a useful opportunity to pick up tips from peers on how they would deal with different situations”. Group debriefing, as opposed to individual feedback, was also considered a useful element, as highlighted by one student:

not only are you debriefing the two people in the room, but you’re [also] debriefing your own thoughts and everything that you were thinking about when they were doing it.

There was universal acceptance of the value of peer feedback, but students were aware it can be challenging for them: “you want that slight boundary … you’ve got more of an agenda [because] you do need to still remain friends … you don’t want to upset people”. Reflecting on SWE, students recognised that learning with and from others is an essential part of their training, for example, “I guess that part’s also realistic of real life … if [a] situation like that happened on the ward … you’d go to your colleagues or seniors to talk it over”.

Theme 4: Supportive Learning Environment

This theme was defined as the understanding of the importance of a safe learning environment and reducing concerns about observation and judgement. Comments highlighted that being watched inherently came with judgement. Some students were concerned people observing would form negative opinions regarding decisions or behaviours expressed by the individual. There were subtle hints of this in questionnaire responses: when asked what students found least enjoyable about the session, one student commented “being watched by other people in another room who can talk freely about what you are doing [and] “mistakes” you make”. A lot of student language centred around being “right” or “wrong”, and fear of judgement as a result of being watched: “people end up talking about it … watching you and judging everything you’re doing”. Despite anxiety about being watched, most students agreed peer observation was a worthwhile feature, although the initial concept was daunting:

It was nerve-wracking a little bit because you were being watched, but you knew everyone else was going to be in the same situation … it felt like a good, supportive learning environment.

Students recognised that once they start working as doctors the learning environment may not feel as safe and noted that SWE was “such a good opportunity to do as much of this as possible before we’re released into the real world, and it’s real patients and there’s actual risk”.

Discussion

The challenges associated with increasing service demands and lack of time of senior doctors inevitably result in fewer opportunities for students to learn in the clinical environment.18,45,46 However, ward rounds remain an essential part of medical practice and our findings provide further evidence that simulation is a suitable educational solution to help prepare students for clinical practice. SWE used in the current setting focused on the teaching of NTS, alongside simulated distraction. These were consistently highlighted as elements students had either not been taught before, or had not gained adequate exposure to in the clinical environment. It was encouraging to find that students were satisfied with the quality of the teaching and that they appreciated the opportunity to learn and develop NTS such as prioritisation, teamwork, and communication with other healthcare professionals.

Findings suggest that the aspects that drove learning were: realism of the experience; relevance to their role as FY1 doctor; learning with and from others; and having a supportive learning environment. This further supports the argument that in order to optimise learning in a simulated context, it should mimic the clinical environment as closely as possible,17 and should be perceived as relevant by students.28 The most prominent aspect enhancing realism and contributing to a positive learning experience was the use of SPs. This finding is unsurprising as SPs are known to increase contextual fidelity of simulation,11,25 and those trained to give constructive feedback can further enhance the learning experience by providing a more holistic view of student performance and encouraging reflection on the patient’s perspective.47

For students, relevance to their role as FY1 doctors was particularly important, and related to this is the opportunity to be exposed to working under pressure.20 Students appreciated the opportunity to experience a stressful working environment similar to the one they will be exposed to as qualified doctors. The findings of this study are supportive of the general literature surrounding preparedness for practice.7,8,20,21,48

SWE introduced students to concepts of peer feedback, and the importance of learning with and from one another, which are essential for preparing for life as a junior doctor.11,49 Some of the richest data came from discussions surrounding learning from others, which was achieved in the SWE primarily by utilising peer observation and group debriefing, creating an environment perceived to be safe and supportive. Our findings therefore support previous literature on the importance of a supportive learning environment as a driver of learning, where students feel safe to make and learn from mistakes.50

Study Limitations

It is important to recognise that this study is not without limitations. Being a small-scale evaluation study, it helped understand the role of this specific SWE in our local context, but the findings cannot be generalised.51,52 In the wider context of 200 students per year, it is not possible to make general comments about the entire final year group. It is also worth noting that approximately 10% of the total medical school cohort are international students. The views of a representative sample of international students were captured in the questionnaire data; however, none of them volunteered to participate in interviews. It is hard to say whether this would have influenced the overall results of the study, but any follow-up study should aim to ensure there is representation of this group in interviews to be truly reflective of the whole cohort. Whilst aiming to overcome the criticism of mixed-methods questionnaires by conducting a qualitative evaluation a priori,53 qualitative studies can be difficult to control for bias, as the subjective nature of elements being studied is intimately related to the context in which they are studied.44 For this reason, the evaluation could have been enhanced by further validating and checking the reliability of the evaluation tools. Whilst responses to open-ended questionnaire items provided valuable information on what students learned and found useful, it was the rich data generated from focus groups that provided insights into what drove learning and what was particularly useful in preparing for Foundation Training.

Conclusion

Despite being small scale, the results of this evaluation study demonstrate that quality of teaching related to NTS in SWE is high. More than that, this intervention is creating an awareness of other skills, which could potentially facilitate transition into life as a junior doctor. This study therefore contributes to the large body of literature highlighting the advantages of using simulation in the teaching of NTS, and suggests that the efficacy of such interventions depends on the extent to which they adhere to solid design principles, including realism, relevance, learning with others and providing a supportive learning environment. What remains to be seen is whether SWE translates to real behavioural change in the clinical environment and how this impacts patient care. A follow-up longitudinal study evaluating attitudes of newly qualified FY1s who participated in SWE as undergraduates may help ascertain other aspects and possible benefits of the educational intervention.32,33

Abbreviations

DOHE, Department of Health England; FY1, foundation year 1 doctor; GMC, General Medical Council; NTS, non-technical skills; SWE, simulated ward experience; WHO, World Health Organisation.

Data Sharing Statement

The datasets generated and/or analysed during the current study are not publicly available due to GDPR rules but are available from the corresponding author on reasonable request.

Ethics Approval and Consent to Participate

Formal ethical approval was granted from Cardiff University prior to study recruitment (SMREC reference number 19/13).

Consent for Publication

Participants were informed via the participant information sheet and understood that by agreeing to participate they were consenting to anonymised data being used in any publication.

Acknowledgments

Thank you to all staff at the Highland Medical Education Centre and the students at University of Aberdeen for their participation and support throughout this project.

Author Contributions

All authors made a significant contribution to the work reported, whether that is in the conception, study design, execution, acquisition of data, analysis and interpretation, or in all these areas; took part in drafting, revising or critically reviewing the article; gave final approval of the version to be published; have agreed on the journal to which the article has been submitted; and agree to be accountable for all aspects of the work.

Funding

No funding was received for this study.

Disclosure

The authors report no conflicts of interest in this work.

References

1. Sexton JB, Thomas EJ, Helmreich RL. Error, stress, and teamwork in medicine and aviation: cross sectional surveys. BMJ. 2000;320(7237):745–749. doi:10.1136/bmj.320.7237.745

2. Helmreich RL. On error management: lessons from aviation. BMJ. 2000;320(7237):781–785. doi:10.1136/bmj.320.7237.781

3. Gordon M. Non-technical skills training to enhance patient safety. Clin Teach. 2013;10(3):170–175. doi:10.1111/j.1743-498X.2012.00640.x

4. Flin R, O’Connor P, Crichton M. Safety at the Sharp End: A Guide to Non-Technical Skills. Taylor & Francis; 2008.

5. General Medical Council. Generic professional capabilities framework; 2017:8–9.

6. Brown M, Shaw D, Sharples S, Le Jeune I, Blakey J. A survey-based cross-sectional study of doctors’ expectations and experiences of non-technical skills for out of hours work. BMJ Open. 2015;5(2):1–6. doi:10.1136/bmjopen-2014-006102

7. McGlynn MC, Scott HR, Thomson C, Peacock S, Paton C. How we equip undergraduates with prioritisation skills using simulated teaching scenarios. Med Teach. 2012;34(7):526–529. doi:10.3109/0142159X.2012.668235

8. Morgan J, Green V, Blair J. Using simulation to prepare for clinical practice. Clin Teach. 2018;15(1):57–61. doi:10.1111/tct.12631

9. General Medical Council. Good medical practice; 2013. doi:10.1016/B978-0-7020-3085-7.00001-8

10. General Medical Council. Promoting excellence: An international study into creating awareness of business excellence models. TQM J. 2000;6. doi:10.1108/17542730810867254

11. Ker J, Bradley P. Simulation in medical education. In: Swanwick T, editor. Understanding Medical Education: Evidence, Theory and Practice.

12. Hunt G, Wainwright P. Expanding the Role of the Nurse. Blackwell Scientific Publications; 1994.

13. McManus I, Richards P, Winder B. Clinical experience of UK medical students. Lancet. 1998;351:802–803. doi:10.1016/S0140-6736(05)79103-0

14. Gee C, Morrissey N, Hook S. Departmental induction and the simulated surgical ward round. Clin Teach. 2015;12(1):22–26. doi:10.1111/tct.12247

15. Harvey R, Mellanby E, Dearden E, Medjoub K, Edgar S. Developing non-technical ward-round skills. Clin Teach. 2015;12:336–340. doi:10.1111/tct.12344

16. Cleland J, Patey R, Thomas I, Walker K, O’Connor P, Russ S. Supporting transitions in medical career pathways: the role of simulation-based education. Adv Simul. 2016;1(1):1–9. doi:10.1186/s41077-016-0015-0

17. Ker JS, Hesketh EA, Anderson F, Johnston DA. Can a ward simulation exercise achieve the realism that reflects the complexity of everyday practice junior doctors encounter? Med Teach. 2006;28(4):330–334. doi:10.1080/01421590600627623

18. Laskaratos FM, Wallace D, Gkotsi D, Burns A, Epstein O. The educational value of ward rounds for junior trainees. Med Educ Online. 2015;20:1. doi:10.3402/meo.v20.27559

19. Nikendei C, Kraus B, Schrauth M, Briem S, Jünger J. Ward rounds: how prepared are future doctors? Med Teach. 2008;30(1):88–91. doi:10.1080/01421590701753468

20. Illing J, Morrow G, Kergon C, et al. How prepared are medical graduates to begin practice? A comparison of three diverse UK medical schools final report for the GMC April 2008; 2013.

21. Illing JC, Johnson N, Allen M, et al. Perceptions of UK medical graduates’ preparedness for practice: a multi-centre qualitative study reflecting the importance of learning on the job. BMC Med Educ. 2013;13:1. doi:10.1186/1472-6920-13-34

22. Pucher PH, Aggarwal R, Singh P, Srisatkunam T, Twaij A, Darzi A. Ward simulation to improve surgical ward round performance: a randomized controlled trial of a simulation-based curriculum. Ann Surg. 2014;260(2):236–243. doi:10.1097/SLA.0000000000000557

23. Mollo EA, Reinke CE, Nelson C, et al. The simulated ward: ideal for training clinical clerks in an era of patient safety. J Surg Res. 2012;177(1):e1–e6. doi:10.1016/j.jss.2012.03.050

24. Nikendei C, Kraus B, Lauber H, et al. An innovative model for teaching complex clinical procedures: integration of standardised patients into ward round training for final year students. Med Teach. 2007;29(2–3):246–252. doi:10.1080/01421590701299264

25. Stirling K, Hogg G, Ker J, Anderson F, Hanslip J, Byrne D. Using simulation to support doctors in difficulty. Clin Teach. 2012;9(5):285–289. doi:10.1111/j.1743-498X.2012.00541.x

26. Brigden D, Dangerfield P. The role of simulation in medical education. Clin Teach. 2008;5:167–170. doi:10.1053/j.scrs.2008.02.007

27. Behrens C, Dolmans DHJM, Leppink J, Gormley GJ, Driessen EW. Ward round simulation in final year medical students: does it promote students learning? Med Teach. 2018;40(2):199–204. doi:10.1080/0142159X.2017.1397616

28. Weller JM. Simulation in undergraduate medical education: bridging the gap between theory and practice. Med Educ. 2004;38:32–38. doi:10.1046/j.1365-2923.2004.01739.x

29. Mole LJ, McLafferty IHR. Evaluating a simulated ward exercise for third year student nurses. Nurse Educ Pract. 2004;4(2):91–99. doi:10.1016/S1471-5953(03)00031-3

30. Hagemann V, Herbstreit F, Kehren C, et al. Does teaching non-technical skills to medical students improve those skills and simulated patient outcome? Int J Med Educ. 2017;8:101–113. doi:10.5116/ijme.58c1.9f0d

31. Thomas I, Nicol L, Regan L, et al. Driven to distraction: a prospective controlled study of a simulated ward round experience to improve patient safety teaching for medical students. BMJ Qual Saf. 2015;24(2):154–161. doi:10.1136/bmjqs-2014-003272

32. Kirkpatrick D. Great ideas revisited. Techniques for evaluating training programs. Revisiting Kirkpatrick’s four-level model. Train Dev. 1996;50:54–59. doi:10.2217/nnm.11.175

33. Bates R. A critical analysis of evaluation practice: the Kirkpatrick model and the principle of beneficence. Eval Program Plann. 2004;27(3):341–347. doi:10.1016/j.evalprogplan.2004.04.011

34. Stufflebeam DL. The CIPP model for evaluation. In: International Handbook of Educational Evaluation.

35. Boynton PM, Greenhalgh T. Selecting, designing, and developing your questionnaire. BMJ. 2004;328(7451):1312–1315. doi:10.1136/bmj.328.7451.1312

36. Boynton PM. Administering, analysing, and reporting your questionnaire. BMJ. 2004;328(7452):1372–1375. doi:10.1136/bmj.328.7452.1372

37. Artino AR, La Rochelle JS, Dezee KJ, Gehlbach H. Developing questionnaires for educational research: AMEE Guide No. 87. Med Teach. 2014;36(6):463–474. doi:10.3109/0142159X.2014.889814

38. Lovato C, Wall D. Programme evaluation: improving practice, influencing policy and decision-making. In: Swanwick T, editor. Understanding Medical Education: Evidence, Theory and Practice.

39. Braun V, Clarke V. Moving towards analysis. In: Successful Qualitative Research. A Practical Guide for Beginners.

40. Cook DA. Twelve tips for evaluating educational programs. Med Teach. 2010;32(4):296–301. doi:10.3109/01421590903480121

41. Hart A. Ten common pitfalls to avoid when conducting quantitative research. Br J Midwifery. 2014;14(9):532–533. doi:10.12968/bjom.2006.14.9.21795

42. Mays N, Pope C. Qualitative research in health care: assessing quality in qualitative research. BMJ. 2000;320(7226):50–52. doi:10.1136/bmj.320.7226.50

43. Noble H, Smith J. Issues of validity and reliability in qualitative research. Evid Based Nurs. 2015;18(2):34–35. doi:10.1136/eb-2015-102054

44. Ng S, Baker L, Cristancho S, et al. Qualitative research in medical education: methodologies and methods. In: Swanwick T, editor. Understanding Medical Education: Evidence, Theory and Practice.

45. Powell N, Bruce CG, Redfern O. Teaching a “good” ward round. Clin Med J. 2015;15(2):135–138. doi:10.7861/clinmedicine.15-2-135

46. Force J, Thomas I, Buckley F. Reviving post-take surgical ward round teaching. Clin Teach. 2014;11(2):109–115. doi:10.1111/tct.12071

47. Spencer J, McKimm J. Patient involvement in medical education. In: Swanwick T, editor. Understanding Medical Education: Evidence, Theory and Practice.

48. Brennan N, Corrigan O, Allard J, et al. The transition from medical student to junior doctor: today’s experiences of tomorrow’s doctors. Med Educ. 2010;44(5):449–458. doi:10.1111/j.1365-2923.2009.03604.x

49. McLeod S. Maslow’s hierarchy of needs. Simply Psychol. 2019. Available from: https://www.simplypsychology.org/maslow.html.

50. McGregor CA, Paton C, Thomson C, Chandratilake M, Scott H. Preparing medical students for clinical decision making: a pilot study exploring how students make decisions and the perceived impact of a clinical decision making teaching intervention. Med Teach. 2012;34:7. doi:10.3109/0142159X.2012.670323

51. Braun V, Clarke V. Successful Qualitative Research. A Practical Guide for Beginners.

52. Castillo-Page L, Bodilly S, Bunton S. Understanding qualitative and quantitative research paradigms in academic medicine. Acad Med. 2012;87(3):386. doi:10.1097/ACM.0b013e318247c660

53. Horrocks N, Pounder R. Working the night shift: preparation, survival and recovery. Medicine. 2006;6(1):61.

© 2022 The Author(s). This work is published and licensed by Dove Medical Press Limited. The full terms of this license are available at https://www.dovepress.com/terms.php and incorporate the Creative Commons Attribution - Non Commercial (unported, v3.0) License.

By accessing the work you hereby accept the Terms. Non-commercial uses of the work are permitted without any further permission from Dove Medical Press Limited, provided the work is properly attributed. For permission for commercial use of this work, please see paragraphs 4.2 and 5 of our Terms.
