Advances in Medical Education and Practice, Volume 13

Integrating Medical Simulation into Residency Programs in Kingdom of Saudi Arabia

Authors Alzoraigi U, Almoziny S, Almarshed A, Alhaider S

Received 18 July 2022

Accepted for publication 3 November 2022

Published 7 December 2022 Volume 2022:13 Pages 1453–1464

DOI https://doi.org/10.2147/AMEP.S382842

Checked for plagiarism Yes

Review by Single anonymous peer review

Peer reviewer comments 4

Editor who approved publication: Dr Md Anwarul Azim Majumder

Usamah Alzoraigi,1 Shadi Almoziny,1 Abdullah Almarshed,1 Sami Alhaider1,2

1Center of Research, Education Simulation and Enhanced Training (CRESENT), King Fahad Medical City, Riyadh, Saudi Arabia; 2Executive Presidency of Academic Affairs, Saudi Commission for Health Specialties, Riyadh, Saudi Arabia

Correspondence: Usamah Alzoraigi, Center of Research, Education Simulation and Enhanced Training (CRESENT), King Fahad Medical City, Riyadh, 11525, Saudi Arabia, Tel +966 505104548, Email [email protected]

Purpose: This study conducted a simulation needs assessment on diverse ongoing residency training programs supervised by the Saudi Commission for Health Specialties (SCFHS) in Saudi Arabia. The goal was to develop a standardized methodological approach to integrate simulation as a teaching tool for any ongoing training program.

Methods: A mixed-methods approach was used in four steps to focus on top educational needs and integrate simulation into the curriculum. The first step was the selection of 38 residency training programs based on the scoring criteria tool. Of these, nine were selected as target programs. The next step was champion recruitment, where two faculty representatives from each specialty were trained to become specialty champions. The third step was a targeted audience needs assessment, consisting of four phases: curriculum review; a targeted audience survey; stakeholders’ interviews; and selection of top educational requirements generated by the first three phases. Lastly, the fourth step used an integration simulation sheet to build common themes for incorporating simulation into the program curriculum.

Results: Out of 38 programs, the nine selected top-ranked specialties completed the process, and roadmaps were developed. Using the combined list of all skills and behaviors, the final score proportion was calculated and then ranked. A list of the top needed skills and behaviors was compiled as follows: Obstetrics and Gynecology 10/84, Emergency Medicine (ER) 80/242, Intensive Care Unit 20/139, Internal Medicine (IM) 37/102, Pediatrics 82/135, Ear, Nose, and Throat (ENT) 49/125, General Surgery (GS) 55/114, Plastic Surgery 24/165, and Family Medicine (FM) 59/168.

Conclusion: Findings from this process could be used by the supervisory bodies at a country level and assist decision-makers to determine which criteria to use in the needs assessment to integrate simulation into any ongoing residency training program.

Keywords: research priority, need assessment, simulation curriculum, SCFHS, skills, behavior

Introduction

The postgraduate medical education system, in particular residency training programs, is changing considerably. Many countries have introduced competency-based training with outcome-based approaches, as well as competence-dependent rather than time-dependent certification. In the 1990s, the CanMEDS framework (Canadian Medical Education Directives for Specialists),1 followed by the Outcome Project of the ACGME (Accreditation Council for Graduate Medical Education in the USA), was created to improve resident physicians’ ability to deliver quality patient care and to work effectively within current and evolving healthcare delivery systems.2,3 In 2005, the “Entrustable Professional Activity” (EPA) was introduced, under which trainees who are independent enough are allowed to undertake unsupervised practice.4–6 This transition expanded the need for simulation in these programs, in addition to the need to match residency programs with the competencies expected during and after residency training.7

Simulation training helps residents practice and perform the basic skills and behaviors they need with a high level of accuracy before they step into a real-life clinical scenario. Moreover, simulation can be integrated into residency programs to enhance complex medical management and crisis resource management.8 Most residency training programs are governed by national regulatory bodies that monitor educational standards.

The Saudi Commission for Health Specialties (SCFHS), a national body, oversees more than 38 residency training programs in the Kingdom of Saudi Arabia. In recent years, SCFHS has transitioned from traditional medical education to competency-based training and is in the process of implementing the EPA education program.9,10 As with any transition, integrating simulation-based activities poses multiple challenges, such as introducing simulation activities into residency curricula that have never used simulation before; transforming part of the ongoing training activities into simulation-based activities; and aligning simulation-based activities with the EPA system and competency-based training.

Research has shown that needs assessment methods such as interviews, surveys, focus group discussions, and workshops can identify training gaps in specific specialties. Mixing these methods is recommended so that the assessment represents the gap accurately and stays relevant to learners’ needs. Designing a needs assessment that fits multiple diverse specialties, however, is a national challenge.11,12

Between 2018 and 2019, the SCFHS began integrating simulation into residency training. The initiative targeted training gaps in every specialty and allowed ongoing training continuity for different specialties. In this manuscript, we aim to develop a structured method for assessing simulation needs in the ongoing residency training programs belonging to the SCFHS, prioritizing the top-needed specialties and competencies.

Materials and Methods

In every program supervised by SCFHS, a scientific council is responsible for developing the curriculum and ensuring its effective implementation. In this study, a mixed-methods approach was used in four steps to focus on top educational needs and integrate simulation into the curriculum. A metric-based approach was also used to rank the list of skills and behaviors obtained from invited stakeholders. An overview of the process is shown in Figure 1.

Figure 1 Summary method to integrate simulation into residency program.

Step 1: Selecting Targeted Specialties

The SCFHS assigned a panel of three expert physicians, selected on the basis of their qualifications and more than 5 years’ experience in simulation-based education. Through discussion and brainstorming, the panel identified nine criteria, phrased as questions, for selecting the best target specialties. Each question was rated on a linear scale from 1 to 10, where 10 indicates high/strongly agree and 1 indicates the reverse, and the average rating of the panel was used. The criteria were as follows:

- Was simulation in this specialty applicable and were simulation resources available?

- Was simulation internationally used in this specialty?

- Are psychomotor skills in this specialty highly needed?

- Are the chances for skills training limited in the form of clinical exposure?

- Were clinicians in this specialty dealing with high patient acuity (ie, patients who need immediate intervention)?

- Were clinicians in this specialty dealing with crises?

- Does this specialty have a significant impact on patient safety?

- Does this specialty need strong interprofessional skills?

- Does this specialty need strong communication skills?

Each of the 38 specialties was evaluated based on the voting rank (1–10) of each panel member.
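The scoring described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the article reports that ratings were averaged and expressed as a percentage, but it does not give the exact formula, so the order of averaging, the function names `specialty_score` and `select_top`, and all ratings below are hypothetical.

```python
from statistics import mean

N_CRITERIA = 9    # nine selection questions
MAX_RATING = 10   # linear scale from 1 to 10


def specialty_score(ratings_by_expert):
    """Return a specialty's score as a percentage of the maximum rating.

    ratings_by_expert holds one list per panel member, each with nine
    ratings (1-10), one per criterion. Assumed formula, not the authors'.
    """
    for ratings in ratings_by_expert:
        if len(ratings) != N_CRITERIA:
            raise ValueError("each expert must rate all nine criteria")
        if any(not 1 <= r <= MAX_RATING for r in ratings):
            raise ValueError("ratings must lie between 1 and 10")
    # Average across experts for each criterion, then across criteria.
    per_criterion = [mean(column) for column in zip(*ratings_by_expert)]
    return 100 * mean(per_criterion) / MAX_RATING


def select_top(scores, k):
    """Return the k highest-scoring specialties, best first."""
    return sorted(scores, key=scores.get, reverse=True)[:k]


# Hypothetical ratings from three panel members, for illustration only.
panel = {
    "Specialty A": [[9] * 9, [8] * 9, [10] * 9],
    "Specialty B": [[5] * 9, [6] * 9, [4] * 9],
    "Specialty C": [[7] * 9, [7] * 9, [7] * 9],
}
scores = {name: specialty_score(r) for name, r in panel.items()}
top = select_top(scores, k=2)
```

In the study itself, the same idea would apply to all 38 specialties with `k=9`.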

There were nine specialties (24%) out of 38 SCFHS programs that scored high and were included in the study. The high score was determined based on answers to nine questions and review of the curriculum. The panel of experts considered five specialties targeted in stage one (first year) based on a large number of residents, such as obstetrics and gynecology, internal medicine, and pediatrics. Also, they considered acute care patterns of practice such as ER and ICU. The remaining four specialties were considered in stage two (second year).

Step 2: Selecting Targeted Champions

To involve specialty content experts for better engagement, SCFHS, as a national reference body, compiled a list of champions in each specialty based on its data. The specialty’s scientific council then nominated three names, and a panel of simulation experts selected two of them. The process resulted in two champions representing each specialty. All steps of the selection process were based on one or more of the following qualifications: expertise in the specialty subject; simulation expertise; experience in medical education; and instructorship in trainers’ courses. During an orientation workshop, the specialty champions’ tasks were explained and discussed in detail. The selected champions received an electronic orientation package, a single folder with sequentially numbered contents:

- Curriculum folder: the original specialty curriculum and a second file with the first list of skills and behaviors, extracted by the Curriculum Development Department at SCFHS.

- Electronic survey list: an Excel sheet ready to be sent out to collect survey responses for the second list of skills and behaviors.

- Focus group: an Excel sheet used during the focus group brainstorming at the workshop to collect the third list of skills and behaviors.

- Integration Simulation Sheet: an Excel sheet that helps specialty champions translate all collected skills and behaviors into educational objectives and link the current methods of delivering these objectives with future needs.

- Roadmap Submission Document: a Word document with the broad outline and titles of the final document, which is submitted to the Scientific Council for approval.

Step 3: Targeted Audience Simulation Need Assessment

The needs assessment followed a chronological sequence of four phases. Each of the first three phases produced a list of skills and behaviors. These skills and behaviors came from different sources and steps (curriculum, survey, and workshop) and in different formats (as objectives or titles), and were then merged into a single list. The three phases aimed to widen the pool of skills and behaviors by drawing on a larger population, so that training gaps would not be missed. The phases were: curriculum review; targeted audience survey; and focus group of stakeholders’ interviews. The final phase reduced the number of skills and behaviors to those most relevant to need and implementation.

Phase 1: Curriculum Review

After the panel of simulation experts reviewed the current curriculum, the specialty champions summarized the first set of skills and behaviors. During the review phase, the goal was to create a broad objective-based list of skills and behaviors.

Phase 2: Targeted Audience Survey

A simple survey consisting of open-ended questions was developed by the panel of three experts. It covered demographic data, skills important to residents for which clinical exposure is limited, the most critical behaviors that residents have limited opportunity to practice, and interprofessional skills and behaviors. The survey questionnaire was distributed to new graduates, program directors, and practicing consultants (please see Table 1). Recent graduates and program directors might recall examination performance that reflects training gaps, while practicing consultants might recall incidents in their daily clinical practice.

Table 1 Survey Questions

Phase 3: Focus Group of Stakeholders’ Interviews

The specialty champions conducted a half-day focus group meeting for targeted consultants, program directors, and final-year residents of each of the nine targeted specialties separately. The targeted participants were selected based on SCFHS data (program directors, senior residents, specialty council members). Communication was done through SCFHS channels since they are the responsible body for training. The specialty champions facilitated the meeting by asking structured questions to the participants to obtain more information on needed skills and behavior changes for each specialty. The meeting included three rounds of table discussions. The first round-table aimed to add skills and behaviors not addressed in curriculum analysis and survey results. By the end of round 1, the expanded list of skills and behaviors (divergent) was completed. A schedule of focus group discussion workshops is shown in Table 2.

Table 2 Focus Group Discussion Workshop Schedule
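The divergent part of the process, in which each phase adds only items not already captured, can be sketched as a merge of three lists. This is a minimal illustration, assuming that items are matched by a simple case-insensitive comparison. The article does not say how duplicates were recognized, and the item names and the helpers `normalize` and `merge_sources` are hypothetical.

```python
def normalize(item):
    """Lower-case an item and collapse whitespace so near-identical titles match."""
    return " ".join(item.lower().split())


def merge_sources(sources):
    """Merge ordered (source_name, items) pairs into one combined list.

    An item is credited to the first source that mentions it, mirroring
    the sequence curriculum review -> survey -> focus group.
    """
    merged = {}
    for source_name, items in sources:
        for item in items:
            key = normalize(item)
            if key not in merged:
                merged[key] = (item.strip(), source_name)
    return list(merged.values())


# Hypothetical items, for illustration only.
sources = [
    ("curriculum", ["Breaking bad news", "Central line insertion"]),
    ("survey", ["breaking  bad news", "Consent taking"]),
    ("focus group", ["Crisis resource management", "Consent Taking"]),
]
combined = merge_sources(sources)

# Count how many new items each source contributed.
counts = {}
for _, source_name in combined:
    counts[source_name] = counts.get(source_name, 0) + 1
```

Counting new items per source in this way gives the kind of per-phase tally that the article reports for each specialty.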

Phase 4: Selecting the Top-Ranked List of Skills and Behaviors

In the same interview, the second round-table discussion focused on the ranking list of skills and behaviors based on the curriculum, survey, and first round-table discussion. Each item was scored individually using a Google Form as an electronic scoring system (1–10) by the focus group. During the third round-table, re-arrangement of skills and behaviors from highest to lowest need was discussed, followed by agreement on an imaginary line (threshold line) above which all skills and behaviors would be required and funded.
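The ranking and threshold step can be illustrated with a short Python sketch. It assumes that each item's score is the mean of the individual 1–10 scores and that the threshold line is expressed as a position in the ranked list. The article describes the line as agreed through discussion rather than computed, so `n_funded` and all scores below are hypothetical.

```python
from statistics import mean


def rank_items(scores_by_item):
    """Sort items from highest to lowest mean score (ties broken alphabetically)."""
    means = {item: mean(scores) for item, scores in scores_by_item.items()}
    return sorted(means.items(), key=lambda pair: (-pair[1], pair[0]))


def above_threshold(ranked, n_funded):
    """Keep the items above the agreed threshold line.

    n_funded is the position of the line in the ranked list; in the study
    this position was set by agreement in the third round-table.
    """
    return [item for item, _ in ranked[:n_funded]]


# Hypothetical focus group scores (1-10), for illustration only.
responses = {
    "Breaking bad news": [9, 10, 8],
    "Central line insertion": [7, 6, 8],
    "Consent taking": [9, 9, 9],
    "Suturing": [4, 5, 3],
}
ranked = rank_items(responses)
funded = above_threshold(ranked, n_funded=2)
```

Keeping the cut as an explicit parameter reflects that the line was a group decision: the ranking informs the discussion, but does not set the threshold by itself.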

Step 4: Building Common Themes Using an Integration Simulation Sheet

To guide specialty champions in integrating simulation for the final ranked list, the panel of experts established a roadmap integration template. The sheet had five primary columns (themes; needs assessment objectives; the current delivery method of these objectives, if any; the suggested future simulation delivery method; and where to fit the proposed new simulation activities into the academic calendar) as well as space for any remarks or ideas (Figure 2 and Table 3). The panel of experts outlined the threshold line that determined the final ranked list for each specialty from the combined list of skills and behaviors required for each specialty.

Table 3 Drop-Down List for Suggested Future Activities

Figure 2 Sample Road Map Integration Sheet.

Also, the panel of experts proposed a drop-down list to help champions plan for future delivery methods and fit new simulation activities into the academic calendar as seen in Table 3.

The top-ranked skills and behaviors obtained from the above phases were incorporated into the roadmap integration simulation sheet as a final list of skills and behaviors.
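One way to picture the integration simulation sheet is as a list of rows that share the columns described above, grouped by theme. The sketch below is an assumption-laden illustration: the field names, the `SheetRow` class, and every value (including the delivery methods, since the drop-down options of Table 3 are not reproduced here) are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class SheetRow:
    """One row of a hypothetical integration simulation sheet."""
    theme: str
    objective: str
    current_delivery: str            # empty string if not currently delivered
    future_simulation_delivery: str
    calendar_slot: str
    remarks: str = ""


def group_by_theme(rows):
    """Collect the objectives listed under each theme, in sheet order."""
    themes = defaultdict(list)
    for row in rows:
        themes[row.theme].append(row.objective)
    return dict(themes)


# Hypothetical rows, for illustration only.
rows = [
    SheetRow("Communication", "Obtain informed consent", "Lecture",
             "Standardized patient", "Year 1, block 2"),
    SheetRow("Communication", "Break bad news", "",
             "Standardized patient", "Year 1, block 3"),
    SheetRow("Procedural skills", "Insert a central line", "Bedside teaching",
             "Task trainer", "Year 2, block 1"),
]
by_theme = group_by_theme(rows)
```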

Results

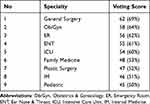

Each of the 38 specialty programs was assessed according to the ratings (1–10) of the expert panel. The ratings were averaged and expressed as a percentage to obtain each specialty’s score. Nine specialties scored high and were included in the study (please see Table 4). The high score was determined by answering the nine questions and reviewing the curriculum with a standard rating.

Table 4 Results of Specialty Voting Run by Panel of Experts

As shown in Table 5, each skill and behavior in each specialty was analyzed step by step and contributed to the educational themes. Interestingly, communication issues with patients (such as consent-taking, communicating bad news, and counseling) and between staff members (such as crisis management and team-based training) tended to appear as a training gap theme in all specialties except plastic surgery, which was heavily focused on skills. During the process, the participants stated that various communication skills were extremely important in the care of a patient. Nonetheless, most participants felt they had initially overestimated their knowledge of communication topics. Demographic differences were evident in the responses. These findings suggest a wide range of communication needs and indicate that optimizing the connection between healthcare professionals and patients is crucial.

Table 5 Summary of the Number of Skills and Behaviors in Every Specialty in Step 3 (Simulation Needs Assessment Methodology) and Step 4 (Development Integration Simulation Sheet)
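To make the reported counts easier to compare across specialties, the share of each combined list that ended up above the threshold line can be computed directly from the figures given in the abstract (top-ranked items over combined list size). The sketch below is a reading aid rather than the authors' analysis: whether this ratio is exactly the "final score proportion" they ranked is an assumption, and the name `retained_share` is hypothetical.

```python
# (top-ranked items, combined list size) per specialty, as reported in the abstract.
counts = {
    "Obstetrics and Gynecology": (10, 84),
    "Emergency Medicine": (80, 242),
    "Intensive Care Unit": (20, 139),
    "Internal Medicine": (37, 102),
    "Pediatrics": (82, 135),
    "Ear, Nose, and Throat": (49, 125),
    "General Surgery": (55, 114),
    "Plastic Surgery": (24, 165),
    "Family Medicine": (59, 168),
}


def retained_share(top, total):
    """Percentage of the combined list that made the top-ranked list."""
    return 100 * top / total


shares = {name: retained_share(*pair) for name, pair in counts.items()}

# Print specialties from the largest to the smallest retained share.
for name, share in sorted(shares.items(), key=lambda kv: kv[1], reverse=True):
    top, total = counts[name]
    print(f"{name}: {top}/{total} = {share:.1f}%")
```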

The panel considered five specialties targeted in stage one (first year) based on a large number of residents, such as obstetrics and gynecology, internal medicine, and pediatrics. Additionally, they considered acute care patterns of practice such as ER and ICU. The remaining four specialties were considered in stage two (second year).

By the end of the initiative, nine specialties had completed the process, resulting in nine roadmaps that were presented to the higher governing bodies for specialty training (scientific councils). Additionally, the roadmaps all had similar titles that preceded the steps in the methodology. Box 1 lists the components of the submission roadmap documents.

Box 1 Components of the Submission Roadmap Document

Discussion

The approach that we described has never been used in Saudi Arabia. Nine medical programs were analyzed and described as national standardized needs assessments that can be applied to different postgraduate studies. We considered the allocation of the existing training programs under the umbrella of the SCFHS to be the first step in facilitating the standardization of communication between simulation experts and specialists in the field (specialty scientific councils).

To explain how the process works, obstetrics/gynecology is used as an example. Following a review of the curriculum, the champions specified 49 skills and behaviors and then added five further skills and behaviors that were not in the curriculum. During the focus group discussion (Phase 3), participants listed an additional 30 skills and behaviors after the first round of table discussions, resulting in 84 skills and behaviors in total (49 + 5 + 30). In the third round of brainstorming, the participants agreed on 10 skills and behaviors that were above an imaginary threshold line and worthy of funding. In Step 4, the champions divided the final ranked list into three themes using an integration simulation sheet. Further, the panel developed specially designed guidelines for conducting the simulation train the trainers (TOT) courses. These guidelines covered many areas, including the qualifications of the instructor, course objectives, design, and facility (see Box 2 for TOT guidelines). Specifically, the TOT simulation education program can offer residents a safe learning environment that allows them to learn the essential concepts and gain on-the-job training experience to help improve patient outcomes. The trainers involved in the program had to have healthcare experience, be registered with the SCFHS, and hold a qualification in healthcare simulation. More importantly, they had to demonstrate that they had completed the simulation TOT course.13–17

Box 2 Simulation Train the Trainers (TOT) Courses Guidelines

The use of multiple sources to collect information on training gaps has been described in the literature as enabling more accurate definitions of training gaps. Mann and Chaytor described a large-scale survey and focus group discussion as a means of assessing the needs of a specific group of physicians.18,19 Following a chronological process, the authors analyzed the curriculum, conducted the survey, and then held focus groups. During the sequence of targeted audience simulation activities, we found that some skills and behaviors may not be covered in the curriculum while they are taught separately during scattered activities. However, sending a simple targeted audience survey through emails could identify these critical learning gaps, and the focus group of stakeholders’ interviews could identify additional learning gaps not addressed by earlier methods.

Salzman et al devised a six-step process for standardizing proposal submission and evaluation mechanisms. The project targeted 190 training programs and 1136 trainees. Their approach focused on recruiting simulation experts to develop a structured format for reviewing the curriculum. They then realized that those curricula might benefit from simulation experts’ mentoring and support in making proper decisions regarding funding.20 Furthermore, Wehbi et al published a needs assessment method for simulation-based training that targeted a single specialty (eg, emergency medical practice) to build customized curricula to cover training gaps on-site using mobile units in rural areas.21 The methodology sequence began with a focus group followed by surveys and analysis. Comparatively, we conducted our needs assessment work by beginning with a review of ongoing structural training programs, followed by an open-ended survey and then focusing on specialized group workshops.

In the study by Wehbi et al, a list of 30 skills/behaviors was compiled from focus group discussions, with eight themes covering 17 descriptions of skills/behaviors. Comparatively, in emergency medicine we identified 205 skills/behaviors from our curriculum review, eight from the targeted audience survey, and 29 from the focus group of stakeholders’ interviews (242 in total); 80 of these made the top-ranked list, and 13 themes emerged from the integration simulation sheet. An earlier study by Arab et al analyzed simulation in anesthesia residency training programs in Saudi Arabia using other approaches.22

The authors outlined a gradual transition schedule between 2014 and 2018. It began by implementing mandatory standardized workshops that matched the specialty curriculum and ongoing training activities.23 Consequently, anesthesia was excluded from the implementation plan.

Limitations of the Study

- The process was reconstructed retrospectively, based on reflective learning, until we reached a mature process.

- Listing skills and behaviors in the survey without writing structured objectives might not reflect the exact meaning of the gap; for example, communication with the patient might relate to breaking bad news or to dealing with difficult patients. This gap was raised and managed by participants during the focus group discussions and by specialty champions when filling in the integration simulation sheet. Additionally, when sending the survey in Phase 2, we did not record the exact size of the targeted groups and therefore could not calculate the response rate. The open-ended survey was sent to all specialty stakeholders without confirming receipt or counting the surveys sent, and we discovered this gap only when describing the process retrospectively.

The needs assessment detailed gaps in every specialty, including a list of objectives, themes, modules, recommended educational activities, and others not included in the current paper because they were outside the scope. Scrutinizing these details in every specialty may enable content experts to review these gaps and provide support for their decisions and future publications. It would be interesting to measure the secondary outcomes of training programs using the described methods, such as the competency level of graduates or patient safety.24 Several previous studies have illustrated the significance of assessing the secondary outcomes of any method used to create simulation needs assessments, particularly where skills and behaviors are concerned.25–29

Implementation Challenges

The approach used and described in our study was a creative idea supported by previous needs assessment work. We describe some of the challenges we faced so that future projects can learn from them, which might support the simulation community.11,30,31

Time and Space

The regular meetings between the simulation experts’ panel and the champions, for example, enriched the feedback on the process used. They were mainly designed to obtain a concurrent reflection, and they reported immediately after each step with review and feedback. Consequently, significant modifications and reviews of ongoing processes were carried out, which challenged the speed of the process and extended the time to complete the process. However, it can be asserted that our approach will greatly reduce the required time for conducting this needs assessment method in any simulation program. This would take no more than ten to twelve weeks to complete each cycle and develop a specialty road map without overstretching champions and stakeholders.

Champion Commitment

One of the challenges was champion commitment. We replaced two of the champions due to a lack of response. In addition, another specialty needed a third champion to review and revise a portion of its work because the methodology used differed from the proposal.

Variation in Required Skills by Specialty

Another challenge we encountered was the variation between specialties in training patterns and in the methods used to deliver educational activities. For instance, surgical specialties had more hands-on activities. Another example is emergency medicine, which had a long list of required skills and behaviors, and most of its training was based on joint rotations with other specialties.

Logistics and Accreditation

To ensure the successful implementation of this initiative, practical steps must be undertaken, including the readiness of simulation centers, availability of equipment, and educator skills in using simulation tools. The panel of three physicians at SCFHS trained in simulation-based education studied infrastructure readiness to ensure proper and smooth implementation. Consequently, summary road map reports were sent to simulation centers and program directors, asking for feedback. The feedback focused on the availability of similar training centers, the possibility of creating new activities, and the cost necessary for establishing such a facility.

Conclusion

Groups planning similar projects can draw several lessons from this work: 1) comparing needs assessments in the literature that follow different chronological sequences with our work in terms of primary outcome; 2) supporting organizations and national umbrella bodies with suggested needs assessment methods and prepared guided forms; 3) identifying and learning from the challenges encountered during this process; 4) raising awareness of cost-effective needs assessment methods that focus on implementing what is needed; and 5) providing solutions for logistics issues and showing how they affect needs assessment implementation.

We offer all medical practitioners interested in implementing the concluded simulation needs assessment an approachable and easy-to-apply methodology based on the needs assessment method (steps and phases). In the current study, we found that applying these descriptive methods might help target training deficiencies and make it easier for organizations and decision makers to supervise residency training programs, as well as strengthen the integration of simulations into ongoing residency training programs across the country.

Abbreviations

SCFHS, Saudi Commission for Health Specialties; Ob/Gyn, Obstetrics & Gynecology; ER, Emergency Room; ICU, Intensive Care Unit; IM, Internal Medicine; ENT, Ear, Nose & Throat; TOT, Train the trainers.

Data Sharing Statement

The datasets generated and/or analyzed during the current study are not publicly available due to the ownership of data by SCFHS but are available from the corresponding author on reasonable request.

Ethics Approval

The research was approved by the Institutional Review Board at King Fahad Medical City, Riyadh, Saudi Arabia. IRB registration number: 21-420.

Acknowledgments

Thanks to all of the program directors of the nine programs for their kind cooperation over the various stages, as well as to Scribendi Editing for helping with English language editing.

Author Contributions

All authors made a significant contribution to the work reported, whether that is in the conception, study design, execution, acquisition of data, analysis and interpretation, or in all these areas; took part in drafting, revising or critically reviewing the article; gave final approval of the version to be published; have agreed on the journal to which the article has been submitted; and agree to be accountable for all aspects of the work.

Funding

This study did not receive funds from any funding agency in the public, commercial or not-for-profit sectors.

Disclosure

All authors report no conflicts of interest in relation to this work and certify that they have no affiliations with or involvement in any organization or entity with any financial interest or non-financial interest in the subject matter or materials discussed in this manuscript.

References

1. Frank JR. The CanMEDS 2005 Physician Competency Framework: Better Standards, Better Physicians, Better Care. Ottawa: Royal College of Physicians and Surgeons of Canada; 2005.

2. Swing SR. The ACGME outcome project: retrospective and prospective. Med Teach. 2007;29(7):648–654. doi:10.1080/01421590701392903

3. Ten Cate O. Competency-based medical education and its competency-frameworks. In: Mulder M, editor. Competence Based Vocational and Professional Education Bridging the Worlds of Work and Education. Cham, Schweiz: Springer International Publishing Switzerland; 2017:903–929. doi:10.1007/978-3-319-41713-4_42

4. Ten Cate O. Entrustability of professional activities and competency-based training. Med Educ. 2005;39(12):1176–1177. doi:10.1111/j.1365-2929.2005.02341.x

5. Pangaro L, ten Cate O. Frameworks for learner assessment in medicine: AMEE Guide No. 78. Med Teach. 2013;35(6):e1197–e1210. doi:10.3109/0142159X.2013.788789

6. Ten Cate O. Competency-based postgraduate medical education: past, present, and future. J Med Educ. 2017;34(5):2366–5017.

7. McGaghie WC, Kristopaitis T. Deliberate practice and mastery learning: origins of expert medical performance. Res Med Educ. 2015;219.

8. Sam J, Pierse M, Al-Qahtani A, Cheng A. Implementation and evaluation of a simulation curriculum for paediatric residency programs including just-in-time in situ mock codes. Paediatr Child Health. 2012;17(2):e16–e20. doi:10.1093/pch/17.2.e16

9. Entrustable Professional Activity Plan in SCFHS Curricula. Available from: https://www.scfhs.org.sa/en/MESPS/Pages/EPA-.aspx.

10. SCFHS. Professional Classification and Registration Requirements Riyadh, KSA [Internet]; 2020. Available from: https://www.scfhs.org.sa/en/registration/Regulation/Pages/professional-registration-requirements-.aspx.

11. Azzam K, Wasi P, Patel A. Designing and implementing a comprehensive simulation curriculum in internal medicine residency. Canadian J General Int Med. 2012;7(3):548.

12. Kern DE, Thomas PA, Hughes MT. Curriculum Development for Medical Education: A Six-Step Approach. Baltimore: Johns Hopkins University Press; 2009.

13. Gaba DM. Simulations That are Challenging to the Psyche of Participants: How Much Should We Worry and About What? LWW; 2013.

14. Noureldin YA, Lee JY, McDougall EM, Sweet RM. Competency-based training and simulation: making a “valid” argument. J Endourol. 2018;32(2):84–93. doi:10.1089/end.2017.0650

15. Tipping J. Focus groups: a method of needs assessment. J Contin Educ Health Prof. 1998;18:150–154. doi:10.1002/chp.1340180304

16. Mann KV, Chaytor KM. Help! Is anyone listening? An assessment of learning needs of practicing physicians. Acad Med. 1992;67(Suppl 10):S4–S6. doi:10.1097/00001888-199210000-00021

17. Salzman DH, Wayne DB, Eppich WJ, et al. An institution-wide approach to submission, review, and funding of simulation-based curricula. Adv Simulation. 2017;2(1):9. doi:10.1186/s41077-017-0042-5

18. Wehbi NK, Wani R, Yang Y, et al. A needs assessment for simulation-based training of emergency medical providers in Nebraska, USA. Adv Simulation. 2018;3(1):22. doi:10.1186/s41077-018-0081-6

19. Arab A, Alatassi A, Alattas E, et al. Integration of simulation in postgraduate studies in Saudi Arabia: the current practice in anesthesia training program. Saudi J Anaesth. 2017;11(2):208. doi:10.4103/1658-354X.203059

20. SCFHS. Saudi Board: Anesthesia Curriculum. Riyadh, KSA: Saudi Commission for Health Specialties [Internet]; 2015. Available from: https://www.scfhs.org.sa/MESPS/TrainingProgs/TrainingProgsStatement/Documents/Anesthesia.pdf.

21. Grant J, Scragg P, Turner S. Learning needs assessment: assessing the need. BMJ. 2002;324(7333):324. doi:10.1136/bmj.324.7333.324

22. Barsuk JH, Cohen ER, Feinglass J, McGaghie WC, Wayne DB. Use of simulation-based education to reduce catheter-related bloodstream infections. Arch Intern Med. 2009;169(15):1420–1423. doi:10.1001/archinternmed.2009.215

23. Wayne DB, Didwania A, Feinglass J, Fudala MJ, Barsuk JH, McGaghie WC. Simulation-based education improves quality of care during cardiac arrest team responses at an academic teaching hospital: a case-control study. Chest. 2008;133(1):56–61. doi:10.1378/chest.07-0131

24. Dawe SR, Pena G, Windsor JA, et al. Systematic review of skills transfer after surgical simulation‐based training. Br J Surgery. 2014;101(9):1063–1076. doi:10.1002/bjs.9482

25. Zendejas B, Cook DA, Bingener J, et al. Simulation-based mastery learning improves patient outcomes in laparoscopic inguinal hernia repair: a randomized controlled trial. Ann Surg. 2011;254(3):502–511. doi:10.1097/SLA.0b013e31822c6994

26. Issenberg SB, McGaghie WC, Petrusa ER, Gordon DL, Scalese RJ. Features and uses of high-fidelity medical simulations that lead to effective learning: a BEME systematic review. Med Teach. 2005;27(1):10–28. doi:10.1080/01421590500046924

27. McLaughlin S, Fitch M, Goyal D, Hayden E, Kauh C, Laack T. SAEM Technology in Medical Education Committee and the Simulation Interest Group. Simulation in graduate medical education 2008: a review for emergency medicine. Acad Emerg Med. 2008;15(11):1117–1129. doi:10.1111/j.1553-2712.2008.00188.x

28. Otolaryngology Head and Neck Surgery Curriculum [Internet]. Available from: https://www.scfhs.org.sa/en/MESPS/TrainingProgs/List%20graduate%20programs/Documents/ENT.pdf.

29. Plastic Surgery Curriculum [Internet]. Available from: https://www.scfhs.org.sa/en/MESPS/TrainingProgs/List%20graduate%20programs/Documents/Plastic%20Surgery.pdf.

30. SCFHS Family Medicine Curriculum [Internet]. Available from: https://www.scfhs.org.sa/MESPS/TrainingProgs/TrainingProgsStatement/Documents/Family%20Medicine%202020.pdf.

31. So HY, Chen PP, Wong GKC, Chan TTN. Simulation in medical education. J Royal College Phys Edinburgh. 2019;49(1):845. doi:10.4997/JRCPE.2019.112

© 2022 The Author(s). This work is published and licensed by Dove Medical Press Limited. The full terms of this license are available at https://www.dovepress.com/terms.php and incorporate the Creative Commons Attribution - Non Commercial (unported, v3.0) License.

By accessing the work you hereby accept the Terms. Non-commercial uses of the work are permitted without any further permission from Dove Medical Press Limited, provided the work is properly attributed. For permission for commercial use of this work, please see paragraphs 4.2 and 5 of our Terms.
