Advances in Medical Education and Practice, Volume 9

Improving feedback by using first-person video during the emergency medicine clerkship

Authors: Hoonpongsimanont W, Feldman M, Bove N, Sahota PK, Velarde I, Anderson CL, Wiechmann W

Received 28 March 2018

Accepted for publication 7 May 2018

Published 10 August 2018; Volume 2018:9, Pages 559–565

DOI https://doi.org/10.2147/AMEP.S169511

Checked for plagiarism Yes

Review by Single anonymous peer review

Editor who approved publication: Dr Md Anwarul Azim Majumder

Wirachin Hoonpongsimanont,1 Maja Feldman,1 Nicholas Bove,1 Preet Kaur Sahota,1 Irene Velarde,1,2 Craig L Anderson,1 Warren Wiechmann1

1Department of Emergency Medicine, University of California, Irvine School of Medicine, Irvine, CA, USA; 2Touro University College of Osteopathic Medicine, Vallejo, CA, USA

Purpose: Providing feedback to students in the emergency department during their emergency medicine clerkship can be challenging due to time constraints, the logistics of direct observation, and limited privacy. The authors aimed to evaluate the effectiveness of first-person video, captured via Google Glass™, in enhancing feedback quality in medical student education.

Material and methods: As a clerkship requirement, students asked patients and attending physicians to wear the Google Glass™ device to record patient encounters and patient presentations, respectively. Afterwards, students reviewed the recordings with faculty, who provided formative and summative feedback during a private, one-on-one session. We introduced the intervention to 45 fourth-year medical students who completed their mandatory emergency medicine clerkships at a United States medical school during the 2015–2016 academic year.

Results: Students assessed their performances before and after the review sessions using standardized medical school evaluation forms. We compared students’ self-assessment scores to faculty assessment scores in 14 categories using descriptive statistics and symmetry tests. The overall mean self-assessment scores for each of the 14 categories ranged between 3 and 4 (out of 5). When evaluating the propensity of self-assessment scores to move toward the faculty assessment scores, we found no significant changes in any of the 14 categories. Although the changes were not statistically significant, one fifth of students shifted their perceptions of their clinical skills (history taking, performing physical exams, presenting cases, and developing differential diagnoses and plans) toward the faculty assessments after reviewing the video recordings.

Conclusion: Nevertheless, first-person video recording initiated the feedback process, allocated specific time and space for feedback, and could potentially substitute for direct observation. Additional studies, with different outcomes and larger sample sizes, are needed to understand the effectiveness of first-person video in improving feedback quality.

Keywords: clerkship, emergency medicine, feedback, medical student education, first-person video

Introduction

The emergency medicine (EM) clerkship is a unique clinical experience required by most medical schools. Most of the learning during the clerkship comes from observing, practicing, and receiving feedback. A key component of training in the EM clerkship is thorough feedback based on a variety of patient interactions, and this feedback is critical for students to refine their clinical skills.1 Nonetheless, studies suggest that feedback in EM occurs infrequently.2 Lack of time, space, and privacy in a busy emergency department (ED) limits opportunities for direct observation of students’ clinical skills.3 Only one third of clerkship directors met with medical students midway through their rotation, and learners spent less than 1% of their ED time under direct observation.4 Without direct observation of student–patient interactions, feedback is unlikely to be of value.

Previous studies illustrate the use of first-person video recording systems for teaching at various levels of training, from medical school to residency. These systems are also widely used in many fields of medicine, including surgery, family medicine, disaster relief, diagnostics, nursing, autopsy and postmortem examinations, wound care, and various medical subspecialties.5–7 To date, we have found very few studies on the use of this technique for teaching in EM. In this study, we aimed to evaluate the effectiveness of first-person video recording, captured by Google Glass™, in enhancing feedback quality in medical student education during the EM clerkship. Unlike previous Google Glass™-related studies, this study focuses on its use in the ED, a unique medical setting. We hypothesized that reviewing Google Glass™ recordings, with feedback from faculty, would provide students with insightful feedback on their clerkship performance and thus bring students’ self-evaluation scores closer to faculty evaluation scores.

Material and methods

Study protocol

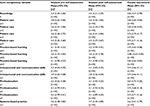

We conducted a cross-sectional study at a United States medical school during the 2015–2016 academic year. During their fourth-year EM clerkship, each student used the Google Glass™ device to record a patient encounter and a patient presentation in an urban, tertiary care, university-based ED. The clerkship director trained the students in the use of Google Glass™. The patient, assisted by the medical student, wore the Google Glass™ device and recorded the student during the encounter. Any ED patient who was willing to use the Google Glass™ device to record a student’s patient encounter was included in the study; no exclusion criteria were specified. After the patient encounter, a supervising attending or resident physician wore the Google Glass™ device and recorded the student’s patient presentation. As required by the EM clerkship, each student attended a 30-minute Google Glass™ feedback session to review their recordings with the clerkship director. The students were asked to assess their performance before and after the review session using standardized medical school evaluation forms (Table 1). The evaluation form was created by the medical school’s division of medical education and has been used in all required clerkships, including family medicine, surgery, internal medicine, and gynecology, for the past five years.

We collected students’ pre- and post-session self-assessment forms and obtained the standardized faculty assessment forms. Each faculty assessment form collated individual shift evaluations completed by multiple faculty evaluators; the clerkship director completed the forms and submitted them as the students’ clerkship grades. Each form consists of 14 categories that evaluate students on six core competencies mirroring residency training objectives: knowledge, patient care, practice-based learning, interpersonal and communication skills, professionalism, and systems-based practice. Each category is scored on a 5-point Likert scale: 1, problematic (not at expected level of proficiency in this area); 2, adequate (below expected level); 3, at expected level; 4, above expected level; and 5, clearly outstanding. The university’s Institutional Review Board approved the study as exempt.

Ethics statement

This study has been approved by the UC Irvine Institutional Review Board (UCI IRB) as Exempt from Federal regulations in accordance with 45 CFR 46.101. Informed consent was waived by the UCI IRB for this study.

Data analysis

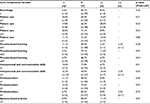

We compared the pre-self-assessment, post-self-assessment, and faculty assessment scores using descriptive statistics. For each of the 14 categories, we conducted symmetry and Stuart–Maxwell marginal homogeneity tests to determine whether the pre- and post-self-assessment scores differed from the faculty evaluation scores. For the propensity of self-assessment scores toward faculty scores, a positive value indicates that the student’s post-self-assessment score was closer to the faculty evaluation score than the pre-self-assessment score; a negative value indicates that it moved further away; and a value of 0 indicates no change in either direction. A p-value of 0.05 or less was considered significant. Statistical analyses were performed using Stata Statistical Software, Release 14 (StataCorp LP, College Station, TX, USA; 2015).
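The paper does not give the formula behind the propensity value or show the Stata commands used, so the following is only a minimal, dependency-free Python sketch under stated assumptions. It assumes (1) the propensity value is the reduction in absolute distance to the faculty score, |pre − faculty| − |post − faculty|, which yields the positive/negative/zero reading described above, and (2) the Stuart–Maxwell test is applied to (self-assessment, faculty) rating pairs for one category; the exact pairing the authors used is not stated. The Stuart–Maxwell statistic follows the textbook construction: differences between row and column margins of the paired-rating table (one category dropped), weighted by the inverse of their covariance matrix. The function names `direction_of_change`, `summarize_directions`, and `stuart_maxwell` are hypothetical, not from the study.

```python
from collections import Counter


def direction_of_change(pre, post, faculty):
    """Hypothetical propensity score (ASSUMED formula, not from the study):
    reduction in distance to the faculty score after the review session.
    > 0: moved closer, < 0: moved further away, 0: no change."""
    return abs(pre - faculty) - abs(post - faculty)


def summarize_directions(records):
    """Count closer/further/unchanged over (pre, post, faculty) triples."""
    counts = Counter()
    for pre, post, faculty in records:
        delta = direction_of_change(pre, post, faculty)
        if delta > 0:
            counts["closer"] += 1
        elif delta < 0:
            counts["further"] += 1
        else:
            counts["unchanged"] += 1
    return counts


def _solve(a, b):
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    m = len(b)
    aug = [[float(v) for v in row] + [float(bi)] for row, bi in zip(a, b)]
    for col in range(m):
        pivot = max(range(col, m), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("covariance matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(m):
            if r != col:
                factor = aug[r][col] / aug[col][col]
                for c in range(col, m + 1):
                    aug[r][c] -= factor * aug[col][c]
    return [aug[i][m] / aug[i][i] for i in range(m)]


def stuart_maxwell(pairs, categories=(1, 2, 3, 4, 5)):
    """Stuart-Maxwell marginal homogeneity statistic for paired ratings.

    pairs: iterable of (rating_a, rating_b), e.g. (self, faculty) for one
    category (an ASSUMED pairing). Missing scores must be dropped first.
    Returns (chi2_statistic, degrees_of_freedom)."""
    k = len(categories)
    idx = {c: i for i, c in enumerate(categories)}
    n = [[0] * k for _ in range(k)]  # n[i][j]: rated i by a, j by b
    for a, b in pairs:
        n[idx[a]][idx[b]] += 1
    row = [sum(n[i]) for i in range(k)]
    col = [sum(n[i][j] for i in range(k)) for j in range(k)]
    # Categories without discordant pairs add nothing and would make the
    # covariance matrix singular, so only "active" categories are kept.
    active = [i for i in range(k) if row[i] + col[i] - 2 * n[i][i] > 0]
    if len(active) < 2:
        return 0.0, 0  # perfect agreement: no evidence of marginal change
    keep = active[:-1]  # margin differences sum to zero; drop one category
    d = [row[i] - col[i] for i in keep]
    s = [[row[i] + col[i] - 2 * n[i][i] if i == j else -(n[i][j] + n[j][i])
          for j in keep] for i in keep]
    x = _solve(s, d)
    return sum(di * xi for di, xi in zip(d, x)), len(keep)
```

With two categories the statistic reduces to McNemar’s (b − c)²/(b + c). A p-value can be obtained by referring the statistic to a chi-squared distribution with the returned degrees of freedom (for example, `scipy.stats.chi2.sf(stat, df)` if SciPy is available). In Stata, the `symmetry` command reports both the symmetry test and the Stuart–Maxwell marginal homogeneity test; this sketch reproduces only the latter.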

Results

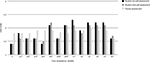

We reviewed a total of 135 assessment forms from 45 participants: 45 pre-session student self-assessments, 45 post-session student self-assessments, and 45 faculty evaluations. The overall mean scores for all forms, for each of the 14 categories, ranged between “at expected level” (3) and “above expected level” (4) (Table 2 and Figure 1). Two scores were missing in the practice-based learning category; these were accounted for in the statistical analyses.

The symmetry test analyses showed that, for most students, scores did not change in either direction when comparing the pre- and post-self-assessment scores with the faculty evaluation scores. None of the results were statistically significant (Table 3).

Discussion

The use of first-person video recording in the feedback and assessment of medical students has increased significantly in the last decade. Educators use this modality to enhance the learning experience, perform assessments, and teach procedures. Most available studies show that first-person video is a useful learning aid in various settings, including operating rooms, primary care clinics, standardized patient encounters, and other settings, but not the ED.5–7 Videos reviewed by students with expert feedback can improve student performance.8 Our study reaffirmed the feasibility of using Google Glass™ with ED patients, but we did not find a significant impact of incorporating Google Glass™ into the mandatory feedback sessions on learners’ self-perceptions.9

Google Glass™ offers several theoretical advantages when giving feedback to learners. First, Google Glass™ recordings provided context and refreshed learners’ memories of their patient encounters, which should enhance the effect of feedback.10 Reviewing video clips allows the educator to reference specific clinical skills and highlight aspects that the student may have otherwise overlooked. Second, Google Glass™ creates an opportunity for educators and learners to meet one-on-one in a designated space and time, a rare opportunity in a busy ED. The educator and learner each prepared for the feedback session, which, in turn, encouraged a positive learning environment. Third, Google Glass™ recordings allowed learners to see their encounters from the patient’s point of view. This unique perspective re-emphasizes the importance of nonverbal communication skills and the student’s professionalism. Lastly, beyond providing feedback, first-person review of student performance has significant potential to improve the summative evaluation process at the end of a clerkship. With Google Glass™ videos, faculty can thoroughly review a student’s presentation skills and patient interactions at their convenience. We expected that a better-prepared feedback session would make students more receptive to constructive feedback from faculty.

Although the changes were not statistically significant, one fifth of students shifted their perceptions of their clinical skills (history taking, performing physical exams, presenting cases, and developing differential diagnoses and plans) toward the faculty assessments after reviewing the video recordings. This may suggest that Google Glass™ could serve as an alternative means of directly observing history and physical examination skills, which the Liaison Committee on Medical Education typically requires.

By including a mandatory first-person video component, our clerkship curriculum ensured that every student received at least one opportunity for meaningful feedback. As described in the ABC of Learning and Teaching in Medicine, “a good course ensures that regular feedback opportunities are built in, so that both teachers and learners come to expect and plan for them.”10

Limitations

Our study found that Google Glass™ video recordings had no statistically significant impact on student self-assessment scores when compared with faculty evaluation scores. It is unclear whether this result reflects the ineffectiveness of first-person video itself in altering student perceptions or the quality of the faculty–student sessions in which the videos were reviewed. Faculty who provide feedback play an important role in the success of this process, and unstructured or unconstructive feedback could have contributed to the minimal changes we observed. Faculty should receive formal training in giving feedback to ensure that review sessions are effective.

We used faculty evaluations as our gold standard, recognizing that they are an imperfect one. Future studies should consider using patient assessments in conjunction with faculty assessments, or additional Google Glass™ recordings after the initial feedback session, to better evaluate the effectiveness of this feedback process.

The study design has limitations, as this was a cross-sectional study of only 45 medical student records. A larger sample would allow more precise comparisons and greater generalizability. Additionally, comparing a “Google Glass™ video group” with a control “no Google Glass™ video group” could clarify how much the Google Glass™ videos influence students’ pre- and post-self-assessments. Furthermore, we did not collect demographic or contextual information, such as participants’ gender and age, patients’ chief complaints, and attending physicians’ work experience, which limits the generalizability of these findings to other clerkships and medical schools.

Patient selection may also have been biased: students may have asked patients with whom they had better rapport to record their interactions. We must also consider the Hawthorne effect: students may have performed differently because they knew they were being recorded by the Google Glass™ device and that faculty would review the videos later. As a result, students may have performed more thoroughly and professionally during the recordings.

Educators should be aware that self-evaluation does not necessarily correlate with clinical performance, and there is no concrete evidence that self-evaluation scores predict how a student will perform in future clinical settings.

Conclusion

Although the study did not demonstrate statistically significant changes in students’ perceptions of their clerkship performance, reviewing first-person video recordings of medical students’ clinical interactions during mandatory feedback sessions could offer various advantages to both learners and educators. Future prospective studies, with larger sample sizes and different measurable outcomes, are needed.

Data sharing statement

The data that support the findings of this study are available from the corresponding author upon request.

Disclosure

The authors report no conflicts of interest in this work.

References

1. Bernard AW, Kman NE, Khandelwal S. Feedback in the emergency medicine clerkship. West J Emerg Med. 2011;12(4):537–542.

2. Yarris LM, Linden JA, Gene Hern H, et al. Attending and resident satisfaction with feedback in the emergency department. Acad Emerg Med. 2009;16 Suppl 2:S76–S81.

3. Fromme HB, Karani R, Downing SM. Direct observation in medical education: a review of the literature and evidence for validity. Mt Sinai J Med. 2009;76(4):365–371.

4. Khandelwal S, Way DP, Wald DA, et al. State of undergraduate education in emergency medicine: a national survey of clerkship directors. Acad Emerg Med. 2014;21(1):92–95.

5. Wei NJ, Dougherty B, Myers A, Badawy SM. Using Google Glass™ in surgical settings: systematic review. JMIR Mhealth Uhealth. 2018;6(3):e54.

6. Youm J, Wiechmann W. Formative feedback from the first-person perspective using Google Glass™ in a family medicine objective structured clinical examination station in the United States. J Educ Eval Health Prof. 2018;15:5.

7. Dougherty B, Badawy SM. Using Google Glass™ in nonsurgical medical settings: systematic review. JMIR Mhealth Uhealth. 2017;5(10):e159.

8. Hammoud MM, Morgan HK, Edwards ME, Lyon JA, White C. Is video review of patient encounters an effective tool for medical student learning? A review of the literature. Adv Med Educ Pract. 2012;3:19–30.

9. Tully J, Dameff C, Kaib S, Moffitt M. Recording medical students’ encounters with standardized patients using Google Glass: providing end-of-life clinical education. Acad Med. 2015;90(3):314–316.

10. Cantillon P, Wood D, editors. ABC of learning and teaching in medicine. 2nd ed. West Sussex, United Kingdom: John Wiley & Sons, Ltd; 2010.

© 2018 The Author(s). This work is published and licensed by Dove Medical Press Limited. The full terms of this license are available at https://www.dovepress.com/terms.php and incorporate the Creative Commons Attribution - Non Commercial (unported, v3.0) License.

By accessing the work you hereby accept the Terms. Non-commercial uses of the work are permitted without any further permission from Dove Medical Press Limited, provided the work is properly attributed. For permission for commercial use of this work, please see paragraphs 4.2 and 5 of our Terms.
