Journal of Multidisciplinary Healthcare, Volume 14

Google Glass-Supported Cooperative Training for Health Professionals: A Case Study Based on Using Remote Desktop Virtual Support

Authors Yoon H , Kim SK , Lee Y, Choi J

Received 22 March 2021

Accepted for publication 31 May 2021

Published 17 June 2021 Volume 2021:14 Pages 1451—1462

DOI https://doi.org/10.2147/JMDH.S311766

Checked for plagiarism Yes

Review by Single anonymous peer review

Peer reviewer comments 3

Editor who approved publication: Dr Scott Fraser

Hyoseok Yoon,1 Sun Kyung Kim,2 Youngho Lee,3 Jongmyung Choi3

1Division of Computer Engineering, Hanshin University, Osan, Korea; 2Department of Nursing, and Department of Biomedicine, Health & Life Convergence Sciences, BK21 Four, Biomedical and Healthcare Research Institute, Mokpo National University, Jeonnam, Korea; 3Department of Computer Engineering, Mokpo National University, Jeonnam, Korea

Correspondence: Sun Kyung Kim

Department of Nursing, and Department of Biomedicine, Health & Life Convergence Sciences, BK21 Four, Biomedical and Healthcare Research Institute, Mokpo National University, 1666 Yeongdan-ro, Cheonggye-myeon, Muan-gun, Jeonnam, 58554, Korea

Tel +82-61-450-6292

Email [email protected]

Youngho Lee

Department of Computer Engineering, Mokpo National University, 1666 Yeongdan-ro, Cheonggye-myeon, Muan-gun, Jeonnam, 58554, Korea

Tel +82-61-450-2448

Email [email protected]

Purpose: Observation of medical trainees’ care performance by experts can be extremely helpful for ensuring safety and providing quality care. The advanced technology of smart glasses enables health professionals to video stream their operations to remote supporters for collaboration and cooperation. This study monitored the clinical situation by using smart glasses for remote cooperative training via video streaming and clinical decision-making through simulation based on a scenario of emergency nursing care for patients with arrhythmia.

Participants and Methods: The clinical operations of bedside trainees, who wore the Google Glass Enterprise Edition 2 (Glass EE2), were live streamed via their Google Glasses and viewed at a remote site by remote supporters on a desktop computer. Data were obtained from 31 nursing students using eight essay questions regarding their experience as desktop-side remote supporters.

Results: Most of the participants reported feeling uneasy about identifying clinical situations (84%), patients’ condition (72%), and trainees’ performance (69%). The current system demonstrated sufficient performance with a satisfactory level of image quality and auditory communication, while network and connectivity are areas that require further improvement. The reported barriers to identifying situations on the remote desktop were predominantly a narrow field of view and motion blur in videos captured by Glass EE2s, and using the customized mirror mode.

Conclusion: The current commercial Glass EE2 can facilitate enriched communication between remotely located supporters and trainees by sharing live videos and audio during clinical operations. Further improvement of hardware and software user interfaces will ensure better applicability of smart glasses and video streaming functions to clinical practice settings.

Keywords: smart glass, Google Glass Enterprise Edition 2, remote support, cooperation, interaction, health professional

Introduction

Health professionals’ ability to collaborate with other experts over a network has become more important than ever to improve quality of care and maintain high standard practice.1 Expert knowledge is needed to develop an optimal solution for problems and prevent unnecessary errors, especially in care environments, where a high level of clinical expertise is costly and rare.2 Transfer of knowledge to a large proportion of inexperienced health providers is key to successful remote collaboration. An efficient approach to achieve this goal is to improve both the availability and accessibility of experts to trainees, at scale.

Systems for remote collaboration enable remote workers to work together as if they were present at the same site. Recently, industry has shown interest in synchronous remote collaboration systems that support collaboration between remote experts and local workers.3 One such system lets a remote expert monitor a local worker’s real-time video, facial expressions, and gaze.4 A few companies provide commercial tools through which workers can share real-time video with remote experts over a high-speed network.5

Currently, partly due to the COVID-19 pandemic, advancements in computing processors, graphics processors, form factors, and battery life that help overcome existing barriers to remote collaboration are being accelerated. A previous study suggests that wearable devices can help health professionals solve problems more quickly and safely via knowledge transfer using direct verbal and visual information.6 The first commercial smart glass was Google Glass. It was created for the general public, but due to functional limitations and a lack of public acceptance of smart glasses, it is now being developed for specialized target groups in business, industrial, medical, and military settings. The display, communication module, and computational power of smart glasses can be used to increase the efficiency of work.

There are many different types of smart glasses, such as the Glass Enterprise Edition 2 (Glass EE2), Vuzix Blade, Echo Frame, HoloLens, Magic Leap One, Nreal Light, BT-300, and SmartEyeglass; however, their features vary. Although the Glass EE2 and Vuzix Blade are both equipped with a monocular display, the Glass EE2 has a small display at the top of the right-side eyeglass so that the screen does not obstruct the view, whereas the Blade’s display is on the lens of the eyeglasses. Realwear-HMT1 is a video see-through head-mounted display (VST-HMD) that is specialized for manufacturing fields such as factories, has dustproof and waterproof functions, and operates entirely with voice recognition. The Nreal Light, BT-300, and SmartEyeglass have binocular displays. Unlike the aforementioned products, HoloLens 2 and Magic Leap One recognize space and visualize information in three dimensions. Additionally, they are capable of motion and voice recognition and are the most advanced augmented reality display devices. The Echo Frame released by Amazon is unique in that it has no display; its functions are accessed by communicating with Alexa through voice recognition. Details are summarized in Appendix 1.

The usability and feasibility of smart glasses in education and health care areas, such as reading health data, telemonitoring, video recording, documentation, and education, have been extensively examined.7,8 Google Glass, in particular, has been widely adopted in surgical operations, and previous reviews have identified its applicability as a videography device, monitoring device, and navigation display.9,10 Smart glasses adapted to medical settings have been used to offer a first-person point of view, mostly in motionless environments such as surgical settings. In particular, video streaming is appropriate not only for telemedicine but also for medical documentation, patient recognition, and training students.11,12 Along with state-of-the-art technology to deliver precise information to smart glasses, remote experts need to consider clinical circumstances. Compared with traditional in-person observation, smart glasses have greater efficacy in clinical use owing to automatic and unobtrusive data collection.13 A correct understanding of the clinical situation can facilitate seamless interactions among professionals, and the need for high-end technology for uninterrupted interactions has never been higher, especially since the outbreak of COVID-19.14 Smart glasses are small computers with a video camera that records what the wearer is seeing, thereby offering video streaming to remote viewers.15 This video streaming feature is suitable for clinical settings where supervision from more experienced experts is closely related to patient safety. Furthermore, a previous qualitative interview study revealed health professionals’ expectations that smart glasses would enable effective real-time information sharing so that they can discuss patients.16 However, the usefulness of smart glasses in the context of telemedicine is not well established. Audio and video streaming among health providers enables remote collaboration, and it is important to identify the factors influencing the process of information exchange.

Despite the benefits of streaming real-time videos, such as reduction of time and cost for remote observation or supervision, concerns regarding its actual usability in clinical practice remain. Previous studies have indicated that display resolution, field of view (FOV), internet connection, and image distortion determine the quality of the video streamed from smart glasses.17 In particular, wearers have focused on minimizing head movements to reduce motion blur in videos.17 However, this cannot be the case for certain clinical practices such as emergency units; thus, a comprehensive system evaluation would ensure the active application of smart glasses in the clinical environment.

Objectives

The present study aimed to assess the feasibility of a desktop user interface to monitor remote collaboration systems using the latest Google Glass (Glass EE 2) and to determine whether real-time video and audio provided via Glass EE2 is helpful, informative, and provides adequate information needed in emergency care settings. This study evaluated the usability of virtual support on remote desktops. The participants were the remote supporters who did not wear Google Glasses but watched and interpreted video streams, on a desktop program, that were generated by the trainee’s Glass EE2 and provided audio feedback to the trainee in real-time.

Materials and Methods

Setup

Hereinafter, we refer to the expert who sits in front of a desktop monitor as the “supporter” and the worker who works in an emergency room wearing a Glass EE2 as the “trainee.” Our system comprised a supporter-side desktop system, a trainee-side wearable system, and a network server. The supporter-side desktop system consisted of a typical desktop computer (ie, with a monitor, a mouse, and a keyboard), a headset (audio input/output), and software tools. The software tools were a remote video conferencing application based on App-RTC, used to monitor the trainee, and a program for transmitting images and text messages to the trainee-side wearable system (Figure 1).

Figure 1 Real-time video captured via the Google Glass EE2 is delivered to the remote supporters’ desktop screen.

The trainee-side wearable system comprised the Glass EE2, Bluetooth earphones, and a small mirror that is driven by a remote app we made. Bluetooth earphones were used because Google Glasses have built-in speakers, and the sound from another person’s Glass EE2 could otherwise confuse the trainees. Software for video and audio communication, based on App-RTC, and for receiving images and text messages was implemented. The supporter-side desktop system (display resolution 1920×1080) received the trainee’s video and voice and sent voice commands, images, and text messages for help in real time. The trainee-side wearable system received voice commands, images, and text messages from the supporter, and sent video and audio in real time.

The trainee-side wearable system consisted of the Glass EE2, Bluetooth earphones, and a small mirror attached to the Glass EE2. The Glass EE2 has a front camera (up to 1080p at 30 frames/s), Bluetooth-enabled audio input/output, and a touchpad. The trainee-side wearable system transmitted real-time video captured by the Glass EE2 camera and audio captured by the Glass EE2’s microphone, and received audio and image files transmitted from the supporter-side system. We used a Glass EE2 running Android Oreo 8.1 (API level 27) with firmware version OPM1.200625.001. Android Studio and the Android SDK 8.1 (API 27) were used as development tools.

We also developed a software tool that can transmit images and texts that are required for training. We implemented one-to-one video communication by accessing the WebRTC server using Google Chrome. Google provides the App-RTC (https://appr.tc/) server for free, along with Android sample source code (https://developers.google.com/glass-enterprise/samples/code-samples). App-RTC supports video calls with 640×480 video resolution on Android apps using Google’s Chrome browser. Text and images were transmitted over a direct connection between the desktop and the Glass EE2, without passing through the server.

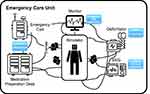

We developed software for delivering images and text messages from the supporter-side system to the trainee-side system. We used Unity3D to develop the desktop-side application, as shown in Figure 2. The application shows an array of images, and the image that the supporter clicks is sent to the trainee’s display. Additionally, there is a text box for messages to be sent from the supporter to the trainee. The application transmits data using UDP (User Datagram Protocol). On the trainee’s side, the images and text messages were displayed on the Glass EE2.
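As an illustration of the message path described above, the following is a minimal sketch of sending one text message over UDP. The source states only that UDP was used; the `TEXT:` prefix, the function names, and the loopback address standing in for the Glass EE2 are assumptions made for this example. The original desktop application was built in Unity3D, not Python.

```python
import socket

PREFIX = b"TEXT:"  # hypothetical message-type marker, not from the source


def send_text(message: str, address: tuple[str, int]) -> None:
    """Send one text message as a single UDP datagram."""
    payload = PREFIX + message.encode("utf-8")
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(payload, address)


def receive_text(sock: socket.socket, bufsize: int = 2048) -> str:
    """Receive one datagram and strip the hypothetical prefix."""
    data, _sender = sock.recvfrom(bufsize)
    if not data.startswith(PREFIX):
        raise ValueError("unexpected datagram type")
    return data[len(PREFIX):].decode("utf-8")


def demo() -> str:
    # Loopback stands in for the trainee-side device; port 0 lets the OS
    # choose a free port so the example does not assume a fixed one.
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as receiver:
        receiver.bind(("127.0.0.1", 0))
        receiver.settimeout(2.0)
        send_text("Check lead II placement", receiver.getsockname())
        return receive_text(receiver)


if __name__ == "__main__":
    print(demo())
```

Because UDP guarantees neither delivery nor ordering, a real implementation would likely need acknowledgements for text and chunking for images larger than a single datagram.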

Figure 2 A mirror attached to the Google Glass to increase the FOV.

An issue with the Glass EE2 is that a blind spot occurs because the camera faces forward, which makes it impossible to capture the area below. Nurses generally stand while handling devices on a desk, so a forward-facing camera cannot capture what is on the desk. To solve this problem, we attached a mirror to the Glass EE2 so that the video transmitted to the supporter showed both the front and the lower area simultaneously. As shown in Figure 2, the mirror attached to the Glass EE2 was made of a light plastic material. The trainee adjusted the direction of the mirror by rotating it. In the video that the supporter received, the top half showed the lower area and the bottom half showed the front view, which allowed the supporter to observe the trainee’s working situation.

Sample and Setting

Data were collected from 31 participants who attended supporter-trainee simulations and used two Glass EE2s to take part in the simulation program as both bedside trainees (glass wearers) and remote supporters. All participants completed a 15-hour simulation based on a scenario of patients with arrhythmia in an emergency unit. During the simulation, students were required to accomplish tasks related to each patient’s condition, including patient monitoring, EKG lead application, medication, and cardioversion (Figure 3). Students were required to take turns being bedside trainees and remote supporters. The inclusion criteria were nursing students who 1) participated in a team-based simulation of an emergency nursing scenario involving interprofessional interaction, 2) provided informed consent to participate, and 3) had taken on the role of desktop remote supporter within the scenario. This study obtained ethics approval from the Institutional Review Board of Mokpo National University in Korea (No. MNUIRB-201006-SB-011-02) in accordance with the Declaration of Helsinki.

Figure 3 Tasks that were required by the wearers and their range of movement during simulation.

Data Collection

A structured questionnaire comprising the following eight essay questions was used: 1) Were you able to figure out the clinical situation in general? If not, how can it be improved? 2) Were you able to determine the condition of the patients? If not, how can it be improved? 3) Were you able to figure out the trainees’ hand movements? If not, how can it be improved? 4) Were you able to determine what the trainees were doing? If not, how can it be improved? 5) Was the mirror-reflected display helpful to figure out what was happening in the field? 6) Did you have any difficulties because of the unsteady camera? 7) How did you find the display resolution? 8) How was the sound transmission? Was it sufficient to facilitate flawless communication?

Statistical Analysis

SPSS (version 25.0) was used to compute descriptive statistics, and values were expressed as numbers, percentages, means, and standard deviations.

Results

Study Participants

The participants’ mean age was 23.94 years and approximately 74% of them were female. Most participants were candidates for graduation (93.5%), and all the participants had previous experience with simulation-based training. Table 1 presents the general characteristics of the participants.

Table 1 General Characteristics of the Study Participants (n=31)

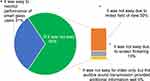

Identification of the Clinical Situation

Table 2 provides a summary of responses from participants to the essay questions. Twenty-seven (out of 32; 84%) reported that it was not easy to identify the overall situation due to narrow FOV (47%), motion blur (22%), and mirror mode of display (16%) (Figure 4).

Table 2 Summary of Responses from Participants

Figure 4 Ease of the overall situation identification.

Figure 5 shows examples of restrictions on situation identification during video streaming. These included limited information and a narrow FOV due to short-distance filming, which gave only a partial view in which the video captured a small part of the manikin. When devices were being applied, the mirror mode caused confusion, resulting in inaccurate directions from the supervisors to the trainees wearing the smart glasses. Lastly, motion blur was a predominant problem; frequent relocation of smart glasses wearers and an unstable network made it difficult to identify the situation.

Figure 5 Examples of restrictions related to video streaming: limited information, narrow field of view, and mirror mode.

Monitoring of Patients

Fewer than one-third of the participants (n=9, 28%) reported that monitoring the patient’s condition through the system was easy. Participants identified limited FOV (n=20, 63%) and network issues (n=3, 9%) as areas for further improvement (Figure 6).

Figure 6 Ease of identifying patients’ conditions in general.

Monitoring of Trainees

Seven participants (22%) found the mirror-reflected screen useful. Twenty-two participants (69%) perceived that monitoring trainees was not easy due to limited FOV and motion blur (Figure 7), and most participants experienced difficulty identifying trainees’ hand movements (n=30, 94%).

Figure 7 Ease of monitoring smart glass users’ performance.

Figure 8 shows an example of the systemic limitation of desktop-side video streaming. The short distance filming could capture only one hand of the trainee, which made it difficult to identify the performance of the trainees. In addition, the mirror mode increased confusion, hence advice for the decision-making process was only tentative.

Figure 8 Examples of mirror mode and limited FOV.

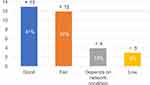

Quality of Image (Screen Resolution)

The majority of the participants expressed satisfaction with the screen resolution; 78% (n=25) responded that they had fair to good experience with video streaming provided via a desktop monitor (Figure 9).

Figure 9 Perceived screen resolution quality of the desktop computer.

The participants also reported that the unstable network led to low screen resolution, which made it difficult to identify patient conditions in the scenario situation.

Motion blur was experienced by 84% (n=27) of the participants, and 25% (n=5) reported irritation caused by unstable video streaming (Figure 10). Participants also reported that motion blur worsened with trainees’ moving speed and activity intensity, especially their head motions.

Figure 10 Difficulties during physical operation and on-the-go.

Auditory Communication

The study participants reported that the audio system did not work smoothly at times, and further improvements were requested for Bluetooth (n=11, 34%) and earphones (n=9, 28%) (Figure 11).

Figure 11 Perceived stability of sound transmission.
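The percentages in the Results can be recomputed from the reported counts. The sketch below assumes a denominator of 32, as stated for the first question (“out of 32”). The function name and labels are illustrative, and half-up rounding is an assumption, chosen because it reproduces the reported 63% for n=20.

```python
def percent_half_up(count: int, total: int) -> int:
    """Percentage rounded to the nearest integer, with halves rounded up.

    Uses integer arithmetic: floor(100 * count / total + 0.5).
    """
    return (200 * count + total) // (2 * total)


TOTAL = 32  # assumed denominator, taken from "Twenty-seven (out of 32; 84%)"

# (label, count) pairs copied from the Results text
reported = [
    ("overall situation not easy", 27),
    ("patient monitoring easy", 9),
    ("limited FOV (patient monitoring)", 20),
    ("network issues", 3),
    ("mirror-reflected screen useful", 7),
    ("trainee monitoring not easy", 22),
    ("hand movements hard to identify", 30),
    ("screen resolution fair to good", 25),
    ("Bluetooth needs improvement", 11),
]

percentages = {label: percent_half_up(n, TOTAL) for label, n in reported}

if __name__ == "__main__":
    for label, pct in percentages.items():
        print(f"{label}: {pct}%")
```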

Discussion

The present study evaluated the usability of virtual support on remote desktops for collaboration and investigated whether video streaming via Glass EE2 could assist remote supporters in monitoring clinical situations. Most monocular optical see-through smart glasses or AR glasses have similar hardware specifications (refer to Appendix 1) and produce comparable video streams, and are therefore likely to share the technical limitations reported in this study, which was conducted with the Glass EE2.

Our study specifically aimed to evaluate cooperative training, supported by Glass EE2, on the remote supporter’s end (ie, a desktop program user, rather than a user of an app on the Google Glass). Boillat et al18 reviewed studies, use cases, and scenarios involving people who wore smart glasses and used an app on them. The studies by Ponce et al,19 Hashimoto et al,20 and Datta et al21 are similar to our cooperative training, since they included trainees who wore smart glasses and remote supporters who watched what the trainees were viewing. However, these studies had some limitations related to using the Google Glass, such as limited FOV and viewing angles, which our study revisited using the latest Google Glass. In an attempt to provide a more usable user interface and more comprehensible video streams on the remote supporter’s end, we attached a customized mirror to the Glass EE2.

Our remote collaboration system’s current configuration demonstrated some deficiencies regarding streaming performance and video quality, in terms of both resolution and continuity, for the remote supporters. Specifically, this study’s findings identified a few limitations in the current model of Google Glass related to its clinical use. First, the videos generated by Google Glasses, even before they are streamed, are not of sufficient quality to be used for clinical purposes. In many cases, due to Google Glasses’ limited FOV, essential parts of the clinical scene that remote supporters need to interpret are either cut off or not recorded. The mediocre-quality videos are further degraded by the wearer’s rapid head movements (ie, motion blur in the video); therefore, interpreting such videos would require the experts to analyze them meticulously. Second, the streaming performance does not meet the remote supporter’s expectations because of delay, buffering, frozen frames, and dropped frames.

We intentionally designed the interactions of the trainees who wear the Glass EE2 to be minimal, non-obtrusive, and hands-free. Trainees (ie, nursing students) could focus on their training without worrying about operating the Glass EE2. With a pre-configured app created for this study, trainees could share their view and receive vocal feedback without learning how to operate the device or requiring technical expertise. The remote supporter’s instructions were shown on the trainee’s Glass EE2 display. The streamed videos were not shown on the Glass EE2 display, because they would overlap what the trainees were viewing directly.

Remote supporters reported difficulties in monitoring and identifying patient conditions because only partial information was available through a limited FOV. The limited FOV is due to the camera installed on trainees’ smart glasses. To provide a wider FOV and higher resolution images, advanced cameras, such as 360 cameras and ultra-wide fisheye lenses, can be used. However, larger high-resolution videos risk reducing streaming performance (ie, buffering and frame drops) because they require higher network bandwidth.22,23 Additionally, the remote supporters would require a high-resolution display to match the wearable camera’s resolution and a large screen monitor to enlarge objects of interest. Ideally, the camera capturing a patient should be firmly fixed (ie, attached to the ceiling or on the corners) to minimize motion blur in the transferred video. This approach is suitable for hospitals that can satisfy steady and controllable infrastructure requirements.
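To make the bandwidth trade-off concrete, the sketch below compares the uncompressed data rate of the 640×480 App-RTC stream with that of the camera’s maximum 1080p resolution. The 24 bits per pixel and the 30 frames/s applied to both resolutions are assumptions. Real streams are compressed by a video codec, so actual bitrates are far lower, but the relative scaling with pixel count still indicates why higher resolution strains the network.

```python
def raw_bitrate_mbps(width: int, height: int, fps: int = 30,
                     bits_per_pixel: int = 24) -> float:
    """Uncompressed video data rate in megabits per second."""
    return width * height * fps * bits_per_pixel / 1_000_000


vga = raw_bitrate_mbps(640, 480)        # resolution of the App-RTC video call
full_hd = raw_bitrate_mbps(1920, 1080)  # maximum resolution of the Glass EE2 camera

# Ratio of data rates equals the ratio of pixel counts when fps and depth match.
ratio = full_hd / vga

if __name__ == "__main__":
    print(f"640x480:   {vga:.1f} Mbit/s uncompressed")
    print(f"1920x1080: {full_hd:.1f} Mbit/s uncompressed")
    print(f"ratio:     {ratio:.2f}x")
```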

Our approach of using a trainee’s wearable camera is applicable to emergency care scenarios outside well-prepared facilities. Moreover, the trainee’s wearable camera can provide focused eye- and first-person views as needed to mitigate any blind spots in the fixed cameras.24 The videos captured via the trainee’s wearable camera can be stabilized using an additional gimbal and software-based image processing, which would increase equipment cost. On the remote supporter’s side, advanced controls of the received video, such as pausing the live video stream to capture a moment of interest on a separate window and the zoom-in function to take a closer look at a region of interest, would make the process more efficient.

There is another issue with video streaming: always-on cameras could be a violation of privacy.25 However, several strategies can be designed to protect the privacy of patients. For example, patient faces in the video can be blurred for anonymity, and a wide view mode can be used optionally or the remote supporters can authorize the use of 360 cameras. The actual use and acceptance of smart glasses in clinical settings are unknown, and there may be other issues requiring crucial consideration. The acceptance (due to the perceived ease of use) of a new technology system could differ among health professionals who are not digital natives. Additionally, there are concerns regarding regulatory requirements for its approval as a medical device. Moreover, the perceived resolution may not be sufficient for an actual clinical setting where the complexity of the disease condition could be far greater than that in a simulation setting. For example, the scenarios used in this study did not involve physical symptoms such as bruising or bleeding. Given the large body of evidence to support the suitability of smart glasses,9,10 the potential is high. Thus, future studies with device reinforcement are warranted to facilitate remote collaboration for wearers outside the simulation.

The remote supporters appreciated the multiple sources of information. When the real-time video did not provide sufficient information, an auditory communication system was used to compensate for the visual restraints. This is consistent with previous studies which showed that multimodal communication improves the clarity and accuracy of message delivery.26,27 In a dynamic hospital environment, remote assistance would be helpful only when the supporter grasps the situation comprehensively. Although diverse modes facilitate interprofessional communication, concerns remain as to whether multimodal communication is without side effects. Continuous attempts of remote supporters requesting additional information could impede the clinical performance of wearers.

Our current configuration relies on Bluetooth wireless earphones for audio communication between the trainee and remote supporters. Our choice of using Bluetooth should be compared and validated against other alternatives such as currently available audio-video communication smartphone apps28 and high-end Bluetooth sets.

The findings of this study revealed the constraints of using newly developed digital interprofessional interaction methods. Since excessive intervention by remote supporters could impede the clinical performance of wearers, a hierarchical guideline should be designed to prevent excessive multimodal communication at any given time. In the high-risk and time-constrained environments of hospitals, especially in emergency units, effective communication is key to quality care.29 Further considerations for developing guidelines for digital interprofessional interactions are needed. For example, simple pointing and capture of a patient would suffice for most cases, whereas assessing a patient’s moan is rarely required.

In this study, a customized mirror was attached to get a better view of the wearers’ performance; however, the outcome was not satisfactory because only a few users expressed that the glasses assisted their work. In fact, users reported that the half-and-half screen reduced the already narrow FOV, and that the mirror mode caused confusion from time to time, particularly regarding the regions of the body. According to the results, remote supporters would benefit from a sufficiently large monitor to correctly interpret transferred video of high resolution and a wide FOV. As discussed earlier, a wide FOV and higher resolution video would fit better on a larger display to provide a more usable UI and a more effective user experience. The transferred video can be clearly segmented into a mirror-mode area and a non-mirror-mode area. To reduce confusion among remote supporters, the mirror-mode area should be flipped vertically using image processing.
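The vertical flip proposed above can be sketched as follows. The example assumes that the mirror view occupies exactly the top half of each frame, as described in the Setup section, and represents a frame as a list of pixel rows. The function name is hypothetical, and a real implementation would apply the same operation to image arrays from a video pipeline.

```python
from typing import List, Sequence

Row = Sequence[int]


def unmirror_top_half(frame: Sequence[Row]) -> List[Row]:
    """Flip the top half of a frame vertically; leave the bottom half unchanged.

    Assumes the mirror-mode area is exactly the top half of the frame.
    """
    split = len(frame) // 2
    top = list(reversed(frame[:split]))  # reversing row order is a vertical flip
    bottom = list(frame[split:])
    return top + bottom


if __name__ == "__main__":
    # Tiny 4-row "frame": rows 0-1 are the mirror view, rows 2-3 the front view.
    frame = [[1, 1], [2, 2], [3, 3], [4, 4]]
    print(unmirror_top_half(frame))
```

If the mirror is rotated by the trainee, the boundary between the two areas may shift, so a fixed half-frame split is only a starting point.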

Limitations

There are limitations to this study that warrant caution when interpreting the findings. First, the small sample size of 31 may not represent all potential users in clinical settings. Second, because the participants were young (mean age = 23.94 years), perceived usefulness and ease of use could differ significantly for older people in clinical settings.30 Thus, it is likely that some important issues were not identified. Additionally, although advances in ICT can enable greater working interaction, they might cause technostress among older individuals. Perceived potential for mastery and optimism could predict active adoption of the current system among healthcare practitioners in clinical settings.31 Thus, a different approach (reinforcing the necessity of devices for improved safety) would help ensure a positive attitude toward smart glass-based interactions. Third, strong empirical evidence in support of the current system is required for it to be certified as a medical device. To be used in clinical settings for interactions between health professionals, the current system, including the smart glasses with the additional mirror accessory, needs to be certified as a medical device.32,33 Since it is of relatively low risk and has a purpose, gaining regulatory approval would ensure that the current model/version is safe for clinical use. Lastly, since we used essay questionnaires alone, we could not quantify the user experience. This may restrict future studies from having comparative outcomes. We focused on Google Glass-supported cooperative training for remote supporters who received visual information through the trainee’s wearable camera. In the future, remote collaboration from both sides should be assessed to determine the nature of interaction and feedback between the trainee and remote supporter bi-directionally.

Conclusion

The present study investigated the initial experience of a Google Glass-based video streaming system for remote collaboration. The clinical situation and trainees’ performance were streamed to develop a work environment where timely information and guidance were made available via the Google Glass. Using the Google Glass-based video streaming system, the remote supporters experienced some difficulties, especially regarding identifying clinical situations, patients’ conditions, and the trainees’ performance. The findings of this study could contribute to the design and implementation of cooperative training systems using smart glasses by identifying barriers to its implementation. Future studies should make further mechanical progress and upgrade the user interface to address the current limitations, technical issues, and unmet user requirements.

Acknowledgement

This research was supported by a grant (20012234) of Regional Customized Disaster-Safety R&D Program funded by the Ministry of Interior and Safety (MOIS, Korea).

Disclosure

The authors report no conflicts of interest in this work.

References

1. Li J, Talari P, Kelly A, et al. Interprofessional Teamwork Innovation Model (ITIM) to promote communication and patient-centred, coordinated care. BMJ Qual Saf. 2018;27(9):700–709. doi:10.1136/bmjqs-2017-007369

2. Rosenfeld E, Kinney S, Weiner C, et al. Interdisciplinary medication decision making by pharmacists in pediatric hospital settings: an ethnographic study. Res Social Adm Pharm. 2018;14(3):269–278. doi:10.1016/j.sapharm.2017.03.051

3. Sereno M, Wang X, Besancon L, Mcguffin MJ, Isenberg T. Collaborative work in augmented reality: a survey.

4. Lee Y, Masai K, Kunze K, Sugimoto M, Billinghurst M. A remote collaboration system with empathy glasses.

5. VIRNECT. VIRNECT. 2021. Available from: https://virnect.com/.

6. Faiola A, Belkacem I, Bergey D, Pecci I, Martin B. Towards the design of a smart glasses application for micu decision-support: assessing the human factors impact of data portability & accessibility. Proc Int Symp Hum Factors Ergon Healthc. 2019;8(1):52–56. doi:10.1177/2327857919081012

7. Wrzesińska N. The use of smart glasses in healthcare–review. MEDtube Sci. 2015;31.

8. Basoglu NA, Goken M, Dabic M, Ozdemir Gungor D, Daim TU. Exploring adoption of augmented reality smart glasses: applications in the medical industry. Front Eng Manag. 2018;5(2):167–181.

9. Wei NJ, Dougherty B, Myers A, Badawy SM. Using google glass in surgical settings: systematic review. JMIR Mhealth Uhealth. 2018;6(3):e54. doi:10.2196/mhealth.9409

10. Rahman R, Wood ME, Qian L, Price CL, Johnson AA, Osgood GM. Head-mounted display use in surgery: a systematic review. Surg Innov. 2020;27(1):88–100. doi:10.1177/1553350619871787

11. Mitrasinovic S, Camacho E, Trivedi N, et al. Clinical and surgical applications of smart glasses. Technol Health Care. 2015;23(4):381–401. doi:10.3233/THC-150910

12. Ruminski J, Smiatacz M, Bujnowski A, Andrushevich A, Biallas M, Kistler R. Interactions with recognized patients using smart glasses.

13. Ruminski J, Bujnowski A, Kocejko T, Andrushevich A, Biallas M, Kistler R. The data exchange between smart glasses and healthcare information systems using the HL7 FHIR standard.

14. Arslan F, Gerckens U. Virtual support for remote proctoring in TAVR during COVID-19. Catheter Cardiovasc Interv. 2021. doi:10.1002/ccd.29504

15. Kumar NM, Singh NK, Peddiny VK. Wearable smart glass: features, applications, current progress and challenges.

16. Romare C, Hass U, Skär L. Healthcare professionals’ views of smart glasses in intensive care: a qualitative study. Intensive Crit Care Nurs. 2018;45:66–71. doi:10.1016/j.iccn.2017.11.006

17. Hiranaka T, Nakanishi Y, Fujishiro T, et al. The use of smart glasses for surgical video streaming. Surg Innov. 2017;24(2):151–154. doi:10.1177/1553350616685431

18. Boillat T, Peter G, Homero R. Increasing completion rate and benefits of checklists: prospective evaluation of surgical safety checklists with smart glasses. JMIR Mhealth Uhealth. 2019;7(4):e13447. doi:10.2196/13447

19. Ponce BA, Menendez ME, Oladeji LO, Fryberger CT, Dantuluri PK. Emerging technology in surgical education: combining real-time augmented reality and wearable computing devices. Orthopedics. 2014;37(11):751–757. doi:10.3928/01477447-20141023-05

20. Hashimoto DA, Phitayakorn R, Fernandez-del Castillo C, Meireles O. Blinded assessment of video quality in wearable technology for telementoring in open surgery: the google glass experience. Surg Endosc. 2016;30(1):372–378. doi:10.1007/s00464-015-4178-x

21. Datta N, MacQueen IT, Schroeder AD, et al. Wearable technology for global surgical teleproctoring. J Surg Educ. 2015;72(6):1290–1295. doi:10.1016/j.jsurg.2015.07.004

22. Fan CL, Lo WC, Pai YT, Hsu CH. A survey on 360° video streaming: acquisition, transmission, and display. ACM Comput Surv. 2019;52(4):1–36. doi:10.1145/3329119

23. Yaqoob A, Bi T, Muntean G-M. A survey on adaptive 360° video streaming: solutions, challenges and opportunities. IEEE Commun Surv Tutor. 2020;22(4):2801–2838. doi:10.1109/COMST.2020.3006999

24. Mayol-Cuevas WW, Tordoff BJ, Murray DW. On the choice and placement of wearable vision sensors. IEEE Trans Syst Man Cybern a Syst Hum. 2009;39(2):414–425. doi:10.1109/TSMCA.2008.2010848

25. Hofmann B, Haustein D, Landeweerd L. Smart-glasses: exposing and elucidating the ethical issues. Sci Eng Ethics. 2017;23(3):701–721. doi:10.1007/s11948-016-9792-z

26. Pimmer C, Mateescu M, Zahn C, Genewein U. Smartphones as multimodal communication devices to facilitate clinical knowledge processes: randomized controlled trial. J Med Internet Res. 2013;15(11):e263. doi:10.2196/jmir.2758

27. Bresciani S, Arora P, Kernbach S. Education and culture affect visualization’s effectiveness for health communication.

28. Jeong J, Kim TH, Kang SB. A feasibility study of audio-video communication application using mobile telecommunication in inter-hospital transfer situations. Korean J Emerg Med Ser. 2019;23(1):125–134.

29. Prasad N, Fernando S, Willey S, et al. Online interprofessional simulation for undergraduate health professional students during the COVID-19 pandemic. J Interprof Care. 2020;34(5):706–710. doi:10.1080/13561820.2020.1811213

30. Schlomann A, Seifert A, Zank S, Woopen C, Rietz C. Use of Information and Communication Technology (ICT) devices among the oldest-old: loneliness, anomie, and autonomy. Innov Aging. 2020;4(2):igz050. doi:10.1093/geroni/igz050

31. Chopik WJ, Rikard RV, Cotten SR. Individual difference predictors of ICT use in older adulthood: a study of 17 candidate characteristics. Comput Human Behav. 2017;76:526–533. doi:10.1016/j.chb.2017.08.014

32. Akshay A, Venkatesh MP, Kumar P. Wearable healthcare technology-the regulatory perspective. Int J Drug Regul Aff. 2017;4(1):1–5. doi:10.22270/ijdra.v4i1.13

33. Erdmier C, Hatcher J, Lee M. Wearable device implications in the healthcare industry. J Med Eng Technol. 2016;40(4):141–148. doi:10.3109/03091902.2016.1153738

© 2021 The Author(s). This work is published and licensed by Dove Medical Press Limited. The full terms of this license are available at https://www.dovepress.com/terms.php and incorporate the Creative Commons Attribution - Non Commercial (unported, v3.0) License.

By accessing the work you hereby accept the Terms. Non-commercial uses of the work are permitted without any further permission from Dove Medical Press Limited, provided the work is properly attributed. For permission for commercial use of this work, please see paragraphs 4.2 and 5 of our Terms.
