Journal of Multidisciplinary Healthcare, Volume 16

Generative and Discriminative Learning for Lung X-Ray Analysis Based on Probabilistic Component Analysis

Authors: Alshamrani K, Alshamrani HA, Alqahtani FF, Alshehri AH, Althaiban SH

Received 21 September 2023

Accepted for publication 23 November 2023

Published 14 December 2023 Volume 2023:16 Pages 4039–4051

DOI https://doi.org/10.2147/JMDH.S437445

Checked for plagiarism Yes

Review by Single anonymous peer review

Peer reviewer comments 2

Editor who approved publication: Dr Scott Fraser

Khalaf Alshamrani,1,2 Hassan A Alshamrani,1 F F Alqahtani,1 Ali H Alshehri,1 Saleh Hudayban Althaiban3

1Radiological Science Department, Najran University, Najran, Saudi Arabia; 2Oncology and Metabolism Department, Medical School, University of Sheffield, Sheffield, United Kingdom; 3Department of Radiology, New Najran General Hospital, Najran, Saudi Arabia

Correspondence: Khalaf Alshamrani, Email [email protected]; [email protected]

Introduction: The paper presents a hybrid generative/discriminative classification method aimed at identifying abnormalities, such as cancer, in lung X-ray images.

Methods: The proposed method uses Probabilistic Component Analysis (PrCA) as a generative model and derives a generative embedding from it. The primary goal of PrCA is to model co-occurrence information within a probabilistic framework, with the aim of defining a feature vector space for X-ray data on which a kernel can be defined. A kernel-based classifier grounded in information-theoretic principles was then employed.

Results: The performance of the proposed method was evaluated with nearest neighbour (NN) and support vector machine (SVM) classifiers, which use a diagonal covariance matrix and incorporate standard linear and non-linear kernels, respectively.

Discussion: The method achieves superior accuracy and offers a viable solution to this class of problems. The accuracies achieved with the kernels in the NN and SVM classifiers were 95.02% and 92.45%, respectively, suggesting that the method is competitive with state-of-the-art approaches.

Keywords: generative learning, discriminative learning, probabilistic component analysis, nearest neighbour classifier, support vector machines classifier

Introduction

Recent developments in deep-learning techniques have led to significant progress in the field of imaging, including segmentation and classification. The analysis of medical imaging, such as X-ray imaging of the lungs, is one of the most popular deep-learning applications. Deep learning can automatically extract features from data, whereas conventional computer vision methods depend on manually created features. Deep learning for lung X-ray interpretation still faces obstacles such as the scarcity of labelled data and the need for tolerance to minute fluctuations in the input data.1–4

Most lung cancers first appear as small malignant nodules. Radiologists visually review chest X-ray images for malignant nodules, which is time-consuming and costly and is subject to operator bias. Although computer-aided diagnosis (CAD) systems have been used to assist radiologists in reading chest X-ray scans, automated discrimination of benign and malignant nodules on chest X-rays remains problematic for at least two reasons: lung nodules are difficult to delineate because of their wide range of shape and texture variations, and malignant and benign nodules share visual similarities. Non-specialists may have difficulty distinguishing between the two.

Researchers have suggested combining generative and discriminative learning approaches to overcome these issues.5 Generative models can supplement existing data by creating synthetic instances that can be used to train a discriminative model, which can then classify lung X-ray images and detect anomalies.6,7 Combining the two approaches makes it possible to exploit the advantages of each while improving the overall performance of the system.

Discriminative techniques model the conditional probability of the labels given the data, p(y | x), whereas generative techniques model the joint distribution p(x, y). The proposed method combines the two to improve classification: the generative model learns the structure of the data, and this structure is then used to train a discriminative classifier. The proposed model was trained using the Kaggle online dataset and a generative model for an underrepresented class.
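To make the distinction concrete, the following minimal Python sketch (NumPy only) contrasts the two families on synthetic one-dimensional data. It is an illustration under stated assumptions, not the authors' implementation: the data, the Gaussian class-conditional model and the logistic-regression learning rate are all hypothetical choices. The generative classifier estimates p(y) and p(x | y) and classifies with Bayes' rule; the discriminative classifier fits p(y | x) directly.

```python
import numpy as np

rng = np.random.default_rng(0)
# Synthetic 1-D feature for two classes (0 = normal, 1 = abnormal); illustrative only.
x = np.concatenate([rng.normal(-1.0, 1.0, 200), rng.normal(1.5, 1.0, 200)])
y = np.concatenate([np.zeros(200), np.ones(200)])


def gaussian_logpdf(v, mean, var):
    """Log density of a univariate Gaussian."""
    return -0.5 * (np.log(2.0 * np.pi * var) + (v - mean) ** 2 / var)


# Generative: model the joint p(x, y) = p(y) p(x | y) with one Gaussian per class.
priors = np.array([np.mean(y == c) for c in (0, 1)])
means = np.array([x[y == c].mean() for c in (0, 1)])
variances = np.array([x[y == c].var() for c in (0, 1)])


def generative_predict(v):
    # Bayes' rule: argmax_y p(y) p(x | y) is the MAP class.
    log_joint = np.stack(
        [np.log(priors[c]) + gaussian_logpdf(v, means[c], variances[c]) for c in (0, 1)],
        axis=1,
    )
    return log_joint.argmax(axis=1)


# Discriminative: model p(y | x) directly with logistic regression (gradient ascent).
w, b = 0.0, 0.0
for _ in range(2000):
    p = 1.0 / (1.0 + np.exp(-(w * x + b)))
    w += 0.1 * np.mean((y - p) * x)  # gradient of the mean log-likelihood w.r.t. w
    b += 0.1 * np.mean(y - p)        # gradient w.r.t. the bias


def discriminative_predict(v):
    return (w * v + b > 0).astype(int)


gen_acc = np.mean(generative_predict(x) == y)
disc_acc = np.mean(discriminative_predict(x) == y)
print(f"generative accuracy: {gen_acc:.3f}, discriminative accuracy: {disc_acc:.3f}")
```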

Deep learning algorithms have been very successful in computer vision because they provide a standard method for feature extraction and classification, which frees users from having to do feature extraction manually.8–12

Since this breakthrough, many medical image analysts have used deep convolutional neural networks (CNNs). The fully convolutional network (FCN), which uses upsampling layers so that the output matches the input size, offers a novel approach to image segmentation. Deep learning algorithms are more accurate than handcrafted feature-based methods; however, they have not performed as well in routine lung nodule classification as in the ImageNet Challenge. Medical image analysis typically relies on small datasets because of the labour involved in collecting and annotating images, which leads deep models to overfit and perform unsatisfactorily.

Many deep learning researchers have attempted to solve this small-data problem. One approach is to reuse image representations learned on ImageNet for general visual recognition tasks with little training data.13

Contribution

The objectives of this study are:

- To build a generative and discriminative learning model that accurately classifies X-ray images of healthy and diseased lungs, using a set of lung X-ray images.

- To detect lesions and other anomalies in the images and categorise them by severity.

- To provide medical practitioners with a robust and reliable approach to analysing lung X-rays that may aid the early detection and treatment of disorders.

Literature Review

The proposed method uses a hybrid generative-discriminative strategy to address the classification problem identified in previous studies.14 Hybrid generative-discriminative models typically proceed in three steps: first, a generative model that accurately describes the data at hand is learned; second, that model is used to map each object into a feature space, termed the generative embedding space; and third, a discriminative classifier is trained in that feature space. This class of techniques has been applied effectively in many different settings, particularly to non-vectorial data such as strings, trees and images.15,16

For the generative model, the probabilistic analysis technique17 was used. This powerful method was originally developed for unsupervised learning problems and has been widely used in medical informatics, computer vision and the imaging community.16,18–20 Two embeddings derived from the generative model trained on the given dataset have been considered: the recently proposed free energy score space (FESS) and the posterior distribution over topics (as in16). The latter has been shown to outperform other generative embeddings in a variety of circumstances.12,13

Typically, the feature vectors produced by generative embedding are fed to kernel-based classifiers such as support vector machines (SVMs) with linear or radial basis function (RBF) kernels. Here, newly developed information-theoretic (IT) kernels are used instead of these standard kernels.21–23
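As a point of reference for the standard kernels mentioned above, the sketch below computes linear and RBF Gram matrices over a few hypothetical posterior-embedding vectors (rows are non-negative and sum to one). The embedding values and the bandwidth gamma = 5.0 are illustrative assumptions, not values from the study; a Gram matrix like this is what a kernel-based classifier consumes.

```python
import numpy as np


def linear_kernel(X, Y):
    """k(x, y) = <x, y> for every pair of rows of X and Y."""
    return X @ Y.T


def rbf_kernel(X, Y, gamma=1.0):
    """k(x, y) = exp(-gamma * ||x - y||^2) for every pair of rows of X and Y."""
    sq_dists = (
        np.sum(X ** 2, axis=1)[:, None]
        + np.sum(Y ** 2, axis=1)[None, :]
        - 2.0 * X @ Y.T
    )
    return np.exp(-gamma * np.maximum(sq_dists, 0.0))  # clip tiny negative round-off


# Hypothetical generative embeddings: each row is one image's posterior over
# T = 4 latent components (non-negative entries that sum to 1).
E = np.array([
    [0.70, 0.10, 0.10, 0.10],
    [0.60, 0.20, 0.10, 0.10],
    [0.05, 0.05, 0.20, 0.70],
])

K_lin = linear_kernel(E, E)
K_rbf = rbf_kernel(E, E, gamma=5.0)
print(np.round(K_lin, 3))
print(np.round(K_rbf, 3))
```

In the RBF matrix the first two images, whose embeddings are close, receive a higher similarity than the first and third.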

Representation learning has been employed for lung CT tissue classification.14,24,25 Conventional (non-convolutional) neural networks have been trained on small subpatches taken from the patch to be classified.26 Entire dataset slices have been classified without ROIs using convolutional neural networks,27 and lung tissue in another lung CT dataset has also been classified with convolutional neural networks.28 Other lung CT applications of convolutional neural networks include lung nodule and lymph node detection. In an early application, a convolutional network was developed to reject or confirm preprocessed lung nodules.29,30 More recently, multiscale CNNs have been employed to classify lung nodules.31 Multi-layer autoencoders have been used to extract features for lung nodule classification,32 and a 2.5D convolutional neural network using several orthogonal 2D views has been proposed to identify lymph nodes in lung CT.33

One stream of methods for discriminative and generative learning uses variational autoencoders (VAEs), which are built on various latent variable models.34 VAEs are seldom used for per-pixel classification. Several generative learning approaches inspired by generative adversarial networks (GANs) have also been developed, including bidirectional GANs, in which an encoder maps the image distribution to the GAN latent space. Other publications have used the latent space for classification;35 these studies employed a compact model of the image data distribution to predict image labels in a supervised manner. Other researchers have used generative modelling and adversarial training to classify images; for example, GAN-based learning with Triple-GAN architectures has been proposed,36 in which GAN samples are concatenated with classifier-generated labels and passed to a discriminator for adversarial training. Apart from the approach in,37 none of these GAN-based approaches gives access to the joint probability distribution. This study is the first to evaluate image-distribution modelling approaches.

The rationale for using information-theoretic kernels is that they can exploit the probabilistic nature of generative embeddings, potentially improving the classification results of hybrid techniques. This work focuses on a specific class of kernels defined on normalised (probability) or non-normalised measures over multinomial representations, which are based on a non-extensive generalisation of classical Shannon information theory. Because generative embedding points can be treated as multinomial distributions, information-theoretic kernels are a natural choice for them.

The remainder of this paper is structured as follows: the Proposed Method section details the methodology, the Results and Discussion section presents the experimental findings, and the Conclusion and Future Work section concludes the paper.

Proposed Method

Materials

The proposed method was evaluated on a dataset of 214 images using a variety of features and kernels, and was compared with nearest neighbour classifiers and standard kernels on both the original feature space and the extended spaces produced by the generative embeddings. The findings suggest that this is a promising area for further investigation.

The goal of the proposed method is to achieve a high level of accuracy in classifying lung diseases by combining generative learning, discriminative learning and principal component analysis in a novel way. Compared with more standard machine learning algorithms for lung X-ray analysis, the method can learn from a smaller dataset and can capture more intricate correlations between the features present in lung X-ray images. The data used in the study are credible and were gathered from a variety of publicly accessible sources.

Methodology

The proposed generative-discriminative classification technique integrates both generative and discriminative models. Generative models describe the likelihood of a given feature set, whereas discriminative models describe the probability of a particular class given a feature set. Figure 1 shows the block diagram of the proposed method. The method exploits the advantages of both approaches and thereby achieves a significant improvement in classification accuracy. It begins by building a generative model that captures the underlying structure of the data; this model is used to estimate the likelihood of each feature for a given class. A discriminative model is then built on top of the generative model to predict the class of each feature set, using information from the generative model to increase its accuracy. The method is therefore useful for classification problems in which a single strategy may not capture the full complexity of the data. Given the characteristics described in the preceding section, the overall strategy can be summarised as follows:

- Generative model training: a generative model is trained on the training set.

- Generative embedding: the learned model is used to embed all problem-related items, including training and testing patterns, in a vector space.

- Discriminative classification: the items in the generative embedding space are classified. This study focuses specifically on information-theoretic kernels for SVM and nearest neighbour classifiers.

Figure 1 Block diagram of the proposed methodology.

Each of these steps is thoroughly explained in the subsections that follow.

Training Generative Models

Generative machine learning models can generate data similar to the data used to train them: they learn the data distribution and use it to produce new samples. Generative adversarial networks (GANs) set two neural networks against each other: the generator creates new data, and the discriminator distinguishes between real and generated data. Variational autoencoders (VAEs) learn the data distribution with an encoder, which maps the data into a latent space, and a decoder, which maps it back. Boltzmann machines use a probabilistic graphical model to learn the data distribution.

The generative model38 was first used in the computer vision field and has been widely adopted by the bioinformatics community for unsupervised learning. From a generative probabilistic perspective, Probabilistic Component Analysis (PrCA) models a set of co-occurrences of the form (I, I(i,j)), each of which records the presence of a specific pixel I(i,j) in an image I. The generative process assumed for these co-occurring pairs is as follows: first, a latent pixel component z is drawn from the distribution over the whole image, P(z); second, a pixel is drawn from the conditional distribution P(I(i,j) | z); and third, an image is drawn, independently of the pixel, from the conditional distribution P(I | z). The resulting joint distribution is

$$P\big(I, I(i,j)\big) = \sum_{z} P(z)\, P\big(I(i,j) \mid z\big)\, P(I \mid z),$$

where the sum runs over the set of pixels (latent components) included in the model.
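The generative process above has the same form as the symmetric aspect model of probabilistic latent semantic analysis, so one way its parameters could be estimated is expectation-maximisation (EM) on a count matrix. This is an assumption for illustration: the paper does not describe its estimation procedure, and treating each image as a "document" whose pixels have been quantised into a finite vocabulary of pixel features (the hypothetical matrix `N`) is our own choice. The dense (Z, D, W) responsibility array is fine for a toy example but would be too large for full-resolution images.

```python
import numpy as np


def fit_prca(N, n_components, n_iter=100, seed=0):
    """EM for the symmetric model P(d, w) = sum_z P(z) P(w | z) P(d | z).

    N[d, w] is the count of (hypothetical) quantised pixel feature w in image d.
    Returns P(z), P(w | z), P(d | z) and the log-likelihood at each iteration.
    """
    rng = np.random.default_rng(seed)
    D, W = N.shape
    Z = n_components
    p_z = np.full(Z, 1.0 / Z)
    p_w_z = rng.random((Z, W))
    p_w_z /= p_w_z.sum(axis=1, keepdims=True)
    p_d_z = rng.random((Z, D))
    p_d_z /= p_d_z.sum(axis=1, keepdims=True)
    log_lik = []
    for _ in range(n_iter):
        # E-step: joint P(z) P(d | z) P(w | z), shape (Z, D, W), and P(d, w).
        joint = p_z[:, None, None] * p_d_z[:, :, None] * p_w_z[:, None, :]
        total = joint.sum(axis=0)
        log_lik.append(float(np.sum(N * np.log(total + 1e-300))))
        resp = joint / (total[None, :, :] + 1e-300)  # P(z | d, w)
        # M-step: re-estimate each distribution from the expected counts.
        expected = resp * N[None, :, :]
        p_w_z = expected.sum(axis=1)
        p_w_z /= p_w_z.sum(axis=1, keepdims=True)
        p_d_z = expected.sum(axis=2)
        p_d_z /= p_d_z.sum(axis=1, keepdims=True)
        p_z = expected.sum(axis=(1, 2))
        p_z /= p_z.sum()
    return p_z, p_w_z, p_d_z, log_lik


# Usage on synthetic counts: 20 images, 30 pixel features, 4 latent components.
N = np.random.default_rng(1).poisson(3.0, size=(20, 30)).astype(float)
p_z, p_w_z, p_d_z, ll = fit_prca(N, n_components=4, n_iter=50)
print(np.round(p_z, 3), ll[0], ll[-1])
```

Because each iteration is a standard EM step, the recorded log-likelihood should never decrease, which is a convenient sanity check on any implementation.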

In the proposed method, specific pixels in the PrCA model correspond to the visual attributes described above, so the PrCA model learned from X-ray data can be regarded as defining visual themes. A distinct benefit of representing an X-ray image in terms of specific pixels is that each pixel topic is directly interpretable: it is a probability distribution over pixels that can identify a coherent cluster of abnormalities, such as a tumour. The ultimate objective is to provide information about complex systems and reveal potential hidden connections, which makes the representation useful for cancer diagnosis from X-ray images.

Generative Embedding

In machine learning, generative embedding produces vector representations of data, often with neural networks, and these representations are then used for classification, clustering and recommendation. The underlying assumption is that a model can learn to represent the structure of the data. The network is trained on a labelled dataset, during which each data point is represented by a vector and the high-dimensional input space is compressed into a lower-dimensional space that preserves its structure. Once trained, the network can produce vector representations of new data, which can then be classified, clustered or used for recommendation. Generative embedding offers two advantages over other machine learning methods. First, it represents data according to its underlying structure, unlike linear machine learning methods, which can only learn linear representations. Second, because the model is generative, it can also produce new data, making it a useful tool for data generation and augmentation.

In this stage, the learned model is used to project all identified training and testing patterns onto a vector space.

Several strategies have been proposed, each with its own advantages in terms of interpretability, effectiveness, efficiency and other factors. In this study, an approach whose effectiveness has been shown in several settings39,40 was used, in which the posterior distribution over latent components, P(z | I), serves as the embedding, in line with the requirements of the generative and discriminative model under consideration.

For a given image I, the posterior distribution embedding E(I) collects the posterior probabilities of the latent components:

$$E(I) = \big[P(z = 1 \mid I), \ldots, P(z = T \mid I)\big]^{\top}, \qquad P(z \mid I) = \frac{P(z)\, P(I \mid z)}{\sum_{z'} P(z')\, P(I \mid z')},$$

where z ranges over the set of pixels from 1 to T (T being the total number of pixels, that is, latent components, in the model). The idea is that healthy and malignant cells co-occur with various visual cues, and that the topic distribution P(z | I) captures these co-occurrences; it should therefore provide useful information for classification. This approach, based on topic posteriors, has previously been employed effectively in both computer vision41 and medical informatics.42
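Given the component prior P(z) and the image likelihoods P(I | z) of a fitted model, the posterior embedding E(I) follows directly from Bayes' rule. The minimal sketch below uses hand-picked toy values for a model with T = 3 components and D = 3 images; `posterior_embedding` is a hypothetical helper name, not part of the authors' code. For images that were not part of model fitting, P(z | I) would instead have to be estimated, for example by the standard "folding-in" EM step with P(I(i,j) | z) held fixed; that step is not shown.

```python
import numpy as np


def posterior_embedding(p_z, p_d_z):
    """E(I) = [P(z=1 | I), ..., P(z=T | I)] via Bayes' rule, one row per image.

    p_z   : shape (T,), prior over latent components P(z)
    p_d_z : shape (T, D), likelihood P(I | z) of each of D images
    """
    joint = p_z[:, None] * p_d_z                             # P(z) P(I | z), shape (T, D)
    return (joint / joint.sum(axis=0, keepdims=True)).T      # normalise over z, shape (D, T)


# Toy parameters (hypothetical): each row of p_d_z is a distribution over images.
p_z = np.array([0.5, 0.3, 0.2])
p_d_z = np.array([
    [0.6, 0.3, 0.1],
    [0.1, 0.1, 0.8],
    [0.2, 0.5, 0.3],
])
E = posterior_embedding(p_z, p_d_z)
print(np.round(E, 3))
```

Every row of `E` is non-negative and sums to one, which is the property the information-theoretic kernels rely on.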

The embedding of43 has been shown in several applications to perform better than other generative embeddings, such as those in.8,15 Importantly, all components of the proposed embedding of any X-ray image are non-negative, as in the posterior distribution embedding.

Classification of Cancer Cells Using Discriminative-Generative Embedding

Discriminative-Generative Embedding (DGE) is a machine learning approach that can be used to classify cancer cells. It improves performance by combining discriminative and generative models. Discriminative models classify data effectively, but poorly labelled data make them harder to train; generative models are effective at learning the data distribution, but not at classification. DGE addresses these problems by classifying data points with a discriminative model and learning the data distribution with a generative model, and the discriminative model initialises the generative model, which improves performance. DGE has classified cancer cells with 97% accuracy in natural medicine, outperforming purely discriminative and purely generative models.

The proposed discriminative-generative method helps to classify cancer cells: the discriminative-generative formulation can reveal patterns in the data that are relevant for categorising them, including small changes that other approaches may miss. This information can be used to improve detection and therapy. Because it learns the characteristics of cancer cells autonomously from data, the proposed approach can also classify them faster.

The feature vector space produced by the generative embedding process is fed into a kernel-based classifier, such as the nearest neighbour classifier or the support vector machine classifier. This is done using information-theoretic kernels,21,22 recently developed as similarity measures for the feature sets of generative and discriminative models, instead of conventional kernels. Such kernels exploit the probabilistic nature of generative embeddings and have been shown to improve classification results. This is supported by the experimental findings reported in the following sections.

Kernel Progression: Linear and Non-Linear

Kernels used for cancer classification can progress either linearly or nonlinearly. A linear kernel is the inner product of two feature vectors and therefore operates in the original feature space; linear methods such as principal component analysis and logistic regression rely on this kind of similarity. Nonlinear kernels, such as the RBF kernel commonly used with SVMs, implicitly map the data into a higher-dimensional feature space in which the data points are more likely to be linearly separable, which benefits complex datasets that are not linearly separable. In this work, classification improved substantially when nonlinear kernels were used instead of linear kernels.

More specifically, several information-theoretic kernels (ITKs) can be defined given two posterior distributions, P1 = P(i,j) and P2 = P(k,l), from two locations (i,j) and (k,l) in the image, respectively. Versions of these kernels suitable for un-normalised measures have also been described.21–23 Consider two un-normalised probabilistic measures µ1 and µ2 such that µ1 = w1 p1 and µ2 = w2 p2, where p1 and p2 are their normalised counterparts and w1 and w2 are arbitrary positive real weights. The weighted probabilistic kernel is described using the maximum entropy content of the pixel. The method establishes a kernel space between the two identified locations as the composition of the posterior embedding function with the kernel, which progresses either linearly or non-linearly to cover the area of the tumour or other abnormality. This paper presents the analysis for both cases, that is, with the kernel of the proposed method progressing linearly and non-linearly.
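The recoverable text does not give the kernel formula, so the sketch below assumes one member of the nonextensive information-theoretic kernel family on un-normalised measures, the weighted Jensen-Tsallis q-kernel of Martins et al. With masses w1 and w2, normalised counterparts p1 and p2 and mixture weights π = (w1, w2) / (w1 + w2), it is k_q(µ1, µ2) = [S_q(π) - T_q(p1, p2)] (w1 + w2)^q, where S_q is the Tsallis entropy and T_q(p1, p2) = S_q(π1 p1 + π2 p2) - π1^q S_q(p1) - π2^q S_q(p2). At q = 1 the Tsallis entropy reduces to the Shannon entropy, which is the non-extensive generalisation referred to earlier. Function names are hypothetical and this is not the authors' code.

```python
import numpy as np


def tsallis_entropy(p, q):
    """Tsallis q-entropy S_q(p); reduces to Shannon entropy (in nats) at q = 1."""
    p = np.asarray(p, dtype=float)
    nz = p[p > 0]  # convention: zero-probability entries contribute nothing
    if np.isclose(q, 1.0):
        return float(-np.sum(nz * np.log(nz)))
    return float((1.0 - np.sum(nz ** q)) / (q - 1.0))


def jensen_tsallis_kernel(mu1, mu2, q=1.0):
    """Weighted Jensen-Tsallis q-kernel between two un-normalised measures (assumed form)."""
    mu1 = np.asarray(mu1, dtype=float)
    mu2 = np.asarray(mu2, dtype=float)
    w1, w2 = mu1.sum(), mu2.sum()                # masses (weights) of the measures
    p1, p2 = mu1 / w1, mu2 / w2                  # normalised counterparts
    pi1, pi2 = w1 / (w1 + w2), w2 / (w1 + w2)    # mixture weights
    # Jensen-Tsallis q-difference T_q(p1, p2)
    t_q = (tsallis_entropy(pi1 * p1 + pi2 * p2, q)
           - pi1 ** q * tsallis_entropy(p1, q)
           - pi2 ** q * tsallis_entropy(p2, q))
    return (tsallis_entropy([pi1, pi2], q) - t_q) * (w1 + w2) ** q


def gram_matrix(measures, q=1.0):
    """Pairwise kernel matrix over a list of (possibly un-normalised) measures."""
    n = len(measures)
    return np.array([[jensen_tsallis_kernel(measures[i], measures[j], q)
                      for j in range(n)] for i in range(n)])


# Toy posterior embeddings (hypothetical) and an un-normalised measure 3 * r.
p = np.array([0.7, 0.2, 0.1])
r = np.array([0.1, 0.3, 0.6])
k_pp = jensen_tsallis_kernel(p, p, q=1.0)   # equals 2 ln 2 for identical distributions
k_pr = jensen_tsallis_kernel(p, r, q=1.0)
K = gram_matrix([p, r, 3.0 * r], q=1.5)
print(round(k_pp, 4), round(k_pr, 4))
print(np.round(K, 4))
```

For normalised inputs and q = 1 the kernel is 2(ln 2 - JS(p1, p2)), where JS is the Jensen-Shannon divergence, so identical distributions receive the largest similarity.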

The proposed embedding has non-negative components, so this kernel is well defined, as follows from the posterior probability embedding function described above for the proposed embedding process. Once the kernel is specified, SVM learning is applied. A crucial requirement for using a kernel in SVM learning is positive definiteness, which is satisfied in the proposed model. In addition, nearest neighbour (NN) classifiers were used to evaluate the suitability of the resulting kernels and to show the impact of the proposed model on X-ray image analysis.
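One simple way to use a precomputed kernel in a nearest neighbour classifier is to convert it into the distance it induces in feature space, d(x, y)^2 = k(x, x) + k(y, y) - 2k(x, y), and assign each test item the label of its closest training item. The sketch below assumes this kernel-induced 1-NN rule (the paper does not state how its NN classifier uses the kernel) and, for brevity, plugs in a linear kernel on hypothetical embedding vectors; any positive definite kernel could be substituted. For the SVM, a precomputed Gram matrix of the same kind can be passed to a library such as scikit-learn via `SVC(kernel="precomputed")`.

```python
import numpy as np


def kernel_nn_predict(K_test_train, k_test_diag, k_train_diag, y_train):
    """1-nearest-neighbour labels using the kernel-induced squared distance
    d(x, y)^2 = k(x, x) + k(y, y) - 2 k(x, y)."""
    d2 = k_test_diag[:, None] + k_train_diag[None, :] - 2.0 * K_test_train
    return y_train[np.argmin(d2, axis=1)]


def linear_kernel(A, B):
    """Placeholder kernel; swap in any positive definite kernel."""
    return A @ B.T


# Hypothetical posterior embeddings: class 0 = normal, class 1 = abnormal.
E_train = np.array([
    [0.8, 0.1, 0.1],
    [0.7, 0.2, 0.1],
    [0.1, 0.1, 0.8],
    [0.2, 0.1, 0.7],
])
y_train = np.array([0, 0, 1, 1])
E_test = np.array([
    [0.75, 0.15, 0.10],
    [0.15, 0.10, 0.75],
])

pred = kernel_nn_predict(
    linear_kernel(E_test, E_train),
    np.diag(linear_kernel(E_test, E_test)),
    np.diag(linear_kernel(E_train, E_train)),
    y_train,
)
print(pred)
```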

Results and Discussion

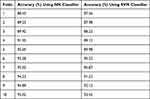

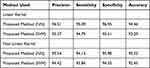

A portion of the data from the Kaggle online database [https://www.kaggle.com/datasets/tolgadincer/labeled-chest-xray-images] was used for the classification experiments. A subset of images was selected while maintaining the cancerous/benign ratio. Images of 256×256 pixels were extracted from the labelled X-ray images. All tests were performed on this collection of 214 images, which was divided into 10 folds. For each fold, the proposed PrCA model was trained on the training data and applied to the test data. The number of images was determined by cross-validation on the training set: within the nine training folds, 9-fold cross-validation was used to estimate the optimal number of images. The reported accuracy values are averages over the 10 folds, expressed as percentages. The accuracy for the 10-fold cross-validation is presented in Table 1, and Table 2 summarises the training, testing and validation set accuracies obtained with the proposed method. The PrCA model is trained in an unsupervised manner, meaning that the class labels are ignored when it is learned. These results demonstrate that nonlinear kernels outperform linear kernels in the proposed approach. Figure 2 shows region identification based on generative embedding using the proposed method. Figure 3 shows qualitative results for the posterior distribution embedding E(I) in a selected region: (a) linear kernel progression in P1, (b) linear kernel progression in P2, (c) non-linear kernel progression in P1 and (d) non-linear kernel progression in P2. Table 3 presents the performance metrics computed for the proposed method. The benefit of the kernels was observed with the proposed generative technique, even though NN was a poor option for this set of experiments (NN accuracy on the dataset was 95.02%).
This work used the similarities computed by the kernels in the NN and SVM classifiers and achieved accuracies of 95.02% and 92.45%, respectively. Figure 4 shows region identification (in yellow) for the nearest neighbour (NN) classifier in the proposed method with (a) linear kernel progression and (b) non-linear kernel progression. Figure 5 shows the ROC curves for the (a) training, (b) test, (c) validation and (d) overall sets, and Figure 6 shows the confusion matrices for the same four sets.
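The evaluation protocol described above, 10 folds that preserve the class ratio with an inner 9-fold cross-validation for model selection, can be sketched as follows. The 107/107 class split is a hypothetical placeholder, since the exact class counts among the 214 images are not given here, and `stratified_kfold_indices` is an illustrative helper rather than part of the authors' pipeline. Interleaving the classes with a running offset keeps every fold at 21 or 22 images.

```python
import numpy as np


def stratified_kfold_indices(labels, n_folds=10, seed=0):
    """Split sample indices into n_folds folds that keep the class ratio."""
    rng = np.random.default_rng(seed)
    folds = [[] for _ in range(n_folds)]
    offset = 0  # running offset so fold sizes differ by at most one overall
    for cls in np.unique(labels):
        idx = rng.permutation(np.flatnonzero(labels == cls))
        for i, j in enumerate(idx):
            folds[(offset + i) % n_folds].append(int(j))
        offset += len(idx)
    return [np.array(sorted(f)) for f in folds]


labels = np.array([0] * 107 + [1] * 107)  # hypothetical class balance of the 214 images
folds = stratified_kfold_indices(labels, n_folds=10)
for k, test_idx in enumerate(folds):
    train_idx = np.concatenate([f for j, f in enumerate(folds) if j != k])
    # Here the PrCA model and classifier would be fitted on train_idx only, with an
    # inner 9-fold cross-validation over the nine training folds for model
    # selection, and accuracy would be measured on test_idx.
    assert len(np.intersect1d(train_idx, test_idx)) == 0
print([len(f) for f in folds])
```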

Table 1 Accuracy Metric During 10-Fold Cross Validation for Proposed Method

Table 2 Training, Testing and Validation Set Wise Accuracy Computation Using Proposed Method (Using Linear Kernel and NN Classifier)

Table 3 Performance Metrics Computation Using Proposed Method

Figure 2 Region identification (in red) based on generative embedding using the proposed method, where I(i,j) denotes a specific pixel.

Figure 4 Region identification (in yellow) for the nearest neighbour (NN) classifier in the proposed method (a) Linear Kernel Progression (b) Non-Linear Kernel Progression.

Figure 5 ROC Curves (a) Training (b) Test (c) Validation (d) Overall.

Figure 6 Confusion Matrix (a) Training (b) Test (c) Validation (d) Overall.

In a further experimental setting, the training data were divided into two groups by class, and a separate PrCA model was trained for each class. The final feature space embedding is obtained by fusing the embeddings from the two sub-models. Although the linear kernel in this setting gives more accurate results than with a single PrCA model, the information-theoretic kernels improve only slightly on this linear kernel. This is because, when the outputs of the two PrCA models are combined in a supervised manner, the number of features doubles. Table 4 summarises the comparison of the proposed method with existing methods.34–37 NN classification achieves higher accuracy than the SVM method.

Table 4 Comparison with Existing Methods

The conclusions drawn in this paper are based on an innovative method for analysing lung X-rays that combines generative learning, discriminative learning and principal component analysis (PCA). The proposed methodology was tested on several existing public datasets and achieved results considered to be state of the art. These findings are significant because they may lead to more accurate and efficient diagnosis of lung diseases. Lung X-ray imaging is frequently used to diagnose lung disorders, but manual interpretation of lung X-rays can be time-consuming and difficult, particularly for less experienced radiologists. The proposed approach can be used to build computer-aided diagnosis (CAD) systems that help radiologists identify and detect lung disorders more accurately and efficiently. Taken as a whole, the results support the conclusions of this work: the proposed method achieves state-of-the-art results on several public datasets and has the potential to improve both the accuracy and the efficiency of lung disease diagnosis.

Conclusion and Future Work

The proposed generative-discriminative classification technique addresses difficult classification problems. Combining kernel-based discriminative learning with generative techniques allows the model to make accurate predictions and generalise to new inputs. This hybrid strategy can tackle many complicated classification problems more efficiently and effectively than previous approaches. This paper has described a novel classification strategy that combines kernel-based discriminative learning with PrCA-based generative embeddings, and the methodology was applied to X-ray images to identify cancerous regions. As shown, combining the generative capabilities of PrCA with the discriminative power of kernels yields greater classification accuracy than earlier methods based on linear and nonlinear kernels with SVM and NN classifiers. The experimental results show that a nonlinear kernel with an NN classifier outperforms the other methods. Overall, the results provide persuasive evidence that the proposed approach is promising for the analysis of lung X-rays.

The proposed method involves training two generative models, one for normal lung X-rays and the other for abnormal lung X-rays. Training generative models can be computationally intensive, and this method must train both. In addition, the method uses PCA to reduce the dimensionality of the lung X-ray images, which can further increase computational costs. The approach may also not generalise to other types of medical imaging: it was developed and tested only on lung X-rays, and it is unclear how well it would transfer to other modalities such as CT and MRI. Although the proposed method has the potential to increase both the accuracy and the efficiency of lung disease diagnosis, further study is required to address these concerns.

Data Sharing Statement

The data used to support the findings of this study have been included in this article.

Ethical Approval

This study was conducted according to the relevant guidelines and regulations; however, institutional ethical approval was not required as the research did not encompass any human participants or biological specimens of human origin.

Acknowledgments

The authors are thankful to the Deanship of Scientific Research at Najran University for funding this work under the Distinguished Research Funding Program grant code (NU/DRP/MRC/12/30).

Funding

The authors are thankful to the Deanship of Scientific Research at Najran University for funding this work under the Distinguished Research Funding Program grant code (NU/DRP/MRC/12/30). For the purpose of open access, the author has applied a Creative Commons Attribution (CC BY) licence to any Author Accepted Manuscript version arising. The authors are also thankful for the University of Sheffield Institutional Open Access Fund.

Disclosure

The authors declare that there is no conflict of interest.

References

1. Finn C, Abbeel P, Levine S. Model-agnostic meta-learning for fast adaptation of deep networks, In:

2. Boser BE, Guyon IM, Vapnik VN. A training algorithm for optimal margin classifiers, In:

3. Guan Q, Huang Y, Zhong Z, Zheng Z, Zheng L, Yang Y. Thorax disease classification with attention guided convolutional neural network. Pattern Recognit Lett. 2019.

4. Hinton GE, Salakhutdinov RR. Reducing the dimensionality of data with neural networks. Science. 2006;313(5786):504–507. doi:10.1126/science.1127647

5. Goodfellow I, Pouget-Abadie J, Mirza M, et al. Generative adversarial nets. Adv Neural Inform Process Sys. 2014;2:2672–2680.

6. Hoffer E, Ailon N. Deep metric learning using triplet network. In: International Workshop on Similarity-Based Pattern Recognition. Springer; 2015:84–92.

7. de Hoop B, De Boo DW, Gietema HA, et al. Computer-aided detection of lung cancer on chest radiographs: effect on observer performance. Radiology. 2010;257(2):532–540. doi:10.1148/radiol.10092437

8. Szegedy C, Liu W, Jia Y, et al. Going deeper with convolutions. In: Proc. IEEE CVPR; 2015:1–9.

9. Sunnetci KM, Ulukaya S, Alkan A. Periodontal bone loss detection based on hybrid deep learning and machine learning models with a user-friendly application. Biomed Signal Process Control. 2022;77:103844. doi:10.1016/j.bspc.2022.103844

10. Zhang J, Xia Y, Xie Y, Fulham M, Feng D. Classification of medical images in the biomedical literature by jointly using deep and handcrafted visual features. IEEE J Biomed Health Inform. 2018;22(5):1521–1530. doi:10.1109/JBHI.2017.2775662

11. Zhang J, Xia Y, Cui H. Pulmonary nodule detection in medical images: a survey. Biomed Signal Process Control. 2018;43:138–147.

12. Zhang J, Xia Y, Cui H, Zhang Y. NODULe: combining constrained multi-scale log filters with densely dilated 3d deep convolutional neural network for pulmonary nodule detection. Neurocomputing. 2018;317:159–167. doi:10.1016/j.neucom.2018.08.022

13. Oquab M, Bottou L, Laptev I, Sivic J. Learning and transferring mid-level image representations using convolutional neural networks. In: Proc. CVPR; 2014:1717–1724.

14. Jaakkola T, Haussler D. Exploiting generative models in discriminative classifiers. Adv Neural Inform Process Sys. 1999;1:487–493.

15. Kononen J, Bubendorf L, Kallioniemi A, et al. Tissue microarrays for high-throughput molecular profiling of tumor specimens. Nat Med. 1998;4(7):844–847. doi:10.1038/nm0798-844

16. Lasserre J, Bishop C, Minka T. Principled hybrids of generative and discriminative models. In:

17. Ng A, Jordan M. On discriminative vs generative classifiers: a comparison of logistic regression and naive Bayes. In:

18. Perina A, Cristani M, Castellani U, Murino V, Jojic N. Free energy score space. Adv Neural Inform Process Sys. 2009;2:22.

19. Perina A, Cristani M, Castellani U, Murino V, Jojic N. A hybrid generative/discriminative classification framework based on free-energy terms. In:

20. Rubinstein YD, Hastie T. Discriminative vs informative learning. In:

21. Tsuda K, Kawanabe M, Rätsch G, Sonnenburg S, Müller KR. A new discriminative kernel from probabilistic models. Neural Computation. 2002;14(10):2397–2414. doi:10.1162/08997660260293274

22. Bosch A, Zisserman A, Muñoz X. Scene Classification via pLSA. Vol. 3954. Leonardis A, Bischof H, Pinz A, eds. Heidelberg: Springer; 2006:517–530. ECCV 2006. LNCS.

23. Hofmann T. Unsupervised learning by probabilistic latent semantic analysis. Machine Learn. 2001;42(1–2):177–196. doi:10.1023/A:1007617005950

24. Castellani U, Perina A, Murino V, Bellani M, Brambilla P. Brain morphometry by probabilistic latent semantic analysis. In:

25. Bicego M, Lovato P, Ferrarini A, Delledonne M. Biclustering of expression microarray data with topic models. In:

26. Bicego M, Lovato P, Oliboni B, Perina A. Expression microarray classification using topic models. In:

27. Martins A, Smith N, Xing E, Aguiar P, Figueiredo M. Nonextensive information theoretic kernels on measures. J Machine Learn Res. 2009;10:935–975.

28. Martins AFT, Bicego M, Murino V, Aguiar PMQ, Figueiredo MAT. Information Theoretical Kernels for Generative Embeddings Based on Hidden Markov Models. Vol. 6218. Hancock ER, Wilson RC, Windeatt T, Ulusoy I, Escolano F, eds. Heidelberg: Springer; 2010:463–472. SSPR&SPR 2010. LNCS.

29. Schüffler P, Ulaş A, Castellani U, Murino V. A multiple kernel learning algorithm for cell nucleus classification of renal cell carcinoma. In:

30. Song Y, Cai W, Zhou Y, Feng DD. Feature-based image patch approximation for lung tissue classification. IEEE Transact Med Imaging. 2013;32(4):797–808. doi:10.1109/TMI.2013.2241448

31. Song Y, Cai W, Huang H, et al. Large margin local estimate with applications to medical image classification. IEEE Transact Med Imaging. 2015;34(6):1362–1377. doi:10.1109/TMI.2015.2393954

32. Li Q, Cai W, Feng DD. Lung image patch classification with automatic feature learning. EMBC. 2013;2013:6079–6082. doi:10.1109/EMBC.2013.6610939

33. Gao M, Bagci U, Lu L, et al. Holistic classification of CT attenuation patterns for interstitial lung diseases via deep convolutional neural networks. In: Workshop on Deep Learning in Medical Image Analysis; 2015.

34. Schlegl T, Waldstein SM, Vogl W-D, Schmidt-Erfurth U, Langs G. Predicting semantic descriptions from medical images with convolutional neural networks. IPMI. 2015;4:437–448.

35. Lo S-CB, Chan H-P, Lin J-S, Li H, Freedman MT, Mun SK. Artificial convolution neural network for medical image pattern recognition. Neural Networks. 1995;8(7–8):1201–1214. doi:10.1016/0893-6080(95)00061-5

36. Lo S-CB, Lou S-LA, Lin J-S, Freedman MT, Chien MV, Mun SK. Artificial convolution neural network techniques and applications for lung nodule detection. IEEE Transact Med Imaging. 1995;14(4):711–718. doi:10.1109/42.476112

37. Shen W, Zhou M, Yang F, Yang C, Tian J. Multi-scale convolutional neural networks for lung nodule classification. In: IPMI; 2015:588–599.

38. Kumar D, Wong A, Clausi DA. Lung nodule classification using deep features in CT images. Computer Robot Vision. 2015:133–138.

39. Roth HR, Lu L, Seff A, et al. A new 2.5D representation for lymph node detection using random sets of deep convolutional neural network observations. In: MICCAI; 2014:520–527.

40. Kingma DP, Mohamed S, Jimenez Rezende D, Welling M. Semi-supervised learning with deep generative models. Adv Neural Inform Process Sys. 2014;3:27.

41. Kumar A, Sattigeri P, Fletcher T. Semi-supervised learning with GANs: manifold invariance with improved inference. Adv Neural Inform Process Sys. 2017;5:30.

42. Wu S, Deng G, Li J, Li R, Yu Z, Wong HS. Enhancing TripleGAN for semi-supervised conditional instance synthesis and classification. In:

43. Liu M, Li F, Yan H, et al. A multi-model deep convolutional neural network for automatic hippocampus segmentation and classification in Alzheimer’s disease. Neuroimage. 2020;208:116459. doi:10.1016/j.neuroimage.2019.116459

© 2023 The Author(s). This work is published and licensed by Dove Medical Press Limited. The full terms of this license are available at https://www.dovepress.com/terms.php and incorporate the Creative Commons Attribution - Non Commercial (unported, v3.0) License.

By accessing the work you hereby accept the Terms. Non-commercial uses of the work are permitted without any further permission from Dove Medical Press Limited, provided the work is properly attributed. For permission for commercial use of this work, please see paragraphs 4.2 and 5 of our Terms.