Advances in Medical Education and Practice, Volume 8

Evaluating medical students’ proficiency with a handheld ophthalmoscope: a pilot study

Authors Gilmour G, McKivigan J

Received 10 August 2016

Accepted for publication 26 September 2016

Published 28 December 2016 Volume 2017:8 Pages 33–36

DOI https://doi.org/10.2147/AMEP.S119440

Checked for plagiarism Yes

Review by Single anonymous peer review

Editor who approved publication: Dr Md Anwarul Azim Majumder

Gregory Gilmour,1 James McKivigan2

1Physical Medicine and Rehabilitation, Michigan State University, Lansing, MI, 2School of Physical Therapy, Touro University, Henderson, NV, USA

Introduction: Historically, testing medical students’ skills using a handheld ophthalmoscope has been difficult to do objectively. Many programs train students using plastic models of the eye, which are low-fidelity simulators of a real human eye. Given the differences between the various models and actual patients, it is difficult to be sure that actual proficiency is attained. The purpose of this article is to introduce a method of testing in which a medical student must match a patient with his/her fundus photo. This allows objective evaluation and develops skills on real patients, which are more likely to transfer directly into clinical practice.

Presentation of case: Fundus photos from standardized patients (SPs) were obtained using a retinal camera and placed into a grid using proprietary software. Medical students were then asked to examine an SP and attempt to match the patient to his/her fundus photo in the grid.

Results: Of the 33 medical students tested, only 10 were able to match the SP’s eye to the correct photo in the grid. The average time to a correct selection was 175 seconds, and the successful students rated their confidence level at 27.5% (average). Incorrect selections took less time, averaging 118 seconds, yet yielded a higher student-reported confidence level of 34.8% (average). The only significant predictor of success (p<0.05) was the student’s age (p=0.02).

Conclusion: The results suggest an apparent gap in the ophthalmoscopy training of the students tested. It may also be of concern that students who selected the incorrect photo were more confident in their selections than students who chose the correct photo. More training may be necessary to close this gap, and future studies should attempt to establish continuing protocols in multiple centers.

Keywords: standardized patient, software based, physical exam, computer-based testing, education

A Letter to the Editor has been received and published for this article.

Introduction

Ophthalmoscopy is a skill that medical students typically are introduced to during their primary science education and then expected to master during their clinical years. Almost universally, students agree that they lack confidence and struggle with this difficult physical examination skill, and instructors have used countless teaching methods and models to attempt to bridge this perceived training gap.1–4 Models ranging from modified plastic containers to custom-made, anatomically correct plastic eyes have been tried with varying degrees of success.5,6 Distinct differences between a plastic model and an actual human patient, such as differences in dynamic pupil size, eye movement, and patient cooperation, make it difficult to be sure that skills learned on a model will translate directly to the effective examination of a patient in the clinic. Additionally, when learning ophthalmoscopy, most students have preferred practicing on humans instead of simulators.7 Other authors have incorporated fundus photographs in a kind of matching game, both live and over the internet for training.8–10 One paper even concluded that switching from conventional ophthalmoscopes to PanOptic scopes would increase proficiency but could, at a minimum, double the cost of the instrument.11 Despite all of these efforts, the level of competence in the use of the ophthalmoscope remains undefined.12 In this article, the authors used fundus photos in combination with a novel software package to establish a new method of testing that can be used as a real-time proficiency test of ophthalmoscopy skills as well as a tool that students can use to practice their skills without the assistance of an instructor.

Methods

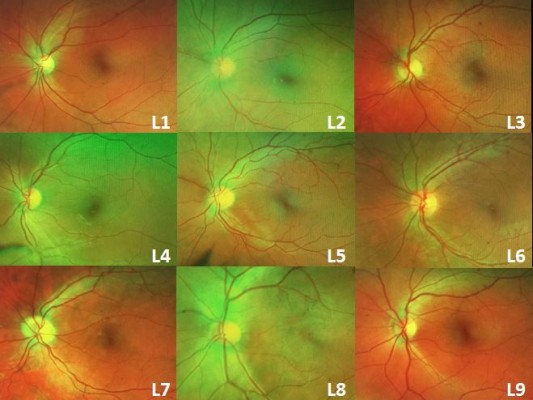

Healthy volunteers were recruited to be standardized patients (SPs). Sixteen SPs had undilated fundus photos of both eyes taken using a retinal camera (200Tx; Optos, Dunfermline, UK). The photos were sent off-site, where identifying information was stripped and the photos were randomized. The photos were then added to the prototype software developed for this study, which arranged them in a three-by-three grid of nine photos (Figure 1). An identical grid was produced as a laminated, full-color printed copy. Each photo was labeled with a random letter–number combination; the key was held off-site, where the examiner collecting the data could not access it. The software placed the corresponding SP’s photo in the grid once and filled the remaining spaces with photos of other SPs. No pathology was identified in any of the volunteers.

Thirty-three medical students from Ross University School of Medicine in their third and fourth years were recruited using a facility email address list to examine an SP and attempt to select the patient’s fundus photo out of the photo grid. Before beginning, the students were asked to complete a basic questionnaire. They provided their age, gender, year of training (all students were either third- or fourth-year medical students), and whether or not they had taken a one-month elective course in ophthalmology. After making a selection, they were asked to rate their confidence in their choice. Time spent performing the exam was recorded, and the data were sent off-site for determination of the correct choices. The data from the survey and results are displayed in Table 1. Each student read a sheet of instructions before beginning, and an instructional script was read to them by the examiner before they started the exam. Only procedural instruction was given, and no attempt to educate or instruct on technique was made.

Students were allowed to examine either the left or the right eye, and the software and paper copy were adjusted to show pictures of the chosen eye. Students were allowed to use any method they chose for the examination (technique was not evaluated), give any instructions necessary to the SP, and take as much time as needed. Two identical handheld ophthalmoscopes (Welch Allyn, Skaneateles Falls, NY, USA) were provided, and the students were allowed to choose one and adjust it to their preference before the timer was started. Once the examination had begun, the students were allowed to refer to the computer screen or to the hard copy of the images. The software allowed the students to use the mouse and click on photos to “gray them out” to eliminate them from consideration; they could click again to restore them for reconsideration (Figure 2).
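The grid construction described above can be sketched in a few lines of Python. This is a minimal illustration only and not the prototype software used in the study, whose implementation is not described. The function names `random_labels` and `build_grid`, the `SP01`-style identifiers, and the label format (one letter followed by one digit) are assumptions made for the example.

```python
import random
import string


def random_labels(n, rng):
    """Draw n distinct letter-number labels such as 'K7' (format is assumed)."""
    pool = [f"{letter}{digit}" for letter in string.ascii_uppercase for digit in range(10)]
    return rng.sample(pool, n)


def build_grid(target_id, all_ids, rng, rows=3, cols=3):
    """Place the examined SP's photo once and fill the rest with other SPs' photos.

    Returns the grid as rows of (label, photo_id) pairs plus the label of the
    correct photo, which would be kept away from the examiner.
    """
    n_cells = rows * cols
    distractors = [pid for pid in all_ids if pid != target_id]
    if len(distractors) < n_cells - 1:
        raise ValueError("not enough other SP photos to fill the grid")

    # One copy of the target photo plus distinct photos from other SPs.
    photos = rng.sample(distractors, n_cells - 1) + [target_id]
    rng.shuffle(photos)

    cells = list(zip(random_labels(n_cells, rng), photos))
    grid = [cells[r * cols:(r + 1) * cols] for r in range(rows)]
    answer_label = next(label for label, pid in cells if pid == target_id)
    return grid, answer_label


if __name__ == "__main__":
    sp_ids = [f"SP{i:02d}" for i in range(1, 17)]  # 16 hypothetical SP identifiers
    grid, answer = build_grid("SP05", sp_ids, random.Random())
    for row in grid:
        print("  ".join(label for label, _ in row))
```

In a blinded setup like the one described, only the labels would be shown on screen and on the printed copy, while `answer_label` would be stored with the off-site key.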

Figure 1 A selection of nine randomized photos from the 16 standardized patients was laid out on a grid, and each photo was labeled with a random letter–number combination.

Table 1 Participants’ responses to survey questions. Note: Students who selected the correct photo are emphasized with bolded text.

Figure 2 Clicking on a photo could deselect it to remove it from consideration.

When the students had made their final selection, they verbally reported it to the examiner. Before beginning recruitment of volunteer SPs and medical student test subjects, approval for this study was obtained from the institutional review board of St. Joseph Mercy Oakland Hospital in Pontiac, MI. Written informed consent was obtained from all participants.

Results

After all students had completed the assessment, the results were compiled. Of the 33 students who participated, 10 (30%) correctly selected their SP’s photo out of the grid; they completed the exam in an average time of 175 seconds with an average confidence rating of 27.5%. The remaining 23 students selected the incorrect photo in an average time of 118 seconds with an average confidence rating of 34.8%.
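For context, the observed success rate can be compared with the rate expected from guessing. The sketch below is not part of the authors' analysis. It assumes that a student guessing blindly would pick each of the nine photos with equal probability (1/9) and that the students' attempts are independent, and it uses only the counts reported above.

```python
from math import comb


def binomial_tail(k, n, p):
    """Probability of at least k successes in n independent trials with success rate p."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


n_students = 33   # students tested (reported)
n_correct = 10    # correct selections (reported)
chance = 1 / 9    # assumed guessing rate for a nine-photo grid

observed = n_correct / n_students
p_tail = binomial_tail(n_correct, n_students, chance)

print(f"observed success rate: {observed:.1%}")
print(f"assumed chance rate:   {chance:.1%}")
print(f"P(at least {n_correct} correct by guessing alone): {p_tail:.4f}")
```

Under these assumptions, the observed rate is roughly three times the guessing rate, although it remains low in absolute terms.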

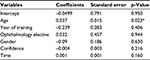

Multiple regression was performed to test for associations between each of the variables and making a correct selection, as shown in Table 2. Only the medical students’ age reached significance (p=0.023, below the 0.05 threshold).

Table 2 Multiple regression of variables against correct photo selection. Note: *Significant p-value (p<0.05).

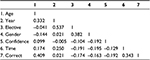

Additionally, a correlation matrix was assembled to look for associations between the variables. The most significant correlation was between the year of training and ophthalmology elective, which is not surprising since students further along in their training would be more likely to have taken an elective course in ophthalmology. Table 3 shows the correlation matrix.

Table 3 Correlation matrix of age, year of medical school, gender, confidence, time, and correct selection

Discussion

This paper describes a software-based method to evaluate objectively a student’s ability to visualize the fundus of a live human patient in real time without the assistance of mydriatic eye drops. The decision not to dilate the eyes was made with the consideration that a majority of medical students are likely to end up in a primary care setting where the eyes typically are not dilated for examination. The results suggest that there is a deficit in the proficiency of the students we tested. Even when the correct photo was selected, the students expressed a very low level of confidence in their selection. Perhaps even more concerning, the average confidence level of students who made incorrect choices was higher than that of students who selected correctly (34.8% vs. 27.5%).

Only the age of the students correlated significantly with their success in choosing the correct photo (p=0.02). This finding is likely to be a coincidental correlation as the student’s age does not have any effect on their training in a standardized medical program.

Limitations

There are several limitations to the methods used in this study. First, the generalizability of the results is questionable due to the limited sample size. A future multicenter trial would be useful. The potential cost of maintaining this training program could be high, as funding may be needed to photograph and compensate SPs. Although all the SPs used in this study were unpaid volunteers, it may be difficult to get volunteers on a larger scale. Some of the cost could be mitigated by having the students themselves act as the patients; this would also alleviate issues with scheduling the availability of SPs. Additionally, if students’ photos were used, they could employ the software as a tool to train using each other as SPs. Next, this method only demonstrates a student’s ability to visualize the fundus, and not to evaluate or identify pathology. The authors are confident that if students master the skill of visualizing the fundus, then it would be appropriate to train identification of pathology through photographs. Lastly, the use of an on-site proctor for collecting data for off-site interpretation added to the complexity of the examinations. If the software were made to randomize and interpret the data and report the results, perhaps students could use it for practice without any help or observation from a proctor.
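The self-scoring idea raised in the last limitation could look something like the following sketch. It is a hypothetical illustration rather than a description of the study software: the `Attempt` record, the `score_attempt` and `summarize` functions, and the example values are all assumptions, and the sample attempts are made up and are not study data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Attempt:
    selected_label: str
    confidence_pct: float
    elapsed_s: float
    correct: bool


def score_attempt(selected_label, answer_label, confidence_pct, elapsed_s):
    """Check a selection against the key and record confidence and time."""
    if not 0 <= confidence_pct <= 100:
        raise ValueError("confidence must be between 0 and 100")
    if elapsed_s < 0:
        raise ValueError("elapsed time cannot be negative")
    return Attempt(selected_label, confidence_pct, elapsed_s,
                   selected_label == answer_label)


def summarize(attempts):
    """Mean time and mean confidence, split by correct and incorrect attempts."""
    summary = {}
    for outcome in (True, False):
        group = [a for a in attempts if a.correct == outcome]
        if group:
            summary[outcome] = {
                "n": len(group),
                "mean_time_s": sum(a.elapsed_s for a in group) / len(group),
                "mean_confidence_pct": sum(a.confidence_pct for a in group) / len(group),
            }
    return summary


if __name__ == "__main__":
    # Made-up practice attempts for illustration only.
    attempts = [
        score_attempt("K7", "K7", confidence_pct=30, elapsed_s=160),
        score_attempt("B2", "K7", confidence_pct=40, elapsed_s=110),
    ]
    print(summarize(attempts))
```

Reporting time and confidence separately for correct and incorrect attempts mirrors the comparison made in the Results and would let a student track the same measures during unproctored practice.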

Acknowledgment

The authors would like to thank W Scott Wilkinson and his staff for the generous donation of their time and use of their fundus camera.

Disclosure

The authors report no conflicts of interest in this work.

References

1. Gupta RR, Lam WC. Medical students’ self-confidence in performing direct ophthalmoscopy in clinical training. Can J Ophthalmol. 2006;41(2):169–174.

2. Mackay DD, Garza PS, Bruce BB, Newman NJ, Biousse V. The demise of direct ophthalmoscopy: a modern clinical challenge. Neurol Clin Pract. 2015;5(2):150–157.

3. Roberts E, Morgan R, King D, Clerkin L. Fundoscopy: a forgotten art. Postgrad Med J. 1999;75(883):282–284.

4. Schulz C, Hodgkins P. Factors associated with confidence in fundoscopy. Clin Teach. 2014;11(6):431–435.

5. Hoeg TB, Sheth BP, Bragg DS, Kivlin JD. Evaluation of a tool to teach medical students direct ophthalmoscopy. WMJ. 2009;108(1):24–26.

6. Swanson S, Ku T, Chou C. Assessment of direct ophthalmoscopy teaching using plastic canisters. Med Educ. 2011;45:508–535.

7. Kelly LP, Garza PS, Bruce BB, Graubart EB, Newman NJ, Biousse V. Teaching ophthalmoscopy to medical students (the TOTeMs study). Am J Ophthalmol. 2013;156(5):1056–1061.

8. Asman P, Linden C. Internet-based assessment of medical students’ ophthalmoscopy skills. Acta Ophthalmol. 2010;88(8):854–857.

9. Krohn J, Kjersem B, Hovding G. Matching fundus photographs of classmates. An informal competition to promote learning and practice of direct ophthalmoscopy among medical students. J Vis Commun Med. 2014;37(1–2):13–18.

10. Milani BY, Majdi M, Green W, et al. The use of peer optic nerve photographs for teaching direct ophthalmoscopy. Ophthalmology. 2013;120(4):761–765.

11. McComiskie JE, Greer RM, Gole GA. Panoptic versus conventional ophthalmoscope. Clin Exp Ophthalmol. 2004;32(2):238–242.

12. Benbassat J, Polak BC, Javitt JC. Objectives of teaching direct ophthalmoscopy to medical students. Acta Ophthalmol. 2012;90(6):503–507.

© 2016 The Author(s). This work is published and licensed by Dove Medical Press Limited. The full terms of this license are available at https://www.dovepress.com/terms.php and incorporate the Creative Commons Attribution - Non Commercial (unported, v3.0) License.

By accessing the work you hereby accept the Terms. Non-commercial uses of the work are permitted without any further permission from Dove Medical Press Limited, provided the work is properly attributed. For permission for commercial use of this work, please see paragraphs 4.2 and 5 of our Terms.
