Advances in Medical Education and Practice, Volume 8

Effective learning environments – the process of creating and maintaining an online continuing education tool

Authors Davies S, Lorello GR, Downey K, Friedman Z

Received 7 March 2017

Accepted for publication 10 May 2017

Published 30 June 2017 Volume 2017:8 Pages 447–452

DOI https://doi.org/10.2147/AMEP.S136348

Checked for plagiarism Yes

Review by Single anonymous peer review

Peer reviewer comments 3

Editor who approved publication: Dr Md Anwarul Azim Majumder

Sharon Davies,1 Gianni Roberto Lorello,2 Kristi Downey,1 Zeev Friedman1

1Department of Anesthesia, Sinai Health System, University of Toronto, Toronto, ON, Canada; 2Department of Anesthesia, Sunnybrook Health Sciences Centre, University of Toronto, Toronto, ON, Canada

Abstract: Continuing medical education (CME) is an indispensable part of maintaining physicians’ competency. Since attending conferences requires clinical absenteeism and is not universally available, online learning has become popular. The purpose of this study is to conduct a retrospective analysis examining the creation process of an anesthesia website for adherence to the published guidelines and, in turn, provide an illustration of developing accredited online CME. Using Kern’s guide to curriculum development, our website analysis confirmed each of the six steps was met. As well, the technical design features are consistent with the published literature on efficient online educational courses. Analysis of the database from 3937 modules and 1628 site evaluations reveals the site is being used extensively and is effective as demonstrated by the participants’ examination results, content evaluations and reports of improvements in patient management. Utilizing technology to enable distant learning has become a priority for many educators. When creating accredited online CME programs, course developers should understand the educational principles and technical design characteristics that foster effective online programs. This study provides an illustration of incorporating these features. It also demonstrates significant participation in online CME by anesthesiologists and highlights the need for more accredited programs.

Keywords: anesthesia, online, continuing medical education

Introduction

Since physicians must engage in lifelong learning in order to maintain competency and fulfill Maintenance of Certification requirements, continuing medical education (CME) is an indispensable part of medical practice. Moreover, it is likely that the requirements for CME as part of physician accreditation will become even more stringent.1

CME conferences are costly, require clinical absenteeism and have rigid schedules. In contrast, e-learning is an efficient medium for gaining knowledge2,3 and has been shown to influence physicians’ decision-making skills,4,5 improve quality of care6 and change both knowledge and behaviors in clinical practice.7–9 However, despite the increasing popularity of web-based educational activities in health care, few accredited online anesthesia CME programs have been created.

The purpose of this article is to provide a narrative report of the University of Toronto, Department of Anesthesia’s CME website creation and analyze information contained in the website’s database. The intent is to provide physicians interested in developing online CME programs with an illustration of incorporating important educational principles and technical design features, information typically found outside the anesthesia literature.10,11

In 2003 when the website was initially developed, there was little experience with online CME in anesthesia; hence, the site was created on an intuitive “learn as you go” basis. This is obviously suboptimal. In addition, Kanter12 recently challenged authors of educational innovations to go beyond descriptions of “what was done” and “did it work” to include a reflective, analytical and scholarly treatment of the innovation. Curran and Fleet10 also stressed the importance of understanding design characteristics that foster effective online programs. Therefore, prior to undertaking this report, a literature search was conducted to determine if the design was consistent with current literature regarding the development of effective e-learning programs.

Site design

Recognizing the absence of e-learning programs, a needs assessment survey was conducted to determine if practicing anesthesiologists were interested in online CME.13 Since the results revealed sufficient interest, combined with the expectation that the demand would continue to increase, a CME website was developed. The intent was to offer accredited CME for all anesthesia providers, particularly anesthesiologists with limited access to educational events. A crucial part of the design was to ensure the site met the criteria necessary for certification by the Royal College of Physicians and Surgeons of Canada (RCPSC). Following completion of the site development and prior to online publication, the program received institutional approval through the University of Toronto, Faculty of Medicine, Department of Professional Development as well as accreditation for the RCPSC Maintenance of Certification Program.

The final design was module-based and included interactive clinical cases, a literature review, a discussion board for participants’ questions with the authors’ answers and a course evaluation. Video instruction was utilized in one module to demonstrate a technical procedure. Each module included a postmodule examination testing the participants’ understanding of the material. Consistent with contemporary educational strategies encouraging learners to establish their personal learning goals14,15 and ensuring validity and integrity of assessment,16–18 pretests were recently added, only questions on concepts contained within the modules were included, and all examinations were peer reviewed prior to the tool going live.

Throughout the program development, the module content was drafted by expert anesthesiologists, reviewed and adapted for online publication by the author (SD) and peer reviewed by three additional anesthesiologists to document any issues with the module. Comments and feedback regarding content or technical difficulties were addressed. The modules were then submitted to the University of Toronto, Department of Continuing Education for approval.

The website presently contains 17 modules that can be accessed under Online Education Resources (http://www.anesthesia.webservices.utoronto.ca/edu/cme/courses.htm). Each module is accredited for RCPSC Section 3 Self-assessment credits as well as Category 1 of the Physician’s Recognition Award of the American Medical Association and the College of Family Physicians Canada Mainpro credits.

Database

Since inception, demographic information regarding the participants’ profession, geographic location and type of practice, as well as module evaluations, assessments of the educational experience and examination scores were collected. For this review, data collected during a representative 5.5-year period beginning in 2007 were subsequently organized and entered into a Microsoft Access (Redmond, WA, USA) database for analysis.

Literature search

Due to the paucity of online anesthesia education resources at the time, the site was initially developed on an intuitive and experimental basis. Therefore, prior to compiling this report, a literature search was conducted in order to measure the site against current educational principles. Working independently, the authors performed a selective review of online CME in anesthesiology and searched PubMed, Scopus, PsycINFO and Education Resources Information Center from inception to 2016. The strategy consisted of searching for topic (online continuing education, online CME, online continuing professional development), specialty (anesthesia) and modality (e-learning). We used the Boolean operators AND to link topics and OR to link the different medical subject headings for topic.
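The search strategy described above can be sketched in code. The exact search strings are not reported, so the following is only an illustration under stated assumptions: synonyms within a concept are joined with OR, the three concepts (topic, specialty, modality) are joined with AND, and database-specific syntax such as field tags or subject-heading qualifiers is omitted. The function name `build_query` is hypothetical.

```python
def build_query(concept_groups):
    """Join terms within each group with OR, then join the groups with AND.

    Assumption: this mirrors the general AND/OR logic described in the text,
    not the exact strings submitted to any database.
    """
    clauses = []
    for terms in concept_groups:
        quoted = [f'"{term}"' for term in terms]
        clauses.append("(" + " OR ".join(quoted) + ")")
    return " AND ".join(clauses)


# Search terms taken from the text; the grouping is an assumption.
topic = [
    "online continuing education",
    "online CME",
    "online continuing professional development",
]
specialty = ["anesthesia"]
modality = ["e-learning"]

query = build_query([topic, specialty, modality])
print(query)
```

A string built this way would still need to be adapted to each database's own syntax before use.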

Analysis of the curriculum design

Kern et al19 constructed a six-step guide to curriculum design for medical education programs. This guide provides a general conceptual framework that can be applied to web-based CME program development. Using Kern’s guide, we analyzed the creation process of the website to determine adherence to the guidelines and to identify any mistakes.

Kern’s first step entails the identification of a problem or health care need and includes a global needs assessment. Before developing the website, an interest in online CME was confirmed through a provincial survey of practicing anesthesiologists in Ontario and the lack of available online anesthesia programs was substantiated through review of the literature.13

Kern’s13 second step is a targeted needs assessment, which was conducted on a number of fronts. Initially, survey respondents were asked to suggest topics of interest. As well, needs assessments from local anesthesiology conferences and planning committee suggestions were utilized. Input from such advisory committees is considered a key source for identifying both perceived and unperceived learning needs for course development.20 Finally, suggestions regarding additional topics of interest are collected through the website evaluations.

Step three of Kern’s design includes defining goals and objectives to assist authors in developing the content and learning methods. Both the goals and objectives were developed by the module authors in concert with the site developer. The objectives, listed at the beginning of each module, also help focus the participant on the learning material.

Step four entails the choice of the educational strategy such as a lecture, case discussion, live demonstration or simulation. For this program, an interactive online format was chosen, which included case-based discussions and a topical review of the current literature. Practicality is also a consideration; the website is readily accessible, convenient and can be reviewed as many times as necessary.

The fifth step involves program implementation including political support for and administration of the curriculum, procurement of resources, identification of barriers to implementation and refinement of the curriculum over successive cycles. These may be some of the most challenging steps of initiating and maintaining the program. Political support for this program is through the University of Toronto, Department of Anesthesia, which provides a webmaster for technical maintenance, module installation, website monitoring and data collection.

The financial aspects of maintaining a CME program merit some discussion. The major barriers to implementation included the costs associated with accreditation and technical implementation, attracting authors for the modules, particularly at a time when academic credit was not established for such activities, and disseminating the program’s existence. Support from the pharmaceutical industry in the form of unrestricted educational grants covered the costs of implementation and technical upgrades, honorariums for the authors (excluding the site developer) and accreditation costs. A fee for accessing the modules is another option. This, however, may come at the risk of deterring potential participants, particularly those from underdeveloped countries.

Academic recognition is given to the authors in the Department of Anesthesia’s Annual Report, and the module evaluations and participant numbers are available to each author for inclusion in their educational dossier. Dissemination of the program’s existence has been through advertisement at local anesthesia conferences, listings in the Canadian Medical Association Journal and on the University of Toronto CME site. Curriculum refinement occurs regularly in keeping with participants’ suggestions regarding future topics, and module authors are contacted to review and update content as necessary.

Kern’s sixth and final step is the evaluation of the overall program and the performance of the learners. The online evaluation includes questions regarding the consistency of the content with the objectives, academic interest (appropriate level of content complexity), lack of commercial bias and applicability to the participant’s clinical practice. Free text comments to enable improvements in the course as well as suggestions for future topics (needs assessment) are encouraged.

Regarding individual performance, all participants complete a postmodule examination to obtain CME credit.15,21 In combination with the pretests, these tests provide the learners with the opportunity to compare and assess their knowledge acquisition and integrate the acquired information.14 The questions emphasize the module’s key concepts and depend on the author’s professional judgment.15,17 Both the module evaluations and the test results are submitted electronically and maintained in a database. These results are reported to the site developer, but the identity of the individual is withheld, thereby maintaining confidentiality. Any errors or ambiguities within the content or examinations are addressed and corrected accordingly. In addition, monitoring the exam results allows changes to be made if consistently poor grades are achieved.

Analysis of the technical design

In addition to curriculum development, e-learning programs have specific design requirements to improve their educational effectiveness. In their review, Cook and Dupras22 suggested that online programs need to be more than “putting together a colorful webpage” and encouraged developers to employ the principles of effective online learning. Similarly, Grunwald and Corsbie-Massay14 recognized a lack of guidelines and quantitative scales to assess online medical education programs.

The technical design of an e-learning tool should be cognitively efficient and not place excessive demands on the participant’s attention.14 Clark24 and Sweller et al23 noted a common misconception is that very active visual and auditory designs add interest and increase the learner’s motivation and knowledge acquisition. Sweller et al23 suggested that busy screens may actually interfere with learning; therefore, simple screen designs are the most successful. Mayer25 suggested audio presentation is a more effective learning strategy. However, others found that medical students prefer reading text and retain 20% of what they hear as opposed to 40% of what they read.14 Adding interactivity to both modalities increases retention to 75%.14 Although combining animation with narration is useful, presenting identical written text and narration simultaneously should be avoided.26 The website contains written text divided into manageable portions and is consistent with these concepts. The video demonstration contains narration, while the written text is presented separately.

Physical and mental interactivity are important aspects of e-learning. However, both over- and underutilization can lead to a less-satisfactory learning experience.22 Since all aspects of the site’s interactivity are easily navigated and the module template is constant, wasted hours in learning to navigate the system are avoided. Grunwald and Corsbie-Massay14 see this as a necessary characteristic to minimize the amount of memory required to interact with the learning environment. In addition, some educators suggest that participants be given some autonomy to navigate the system, contingent on their expertise.27 Since the module content is broken up into separate sections, it can be easily navigated according to the participant’s expertise and learning needs.

As Kern’s model suggested,19 program evaluations are important; however, the number of questions in the module evaluations was kept to a minimum with the intent of extracting important information while minimizing the burden to participants. Consistent with this approach, Booth et al21 recommend that online course developers reduce any impediments, such as compulsory evaluations, that may interfere with smooth course completion, particularly for non-fulltime learners. Since the purpose of the website is educational, evaluations are encouraged but not mandatory. To date, the response rate is over 40%.

An important aspect of online education programs is to maintain ongoing participation. Good design characteristics such as functionality, interactivity and knowledge validation will encourage learners to return to the site. Cook and Dupras22 suggest that placing a link on a frequently used homepage is beneficial. A direct link to the website is on the Department of Anesthesia’s homepage. In addition, interested participants are advised when a new module is added.

Database analysis

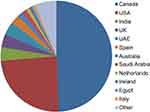

Data collection and analysis were performed purely for quality improvement purposes and in a completely anonymized fashion, and therefore, research ethics approval was not required. We collected information on 3937 completed modules; 928 participants completed one module and 631 completed more than one. Participants’ demographics are described in Table 1. Figure 1 shows the practice location of the participants. There are 17 different subject modules on the website. The average score on the test taken by participants after finishing a module was 84.

Table 1 Participant demographics. Abbreviation: n/a, not available.

Figure 1 Practice location. Note: Other represents <10 participants from 41 countries.

A total of 1628 site evaluations were completed. Overall, the evaluations were extremely positive, further supported by the fact that 68% of the participants returned to complete additional modules. Over 90% of the participants completed the module in 2 hours or less. Site evaluations indicate that objectives were met in 97% (1079/1102) of cases (of note, this question was added in 2011). Participants were also asked: If this module is applicable to your clinical practice, on a scale of 1–5 (1=not at all, 5=extremely), do you feel that the knowledge you have gained will improve your patient management? The modules were described as extremely applicable by 765 (47.0%), very applicable by 555 (34.1%), somewhat applicable by 207 (12.7%), not very applicable by 34 (2.1%) and not at all applicable by 21 (1.3%) participants. In 46 of the evaluations, the question itself was not applicable (eg retired).
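The percentages above can be checked directly from the reported counts. The short sketch below recomputes each share of the 1628 evaluations; the variable names are illustrative only. It also divides the 1628 evaluations by the 3937 completed modules reported in the database analysis, on the assumption (not stated explicitly in the text) that the response rate is calculated per completed module.

```python
# Counts reported in the text for the patient-management question.
counts = {
    "extremely applicable": 765,
    "very applicable": 555,
    "somewhat applicable": 207,
    "not very applicable": 34,
    "not at all applicable": 21,
    "question not applicable": 46,
}

total_evaluations = sum(counts.values())  # should equal the 1628 reported
completed_modules = 3937                  # reported in the database analysis

# Share of all evaluations for each response, rounded to one decimal place.
percentages = {
    label: round(100 * n / total_evaluations, 1) for label, n in counts.items()
}

# Assumption: response rate = evaluations / completed modules.
response_rate = round(100 * total_evaluations / completed_modules, 1)

for label, pct in percentages.items():
    print(f"{label}: {pct}%")
print(f"response rate: {response_rate}%")
```

Under that assumption, the result of about 41.4% appears consistent with the response rate of over 40% stated in the analysis of the technical design.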

Discussion

Our analysis revealed that the creative process, current format and technical design of the program are consistent with published data on medical education curriculum development and efficient online courses. The website is being used extensively, as demonstrated by a continuous increase in participation since inception, which highlights the need for more online CME programs. The evaluations are extremely positive and 68% of the participants returned to complete additional modules. However, although previous research has shown self-reporting to be a valid method of assessing changes in patient care, particularly with regard to online education activities,7,9 we believe the self-reported changes in clinical practice should be audited. Further research is needed to determine if there is translation from the virtual world to clinical practice.

Since inception, the website has been a work in progress and design revisions have been made. For example, the format of the posttest was changed to provide immediate feedback regarding the correct answer, along with an explanation to reinforce the concept, once the answer is submitted. The most recent upgrade was the addition of the pretest, a feature we recommend be included in future course developments. Although the posttest provides an indication that a level of knowledge was achieved, whether the knowledge was present prior to taking the course is unknown. The pretest allows the course developer and the participants to assess knowledge acquisition through comparison of pre- and postmodule scores. It consists of the same questions as the posttest, but without feedback regarding the correct answer. One could also consider utilizing different formats for the pre- and posttests, such as requiring participants to solve problems on the posttest, utilizing the knowledge gained,26 or alternatively, addressing the same content but with completely different wording.2 All formats have been used and, as there are currently no guidelines regarding which provides the optimal educational benefit,2,7,14 future comparative research is required.
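As a rough illustration of the pre- and postmodule comparison described above, the sketch below computes the mean score gain for a small set of paired scores. The scores are entirely hypothetical and are not drawn from the website's database, and the simple mean difference is an assumed summary measure rather than the analysis used for this program.

```python
from statistics import mean

# Hypothetical (pretest, posttest) percentage scores for five participants.
# These values are invented for illustration only.
paired_scores = [(60, 85), (70, 90), (55, 80), (80, 85), (65, 90)]


def mean_gain(pairs):
    """Return the average posttest-minus-pretest difference."""
    gains = [post - pre for pre, post in pairs]
    return mean(gains)


print(mean_gain(paired_scores))  # prints 20
```

A developer could extend this kind of comparison per module to see where the pretest already shows high baseline knowledge.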

Since our review confirmed that the curriculum design and technical functionality of the site are consistent with the literature, we feel that it has the potential to be used as an educational research tool or as an adjunct to other CME activities. For example, modules could be used in preparation for hands-on workshops or simulation exercises of rare events. Participants would receive CME credits from the accredited online course as well as the simulation or workshop participation. In addition, since online education has the added benefit of being available for frequent review, web-based courses could be compared to simulation and workshops with regard to knowledge retention.

An ongoing challenge for any innovation is sustaining its future presence. This requires a commitment to program maintenance from sponsoring departments and industry partners. As well, continued pressure from CME departments for academic recognition of online program development is an absolute requirement for longevity. Ruiz et al28 acknowledged the need to recognize and reward faculty for their dedication to this effort and suggest ways to promote e-learning as a scholarly pursuit. Progress is being made, as universities embrace the concept of lifelong learning, but there is still more to be done in terms of academic recognition for continuing education providers as well as online CME program development. Certainly, developing accredited web-based programs that can also be used for educational research would add to academic recognition, but should not replace the primary goal of providing quality education for anesthesiologists.

In order to maintain competency, the need for lifelong learning for physicians has been recognized by the universities and, to ensure patient safety, is being demanded by the hospitals, professional bodies and the public. Furthermore, a recent study found that physicians perceive medical knowledge as their highest priority and desire credit for learning, which can be achieved through CME learning.29 This means that there is an ever-increasing need for accredited online educational programs to enable distant learning, in particular, self-assessment programs. CME should not be limited to acquisition of knowledge, and should also strive for changes in practice and attitudes of physicians.30 Moreover, as the need to embrace global health continues to escalate, the use of educational technology will become even more important to physician education worldwide. Although each program will have unique educational and design features, course developers should begin by understanding the educational principles and design characteristics that foster effective online programs; for example, Kern’s six-step program for medical education curriculum design. One should then consider the technical features that are beneficial for knowledge acquisition, including a website that is interactive, easy to navigate and reasonably consistent in design. Technology is the way of the future for all medical education, including CME, and as our database demonstrated, “if you build it, they will come”.

Practice points

- CME is an indispensable part of maintaining physicians’ competency and demand for online modules continues to grow.

- CME websites need to adhere to curriculum design guidelines in order to be efficient.

- Maintaining a CME website following its inception may be the most challenging aspect of CME.

Acknowledgment

Support for this study was provided through a Department of Anesthesia, University of Toronto Research Merit Award.

Disclosure

The authors report no conflicts of interest in this work.

References

1. Choudhry NK, Fletcher RH, Soumerai SB. Systematic review: the relationship between clinical experience and quality of health care. Ann Intern Med. 2005;142(4):260–273.

2. Henning PA, Schnur A. E-Learning in continuing medical education: a comparison of knowledge gain and learning efficiency. J Med Marketing. 2009;9(2):151–161.

3. Cook DA, Levinson AJ, Garside S, Dupras DM, Erwin PJ, Montori VM. Internet-based learning in the health professions: a meta-analysis. JAMA. 2008;300(10):1181–1196.

4. Podichetty VK, Booher J, Whitfield M, Biscup RS. Assessment of internet use and effects among healthcare professionals: a cross sectional survey. Postgrad Med J. 2006;82(966):274–279.

5. Bennett NL, Casebeer LL, Kristofco RE, Strasser SM. Physicians’ Internet information-seeking behaviors. J Contin Educ Health Prof. 2004;24(1):31–38.

6. Holmboe ES, Meehan TP, Lynn L, Doyle P, Sherwin T, Duffy FD. Promoting physicians’ self-assessment and quality improvement: the ABIM diabetes practice improvement module. J Contin Educ Health Prof. 2006;26(2):109–119.

7. Curran V, Lockyer J, Sargeant J, Fleet L. Evaluation of learning outcomes in Web-based continuing medical education. Acad Med. 2006;81(10 Suppl):S30–S34.

8. Wutoh R, Boren SA, Balas EA. eLearning: a review of Internet-based continuing medical education. J Contin Educ Health Prof. 2004;24(1):20–30.

9. Fordis M, King JE, Ballantyne CM, et al. Comparison of the instructional efficacy of Internet-based CME with live interactive CME workshops: a randomized controlled trial. JAMA. 2005;294(9):1043–1051.

10. Curran VR, Fleet L. A review of evaluation outcomes of web-based continuing medical education. Med Educ. 2005;39(6):561–567.

11. Bordage G. Conceptual frameworks to illuminate and magnify. Med Educ. 2009;43(4):312–319.

12. Kanter SL. Towards better descriptions of innovations. Acad Med. 2008;83(8):703–704.

13. Davies S, Cleave-Hogg D. Continuing medical education should be offered by both e-mail and regular mail: a survey of Ontario anesthesiologists. Can J Anaesth. 2004;51(5):444–448.

14. Grunwald T, Corsbie-Massay C. Guidelines for cognitively efficient multimedia learning tools: educational strategies, cognitive load, and interface design. Acad Med. 2006;81(3):213–223.

15. Sweller J. Why understanding instructional design requires an understanding of human cognitive evolution. In: O’Neill HF, Perez RS, editors. Web-Based Learning: Theory, Research and Practice. Hillsdale, NJ: Erlbaum Associates; 2006:279–295.

16. Messick S. Validity of test interpretation and use. ETS Res Rep Series. 1990;1990(1):1487–1495.

17. Howell S, Rowbotham DJ, Reilly CS. On-line continuing education – another step forward. Br J Anaesth. 2007;98(1):3.

18. California State Personnel Board. Summary of the Standards for Educational and Psychological Testing, Appendix F. 2003. Available from: http://www.spb.ca.gov/content/laws/selection_manual_appendixf.pdf. Accessed September 20, 2016.

19. Kern DE, Thomas PA, Howard DM, Bass EB. Curriculum Development for Medical Education: A Six-Step Approach. Baltimore, MD: Johns Hopkins University Press; 1998.

20. Aherne M, Lamble W, Davis P. Continuing medical education, needs assessment, and program development: theoretical constructs. J Contin Educ Health Prof. 2001;21(1):6–14.

21. Booth A, Carroll C, Papaioannou D, Sutton A, Wong R. Applying findings from a systematic review of workplace-based e-learning: implications for health information professionals. Health Info Libr J. 2009;26(1):4–21.

22. Cook DA, Dupras DM. A practical guide to developing effective web-based learning. J Gen Intern Med. 2004;19(6):698–707.

23. Sweller J, van Merrienboer J, Paas F. Cognitive architecture and instructional design. Educ Psychol Rev. 1998;10:251–296.

24. Clark R. Research on web-based learning: a half-full glass. In: Bruning R, Horn C, PytlikZillig LM, editors. Web-Based Learning. What Do We Know? Where Do We Go? Greenwich, CT: Greenwich Information Age Publishing; 2003:1–22.

25. Mayer R. Multimedia Learning. Cambridge, UK: Cambridge University Press; 2001.

26. Mayer R, Moreno R. Nine ways to reduce cognitive load in multimedia learning. Educ Psychol. 2003;38(1):43–52.

27. Kirsh D. Interactivity and multimedia interfaces. Instr Sci. 1997;25:79–96.

28. Ruiz JG, Mintzer MJ, Leipzig RM. The impact of E-learning in medical education. Acad Med. 2006;81(3):207–212.

29. Cook DA, Blachman MJ, Price DW, West CP, Berger RA, Wittich CM. Professional development perceptions and practices among U.S. physicians: a cross-specialty national survey. Acad Med. 2007. Epub 2007 Feb 21.

30. VanNieuwenborg L, Goossens M, De Lepeleire J, Schoenmakers B. Continuing medical education for general practitioners: a practice format. Postgrad Med J. 2016;92(1086):217–222.

© 2017 The Author(s). This work is published and licensed by Dove Medical Press Limited. The full terms of this license are available at https://www.dovepress.com/terms.php and incorporate the Creative Commons Attribution - Non Commercial (unported, v3.0) License.

By accessing the work you hereby accept the Terms. Non-commercial uses of the work are permitted without any further permission from Dove Medical Press Limited, provided the work is properly attributed. For permission for commercial use of this work, please see paragraphs 4.2 and 5 of our Terms.
