Advances in Medical Education and Practice, Volume 14

Assessing the Critical Thinking and Deep Analysis in Medical Education Among Instructional Practices

Authors Alhassan AI

Received 18 April 2023

Accepted for publication 21 July 2023

Published 4 August 2023 Volume 2023:14 Pages 845–857

DOI https://doi.org/10.2147/AMEP.S417649

Checked for plagiarism Yes

Review by Single anonymous peer review

Peer reviewer comments 3

Editor who approved publication: Dr Md Anwarul Azim Majumder

Abdulaziz I Alhassan1,2

1Department of Medical Education, College of Medicine, King Saud bin Abdulaziz University for Health Sciences, Riyadh, Saudi Arabia; 2King Abdullah International Medical Research Center, Riyadh, Saudi Arabia

Correspondence: Abdulaziz I Alhassan, Department of Medical Education, College of Medicine, King Saud bin Abdulaziz University for Health Sciences (KSAU-HS), P.O. Box 3660, Riyadh, 11481, Saudi Arabia, Tel +966114299999

Objective: The purpose of this study was to examine how faculty stimulate the critical thinking and deep analysis of their students through instructional practices, including lecture design, assessment structure, and assignment instructions.

Methods: Faculty from multiple health colleges in Saudi Arabia were asked to respond to survey items about the activities they use in their classrooms with regard to designing lectures, assessment structures, and instructional assignments. A correlation analysis was performed to determine whether the levels of applied critical thinking and deep analysis stimulated by faculty members were statistically related across designing lectures, assessment structure, and instructional assignments. An analysis of variance (ANOVA) was also performed to determine whether the level of applied critical thinking and deep analysis differed significantly by the demographic characteristics of the participants.

Results: The mean score for designing lectures was 67.276, followed by a mean score of 65.233 for instructional assignment and 64.688 for assessment structure. The ANOVA showed a significant difference in the perceptions of the participants between designing lectures, assessment structure, and instructional assignment (p < 0.05).

Conclusion: The participants applied critical thinking and deep analysis more when designing their lectures than when structuring assessments and instructional assignments. They had the flexibility to stimulate critical thinking during lecture activities. In contrast, this flexibility was limited when they were structuring assessments, as they had instructions to consider and were required to provide a rubric with a unified answer key, which is a mandatory requirement of the assessment department. This requirement exists because answers that demand a high level of critical thinking lead to high subjectivity in student responses.

Keywords: critical thinking, deep analysis, higher education, instructional design

Background

An ongoing debate within higher education is whether the goal of higher education should be merely to prepare students for jobs and employment or whether it should be to prepare students to engage in critical thinking regardless of specific content or course of study.1 Some have argued that education has been reduced to memorization and tests when the focus should be on helping students to think critically about information and develop the skills to engage in deep thought and analysis.2 From the arts and humanities to medical education, there are discussions and debates about the need to help students engage in more critical thinking and to provide teaching and course design that is based on helping students become critical thinkers.3,4 However, if higher education faculty are expected to increase efforts to have students engage in critical thinking and deep analysis rather than simply memorize content for tests, it is necessary to understand what faculty are doing at the present time to motivate critical thinking and deep analysis.

The purpose of this study was to examine how faculty members stimulate the critical thinking and deep analysis of their students through instructional practices, including lecture design, assessment structure, and assignment instructions. If there is a concern across academic disciplines that higher education should not be solely about teaching to tests but should help students learn critical thinking and deep analysis skills, then there is a need to understand the activities that faculty are currently using to motivate critical thinking and deep analysis among their students. The findings of this study provide some understanding of how critical thinking and deep analysis are stimulated by faculty activities in the classroom and can be used to make suggestions for future studies and future changes related to motivating critical thinking in the higher education classroom.

Defining Critical Thinking and Deep Analysis

Before examining some of the recent literature on higher education activities aimed at increasing the critical thinking and deep analysis skills of students, it is useful to define what is meant by critical thinking and deep analysis. Kahlke and Eva argued that while the idea of critical thinking is ubiquitous within higher education, there is a lack of agreement about what is meant by critical thinking.4 Unlu explained that one definition of critical thinking that is often cited is that of John Dewey, who defined critical thinking as the highest level of awareness that is possible to a person through both human senses and the mind.5 Rear further explained that John Dewey also described critical thinking as reflective thinking in which people engage in attentive consideration of opinions and knowledge based on evidence that supports the conclusions that they wish to make.6

Dumitru (2019) argued that contemporary thinkers and philosophers writing on critical thinking generally base their ideas on the definition and explanation of the concept provided by Dewey.3 In this regard, critical thinking can be viewed as the act of engaging with opinions and information to draw conclusions based on support and data for those conclusions. Another way of thinking about critical thinking might be to use data and facts to consider whether the opinions and information provided by others are indeed accurate and correct. Critical thinking is not merely about memorizing information provided by others but engaging with the information in relation to other information and facts.

The definition of critical thinking also relates directly to the idea of deep analysis. The concept of deep analysis is defined as the process of engaging in reflection of ideas and connecting information and knowledge for a greater understanding.7 Deep analysis is the process of using critical thinking to draw conclusions that are valid based on broader knowledge.8 As with critical thinking, deeper analysis requires more than just memorizing information. Instead, deep analysis requires bringing information and knowledge together from a variety of sources and disciplines to draw informed conclusions.

Course Design

The way in which courses are designed has attracted increasing interest and concern in higher education. The concern that exists around course design is whether higher education faculty are designing courses that require students to engage with information and take part in critical thinking and the innovation of ideas rather than simply listening to lectures and taking notes.9 Rather than having students sit through traditional lectures in which the professor presents information and the students attempt to memorize it, courses should be designed so that students have to think critically about information and even develop new ideas from the information that is presented.10

Johnke and Liebscher (2020) explained that even while most educators and researchers agree that creativity in which students engage with problems and develop innovative solutions is important, higher education continues to focus on teaching routines and replication to students, focuses on problems and solutions that are already well-defined, lacks a focus on current problems and developing new solutions for current problems, and does not provide students with the ability to think and act creatively.11 One of the problems that has been identified is that while higher education students have shown the ability to identify relevant and important information, they often lack the ability to justify solutions and critically assess information.12

Ulger explained that when students are given problems that do not have routine solutions or in which multiple solutions may be possible, they engage in greater critical thinking as compared to students who are given a problem with a single, routine solution.13 Rather than students being given a problem for which a solution is already pre-determined or in which there may only be one solution, students should be given problems that require exploration, critical reflection, and self-assessment.14 In this regard, higher education courses should be designed so that students are not focused on finding a pre-determined correct answer to a problem, but on using information, assessing their actions, and reflecting on information to not only suggest a solution, but also justify why the solution is valid.

Assessment

Assessment with regard to stimulating critical thinking and deep analysis in higher education students is an important issue given that faculty may not understand how to create assessments that require critical thinking. Rawlusyk (2018) noted that most higher education faculty learned about creating tests and assessments not through a formal course, but from personal experience and from information and advice provided by colleagues.15 One of the problems that exists in higher education is that motivating and measuring critical thinking often requires putting students in situations in which they have to solve real-world problems as opposed to giving students standardized tests.16 It is easier for higher education faculty, especially those who teach classes with large numbers of students, to rely on standardized tests to measure student performance. However, such tests are not likely to stimulate critical thinking in students.

Assessing students in a way that necessitates critical thinking requires giving them real-life problems and situations for which a solution is needed. For example, students might be given a problem such as whether increasing the number of migrants admitted into a country increases crime rates or whether a company is utilizing its financial resources in the best way to efficiently service its customers. Then, the students would be allowed to use course knowledge, statistics, data from other sources, and knowledge from other courses to address the problem and answer the question or provide a solution with justification.17 In this way, students are not merely required to remember information, but are instead required to engage in real-world problem solving involving the use of various types of information, knowledge, reflection, and justification for solutions.18

Another argument that has been made regarding assessment in higher education related to critical thinking and deep analysis is that the focus on critical thinking must occur in everyday practices before any assessments occur. In this regard, critical thinking activities such as reflective writings and problem solving need to be built into everyday lessons and assignments.19 Students cannot be expected to engage in critical thinking and deep analysis on a formal test if critical thinking and deep analysis have not been stimulated in instructional practices.

Instruction

If instructional practice is a vital part of the ability to stimulate critical thinking and deep analysis in assessment in higher education, it is important to understand the means of instruction that are likely to lead students to engage in critical thinking and deep analysis. The teaching methods that stimulate critical thinking and deep analysis are those that diverge from the traditional lecture. Instruction using methods that require the active engagement of higher education students, such as group projects, group discussions, and case studies, elicits critical thinking and deep analysis.5

One instructional method that has received attention in recent years is the flipped classroom. The idea of the flipped classroom is that activities that would normally occur in the classroom, such as reading basic course information or receiving a lecture about new content, are performed at home while classroom time is used for applying the content to problem solving and engagement.20 The flipped classroom is promoted in higher education because students are more engaged with their instructors and with each other in actively using the information and knowledge that is part of a course.21 However, a downside of the flipped classroom is that this instructional method generally requires more work for instructors because lectures and other learning materials must be prepared ahead of time and made available to students outside of classroom time. Furthermore, problems arise in the classroom if students have not consumed and studied the learning materials outside of class in preparation for the classroom activities.22

There are other instructional methods that are also used when the goal is to stimulate critical thinking and deep analysis. One method is the case study method in which students are given a narrative about a problem or situation and then asked to address the specific problem by creating a solution and justifying that solution with knowledge and data.23 Another instructional method that has been found to stimulate critical thinking and deep analysis among higher education students is peer review. The process of peer review involves students critiquing and assessing the work of other students and discussing the content of the work and ways to improve upon it.24 The peer review process serves as a way for students to actively share ideas and information, collaborate on how to improve the work that is performed, and better evaluate their own work.25

The underlying requirement for stimulating critical thinking through higher education instruction appears to be having students engage in problem solving. Students need to receive instruction in which they are asked to examine information and use knowledge from various sources to create solutions to problems, solutions that they are required to justify.25 While this may initially require additional work on the part of higher education faculty and be a change for those who are accustomed to traditional lecture instruction, it is what is needed if students are going to learn to engage in critical thinking and deep analysis.

Methodology

Sampling Method and Size

In order to evaluate the level of applied critical thinking and deep analysis that is stimulated by faculty members with regard to their instructional style, designing lectures, assessment structure, and assignment instructions, a cross-sectional quantitative study design was used. A questionnaire was distributed to faculty members at King Saud bin Abdulaziz University for Health Sciences (KSAU-HS) in Saudi Arabia. KSAU-HS is a university specialized in health sciences, with three campuses in three different cities: Riyadh, Jeddah, and Al Ahsa. These campuses run the same curriculum for each educational program. The faculty members at all campuses share the same academic responsibilities. Unified criteria for student enrollment are applied at all campuses. A non-probability consecutive sampling technique was used in which faculty at the three different campuses were potential participants. The potential sample included faculty of all academic titles (teaching assistant, lecturer, assistant professor, associate professor, and full professor) to obtain a more representative sample of the intended population. Faculty who did not have a teaching role were excluded from the study.

To achieve a confidence level of 95% with a margin of error of 5%, assuming a prevalence of 50% in a population of 1714 faculty members, the estimated required sample size was 314 faculty members. The estimated sample size was calculated using Piface by Russell V. Lenth, version 1.76. The final sample consisted of 232 faculty members.
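The reported figure can be checked with a short calculation. The sketch below assumes Cochran's formula for a proportion with a finite population correction; the source states only that Piface was used, so this is an independent cross-check rather than a description of that tool's internals. The function name `required_sample_size` is hypothetical.

```python
import math
from statistics import NormalDist


def required_sample_size(population: int,
                         confidence: float = 0.95,
                         margin_of_error: float = 0.05,
                         proportion: float = 0.5) -> int:
    """Cochran's formula with a finite population correction (assumed method)."""
    # Two-sided critical value, roughly 1.96 for 95% confidence.
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    # Sample size for an effectively infinite population.
    n0 = (z ** 2) * proportion * (1 - proportion) / margin_of_error ** 2
    # Shrink the estimate for a finite population of known size.
    n = n0 / (1 + (n0 - 1) / population)
    return math.ceil(n)


# Inputs taken from the text: 1714 faculty, 95% confidence, 5% margin, 50% prevalence.
print(required_sample_size(1714))  # prints 314
```

Under these assumptions the calculation reproduces the 314 reported above.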

Data Collection

The participants were given a questionnaire that was designed based on the hierarchy of Bloom’s Taxonomy of critical thinking and deep analysis, with items that were used to measure the three areas of designing lectures, assessment structure, and instructional assignments. The questionnaire was administered via email to the targeted participants. The reason for administering the questionnaire via email was to easily access health science faculty members at the three different campuses from which the participants were drawn. Three reminders were sent over a period of one month. The emails sent to the participants by the author included a link that redirected the participant to the survey, so that it could be completed without replying to the email or disclosing any identifying information, ensuring the confidentiality and anonymity of participants. In addition, by administering the questionnaire via email, it was hoped that a larger number of faculty would complete the questionnaire because they could do so at their convenience.

Instrument

The questionnaire consisted of a total of 18 items, with six items for each area of designing lectures, assessment structure, and instructional assignments. A 5-point Likert scale ranging from Strongly Disagree to Strongly Agree was used as the response options for each survey item. The questionnaire also contained five demographic questions regarding the gender, academic job title, years of experience, academic role, and college in which the participants taught. Two open-ended questions were also included in which participants were asked to list any points that might stimulate or hinder critical thinking and deep analysis in instructional practices. The questionnaire was administered to a smaller group of health science faculty members at another university as a pilot test to ensure the clarity and feasibility of the questionnaire items. Face validity was assessed by medical education experts, while construct validity was attained through alignment of each item with the levels of Bloom’s Taxonomy for evaluating critical thinking.
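To make the scoring concrete, here is a minimal sketch of how responses on such a scale might be coded and summarized. The numeric coding (1 to 5) and the three middle labels are assumptions, since the text names only the two endpoints; all function names and the sample answers are hypothetical.

```python
from statistics import mean

# Assumed coding of the 5-point scale; only the endpoints appear in the text.
LIKERT = {
    "Strongly Disagree": 1,
    "Disagree": 2,
    "Neutral": 3,
    "Agree": 4,
    "Strongly Agree": 5,
}


def code_responses(labels):
    """Convert Likert labels to their numeric codes."""
    return [LIKERT[label] for label in labels]


def item_mean(labels):
    """Mean coded response to one item across respondents."""
    return mean(code_responses(labels))


def percent_strongly_agree(labels):
    """Share of respondents choosing the top category, as a percentage."""
    return 100 * sum(label == "Strongly Agree" for label in labels) / len(labels)


def section_total(respondent_items):
    """Sum one respondent's six coded item scores for a section."""
    return sum(code_responses(respondent_items))


# Hypothetical answers from four respondents to a single item.
answers = ["Strongly Agree", "Agree", "Strongly Agree", "Neutral"]
print(item_mean(answers), percent_strongly_agree(answers))
```

Item means and percentages of this kind are what the descriptive tables and figures in the Results summarize.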

Data Analysis

The data analysis consisted of both descriptive statistics and correlational analysis. Descriptive statistics are presented for the demographic variables and for the means and standard deviations of the questionnaire items related to designing lectures, assessment structure, and instructional assignments. A correlation analysis was performed to determine whether the levels of applied critical thinking and deep analysis stimulated by faculty members were statistically related across designing lectures, assessment structure, and instructional assignments. An analysis of variance (ANOVA) was also performed to determine whether there were significant differences, based on the demographic characteristics of the participants, in the level of applied critical thinking and deep analysis stimulated by faculty members with regard to their instructional style, designing lectures, assessment structure, and assignment instructions.

Results

Descriptive Statistics

Table 1 shows the descriptive statistics for the participants who took part in the study. The sample was split fairly evenly between males and females, with 52.6% of the participants being female and 47.4% being male. In terms of academic job titles, most of the participants were either Lecturers or Assistant Professors at 34.7% and 43.4%, respectively. Another 12.8% of the participants held the job title of Teaching Assistant, while only 6.6% of the participants were Associate Professors and only 2.6% were Full Professors. In terms of years of experience, 27% of the participants had 1 to 5 years of experience, 29.6% had 5 to 10 years of experience, and 43.4% had 10 or more years of experience. Most of the participants, 54.4%, were from the Riyadh region, while 22.4% were from the Jeddah region and 23.2% were from the Al Ahsa region.

Table 1 Demographic Variables of Faculty Members

Table 2 shows the descriptive statistics for the 18 questionnaire items used to measure designing lectures, assessment structure, and instructional assignment of the participants. The faculty members who took part in the study indicated that they agreed or strongly agreed with almost all of the questionnaire items. The item that received the highest mean response of 4.50 was “ask students questions to ensure their understanding”. The item that received the lowest mean response of 3.560 was “student recalling of information”. The only three questionnaire items that received a mean of less than 4.000 from the participants were “student recalling of information” at 3.560, “to recall knowledge from their memory” at 3.890, and “students’ ability to create and come up with innovative solutions” at 3.960.

Table 2 The Mean Response of Faculty Members for Questionnaire Items

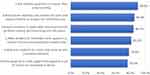

Figure 1 shows the percentage of participants who indicated that they strongly agreed with each of the 18 items used to measure designing lectures, assessment structure, and instructional assignment. In total, 91% of the participants strongly agreed with the statement “to understand what they have learned”. The questionnaire item that received the lowest percentage of participants indicating that they strongly agreed was “students recalling of information” at 58.6%.

Figure 1 Percentage of participants that strongly agreed with each questionnaire item.

Item Consistency

Before more closely examining the perceptions of the participants along each of the three areas of designing lectures, assessment structure, and instructional assignment, it is important to determine if there was internal consistency among the six questions that made up each of the three areas of interest. Cronbach’s alpha was calculated for all 18 items together to determine if there was internal consistency between the items for the entire questionnaire. The Cronbach’s alpha for all 18 questions was 0.945, which indicated a very high level of internal consistency among the questions. Next, Cronbach’s alpha was calculated for each of the three sections of the questionnaire. Cronbach’s alpha was 0.899 for designing lectures, 0.856 for assessment structure, and 0.876 for instructional assignment. Based on these values, it was determined that internal consistency existed among the six questions that comprised each of the three areas of the questionnaire.
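For readers unfamiliar with the statistic, Cronbach's alpha compares the sum of the individual item variances with the variance of respondents' total scores. The sketch below is a minimal standard-formula implementation; the function name and the tiny data set are hypothetical and are not the study's data.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents' item scores.

    rows: one list of item scores per respondent, all of equal length.
    """
    k = len(rows[0])
    # Sample variance of each item (column) across respondents.
    item_vars = [variance([row[i] for row in rows]) for i in range(k)]
    # Sample variance of each respondent's total score.
    total_var = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical coded responses: four respondents, three items each.
scores = [
    [5, 4, 5],
    [4, 4, 4],
    [3, 2, 3],
    [5, 5, 4],
]
print(round(cronbach_alpha(scores), 3))
```

Values close to 1, such as those reported above, indicate that the items in a section tend to move together across respondents.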

Designing Lectures

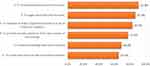

Figure 2 shows the percentage of participants who indicated that they strongly agreed with the six items related to designing lectures. The item that received the most agreement at 90.0% was “ask students questions to ensure their understanding”. In contrast, the item that received the lowest agreement related to designing lectures was “allow students to make judgements based on a set of criteria via evaluating evidence” at 76.2%.

Figure 2 Percent of participants who agreed on designing lecture items.

Assessment Structure

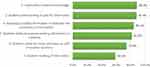

In terms of assessment structure, Figure 3 shows that the item that received the most agreement among the participants with regard to the questions in their assessments examining the students’ abilities was “to understand what they have learned” at 91.0%. However, the item that received the lowest agreement among the participants with regard to assessment structure was “to create and come up with innovative solutions”. Only 65.6% of the participants strongly agreed that the questions in their assessments examined students’ ability to create and come up with innovative solutions.

Figure 3 Percent of participants who agreed on assessment structure items.

Instructional Assignment

Regarding instructional assignment, Figure 4 shows that 88.3% of the participants strongly agreed that their assignments were structured to foster “application of learned knowledge”. In contrast, “student recalling of information” received the least agreement among the participants regarding instructional assignment. Only 58.6% of the faculty who were surveyed strongly agreed that their assignments were structured to foster the ability of students to recall information.

Figure 4 Percent of participants who agreed on instructional assignment items.

Correlation Analysis

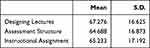

A correlation analysis was conducted between the three areas of designing lectures, assessment structure, and instructional assignment. The correlation analysis was performed by summing the scores for the six items in each of the three sections of the questionnaire and finding the mean total score for each section. Table 3 shows that the mean score for designing lectures was 67.276, followed by a mean score of 65.233 for instructional assignment and 64.688 for assessment structure. An analysis of variance (ANOVA) was performed to determine if there was a significant difference in the total mean scores between the three areas. The ANOVA showed a significant difference in the perceptions of the participants between designing lectures, assessment structure, and instructional assignment (p < 0.05). Based on these results, the participants more strongly agreed with the items related to designing lectures for deep learning and critical thinking than with the items related to using questions in their assessments or having assignments for deep learning and critical thinking.

Table 3 The Mean Response of Faculty Members for Questionnaire Sections

Table 4 shows the correlation matrix for the three sections of the questionnaire. The mean responses of the participants to the three sections were statistically significantly correlated with each other. That is, the participants’ perceptions of designing lectures were related to their perceptions of assessment structure, which in turn were related to their perceptions of instructional assignment.

Table 4 Correlation of Questionnaire Sections
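A correlation matrix of this kind can be sketched as follows. The text does not say which coefficient was used, so Pearson's r is an assumption here; the function names, the section keys, and the scores are all hypothetical.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def correlation_matrix(sections):
    """Pairwise correlations for a dict of section name -> per-respondent scores."""
    names = list(sections)
    return {(a, b): pearson_r(sections[a], sections[b]) for a in names for b in names}


# Hypothetical section totals for five respondents (not the study's data).
totals = {
    "lectures": [28, 25, 30, 22, 27],
    "assessment": [26, 24, 29, 20, 25],
    "assignment": [27, 23, 30, 21, 26],
}
for pair, r in correlation_matrix(totals).items():
    print(pair, round(r, 3))
```

Each off-diagonal entry corresponds to one pair of questionnaire sections; testing its significance would need a statistics package or a t-distribution table.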

Differences Based on Demographic Factors

One other analysis of the data was performed, which was an analysis of variance (ANOVA) of the mean responses to the three areas of the questionnaire in relation to each of the demographic variables. The reason for performing an ANOVA between each of the demographic variables and each of the three areas of the questionnaire was to determine if there were significant differences in the perceptions of the participants regarding designing lectures, assessment structure, and instructional assignment based on their gender, their academic job titles, their years of experience, the three main cities in which they worked, and the health science colleges in which they worked.

Table 5 shows the mean values for each of the three areas of the questionnaire in relation to each of the demographic variables. The p-value below each demographic variable is the p-value of the ANOVA that was performed. The table shows that for each of the demographic variables and each of the three areas of the questionnaire, there was not a significant difference among the participants with regard to their perceptions of designing lectures, assessment structure, and instructional assignment. In this regard, there was not a significant difference in how the participants stimulated critical thinking and deep analysis in their students through designing lectures, assessment structure, and instructional assignment based on gender, job title, years of experience, the three main cities in which the participants worked, or the specific health science colleges in which the participants taught.

Table 5 Analysis of Variance of Mean Questionnaire Section Responses Based on Demographic Variables
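The comparison behind each p-value in such a table can be illustrated with a one-way ANOVA, which divides the variation between group means by the variation within groups. The sketch below computes only the F statistic and its degrees of freedom; obtaining a p-value needs the F distribution, for example from a statistics package. The function name and the group scores are hypothetical.

```python
def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of groups of scores."""
    all_values = [v for group in groups for v in group]
    grand_mean = sum(all_values) / len(all_values)
    group_means = [sum(group) / len(group) for group in groups]
    # Variation of group means around the grand mean, weighted by group size.
    ss_between = sum(len(g) * (m - grand_mean) ** 2
                     for g, m in zip(groups, group_means))
    # Variation of individual scores around their own group mean.
    ss_within = sum((v - m) ** 2
                    for g, m in zip(groups, group_means) for v in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical section scores grouped by a demographic variable with three levels.
by_experience = [[24, 26, 25, 27], [25, 27, 26, 28], [26, 25, 27, 26]]
print(one_way_anova_f(by_experience))
```

An F value near 1 suggests that group means differ by no more than within-group variation would produce, which is consistent with a non-significant result of the kind reported above.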

Discussion

The results of this study raise some questions as to whether the participants engaged in designing courses, assessment structure, and instructional assignment that stimulated critical thinking and deep analysis on the part of their students. While 84.1% of the participants strongly agreed that they designed lecture items to require students to apply what they had learned, only 76.2% of the participants strongly agreed that they designed lectures to allow students to make judgements based on a set of criteria via evaluating evidence. Activities such as requiring students to apply what they have learned and to make judgements by evaluating evidence are important aspects of course design that stimulate critical thinking and deep analysis on the part of students.9,13

While it may seem that a large percentage of the participants designed their lectures around such activities, it is also concerning that about one-fifth of the participants did not design their lectures around activities that require students to engage in critical thinking and deep analysis. Instead, 90.0% of the participants strongly agreed that they design their lectures to ask students questions to ensure their understanding. This would seem to be a traditional action that occurs when higher education faculty deliver lectures. An instructor may lecture for a few minutes before stopping to ask students if they have any questions or understand the material. The argument can be made that asking questions to determine if students understand course content, even if all students participate in some way, is merely an activity of memorization. If students are only asked to recall something that was presented in a lecture without using that information to engage in problem solving or another task that requires the use of new information combined with other knowledge, then critical thinking and deep analysis are not occurring.

In terms of assessment structure, 87.0% of the participants strongly agreed that their assessment structures are created for students to apply what they have learned. In addition, 77.6% of the participants strongly agreed that their assessment structures allowed students to evaluate and make judgments based on a set of criteria or evidence and only 65.6% of participants strongly agreed that their assessment structures allowed students to create and come up with innovative solutions. From these figures, it seems appropriate to conclude that a large percentage of the faculty who took part in this study are not stimulating critical thinking and deep analysis among their students in their assessment structures.

Assessment structures that stimulate critical thinking and deep analysis in students require that students engage in analysis of knowledge and data and create solutions to problems.18 About one-fourth of the participants in this study did not strongly agree that they create assessments to allow students to provide answers based on their own analysis. Even more troubling is that about 34% of the participants (100% minus the 65.6% who strongly agreed) did not strongly agree that they create assessment structures to allow students to come up with innovative solutions. The conclusion that can be made is that the faculty who took part in this study are not fully incorporating activities into their assessment structures to stimulate critical thinking and deep analysis in their students.

Instructional assignments that stimulate critical thinking and deep analysis among students are those that require the students to utilize information and knowledge to engage in problem solving and justifying solutions to problems.23 In terms of instructional assignment, 88.3% of the participants strongly agreed that their instructional assignments are created so that students can apply learned knowledge. However, only 84.1% of the participants strongly agreed that their instructional assignments were designed based on students’ ability to evaluate existing information or evidence and only 73.8% strongly agreed that their instructional assignments were created based on students’ ability to come up with innovative solutions.

The one positive area with regard to instructional assignments is the creation of assignments that ask students to recall information. Only 58.6% of the participants strongly agreed that their instructional assignments were created for students to recall information. Critical thinking and deep analysis do not occur when students must merely recall information.10 However, having nearly 60% of the participants strongly agree that their instructional assignments are created for students to recall information means that more than half of the participants give students assignments that do not stimulate critical thinking and deep analysis.

Overall, the responses provided by the participants show that some faculty are designing lectures, using assessment structures, and creating instructional assignments that are meant to stimulate critical thinking and deep analysis among higher education students. The problem, however, is that many of the activities and processes that the participants indicated they used in their lecture designs, assessment structures, and instructional assignments do not stimulate critical thinking and deep analysis. Based on the data collected for this study, it appears that while the faculty who were surveyed engage in some activities that stimulate critical thinking and deep analysis, changes could be made to incorporate more activities that would further stimulate critical thinking and deep analysis among their students.

The strength of this study is that the participants were asked to respond to items that encompassed course design, assessment, and instruction. By collecting data that encompassed these three areas, it was possible to examine how faculty were stimulating critical thinking and deep analysis in their students across instructional practices. Fewer assumptions had to be made about how the participants may have interpreted survey items in relation to designing their courses as compared to instructional assignments they gave to students or the types of assessments they used.

The limitation of this study is that the majority of the faculty were from one university in Saudi Arabia, although that university includes three campuses in three different cities. It is therefore not possible to generalize the findings of this study to the larger population of higher education faculty in Saudi Arabia or even to a single area of Saudi Arabia. In addition, the sample of participants likely does not represent the health science faculty members from which they were drawn. However, even with this limitation, the results of this study provide a basis from which to engage in further research to understand whether faculty are using activities in their courses that stimulate critical thinking and deep analysis among their students. While a great deal of discussion occurs within academia about the importance of helping students move beyond memorization to critical thinking and deep analysis, it is necessary to understand whether higher education faculty are stimulating critical thinking and deep analysis among students. All the discussion about engaging students in critical thinking means nothing if faculty are not responding to those discussions.

Several recommendations can be made for future research. One recommendation for future research is to replicate this study with faculty at other universities and in other locations. By replicating this study, it would be possible to compare the responses of higher education faculty to determine if other factors may be present in the types of activities that higher education faculty use that stimulate critical thinking and deep analysis among students. Another recommendation is to ask faculty about whether certain activities and practices stimulate critical thinking and deep analysis in students. It is possible that higher education faculty do not fully understand the types of activities that are most likely to stimulate critical thinking in their students.

Conclusion

The purpose of this study was to examine how faculty members stimulate the critical thinking and deep analysis of their students through instructional practices, including lecture design, assessment structure, and assignment instructions. The results of this study showed that the faculty who were surveyed are using some activities and processes in their lecture designs, assessment structures, and instructional assignments that stimulate critical thinking and deep analysis among their students. However, the results also showed that the faculty who were surveyed continued to rely on activities that did not stimulate critical thinking and deep analysis among their students, and instead required students to engage only in memorization of information gained in the classroom.

The significance of the results of this study is that higher education faculty still have work to do to utilize the types of activities that are likely to stimulate critical thinking and deep analysis among students. While faculty used some activities that encourage critical thinking in their students, there is still a reliance on activities, such as recalling information on instructional assignments, that do not stimulate critical thinking and deep analysis. If the goal for higher education institutions is to have faculty stimulate critical thinking and deep analysis in their students, then more work is needed to help faculty achieve that goal.

Data Sharing Statement

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

Ethics Approval and Consent to Participate

The methods of the study were performed in accordance with the relevant guidelines and regulations. Participation in the study was voluntary, and all the participants had the option to withdraw from the study at any stage of the research without giving any reasons. Informed consent was obtained from all participants. The consent process included an explanation of the purpose and benefits of the study, and participants were reassured about anonymity. Information that could identify participants was saved securely. Ethics approval was obtained from the Institutional Review Board of King Abdullah International Medical Research Center, National Guard Health Affairs, Riyadh, Saudi Arabia with study number (NRC21R/489/11).

Acknowledgments

We would like to extend our gratitude to the faculty members who participated in this study. We would also like to thank King Abdullah International Medical Research Center for the support.

Disclosure

The author declares that he has no competing interests.

References

1. Erikson MG. Learning outcomes and critical thinking – good intentions in conflict. Stud High Educ. 2018;44(12):1–11. doi:10.1080/03075079.2018.1486813

2. Mohammad RF. Engaging teachers in professional development: course design at higher education. Eur J Teach Educ. 2021;3(3):25–34. doi:10.33422/ejte.v3i3.703

3. Dumitru D. Creating meaning. The importance of arts, humanities and culture for critical thinking development. Stud High Educ. 2019;44(5):870–879. doi:10.1080/03075079.2019.1586345

4. Kahlke R, Eva K. Constructing critical thinking in health professional education. Perspect Med Educ. 2018;7(3):156–165. doi:10.1007/s40037-018-0415-z

5. Unlu S. Curriculum development study for teacher education supporting critical thinking. Eurasian J Educ Res. 2018;18(76):165–186.

6. Rear D. One size fits all? The limitations of standardised assessment in critical thinking. Assess Eval High Educ. 2018;44(5):664–675. doi:10.1080/02602938.2018.1526255

7. Giacomazzi M, Fontana M, Camilli Trujillo C. Contextualization of critical thinking in Sub-Saharan Africa: a systematic integrative review. Think Ski Creat. 2022;43:1–17. doi:10.1016/j.tsc.2021.100978

8. Javier P, Candia C, Leonardi P. Cooperation is not enough: the role of instructional strategies in cooperative learning in higher education. arXiv. 2019;14:1–15. doi:10.13140/RG.2.2.35562.67525

9. Beligatamulla G, Rieger J, Franz J, Strickfaden M. Making pedagogic sense of design thinking in the higher education context. Open Educ Stud. 2019;1(1):91–105. doi:10.1515/edu-2019-0006

10. Khan MS, Abdou BO, Kettunen J, Gregory S. A phenomenographic research study of students’ conceptions of mobile learning: an example from higher education. SAGE Open. 2019;9(3):1–17. doi:10.1177/2158244019861457

11. Jahnke I, Liebscher J. Three types of integrated course designs for using mobile technologies to support creativity in higher education. Comput Educ. 2020;146:1–17. doi:10.1016/j.compedu.2019.103782

12. Al-Husban NA. Critical thinking skills in asynchronous discussion forums: a case study. Int J Technol Educ. 2020;3(2):82–91. doi:10.46328/ijte.v3i2.22

13. Ulger K. The effect of problem-based learning on the creative thinking and critical thinking disposition of students in visual arts education. Interdiscip J Probl Based Learn. 2018;12(1):1–19. doi:10.7771/1541-5015.1649

14. Mark F, Wotring A. Designing a framework to improve critical reflection writing in teacher education using action research. Educ Act Res. 2022;30:1–17. doi:10.1080/09650792.2022.2038226

15. Rawlusyk PE. Assessment in higher education and student learning. J Instr Pedagog. 2018;21:1–34.

16. Thorndahl KL, Stentoft D. Thinking critically about critical thinking and problem-based learning in higher education: a scoping review. Interdiscip J Probl Based Learn. 2020;14(1):1–20. doi:10.14434/ijpbl.v14i1.28773

17. Shavelson RJ, Zlatkin-Troitschanskaia O, Beck K, Schmidt S, Marino JP. Assessment of university students’ critical thinking: next generation performance assessment. Int J Test. 2019;19(4):337–362. doi:10.1080/15305058.2018.1543309

18. Ahern A, Dominguez C, McNally C, O’Sullivan JJ, Pedrosa D. A literature review of critical thinking in engineering education. Stud High Educ. 2019;44(5):816–828. doi:10.1080/03075079.2019.1586325

19. Ilyas S. Reflection completes the learning process: a qualitative study at higher education. Bull Educ Res. 2020;42(3):225–240.

20. Bouguebs R. Integrating flipped learning pedagogy in higher education: fitting the needs of COVID-19 generation. Appl Linguist. 2021;5(9):144–155.

21. Jang HY, Kim HJ. A meta-analysis of the cognitive, affective, and interpersonal outcomes of flipped classrooms in higher education. Educ Sci. 2020;10(4):115–131. doi:10.3390/educsci10040115

22. Al-Samarraie H, Shamsuddin A, Alzahrani AI. A flipped classroom model in higher education: a review of the evidence across disciplines. Educ Technol Res Dev. 2019;68(3):1017–1051. doi:10.1007/s11423-019-09718-8

23. Mahdi OR, Nassar IA, Almuslamani HA. The role of using case studies method in improving students’ critical thinking skills in higher education. Int J High Educ. 2020;9(2):297–308. doi:10.5430/ijhe.v9n2p297

24. Serrano-Aguilera JJ, Tocino A, Fortes S, et al. Using peer review for student performance enhancement: experiences in a multidisciplinary higher education setting. Educ Sci. 2021;11(2):1–21. doi:10.3390/educsci11020071

25. Pravita AR, Kuswandono P. Critical thinking implementation in an English education course: why is it so challenging? J Eng Educ Lit Cult. 2021;6(2):300–313. doi:10.30659/e.6.2.300-313

© 2023 The Author(s). This work is published and licensed by Dove Medical Press Limited. The full terms of this license are available at https://www.dovepress.com/terms.php and incorporate the Creative Commons Attribution - Non Commercial (unported, v3.0) License.

By accessing the work you hereby accept the Terms. Non-commercial uses of the work are permitted without any further permission from Dove Medical Press Limited, provided the work is properly attributed. For permission for commercial use of this work, please see paragraphs 4.2 and 5 of our Terms.
