Advances in Medical Education and Practice, Volume 12

A Weighted Evaluation Study of Clinical Teacher Performance at Five Hospitals in the UK

Authors Sam AH, Fung CY, Barth J, Raupach T

Received 27 May 2021

Accepted for publication 3 August 2021

Published 26 August 2021 Volume 2021:12 Pages 957–963

DOI https://doi.org/10.2147/AMEP.S322105

Checked for plagiarism Yes

Review by Single anonymous peer review


Editor who approved publication: Dr Md Anwarul Azim Majumder

Amir H Sam,1 Chee Yeen Fung,1 Janina Barth,2 Tobias Raupach3

1Medical Education Research Unit, Imperial College School of Medicine, Imperial College London, London, UK; 2Division of Medical Education Research and Curriculum Development, University Medical Centre Göttingen, Göttingen, Germany; 3Institute for Medical Education, University Hospital Bonn, Bonn, Germany

Correspondence: Tobias Raupach

Institute for Medical Education, University Hospital Bonn, Bonn, D-53127, Germany

Tel +49 228 287-52160


Introduction: Evaluation of individual teachers in undergraduate medical education helps clinical teaching fellows identify their own strengths and weaknesses. In addition, evaluation data can be used to guide career decisions. In order for evaluation results to adequately reflect true teaching performance, a range of parameters should be considered when designing data collection tools.

Methods: Clinical teaching fellows at five London teaching hospitals were evaluated by third-year students they had supervised during a ten-week clinical attachment. The questionnaire addressed (a) general teaching skills and (b) student learning outcome measured via comparative self-assessments. Teachers were ranked using different algorithms with various weights assigned to these two factors.

Results: A total of 133 students evaluated 14 teaching fellows. Overall, ratings on teaching skills were largely favourable, while the perceived increase in student performance was modest. Considerable variability across teachers was observed for both factors. Teacher rankings were strongly influenced by the weighting algorithm used. Depending on the algorithm, one teacher was assigned any rank between #2 and #10.

Conclusion: The two parts of the questionnaire address different outcomes and thus highlight specific strengths and weaknesses of individual teachers. Programme directors need to carefully consider the weight assigned to individual components of teacher evaluations in order to ensure a fair appraisal of teacher performance.

Keywords: clinical teaching, evaluation, learning outcome, undergraduate medical education

Introduction

According to Kern’s cycle of curriculum development, evaluation is the crucial link between teaching/assessment and improvement of a given curriculum.1 Evaluation may target structural and procedural aspects of teaching as well as teacher performance and student learning outcome.2 The focus of an evaluation needs to be aligned with its purpose, hence the need for purpose-specific data collection tools. For example, a 360° programme evaluation may require a comprehensive approach, potentially involving multiple data sources (including, but not restricted to, students, graduates, teachers, patients, and programme directors) and collection methods (eg, quantitative and qualitative), whereas evaluations aimed at identifying specific strengths and weaknesses in teaching with regard to a predefined content area may require a more focussed approach.

While course evaluations help improve teaching quality with regard to structural and procedural aspects, teacher evaluations can inform (a) the improvement of individual teaching skills3 and (b) career decisions.4 A common assumption is that teachers who adhere to good clinical teaching principles will inherently elicit favourable student learning outcome. This may not be the case, and testing the assumption requires simultaneous collection of evaluation data on teacher performance and on learning outcome in a group of students supervised by the same teacher. Combining these two concepts could be helpful, and may indeed be a prerequisite for a fair appraisal of individual teaching quality.

Given the potential consequences, it is important for medical schools and programme directors to provide a clear definition of “good” teaching quality and to use transparent assessment criteria. Evaluation of individual teacher performance usually involves students completing questionnaires covering general teaching skills. Most of these questionnaires are lengthy,5 which hinders curriculum-wide implementation. Moreover, very few data collection instruments have been developed in alignment with a theoretical framework. One such framework is the Stanford Faculty Development Programme (S-FDP).6 It encompasses seven categories of good clinical teaching: establishing a positive learning climate, control of the teaching session, communicating goals, promoting understanding and retention, evaluation, feedback, and promoting self-directed learning. Although such questionnaires provide some information on the adherence of individual teachers to generally accepted principles of good teaching,7 they do not take student learning outcome into account. To date, there are no data to support the hypothesis that all teachers with good teaching skills (as measured by these questionnaires) also elicit favourable learning outcome in their students. Thus, these two dimensions of teaching quality need to be assessed separately. Evaluation tools producing reliable and valid data on student learning outcome are available,8 but, to our knowledge, they have not been combined with questionnaires addressing more general teaching skills in the context of clinical teaching.

The aims of this study were to (a) perform a multi-dimensional evaluation of individual teacher performance in clinical teaching, taking into account adherence to principles of good teaching as well as student learning outcome, and (b) assess potential differences in teacher rankings when assigning different weights to these two components.

Methods

In summer 2018, clinical teachers at five teaching hospitals affiliated with the School of Medicine at Imperial College (IC) London were offered the opportunity to have their teaching performance evaluated by medical students, using a two-part questionnaire addressing (a) general teaching skills and (b) student learning outcome.

Description of Clinical Attachments

All third-year undergraduate students are required to complete two nine- or ten-week clinical attachments at one of IC’s affiliated hospitals. According to the Medical School curriculum, the learning objectives for these clinical attachments include taking a complete medical history; performing and recording a comprehensive cardiovascular, respiratory, abdominal and nervous system examination; and interpreting these examination findings. These general principles are applied to various systems (eg, cardiovascular or nervous system examination). Learning activities during the clinical attachment include ward-based learning, bedside teaching, lectures, clinical skills sessions and tutorials. Clinical teaching fellows are dedicated to supporting undergraduate students in achieving these learning objectives through a combination of bedside teaching, tutorials and revision sessions, including mock OSCEs, during their clinical attachment.

Data Collection

At the end of the clinical attachment, all students at five affiliated teaching hospitals were invited to attend either a revision or feedback session during which they were asked to complete a questionnaire consisting of two parts.

Questionnaire Part 1: General Teaching Skills

Based on the categories covered by the S-FDP, we developed and validated a questionnaire specifically aimed at assessing clinical teaching performance.9 The questionnaire consists of 18 items to be rated on 5-point scales (see Supplement and Table 1). In line with international standards for questionnaire translation and adaptation,10 the original German version of the questionnaire was translated into English by three bilingual native speakers (born in the United Kingdom, Australia and the United States of America) who are currently enrolled in the undergraduate medical curriculum at Göttingen Medical School. Following back-translation into German by two experts in dental and medical education and one English teacher (a German native speaker), a cognitive debriefing session was held with fourteen students sampled from the target group (third-year medical students at IC London). The wording of individual items was revised during each of these steps.

Questionnaire Part 2: Student Learning Outcome

Learning outcome was assessed using the method of comparative self-assessment (CSA) Gain.11 This method requires students to self-assess their performance on specific learning outcomes on six-point scales for two different time points: at present (post-rating) and, retrospectively, at the beginning of a course (then-rating).12 For each self-assessment statement, the difference between the two mean ratings is divided by the mean then-rating in order to adjust for initial performance level. CSA Gain ranges from -100% to +100%, with values >60% indicating favourable student learning outcome. While self-assessments collected at only one point in time can be biased by individual tendencies to under- or overestimate performance levels,13 repeated self-assessments within the same student group control for this bias (as any such tendency should affect each participant equally at both time points). More detailed information on the approach can be found elsewhere.14
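The CSA Gain calculation for a single self-assessment statement can be sketched in a few lines of Python. The paragraph above summarises the normalisation only briefly, so this sketch assumes the form we understand from the original CSA Gain publication:14 the rise in the mean rating is divided by the largest rise still possible from the mean then-rating (6 minus the mean then-rating, since 6 is the most favourable option here). The function name `csa_gain` and the example ratings are hypothetical.

```python
from statistics import mean

SCALE_MAX = 6  # 6 = most favourable option on the 6-point scales


def csa_gain(then_ratings, post_ratings, scale_max=SCALE_MAX):
    """Return CSA Gain (%) for one self-assessment statement.

    Assumed normalisation (our reading of the cited method, not confirmed by
    this article): the rise in the mean rating is divided by the largest rise
    still possible from the mean then-rating. No bounds are enforced.
    """
    if not then_ratings or len(then_ratings) != len(post_ratings):
        raise ValueError("need paired, non-empty then- and post-ratings")
    m_then = mean(then_ratings)
    m_post = mean(post_ratings)
    headroom = scale_max - m_then  # room for improvement at the start
    if headroom == 0:
        raise ValueError("mean then-rating already at the scale maximum")
    return 100 * (m_post - m_then) / headroom


if __name__ == "__main__":
    # Hypothetical ratings: favourable start (mean 4.2), post-ratings of 5.
    print(round(csa_gain([4, 4, 5, 4, 4], [5, 5, 5, 5, 5]), 1))
```

With a hypothetical mean then-rating of 4.2 and post-ratings of 5, the sketch returns about 44%: a favourable starting point combined with post-ratings below the scale maximum yields only a moderate gain, even though the mean rating rose by 0.8 points.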

For the purpose of the present study, self-assessment statements were aligned to six learning objectives related to (i) medical history-taking and physical examination of (ii) the cardiovascular, (iii) respiratory and (iv) nervous system as well as (v) the abdomen. The sixth learning objective referred to the interpretation of physical examination findings (see Table 2).

Table 2 Descriptive Analysis of Student Self-Assessments and CSA Gain (Questionnaire Part 2) in the Overall Sample (n = 133). Items Were Rated on 6-Point Scales with 6 Being the Most Favourable Option. CSA Gain Was Calculated as Described Previously14

The complete questionnaire, termed “Teacher Evaluation form for Clinical Teaching (TECT)”, can be found in the Supplement. Paper versions of the questionnaire were completed by students and scanned using EvaSys® (Electric Paper, Lüneburg, Germany). Data were exported as a “.csv” file and imported into SPSS 24.0 (IBM Corp., Armonk, New York, USA).

Data Analysis

Data analysis was restricted to teaching fellows who had received at least 5 student ratings. First, descriptive analyses were performed on the entire dataset. Second, the sample was split into subsamples of students who had been supervised by the same teaching fellow. Mean values and standard deviations were calculated for the 18 general TECT items (questionnaire part 1). Following the calculation of z scores, these were averaged across items, thus producing one “teaching skills z score” per teaching fellow (outcome measure 1). CSA Gain for the six specific learning objectives was calculated at the level of individual students, averaged across all students supervised by one particular teaching fellow, converted to z scores and averaged across learning objectives, thus producing one “CSA Gain z score” per teaching fellow (outcome measure 2). The differences between the then-ratings and post-ratings for the learning outcomes were assessed using a Wilcoxon signed rank test and the effect sizes were calculated as Cohen’s d.15
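The aggregation described above (averaging each item per fellow, z-standardising those means across fellows, then averaging across items) might look as follows. This is a sketch under assumptions: the article does not state whether sample or population standard deviations were used, and the helper names are hypothetical. The same two helpers can be applied to per-student CSA Gain values, with one column per learning objective, to obtain the CSA Gain z score.

```python
from statistics import mean, stdev

MIN_RATINGS = 5  # fellows with fewer student ratings were excluded


def fellow_means(ratings_by_fellow, min_ratings=MIN_RATINGS):
    """Average each item (or learning objective) across one fellow's students.

    ratings_by_fellow maps a non-identifiable fellow code to a list of
    per-student rows, each row holding one value per item.
    """
    return {
        code: [mean(column) for column in zip(*rows)]
        for code, rows in ratings_by_fellow.items()
        if len(rows) >= min_ratings
    }


def averaged_z_scores(means_by_fellow):
    """z-standardise each item across fellows, then average per fellow.

    Requires at least two fellows, since stdev() needs two data points.
    """
    codes = list(means_by_fellow)
    items = zip(*(means_by_fellow[c] for c in codes))  # one tuple per item
    z_by_item = []
    for values in items:
        mu = mean(values)
        sd = stdev(values)  # sample SD across fellows (assumption)
        # An item on which all fellows score the same cannot separate them.
        z_by_item.append([0.0 if sd == 0 else (v - mu) / sd for v in values])
    # Regroup the z values per fellow and average across items.
    return {c: mean(zs) for c, zs in zip(codes, zip(*z_by_item))}


if __name__ == "__main__":
    data = {  # hypothetical two-item ratings; fellow D has too few ratings
        "A": [[5, 4]] * 5,
        "B": [[3, 2]] * 5,
        "C": [[4, 3]] * 5,
        "D": [[5, 5]] * 2,
    }
    print(averaged_z_scores(fellow_means(data)))
```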

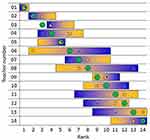

With regard to the second aim of this study, teaching fellows were ranked according to their z scores, with different weights assigned to the two components. Five z score weightings were used to arrive at five different rankings: (i) teaching skills z score only; (ii) teaching skills: CSA Gain = 3:1; (iii) teaching skills: CSA Gain = 1:1; (iv) teaching skills: CSA Gain = 1:3; (v) CSA Gain only.
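The five rankings can be sketched as weighted means of the two z scores per fellow. The weighted-mean form, the tie handling and all names and values below are assumptions for illustration; dividing by the sum of the weights does not change the order, it only keeps the combined score on the z scale.

```python
# Hypothetical labels for the five weightings (teaching skills : CSA Gain).
WEIGHTINGS = {
    "teaching skills only": (1, 0),
    "3:1": (3, 1),
    "1:1": (1, 1),
    "1:3": (1, 3),
    "CSA Gain only": (0, 1),
}


def rank_fellows(skills_z, csa_z, w_skills, w_csa):
    """Return {fellow: rank}, rank 1 = highest weighted mean of the two z scores."""
    total = w_skills + w_csa
    combined = {
        code: (w_skills * skills_z[code] + w_csa * csa_z[code]) / total
        for code in skills_z
    }
    # sorted() is stable, so tied fellows keep their input order (assumption).
    ordered = sorted(combined, key=combined.get, reverse=True)
    return {code: rank for rank, code in enumerate(ordered, start=1)}


if __name__ == "__main__":
    skills_z = {"A": 1.0, "B": 0.5, "C": -1.5}  # hypothetical z scores
    csa_z = {"A": -1.0, "B": 0.2, "C": 0.8}
    for label, (w_s, w_c) in WEIGHTINGS.items():
        print(label, rank_fellows(skills_z, csa_z, w_s, w_c))
```

In the hypothetical demo, fellow A moves from first place to last as the weight shifts from teaching skills to CSA Gain, which mirrors the kind of rank instability the study reports.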

Ethics approval was obtained from the Medical Education Ethics Committee at Imperial College London (application number MEEC1718‐84). All participating students provided written consent to have their data analysed. No personal data were collected on the teaching fellows; each fellow was assigned a non-identifiable code during data collection, and these codes were used to match data obtained from students supervised by the same teaching fellow.

Results

Student and Teacher Sample

A total of 181 students were eligible for study participation, and 146 (81%) provided data on 18 of 25 teaching fellows (72%). Four fellows were rated by fewer than 5 students; thus, data analysis was restricted to 14 fellows who had received ratings from a total of 133 students. The number of students providing ratings for individual teaching fellows ranged from 5 (3 teaching fellows) to 14 (1 teaching fellow), with a median of 9 completed questionnaires per teaching fellow.

Overall Results

Table 1 presents the results of the descriptive analysis of the 18 items addressing general teaching skills in the overall sample. While there was evidence of a ceiling effect for some items (eg, teaching fellows treating students with respect and expressing themselves in an understandable way), the data indicated room for improvement in other areas (eg, opportunities to examine patients; clinical application of theoretical knowledge).

Overall CSA Gain results are shown in Table 2. Initial performance levels were favourable (mostly >4 on a 6-point scale). Significant increases in student self-assessments were found when the then- and post-ratings were compared (p<0.0001). However, owing to relatively high initial performance levels and suboptimal post-ratings (on average 5 out of 6), these increments represented moderate CSA Gain values with particularly low learning outcome (<40%) for nervous system examination skills and for the interpretation of examination findings.
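The then-/post-rating comparison reported here relies on a Wilcoxon signed-rank test, with Cohen’s d as the effect size (see Data Analysis). The following standard-library sketch uses the normal approximation with a tie-corrected variance and no continuity correction; it is not SPSS’s implementation. The effect-size function uses the d_av variant (mean change divided by the root mean square of the two standard deviations), one of several paired forms of Cohen’s d, because the article does not say which variant was used. Function names and data are hypothetical.

```python
import math
from statistics import mean, stdev


def wilcoxon_signed_rank(then_ratings, post_ratings):
    """Two-sided Wilcoxon signed-rank test using the normal approximation.

    Zero differences are dropped, tied absolute differences get average
    ranks and the variance is tie-corrected; no continuity correction.
    Returns (W_plus, z, p).
    """
    diffs = [post - then for then, post in zip(then_ratings, post_ratings)]
    diffs = [d for d in diffs if d != 0]
    n = len(diffs)
    if n == 0:
        raise ValueError("all differences are zero")
    order = sorted(range(n), key=lambda k: abs(diffs[k]))
    ranks = [0.0] * n
    tie_term = 0
    i = 0
    while i < n:  # walk over groups of equal absolute differences
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1  # 1-based average rank of the tie group
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        t = j - i + 1
        tie_term += t**3 - t
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    expected = n * (n + 1) / 4
    variance = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (w_plus - expected) / math.sqrt(variance)
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return w_plus, z, p


def paired_d_av(then_ratings, post_ratings):
    """Mean change divided by the root mean square of the two SDs (d_av)."""
    sd_av = math.sqrt((stdev(then_ratings) ** 2 + stdev(post_ratings) ** 2) / 2)
    return (mean(post_ratings) - mean(then_ratings)) / sd_av


if __name__ == "__main__":
    then = [3, 4, 4, 5, 3, 4, 2, 4]  # hypothetical then-ratings
    post = [5, 5, 5, 5, 4, 6, 4, 5]  # hypothetical post-ratings
    print(wilcoxon_signed_rank(then, post), paired_d_av(then, post))
```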

With regard to the first aim of this study, we found that student evaluations of the fellows’ teaching skills were generally positive while student learning outcome was at best moderate.

Teacher-Specific Results

Student ratings for individual items in part 1 of the questionnaire revealed considerable performance differences between teaching fellows. Students supervised by one fellow did not agree that they had had sufficient opportunities to examine patients during the sessions (2.77 ± 0.93 on a 5-point scale), while the same item received very positive ratings from a group supervised by a different teaching fellow (4.60 ± 0.55). When comparing the same two teaching fellows, the opposite pattern was observed for the item referring to respectful behaviour (4.00 ± 0.82 for the former and 3.20 ± 0.84 for the latter teaching fellow).

There was also considerable variability in student learning outcome across teachers. For example, CSA Gain for cardiovascular system examination skills ranged from 19.2 ± 50.0% to 75.6 ± 43.3%. More importantly, CSA Gain also varied considerably across learning objectives within the same student group: in one group supervised by a particular teaching fellow, learning outcome for respiratory examination skills was the lowest observed in all 14 groups (13.3 ± 29.8%), while the same group showed the highest learning outcome for history-taking skills (73.3 ± 43.5%) of all groups.

Teacher Rankings

In order to examine whether teachers who had received more favourable ratings on general teaching skills also elicited more favourable learning outcome in their students, teaching fellows were ranked according to a number of different algorithms assigning various weights to the two components (Figure 1).

While one teacher was ranked #1 regardless of the algorithm used, there was great variability in rankings for individual teachers, depending on how much weight was assigned to teaching skills or student learning outcome, respectively. For example, one teacher was ranked #13 of 14 when their performance was solely based on student ratings on the first part of the questionnaire (teaching skills) but ranked #5 when rankings were solely informed by student learning outcome.

Thus, with regard to the second aim of this study, we found considerable differences in teacher rankings depending on the weighting of the two components of teaching quality.

Discussion

Bedside teaching is one of the most important clinical teaching modalities, as it provides students with authentic opportunities to learn and to receive feedback on clinical skills, decision-making, communication and professionalism under observation.16–18

In this study, clinical teaching fellows were evaluated with regard to their bedside teaching skills as well as learning outcome of the students they had supervised. While student ratings on items related to teaching skills were largely positive, CSA Gain was low to moderate for all learning objectives assessed. In addition to this discrepancy between the two outcomes, the way the two components were weighted had considerable impact on teacher rankings.

These findings raise a number of questions about the validity and fairness of using student satisfaction ratings to guide the evaluation of individual teaching performance. Apart from the fact that student ratings may be confounded by various factors unrelated to the construct underlying a particular evaluation,19,20 questionnaires focussing on teaching skills alone may not adequately reflect teachers’ abilities to help their students master relevant learning objectives. While adherence to general principles of good clinical teaching5 is desirable, it does not appear to guarantee favourable learning outcome. Consequently, learning outcome should also be considered when evaluation data are used to inform career decisions.4 In this study, various weights were assigned to the two components of teaching quality, and the results illustrate how the choice of algorithm affects teacher rankings. Our findings may help programme directors make informed choices about the design of teacher evaluations at their respective institutions.

At the level of individual teachers, the feedback generated with the combined evaluation tool used in this study may help identify specific strengths and weaknesses; the latter could be remedied by targeted faculty development or mentorship programmes. Teacher underperformance in specific areas (eg, student learning outcome with regard to nervous system examination skills) can only be detected if these areas are specifically addressed on evaluation forms. For individual evaluation results to be meaningful in this way, medical schools need to make considerable efforts to map specific learning objectives onto particular teaching formats (and the teachers involved in these). The overall analysis of CSA Gain in the present study revealed that, across all six learning objectives, the performance increase measured by student self-assessments was modest. This may be because initial student performance levels were already quite favourable, leaving room for only relatively small increments. It would be interesting to run the evaluation in earlier clinical attachments, when initial performance levels are expected to be lower.

Strengths and Limitations

We used validated tools to combine student ratings of clinical teacher performance with student learning outcome data in order to critically appraise individual teaching performance. Medical students as well as experts in medical education research were involved in the development and translation of the questionnaire. Response rates were favourable, and study participants were drawn from several teaching hospitals, thereby increasing the representativeness of the data and the generalisability of our findings. At the same time, future studies should include a larger sample of teachers with greater variability in student ratings of teaching skills in order to conduct in-depth psychometric analyses and to further validate the approach of combining general teaching skills ratings with student learning outcome data. We cannot exclude the possibility that social desirability bias affected student ratings of teacher performance; however, students were assured that data collection was strictly anonymous, and any bias of this type should have affected all teachers to the same extent. While learning outcome was not externally validated in this study, previous research has shown that learning outcome measured as CSA Gain is a good surrogate marker of actual learning outcome measured in objective exams8 and is largely unaffected by response shift bias or implicit theories of change.12 Comprehensive evaluations of teaching fellows should factor in, but not be restricted to, student ratings.21 Only two dimensions of teaching quality were assessed in this study, whereas a number of additional sources could be used to generate 360° feedback. Future studies should try to identify other useful combinations of evaluation tools that can be easily implemented and are acceptable to students in terms of the time spent on evaluation.

Conclusion

Our results indicate that the two parts of the questionnaire address different outcomes and thus highlight specific strengths and weaknesses of individual teachers. The feedback generated by this method could help individual teachers select faculty development activities aligned with their individual needs. Combining different concepts in teacher evaluation may yield richer information for programme directors and result in a fairer appraisal of teacher performance.

Abbreviations

CSA, comparative self-assessment; IC, Imperial College; OSCE, objective structured clinical examination; S-FDP, Stanford Faculty Development Programme; SPSS, Statistical Package for the Social Sciences; TECT, Teacher Evaluation form for Clinical Teaching.

Data Sharing Statement

The datasets used in the current study are available from the corresponding author on reasonable request.

Ethics Approval and Consent to Participate

Ethics approval was obtained from the Medical Education Ethics Committee at Imperial College London (application number MEEC1718‐84). All participating students provided written consent to have their data analysed.

Consent for Publication

Not applicable: this manuscript does not contain any individual person’s data. Thus, consent for publication was not needed.

Acknowledgments

We would like to thank all clinical teachers and medical students who devoted their time to this study, particularly by helping with the forward and backward translation of the questionnaire as well as the cognitive debriefing of the English questionnaire version.

Author Contributions

All authors contributed to data analysis, drafting or revising the article, gave final approval of the version to be published, agreed to the submitted journal, and agree to be accountable for all aspects of the work.

Funding

There was no funding for this study.

Disclosure

The authors declare no conflicts of interest.

References

1. Chen BY, Kern DE, Hughes MT, Thomas PA, editors. Curriculum Development for Medical Education: A Six-Step Approach. Baltimore: Johns Hopkins University Press; 2016.

2. Gibson KA, Boyle P, Black DA, Cunningham M, Grimm MC, McNeil HP. Enhancing evaluation in an undergraduate medical education program. Acad Med. 2008;83:787–793. doi:10.1097/ACM.0b013e31817eb8ab

3. Schiekirka-Schwake S, Dreiling K, Pyka K, Anders S, von Steinbüchel N, Raupach T. Improving evaluation at two medical schools. Clin Teach. 2018;15(4):314–318. doi:10.1111/tct.12686

4. Fluit CRMG, Bolhuis S, Grol R, Laan R, Wensing M. Assessing the quality of clinical teachers: a systematic review of content and quality of questionnaires for assessing clinical teachers. J Gen Intern Med. 2010;25(12):1337–1345. doi:10.1007/s11606-010-1458-y

5. Litzelman DK, Westmoreland GR, Skeff KM, Stratos GA. Factorial validation of an educational framework using residents’ evaluations of clinician-educators. Acad Med. 1999;74(10):S25–S27. doi:10.1097/00001888-199910000-00030

6. Skeff KM. Enhancing teaching effectiveness and vitality in the ambulatory setting. J Gen Intern Med. 1988;3(S1):S26–S33. doi:10.1007/BF02600249

7. Beckman TJ, Lee MC, Rohren CH, Pankratz VS. Evaluating an instrument for the peer review of inpatient teaching. Med Teach. 2003;25(2):131–135. doi:10.1080/0142159031000092508

8. Schiekirka S, Reinhardt D, Beißbarth T, Anders S, Pukrop T, Raupach T. Estimating learning outcomes from pre- and posttest student self-assessments: a longitudinal study. Acad Med. 2013;88(3):369–375. doi:10.1097/ACM.0b013e318280a6f6

9. Dreiling K, Montano D, Poinstingl H, et al. Evaluation in undergraduate medical education: conceptualizing and validating a novel questionnaire for assessing the quality of bedside teaching. Med Teach. 2017;39(8):820–827. doi:10.1080/0142159X.2017.1324136

10. Wild D, Grove A, Martin M, et al. Principles of good practice for the translation and cultural adaptation process for Patient-Reported Outcomes (PRO) measures: report of the ISPOR task force for translation and cultural adaptation. Value Health. 2005;8(2):94–104. doi:10.1111/j.1524-4733.2005.04054.x

11. Raupach T, Schiekirka S, Münscher C, et al. Piloting an outcome-based programme evaluation tool in undergraduate medical education. GMS Z Med Ausbild. 2012;29:Doc44.

12. Schiekirka S, Anders S, Raupach T. Assessment of two different types of bias affecting the results of outcome-based evaluation in undergraduate medical education. BMC Med Educ. 2014;14(1):149. doi:10.1186/1472-6920-14-149

13. Kruger J, Dunning D. Unskilled and unaware of it: how difficulties in recognizing one’s own incompetence lead to inflated self-assessments. J Pers Soc Psychol. 1999;77(6):1121–1134. doi:10.1037/0022-3514.77.6.1121

14. Raupach T, Münscher C, Beissbarth T, Burckhardt G, Pukrop T. Towards outcome-based programme evaluation: using student comparative self-assessments to determine teaching effectiveness. Med Teach. 2011;33(8):e446–e453. doi:10.3109/0142159X.2011.586751

15. Cohen J. A power primer. Psychol Bull. 1992;112(1):155–159. doi:10.1037/0033-2909.112.1.155

16. K Ahmed ME. What is happening to bedside clinical teaching? Med Educ. 2002;36(12):1185–1188. doi:10.1046/j.1365-2923.2002.01372.x

17. Gonzalo JD, Heist BS, Duffy BL, et al. The value of bedside rounds: a multicenter qualitative study. Teach Learn Med. 2013;25(4):326–333. doi:10.1080/10401334.2013.830514

18. Peters M, ten Cate O. Bedside teaching in medical education: a literature review. Perspect Med Educ. 2014;3:76–88. doi:10.1007/s40037-013-0083-y

19. Hessler M, Pöpping DM, Hollstein H, et al. Availability of cookies during an academic course session affects evaluation of teaching. Med Educ. 2018;52:1064–1072. doi:10.1111/medu.13627

20. Schiekirka S, Raupach T. A systematic review of factors influencing student ratings in undergraduate medical education course evaluations. BMC Med Educ. 2015;15:30. doi:10.1186/s12909-015-0311-8

21. Watson RS, Borgert AJ, O’Heron CT, et al. A multicenter prospective comparison of the Accreditation Council for Graduate Medical Education milestones: clinical competency committee vs. resident self-assessment. J Surg Educ. 2017;74(6):e8–e14. doi:10.1016/j.jsurg.2017.06.009

© 2021 The Author(s). This work is published and licensed by Dove Medical Press Limited. The full terms of this license are available at https://www.dovepress.com/terms.php and incorporate the Creative Commons Attribution - Non Commercial (unported, v3.0) License.

By accessing the work you hereby accept the Terms. Non-commercial uses of the work are permitted without any further permission from Dove Medical Press Limited, provided the work is properly attributed. For permission for commercial use of this work, please see paragraphs 4.2 and 5 of our Terms.
