Advances in Medical Education and Practice, Volume 9

Specialty Training’s Organizational Readiness for curriculum Change (STORC): validation of a questionnaire

Authors: Bank L, Jippes M, Leppink J, Scherpbier AJ, den Rooyen C, van Luijk SJ, Scheele F

Received 11 July 2017

Accepted for publication 4 December 2017

Published 31 January 2018 Volume 2018:9 Pages 75–83

DOI https://doi.org/10.2147/AMEP.S146018

Checked for plagiarism Yes

Review by Single anonymous peer review


Editor who approved publication: Dr Md Anwarul Azim Majumder

Lindsay Bank,1,2 Mariëlle Jippes,3 Jimmie Leppink,4 Albert JJA Scherpbier,4 Corry den Rooyen,5 Scheltus J van Luijk,6 Fedde Scheele1,2,7

1Department of Healthcare Education, OLVG Hospital, 2Faculty of Earth and Life Sciences, Athena Institute for Transdisciplinary Research, VU University, Amsterdam, 3Department of Plastic Surgery, Erasmus Medical Center, Rotterdam, 4Faculty of Health, Medicine and Life Sciences, School of Health Professions Education, Maastricht University, Maastricht, 5Movation BV, Maarssen, 6Department of Healthcare Education, Maastricht University Medical Center+, Maastricht, 7School of Medical Sciences, Institute for Education and Training, VU University Medical Center, Amsterdam, the Netherlands

Background: The field of postgraduate medical education (PGME) is continuously evolving as a result of social demands and advancing educational insights. Change experts contend that organizational readiness for change (ORC) is a critical precursor for successful implementation of change initiatives. However, in PGME, change readiness is rarely assessed, although doing so could be of great value for managing educational change such as curriculum change. Therefore, in a previous Delphi study the authors developed an instrument for assessing ORC in PGME: Specialty Training’s Organizational Readiness for curriculum Change (STORC). In this study, the psychometric properties of this questionnaire were further explored.

Methods: In 2015, STORC was distributed among clinical teaching teams in the Netherlands. The authors conducted a confirmatory factor analysis on the internal factor structure of STORC. The reliability of the measurements was estimated by calculating Cronbach’s alpha for all subscales. Additionally, a behavioral support-for-change measure was distributed to assess correlations with change-related behavior.

Results: In total, the STORC questionnaire was completed by 856 clinical teaching team members from 39 specialties. Factor analysis led to the removal of 1 item but supported the expected factor structure, with very good fit for the remaining 43 items. Supportive behavior was positively correlated with a higher level of ORC.

Discussion: In this study, additional steps to collect validity evidence for the STORC questionnaire were taken successfully. The final subscales of STORC represent the core components of ORC in the literature. By breaking down this concept into multiple measurable aspects, STORC could help educational leaders diagnose possible hurdles in implementation processes and perform specifically targeted interventions when needed.

Keywords: organizational readiness for change, postgraduate medical education, curriculum change, questionnaire development, change management

Introduction

For decades, the study of change, and in particular change readiness, has been an important research topic in the social sciences, because implementing change is notoriously challenging and success rates are low.1–3 Moreover, interest in this subject is still growing in light of increasing environmental complexity, which requires organizations to adapt rapidly to external changes in order to survive.1 This is also true for health care systems, which need to adapt rapidly to respond properly to changing societal needs, new public-health policies, and technological advances.4 Health care systems are, in addition, themselves becoming increasingly complex.5 Consequently, the field of medical education is also continuously evolving, driven by external changes6 as well as by advancing educational insights.7–9 Nevertheless, attention to the change readiness of health care organizations has emerged only recently.2,10 In particular, establishing change readiness as part of a curriculum change has rarely been considered,2,11–14 although it could be of great value for this field. Given the substantial time, energy, and resources invested in implementing curriculum changes, greater knowledge of organizational readiness for change (ORC), together with a valid tool to assess it, could make these implementation efforts more effective and, ultimately, improve health care quality.2

Change readiness is the most frequently studied positive attitude toward change in the organizational literature.1 Change experts agree that ORC is a critical precursor for successful implementation of change initiatives.3,15 Indeed, it has been suggested that failure to establish sufficient readiness for change accounts for half of all unsuccessful organizational change efforts.3 In other words, for change to take place in the desired direction, a state of readiness must first be achieved. ORC is a comprehensive construct that collectively reflects the extent to which members of an organization are inclined to accept, embrace, and adopt a particular change initiative to purposefully alter the status quo,16 and it provides the foundation for either resistant or adoptive behaviors.13 These behaviors range from active and passive resistance (overt or subtle behaviors intended to ensure that the change fails), through compliance, to willingness to make sacrifices and enthusiastically promoting the change to others.17

Not surprisingly, the relevance assigned to ORC has resulted in the development of multiple instruments for assessing this concept.2,13 In recent years, instruments for assessing ORC in health care have also been developed, with a predominant focus on the implementation of new policies and clinical practices.2,10,13,18 The assessment of ORC prior to curriculum change has recently been introduced in undergraduate medical education as well.11,14

However, postgraduate medical education (PGME) is also continuously evolving and could potentially benefit from a similar instrument to help facilitate the implementation of curriculum innovation; such an instrument is currently lacking in this field.12

PGME is a unique setting in which patient care, teaching, and learning are interconnected and cannot be separated.19 In teaching hospitals, PGME is fully integrated into clinical service. Therefore, any adjustment of the educational system will influence clinical service and could have consequences for working schedules, funding, and learning experiences.19 These implications of curriculum reforms justify an in-depth analysis of how such reforms are implemented, and the uniqueness of this setting calls for an ORC instrument adapted to PGME. The instruments available in undergraduate medical education cannot fill this gap, among other reasons because they focus on medical faculties, which have long-standing hierarchical structures and face a more diverse set of pressures to change, while largely neglecting students.11,12 In PGME, by contrast, trainees play a more prominent role in change processes within smaller clinical teaching teams, whose composition also tends to be more volatile.12

The assessment of ORC enables educational leaders (ie, program directors) to identify significant gaps between their own expectations and those of the other members of their clinical teaching team (trainees and clinical staff) and subsequently to act on these gaps in order to prevent stagnation or even failure of the change implementation.13

Therefore, we developed an instrument to assess ORC in PGME, the so-called Specialty Training’s Organizational Readiness for curriculum Change (STORC) questionnaire, using a Delphi study.12 The aim of the present study was to further explore the psychometric properties of STORC.

Methods

Conceptual model

We used the conceptual model of Holt et al16 to guide the development of STORC.12 Holt’s model defines ORC by combining psychological factors, which reflect the extent to which members of an organization are inclined to accept and implement a change, with structural factors, which reflect the extent to which the circumstances under which the change occurs either enhance or inhibit its acceptance and implementation. In other words, these factors can function either as facilitators of or as barriers to change. For instance, with regard to “management support,” implementation is accelerated by the presence of good leaders who are seen as role models or entrepreneurs, but can be slowed down by their absence.20

Because ORC is a multifaceted construct, it can only be captured by breaking it down into measurable core components, such as “collective commitment” (the shared belief and resolve to pursue courses of action that will lead to successful change implementation) and “support climate” (an intangible, encouraging environment that supports the implementation of a change). As a third dimension of this framework, Holt added the level at which the analysis takes place, that is, the individual or the organizational level.16 In contrast to Holt et al, we focused on assessing ORC in PGME at the organizational rather than the individual level, that is, at the level of the clinical teaching team, which consists of a program director, clinical staff members, and trainees. Therefore, the following definition of ORC was adopted: “the degree to which clinical teaching team members are motivated and capable to implement curriculum change.” In this definition, “motivated” mainly refers to the psychological factors, whereas “capable” refers to the structural factors.12

Delphi study

As the first step toward developing the questionnaire, we conducted a Delphi study with an international panel of experts. In this procedure, an 89-item preliminary questionnaire adapted from existing ORC questionnaires for business and health care organizations was further tailored to PGME.12 The Delphi study was completed after 2 rounds and resulted in a questionnaire comprising 44 items divided into 10 subscales.12 After this Delphi procedure, both the items and the accompanying instructions, as they would be presented to participants, were checked for redundancy, phrasing, and intelligibility by 6 other researchers (5 medical doctors and 1 educationalist) and 1 layperson. This check led to textual changes only.

Setting and selection of participants

The Dutch PGME training programs have recently been modernized according to the competency-based framework of CanMEDS.21,22 This nationwide effort created a suitable setting for collecting empirical data to test the psychometric properties of STORC. All clinical teaching teams registered at the Dutch Federation of Medical Specialists were eligible for participation.

In the Netherlands, all teaching hospitals have a separate educational department that supports and assists the clinical departments with their educational tasks (ie, the training of different health care professionals, such as medical students, doctors, nurses, and laboratory staff). Between February 2015 and November 2015, we contacted these educational departments and asked them to discuss our study with their educational board, which comprises at least one representative (always including the program director) of each clinical teaching team involved in PGME. If the educational board of a hospital agreed to participate, we sent an official invitation to all program directors of that hospital. Additionally, we sent a direct invitation to the program directors within our own network. The program directors were then responsible for inviting the other members of their clinical teaching team (ie, trainees and clinical staff members) to participate. Because of this recruitment method (snowball sampling), the total number of doctors invited by the program directors is unknown to the authors.

The invitation email included an informative letter explaining the purpose of our study, the voluntary nature of participation, and the confidentiality of the contributions. The email also contained a link to the web-based questionnaire. Besides STORC, the questionnaire included an informed consent form, general questions regarding gender, age, level of training, and years registered as a medical specialist, and an instrument to measure change-related behavior.17 During the study period, several reminders to complete the questionnaire were sent to the program directors of the participating clinical teaching teams.

Ethical approval

This study was approved by the Ethical Review Board of the Dutch Association for Medical Education (NVMO). Participation was voluntary and confidentiality of the contributions was guaranteed. Written informed consent was obtained from all participants for this study.

Materials

STORC12

All participants were asked to rate their agreement with the 44 items of STORC on a 5-point Likert scale (1 = strongly disagree, 5 = strongly agree). Alternatively, they had the option to choose “not applicable.” All items are group-referenced (eg, “we need to improve…”) rather than self-referenced (eg, “I’m willing to innovate…”) in order to focus on the organizational level of readiness for change. For the purpose of this study, participants were instructed that “this change” and “this innovation” in the questionnaire items referred to the introduction of CanMEDS in PGME. Based on our conceptual model, we expected the 10 subscales of STORC to be positively related to each other.
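The article does not specify how subscale scores are computed or how “not applicable” answers are handled. The following is a minimal sketch, assuming that a subscale score is the mean of the items a respondent actually answered, that “not applicable” is treated as missing (encoded here as `None`), and that a team’s score is the mean of its members’ subscale scores. The function names and the `min_answered` threshold are hypothetical.

```python
from statistics import mean
from typing import Optional, Sequence

# Hypothetical helpers: the article does not describe its scoring procedure.
# Assumption: a "not applicable" answer is encoded as None and treated as missing.
Response = Optional[int]


def subscale_score(responses: Sequence[Response], min_answered: int = 1) -> Optional[float]:
    """Mean of the 1-5 Likert answers on one subscale, ignoring None.

    Returns None if fewer than `min_answered` items were answered
    (a hypothetical threshold, not taken from the article).
    """
    answered = [r for r in responses if r is not None]
    for r in answered:
        if not 1 <= r <= 5:
            raise ValueError(f"Likert answer out of range: {r}")
    if len(answered) < min_answered:
        return None
    return float(mean(answered))


def team_score(member_scores: Sequence[Optional[float]]) -> Optional[float]:
    """Mean subscale score across the members of one clinical teaching team."""
    valid = [s for s in member_scores if s is not None]
    return float(mean(valid)) if valid else None


# Three team members answering a hypothetical 4-item subscale
team = [[4, 5, None, 3], [2, 3, 3, 4], [None, None, None, None]]
scores = [subscale_score(member) for member in team]
print(scores)              # [4.0, 3.0, None]
print(team_score(scores))  # 3.5
```

Treating “not applicable” as missing, rather than as a neutral 3, keeps such answers from pulling a team’s score toward the middle of the scale; other conventions are possible.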

Behavioral support-for-change17

Besides STORC, a measure of change-related behavior was administered, adding a variable expected to be theoretically related to ORC in order to strengthen the validation of STORC. This “behavioral support-for-change” measure consists of a 101-point behavioral continuum reflecting 5 types of resistance and support behavior, shown along the continuum as follows: active resistance (score 0–20), passive resistance (score 21–40), compliance (score 41–60), cooperation (score 61–80), and championing (score 81–100).17 A written description of each of these behaviors was provided. Participants were asked to indicate the score that best represented their own reaction, as well as the score that best represented their clinical teaching team’s reaction, to the introduction of competency-based medical education, yielding 2 separate scores. Based on the current literature, high ORC is expected to result in more change-supportive behavior, whereas low ORC is expected to result in resistant behavior. We therefore hypothesized that high scores on the separate subscales (reflecting high ORC) would correlate with high scores on the behavioral support-for-change measure (reflecting change-supportive behavior).
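The band boundaries above translate directly into a lookup from a continuum score to its behavior type. The sketch below takes the bands from the text; the function name is hypothetical.

```python
# Behavior bands of the 101-point (0-100) behavioral support-for-change continuum,
# as listed in the text. behavior_type is a hypothetical helper name.
BANDS = (
    (0, 20, "active resistance"),
    (21, 40, "passive resistance"),
    (41, 60, "compliance"),
    (61, 80, "cooperation"),
    (81, 100, "championing"),
)


def behavior_type(score: int) -> str:
    """Return the behavior type for an integer continuum score from 0 to 100."""
    if not 0 <= score <= 100:
        raise ValueError("score must lie between 0 and 100")
    for low, high, label in BANDS:
        if low <= score <= high:
            return label
    # Non-integer scores such as 20.5 fall between the bands
    raise ValueError("score must be an integer")


print(behavior_type(73))  # cooperation
```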

Statistical analysis

We conducted a confirmatory factor analysis (CFA) to test the factor structure of STORC, using our previous work12 to specify which items should load on the same factor (ie, be ordinal indicators of the same latent variable), with a required factor loading of >0.5. A priori, we assumed that the CFA would confirm the division of the STORC questionnaire into the 10 subscales that emerged from our Delphi study and that are rooted in theory.12 The following fit indices and criteria were used: root mean square error of approximation (RMSEA) <0.06, comparative fit index (CFI) >0.9, and Tucker–Lewis index (TLI) >0.9. To estimate the reliability of the measurements, we calculated Cronbach’s alpha for each subscale. We also estimated the standard error of measurement (SEM) for each subscale to determine the number of respondents needed to obtain a reliable score (SEM <0.26). The structural equation modeling software Mplus (version 7.3; Muthén & Muthén, Los Angeles, CA, USA) was used for conducting the CFA. For all other analyses, SPSS version 23 (IBM Corporation, Armonk, NY, USA) was used.
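The authors computed Cronbach’s alpha in SPSS. To show what the statistic measures, the sketch below implements the standard formula, alpha = k/(k − 1) × (1 − sum of item variances / variance of total scores), where k is the number of items in a subscale. It assumes complete responses (no “not applicable” answers) and is not the authors’ code.

```python
from statistics import variance
from typing import Sequence


# Standard Cronbach's alpha formula; a sketch, not the SPSS routine the authors used.
def cronbach_alpha(items: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha for one subscale.

    items[i][p] is the answer of participant p to item i; only complete
    cases are supported (every item has an answer from every participant).
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha requires at least 2 items")
    n = len(items[0])
    if n < 2 or any(len(item) != n for item in items):
        raise ValueError("each item needs answers from the same >= 2 participants")
    # Sum of the individual item variances (sample variances, n - 1 denominator)
    item_variance_sum = sum(variance(item) for item in items)
    # Variance of each participant's total score on the subscale
    totals = [sum(item[p] for item in items) for p in range(n)]
    total_variance = variance(totals)
    if total_variance == 0:
        raise ValueError("total scores have zero variance; alpha is undefined")
    return k / (k - 1) * (1 - item_variance_sum / total_variance)


print(round(cronbach_alpha([[1, 2, 3], [1, 3, 2]]), 3))  # 0.667
```

Because the same variance estimator is used for the items and for the totals, the choice between an n and an n − 1 denominator does not affect the result.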

Results

Participants

In total, 873 clinical teaching team members agreed to participate in this study. Three respondents were excluded because their specialty (medical psychology) is not registered with the Federation of Medical Specialists, so they did not meet our inclusion criteria. Nine respondents (1.0%) were excluded because information about their current hospital (7 respondents) or age (2 respondents) was missing. Six respondents (0.7%) were excluded because they were the only respondent from their hospital, which precluded investigating ORC at the level of their clinical teaching team. Two respondents (0.2%) were excluded because they did not complete the questionnaire.

As a result, 856 doctors (98.1% of the respondents) were included in this study: 297 (34.7%) trainees, 315 (36.8%) clinical staff members, and 244 (28.5%) program directors.

Altogether, the respondents represented 223 clinical teaching teams from 39 different specialties in 23 teaching hospitals, about one third of all teaching hospitals in the Netherlands. Respondents worked either at an academic medical center (49%) or at a nonacademic teaching hospital (51%).

CFA

One item from the subscale “pressure to change,” “current pressure to implement this innovation in residency training comes from external authorities” (originally item 4), had to be removed because its loading on the expected factor, “pressure to change,” was below 0.5 (−0.173) and it did not load well on any other factor either. All other items loaded on their factors as expected. As a result, the adapted STORC questionnaire consists of 43 items divided into 10 subscales (Table 1).
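The retention and fit criteria from the Methods can be expressed as two simple checks. This sketch is illustrative only. The CFA itself was run in Mplus, and the fit values in the usage lines are hypothetical, since the final model’s fit is reported in a table footnote not reproduced here. Only the −0.173 loading of original item 4 comes from the text. The loading rule is applied to the raw loading, so a negative loading also fails it. All names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Mapping

# Cut-offs stated in the Methods; the helper names below are hypothetical.
LOADING_MIN = 0.5   # items must load > 0.5 on their expected factor
RMSEA_MAX = 0.06    # root mean square error of approximation
CFI_MIN = 0.9       # comparative fit index
TLI_MIN = 0.9       # Tucker-Lewis index


@dataclass
class FitIndices:
    rmsea: float
    cfi: float
    tli: float


def acceptable_fit(fit: FitIndices) -> bool:
    """True when all three fit indices meet the stated criteria."""
    return fit.rmsea < RMSEA_MAX and fit.cfi > CFI_MIN and fit.tli > TLI_MIN


def items_below_cutoff(loadings: Mapping[str, float]) -> List[str]:
    """Items whose raw loading on their expected factor does not exceed the cut-off."""
    return [item for item, loading in loadings.items() if not loading > LOADING_MIN]


# Only the -0.173 loading is from the article; the other values are hypothetical
print(items_below_cutoff({"original item 4": -0.173, "hypothetical item": 0.71}))
# ['original item 4']
print(acceptable_fit(FitIndices(rmsea=0.04, cfi=0.95, tli=0.94)))  # True
```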

Table 2 provides the means and standard deviations of the items, along with the item loadings per factor and, in its footnote, the fit statistics of the final model (Mplus version 7.3) as well as Cronbach’s alpha values per factor (SPSS version 23). The alpha values ranged from 0.544 to 0.877 across factors. Table 2 also shows the number of respondents needed for an SEM of ≤0.26, namely 5–8 respondents (SPSS version 23).
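The article does not give the formula behind the 5–8 respondents. One common simplification, assumed here, treats the SEM of a team’s mean subscale score as SD/√n, where SD is the spread of individual scores and n is the number of respondents. Under this assumption, the smallest n with SD/√n ≤ 0.26 is the required number of respondents. Read this way, 1.96 × 0.26 ≈ 0.51, so the threshold corresponds to a 95% confidence interval of roughly ±0.5 scale points around a team mean. The SD values below are illustrative rather than taken from Table 2, and the authors’ SPSS procedure may have used a different variance estimate.

```python
import math


def respondents_needed(sd: float, max_sem: float = 0.26) -> int:
    """Smallest n for which sd / sqrt(n) <= max_sem.

    Assumption: the SEM of a team mean is approximated by sd / sqrt(n).
    This is a simplification, not the article's documented method.
    """
    if sd < 0 or max_sem <= 0:
        raise ValueError("sd must be >= 0 and max_sem > 0")
    n = 1
    # A small tolerance guards against floating-point noise at exact boundaries
    while sd / math.sqrt(n) > max_sem + 1e-12:
        n += 1
    return n


for sd in (0.55, 0.65, 0.72):  # illustrative standard deviations
    print(sd, respondents_needed(sd))
# 0.55 5
# 0.65 7
# 0.72 8
```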

Table 3 reports the correlations between factors (Mplus version 7.3). All factors were positively correlated with each other, with the exception of factor 3.

Table 3 Factor–factor correlations of STORC resulting from the confirmatory factor analysis reported in Table 2. Note: Correlations of “0” have been fixed to zero. Abbreviation: STORC, Specialty Training’s Organizational Readiness for curriculum Change.

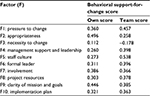

Behavioral support-for-change

An individual behavioral support-for-change score was given by 825 (96.4%) doctors, and a clinical teaching team score by 824 (96.3%) doctors. Table 4 provides the correlations between each factor and each of the 2 behavioral support-for-change scores. Again, all factors except factor 3 were positively correlated with the behavioral support-for-change measure. In other words, a higher score on this continuum (supportive behavior) was associated with a higher level of ORC as measured by STORC, and a lower score (resistant behavior) with a lower level of ORC.
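Because 31 and 32 of the 856 respondents, respectively, did not give a behavioral score, any correlation with these scores has to handle missing values. The following is a minimal sketch of an observed-score Pearson correlation with pairwise deletion. It assumes that subscale scores and behavioral scores are stored per respondent, with `None` for missing values. The article does not state whether Table 4 reports observed or model-based correlations, so the sketch is illustrative only, and the function name is hypothetical.

```python
import math
from typing import Optional, Sequence


# Sketch of an observed-score correlation; not necessarily how Table 4 was computed.
def pearson_pairwise(x: Sequence[Optional[float]], y: Sequence[Optional[float]]) -> float:
    """Pearson r over respondents who have both values (pairwise deletion)."""
    if len(x) != len(y):
        raise ValueError("x and y must describe the same respondents")
    pairs = [(a, b) for a, b in zip(x, y) if a is not None and b is not None]
    if len(pairs) < 2:
        raise ValueError("need at least 2 complete pairs")
    mean_x = sum(a for a, _ in pairs) / len(pairs)
    mean_y = sum(b for _, b in pairs) / len(pairs)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in pairs)
    sxx = sum((a - mean_x) ** 2 for a, _ in pairs)
    syy = sum((b - mean_y) ** 2 for _, b in pairs)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant variable")
    return sxy / math.sqrt(sxx * syy)


# Hypothetical data: subscale scores (1-5) and individual behavioral scores (0-100)
subscale = [3.5, 4.0, 2.5, 4.5, 3.0]
behavior = [65, 78, None, 90, 50]
print(round(pearson_pairwise(subscale, behavior), 2))
```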

Discussion

The aim of this study was to further develop and validate the STORC questionnaire using a stepwise approach comprising a conceptual model, a Delphi procedure,12 CFA, reliability analysis, and the inclusion of a theoretically related variable, namely, change-related behavior. This approach provided a solid basis for exploring and assessing the psychometric properties of STORC. The CFA confirmed the subscales derived from our conceptual model and available instruments.17,23–27 Indeed, both psychological and structural factors are represented in the 43 remaining items of STORC, and the 10 subscales represent most of the core components of ORC described in the literature (Tables 1 and 5).

Table 5 Final subscales, number of items per subscale, and original subscales from which STORC was adapted. Abbreviation: STORC, Specialty Training’s Organizational Readiness for curriculum Change.

However, the 1 excluded item was the only item concerning “external pressure” that had been retained after our Delphi study;12 hence, this component of ORC is no longer represented in STORC. In PGME, external pressure refers to pressure exerted by, for example, the educational board, the hospital board, scientific societies, accreditation bodies, the Ministry of Health, or the Ministry of Education. Because current curriculum reforms are driven top-down, the exclusion of this item is surprising, but it might reflect the specific setting for which STORC was developed. The removal could simply be due to the fact that the participants in our study do not experience external pressure on a daily basis. On the other hand, it might reflect the fact that hospitals and the medical profession are organized differently from organizations in other fields. In general, hospitals are not organized according to habitual management practices (eg, formal planning and control systems).28 Furthermore, during specialty training, doctors do not receive a solid foundation in management practices, whereas recognizing management as such, or exerting sufficient influence (eg, by educational board members or scientific societies), requires sufficient knowledge of and information about management principles.28 Alternatively, this result may reveal a more fundamental aspect of the medical profession. Medical doctors have very prominent professional autonomy, and research in this field has shown that they resist attempts to increase management control of medical practice.29 As a result, they might be less receptive to external pressure.

As expected from our conceptual model, the subscales of STORC were positively related to each other and to the behavioral support-for-change scores, with subscale 3 as the only exception. Subscale 3, “necessity to change,” includes items about whether residency training in general needs improvement and, unlike most other subscales, does not refer to the particular change proposed. Because organizational readiness is situational or change-specific,15 the more general focus of this subscale could explain why it behaves differently. The content of change matters as much as the context of change.15,23 In other words, a clinical teaching team could have a very receptive attitude toward change in general (context) but at the same time exhibit low ORC toward implementing assessment method A (content) and high ORC toward implementing assessment method B (content).

Practical implications

STORC can be used to assess ORC at several stages of the change process, that is, before or during change, to diagnose possible or current hurdles in the implementation process and thereby facilitate corrective interventions. For instance, when team members doubt whether the proposed change is an appropriate solution for the intended improvement of practice, educational leaders can try to demonstrate the relative advantage of the innovation by means of best practices. Alternatively, they can let team members adapt and refine the innovation on a limited basis to suit their own needs and local context. STORC could subsequently be used to assess the effects of these interventions.

Strengths and limitations

One strength of this study was its sample size of 856 participants, which is large relative to the number of survey items (44). Furthermore, we were able to include clinical teaching teams from 39 different specialties in about one third of all teaching hospitals in the Netherlands, which constitutes a remarkably heterogeneous sample. On the other hand, the number of participants per hospital varied widely.

With regard to reliability, subscale 4, “management and leadership,” showed a relatively low Cronbach’s alpha (0.544). The structure of STORC before the statistical analysis was the result of our previous Delphi study, which had reduced the number of items about management support from 10 to 2. Because this subscale has only 2 items, the interpretive value of its Cronbach’s alpha is limited. Although its correlations with the other subscales were positive and confirmed our hypothesis, this subscale should be interpreted with some caution.
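The dependence of alpha on the number of items can be made concrete with the standardized-alpha (Spearman–Brown) relation, alpha = k·r / (1 + (k − 1)·r), where k is the number of items and r is the mean inter-item correlation. Applying this relation to the reported raw alpha of 0.544 is only an approximation, and the 5-item scenario below is hypothetical. With k = 2, an alpha of 0.544 corresponds to a mean inter-item correlation of about 0.37. The same correlation spread over 5 items would yield an alpha of about 0.75.

```python
# Approximation: the standardized-alpha formula applied to a raw alpha value.
def standardized_alpha(k: int, mean_r: float) -> float:
    """Standardized alpha for k items with mean inter-item correlation mean_r."""
    if k < 2:
        raise ValueError("alpha requires at least 2 items")
    return k * mean_r / (1 + (k - 1) * mean_r)


def implied_mean_r(k: int, alpha: float) -> float:
    """Invert the formula: the mean inter-item correlation implied by alpha."""
    if k < 2:
        raise ValueError("alpha requires at least 2 items")
    return alpha / (k - (k - 1) * alpha)


r = implied_mean_r(2, 0.544)               # subscale 4 has 2 items
print(round(r, 2))                         # 0.37
print(round(standardized_alpha(5, r), 2))  # 0.75 (hypothetical 5-item version)
```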

In our stepwise approach to developing STORC, we first conducted a Delphi study in an international setting.12 We subsequently collected empirical data in the Netherlands only, rather than from an international sample, but the factor analysis clearly confirmed our previous results. Therefore, we expect STORC to be applicable internationally.

Conclusion

In this study, additional steps to validate STORC for PGME were taken successfully. The final subscales of STORC represent the core components of ORC in the literature. By breaking down the concept of ORC into multiple measurable aspects, STORC could help educational leaders diagnose possible hurdles in implementation processes and perform specifically targeted interventions when needed.

Acknowledgments

The authors wish to thank all the participating clinical teaching teams for their input and the time they invested, and Lisette van Hulst for her editing assistance.

Author contributions

LB and MJ participated in data collection, after which LB and JL performed the data analysis. LB drafted the manuscript. All authors contributed to data analysis and to drafting and revising the paper, and all agree to be accountable for all aspects of the work.

Disclosure

The authors report no conflicts of interest in this work.

References

1. Rafferty AE, Jimmieson NL, Armenakis AA. Change readiness: a multilevel review. J Manag. 2013;39(1):110–135.

2. Weiner BJ, Amick H, Lee SY. Conceptualization and measurement of organizational readiness for change: a review of the literature in health services research and other fields. Med Care Res Rev. 2008;65(4):379–436.

3. Kotter JP. Leading Change. Boston, MA: Harvard Business School Press; 1996.

4. Plsek PE, Greenhalgh T. Complexity science: the challenge of complexity in health care. BMJ. 2001;323(7313):625–628.

5. Greenhalgh T, Stramer K, Bratan T, Byrne E, Mohammad Y, Russell J. Introduction of shared electronic records: multi-site case study using diffusion of innovation theory. BMJ. 2008;337:a1786.

6. Fraser SW, Greenhalgh T. Coping with complexity: educating for capability. BMJ. 2001;323(7316):799–803.

7. Jones R, Higgs R, de Angelis C, Prideaux D. Changing face of medical curricula. Lancet. 2001;357(9257):699–703.

8. Carraccio C, Englander R, Van Melle E, et al. Advancing competency-based medical education: a charter for clinician-educators. Acad Med. 2016;91(5):645–649.

9. Frank JR, Snell L, Sherbino J, editors. CanMEDS 2015 Physician Competency Framework. Ottawa: Royal College of Physicians and Surgeons of Canada; 2015.

10. Gagnon MP, Attieh R, Ghandour el K, et al. A systematic review of instruments to assess organizational readiness for knowledge translation in health care. PLoS One. 2014;9(12):e114338.

11. Jippes M, Driessen EW, Broers NJ, Majoor GD, Gijselaers WH, van der Vleuten CP. A medical school’s organizational readiness for curriculum change (MORC): development and validation of a questionnaire. Acad Med. 2013;88(9):1346–1356.

12. Bank L, Jippes M, van Luijk S, den Rooyen C, Scherpbier A, Scheele F. Specialty Training’s Organizational Readiness for curriculum Change (STORC): development of a questionnaire in a Delphi study. BMC Med Educ. 2015;15:127.

13. Holt DT, Armenakis A, Harris SG, Feild H. Toward a comprehensive definition of readiness for change: a review of research and instrumentation. Res Organ Change Dev. 2007;16:289–336.

14. Malau-Aduli BS, Zimitat C, Malau-Aduli AEO. Quality assured assessment processes evaluating staff response to change. Higher Educ Manag Policy. 2011;23(1):1–24.

15. Weiner BJ. A theory of organizational readiness for change. Implement Sci. 2009;4:67.

16. Holt DT, Helfrich CD, Hall CG, Weiner BJ. Are you ready? How health professionals can comprehensively conceptualize readiness for change. J Gen Intern Med. 2010;25(Suppl 1):50–55.

17. Herscovitch L, Meyer JP. Commitment to organizational change: extension of a three-component model. J Appl Psychol. 2002;87(3):474–487.

18. Shea CM, Jacobs SR, Esserman DA, Bruce K, Weiner BJ. Organizational readiness for implementing change: a psychometric assessment of a new measure. Implement Sci. 2014;9:7.

19. Billett S. Constituting the workplace curriculum. J Curriculum Studies. 2006;38(1):31–48.

20. Jippes E, Van Luijk SJ, Pols J, Achterkamp MC, Brand PL, van Engelen JM. Facilitators and barriers to a nationwide implementation of competency-based postgraduate medical curricula: a qualitative study. Med Teach. 2012;34(8):e589–e602.

21. Scheele F, Van Luijk S, Mulder H, et al. Is the modernisation of postgraduate medical training in the Netherlands successful? Views of the NVMO Special Interest Group on Postgraduate Medical Education. Med Teach. 2014;36(2):116–120.

22. Frank JR. CanMEDS 2005 Physician Competency Framework: Better Standards, Better Physicians, Better Care. Ottawa, ON: Royal College of Physicians and Surgeons of Canada; 2005.

23. Helfrich CD, Li YF, Sharp ND, Sales AE. Organizational readiness to change assessment (ORCA): development of an instrument based on the Promoting Action on Research in Health Services (PARIHS) framework. Implement Sci. 2009;4:38.

24. Lehman WE, Greener JM, Simpson DD. Assessing organizational readiness for change. J Subst Abuse Treat. 2002;22(4):197–209.

25. Holt D, Armenakis A, Feild H, Harris S. Readiness for organizational change: the systematic development of a scale. J Appl Behav Sci. 2007;43(2):232–255.

26. Bouckenooghe D, Devos G, van den Broeck H. Organizational change questionnaire – climate of change, processes, and readiness: development of a new instrument. J Psychol. 2009;143(6):559–599.

27. Molla A, Licker P. eCommerce adoption in developing countries: a model and instrument. Information Manag. 2005;42(6):877–899.

28. Witman Y, Smid GAC, Meurs PL, Willems DL. Doctor in the lead: balancing between two worlds. Organization. 2011;18(4):477–495.

29. Kitchener M. The ‘bureaucratization’ of professional roles: the case of clinical directors in UK hospitals. Organization. 2000;7(1):129–154.

© 2018 The Author(s). This work is published and licensed by Dove Medical Press Limited. The full terms of this license are available at https://www.dovepress.com/terms.php and incorporate the Creative Commons Attribution - Non Commercial (unported, v3.0) License.

By accessing the work you hereby accept the Terms. Non-commercial uses of the work are permitted without any further permission from Dove Medical Press Limited, provided the work is properly attributed. For permission for commercial use of this work, please see paragraphs 4.2 and 5 of our Terms.
