Advances in Medical Education and Practice, Volume 8

Are they ready? Organizational readiness for change among clinical teaching teams

Authors Bank L, Jippes M, Leppink J, Scherpbier AJ, den Rooyen C, van Luijk SJ, Scheele F

Received 11 July 2017

Accepted for publication 28 September 2017

Published 14 December 2017 Volume 2017:8 Pages 807–815

DOI https://doi.org/10.2147/AMEP.S146021

Checked for plagiarism Yes

Review by Single anonymous peer review

Peer reviewer comments 2

Editor who approved publication: Dr Md Anwarul Azim Majumder

Lindsay Bank,1,2 Mariëlle Jippes,3 Jimmie Leppink,4 Albert JJA Scherpbier,4 Corry den Rooyen,5 Scheltus J van Luijk,6 Fedde Scheele1,2,7

1Department of Healthcare Education, OLVG Hospital, 2Faculty of Earth and Life Sciences, Athena Institute for Transdisciplinary Research, VU University, Amsterdam, 3Department of Plastic Surgery, Erasmus Medical Centre, Rotterdam, 4Faculty of Health, Medicine and Life Sciences, School of Health Professions Education, Maastricht University, Maastricht, 5Movation BV, Maarssen, 6Department of Healthcare Education, Maastricht University Medical Center+, Maastricht, 7School of Medical Sciences, Institute for Education and Training, VU University Medical Center, Amsterdam, the Netherlands

Introduction: Curriculum change and innovation are inevitable parts of progress in postgraduate medical education (PGME). Although implementing change is known to be challenging, change management principles are rarely looked at for support. Change experts contend that organizational readiness for change (ORC) is a critical precursor for the successful implementation of change initiatives. Therefore, this study explores whether assessing ORC in clinical teaching teams could help to understand how curriculum change takes place in PGME.

Methods: Clinical teaching teams in hospitals in the Netherlands were requested to complete the Specialty Training’s Organizational Readiness for curriculum Change, a questionnaire to measure ORC in clinical teaching teams. In addition, change-related behavior was measured by using the “behavioral support-for-change” measure. A two-way analysis of variance was performed for all response variables of interest.

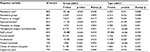

Results: In total, 836 clinical teaching team members were included in this study: 288 (34.4%) trainees, 307 (36.7%) clinical staff members, and 241 (28.8%) program directors. Overall, items regarding whether the program director has the authority to lead scored higher compared with the other items. At the other end, the subscales “management support and leadership,” “project resources,” and “implementation plan” had the lowest scores in all groups.

Discussion: The study brought to light that program directors are clearly in the lead when it comes to the implementation of educational innovation. Clinical teaching teams tend to work together as a team, sharing responsibilities in the implementation process. However, the results also reinforce the need for change management support in change processes in PGME.

Keywords: organizational readiness for change, postgraduate medical education, curriculum change, change management, questionnaire, innovation

A Letter to the Editor has been received and published for this article.

Introduction

Curriculum change and innovation are inevitable parts of progress in postgraduate medical education (PGME). Even though implementing change is known to be challenging,1 little support has been sought from change management principles.2 In view of the resources invested and the increasing regulatory and social demands, it is necessary to acquire knowledge on how curriculum change takes place in PGME and which factors either support or impair these implementation processes.

In general, innovation involves the introduction of new ideas into a product or service to create value and, by doing so, satisfies a specific need.3,4 Innovation may be driven by visionary ideas, quality requirements, or the need for higher efficiency. For an innovation to add value to its context, it needs to be adopted and routinized into standard practice;5 especially the latter proves to be challenging.5

In the last decade, the medical profession has faced a major innovation of PGME with the introduction of competency-based medical education (CBME). This innovation is driven by, among others, changes in healthcare needs and in expectations of the public.6,7 PGME needs to show a greater accountability to society by being more transparent about the content and quality of medical education.8 CBME added value by introducing a broader definition of competencies needed by future medical specialists to meet the needs of their patients7 as well as requirements for teaching and assessment strategies.6 In essence, the introduction of CBME requires a paradigm shift from a focus solely on reaching medical expertise to a focus on becoming a medical expert as well as acquiring other competencies for trainees to successfully address the roles they have in meeting societal needs. For instance, in the case of CanMEDS, this means trainees also need to become, among others, a competent “manager,” “collaborator,” and “scholar.” In addition, faculty development is essential to ensure an adequate uptake of CBME in daily practice such as proper use of feedback and reflection on learning.7 However, as mentioned earlier, adopting and routinizing an innovation can be difficult and the transition from theory to practice does not necessarily lead to the intended changes.6,9

In practice, CBME has indeed led to more conscious attention to other competencies besides the role of the medical expert10,11 as well as to more frequent direct observation and increased documented feedback about a trainee’s performance.12 However, details of generic models for CBME are not always explicitly outlined, which leads to a lack of clarity about its content, meaning, and relevance.13–17 In addition, the implementation of CBME frameworks is further complicated by a lack of support from expert facilitators such as educationalists who can help with understanding the educational concepts and relating them to the clinical work environment.6,18

Despite the challenges that PGME is facing with the implementation of CBME, attention to a change management perspective on supporting these processes is still rather limited.2 One of the potentially beneficial change management strategies for PGME could be the assessment of organizational readiness for change (ORC).2 ORC is a comprehensive construct that reflects the degree to which members of an organization are collectively primed, motivated, and capable of adopting and executing a particular change initiative to purposefully alter the status quo.19 Change experts contend that ORC is a critical precursor for the successful implementation of change initiatives.20,21 It is believed that when change leaders establish insufficient readiness, a range of predictable and undesirable outcomes will occur: change efforts make a false start from which they might or might not recover, change efforts stall as resistance grows, or the change fails altogether.21 Actions to create readiness include, among others, establishing a sense of urgency, empowering team members, and creating an appealing vision for the future as well as fostering a sense of confidence that this can be realized.20,21

Change readiness can be assessed at several stages of the change process, ie, before or during the change, as a way to diagnose any possible or current hurdles in the implementation process in order to facilitate any corrective interventions. In addition, readiness can be assessed repeatedly to explore the effects of the interventions.2 Team members will commit to a change because they want to, have to, or ought to. Regardless of this reason, this form of commitment will lead to behavioral compliance with the requirements for change. Some, however, might show resistance, either active or passive, and fail to comply. On the other end of the spectrum are those who show behaviors that go beyond what is formally required to ensure success and enthusiastically promote change to others.22

When looking at the current transition in PGME, many of the components relevant for the establishment of ORC can also be recognized in the implementation processes of CBME, such as proactive knowledge management to increase clarity about the content and meaning of CBME,5 establishment of a need for change toward CBME,1,20 training of staff,12 availability of resources,5 access to expert facilitators such as educationalists5 to support relating educational concepts to the clinical work environment, and so on. This suggests that ORC could potentially play a vital role in the implementation processes of PGME. By understanding change management principles, educational leaders may improve the clinical teaching team’s ability to implement the planned change.19 Therefore, this study explores whether assessing ORC in clinical teaching teams could help to understand how curriculum change takes place in PGME and, as a result, could provide support in overcoming the challenges in the implementation processes.

Methods

Setting and selection of participants

In the Netherlands, legislation has formalized the requirement that all postgraduate medical training programs must be reformed according to the competency-based framework of CanMEDS.8 As a result, clinical teaching teams of all medical specialties registered at the Dutch Federation of Medical Specialists were eligible for participation as they had to implement this new curriculum in their local settings. In general, educational science was implemented relatively rapidly since an assessment method like simulation or multisource feedback has such obvious advantages that it was easily accepted into practice. Furthermore, attention to the generic skills is growing. However, reflective practices have not been institutionalized everywhere, and patient feedback has not been used sufficiently.14

In the Netherlands, there are 8 academic medical centers, each of which coordinates PGME within a geographical region. Each geographical region contains multiple affiliated nonacademic teaching hospitals. Within each teaching hospital, at least one clinical teaching team offers residency training. Both academic medical centers and nonacademic teaching hospitals provide PGME and patient care and participate in research projects. However, academic medical centers are bigger, provide more specialized patient care, and both develop and participate in research projects at a much larger scale. Besides, they are responsible for providing undergraduate medical education as well.

In general, training programs in PGME are 4–6 years in duration depending on the subject. Trainees will complete several years of their training in an academic medical center and several years in one of the affiliated nonacademic teaching hospitals. In daily practice, trainees are supervised and trained by a clinical teaching team (ie, clinical staff members), which is led by a program director appointed by the Dutch Federation of Medical Specialists.

All teaching hospitals have a separate educational department that supports and assists the clinical teaching teams with their educational tasks. Between February and November 2015, we asked these educational departments to contact the individual clinical teaching team in their teaching hospital and discuss our study with the program directors. If a program director agreed to participate, an official invitation was sent including an information letter and a link to the web-based questionnaire. In addition, we sent a direct invitation to the program directors within our own network.

Subsequently, the program directors were responsible for inviting the other members of their clinical teaching team (ie, trainees and clinical staff members) to participate. During the study period from February till November 2015, several reminders to complete the questionnaire were sent to the program directors of the participating clinical teaching teams.

Ethical approval

This study was approved by the Ethical Review Board of the Dutch Association for Medical Education. All the participants received an information letter explaining study purpose, confidentiality, and voluntary participation. Written informed consent was obtained from all the participants for this study.

Materials

Specialty Training’s Organizational Readiness for curriculum Change (STORC)

ORC was measured using STORC (Table 1).2,34 This questionnaire was designed to measure readiness for change in clinical teaching teams at a team level, rather than at an individual level. It was developed in an international Delphi study,2 followed by a confirmatory factor analysis validating the clustering of items within the 10 subscales.34 A generalizability study showed that 5–8 responses are needed for a reliable outcome.34 Participants were asked to rate their level of agreement with the 43 items of STORC on a 5-point Likert scale (1 = strongly disagree and 5 = strongly agree). Alternatively, they had the option to choose “not applicable.”

Table 1 Subscales and topics covered by the STORC questionnaire
Notes: Data from Bank et al.2,34
Abbreviation: STORC, Specialty Training’s Organizational Readiness for curriculum Change.
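The scoring rules described above can be illustrated with a minimal sketch. The source states only the 5-point scale, the “not applicable” option, and the 5–8 responses needed for a reliable outcome. Averaging items within a subscale, averaging respondents within a team, reading the 5–8 responses as responses per team, and the names `subscale_score`, `team_score`, and `MIN_RESPONSES` are all assumptions made for illustration, not the authors' documented procedure.

```python
from statistics import mean
from typing import Optional, Sequence

# Assumption: the lower bound of the reported 5-8 responses is applied per team.
MIN_RESPONSES = 5


def subscale_score(ratings: Sequence[Optional[int]]) -> Optional[float]:
    """Average one respondent's 1-5 ratings on a subscale.

    None stands for a "not applicable" answer and is left out of the mean
    (an assumed handling; the source does not specify it).
    """
    valid = [r for r in ratings if r is not None]
    for r in valid:
        if not 1 <= r <= 5:
            raise ValueError(f"rating outside the 1-5 Likert range: {r}")
    return mean(valid) if valid else None


def team_score(respondent_scores: Sequence[Optional[float]]) -> Optional[float]:
    """Average respondent-level scores into a team-level score.

    Returns None when fewer than MIN_RESPONSES usable scores are available.
    """
    valid = [s for s in respondent_scores if s is not None]
    if len(valid) < MIN_RESPONSES:
        return None
    return mean(valid)
```

Returning `None` rather than a number for small teams keeps an unreliable team score from being silently mixed into later comparisons.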

Behavioral support-for-change

In addition, change-related behavior was measured using the “behavioral support-for-change” measure reflecting the 5 types of resistance and support behavior described by Herscovitch and Meyer:22 active resistance (score =0–20), passive resistance (score =21–40), compliance (score =41–60), cooperation (score =61–80), and championing (score =81–100). These 5 types of behavior were made visible along a behavioral continuum of 101 points (ie, from 0 to 100). Participants were provided with a written description of each of the behaviors and were asked to indicate the score that best represented their own reaction as well as their clinical teaching team’s reaction to the introduction of competency-based medical education.
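The mapping from a score on the 0–100 continuum to the five behavior types can be written out as a short sketch. The score bands are taken from the text above; the function name `behavior_category` and the restriction to whole-number scores are assumptions for illustration.

```python
def behavior_category(score: int) -> str:
    """Map a 0-100 behavioral support-for-change score to its behavior type."""
    if not 0 <= score <= 100:
        raise ValueError(f"score outside the 0-100 continuum: {score}")
    if score <= 20:
        return "active resistance"
    if score <= 40:
        return "passive resistance"
    if score <= 60:
        return "compliance"
    if score <= 80:
        return "cooperation"
    return "championing"
```

Note that the first band spans 21 points (0–20) while the others span 20, which is how the 101-point continuum is covered without gaps.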

Statistical analysis

Statistical analyses were conducted using IBM SPSS Version 24.0 (IBM Corp., Armonk, NY, USA). Intraclass correlation due to respondents being nested within hospitals frequently requires multilevel analysis, in which hospital (upper level) and respondent (lower level) are treated as hierarchical levels.23 However, in the current study, as the intraclass or intrahospital correlation for all response variables of interest was very small (ie, ranged from 0 to about 0.065), two-way analysis of variance (ANOVA) was performed for all response variables of interest: the individual score on change-related behavior (0–100), the team’s score on change-related behavior (0–100), and the 10 separate subscales of STORC (Table 1).
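To make the intrahospital correlation concrete, the sketch below estimates an intraclass correlation from respondents grouped by hospital. The analyses in this study were run in SPSS and the exact estimator is not stated, so the one-way ANOVA estimator used here, the function name `icc_oneway`, and the example data are assumptions for illustration only.

```python
from typing import Dict, List


def icc_oneway(groups: Dict[str, List[float]]) -> float:
    """One-way ANOVA estimate of the intraclass correlation.

    groups maps a cluster label (here: a hospital) to its respondents' scores.
    Requires at least two clusters and more respondents than clusters.
    """
    sizes = [len(v) for v in groups.values()]
    n_total = sum(sizes)
    n_groups = len(groups)
    grand_mean = sum(sum(v) for v in groups.values()) / n_total

    # Between-cluster and within-cluster sums of squares.
    ss_between = sum(
        len(v) * (sum(v) / len(v) - grand_mean) ** 2 for v in groups.values()
    )
    ss_within = sum(
        (x - sum(v) / len(v)) ** 2 for v in groups.values() for x in v
    )
    ms_between = ss_between / (n_groups - 1)
    ms_within = ss_within / (n_total - n_groups)

    # Adjusted average cluster size, which allows for unequal cluster sizes.
    k0 = (n_total - sum(n * n for n in sizes) / n_total) / (n_groups - 1)
    return (ms_between - ms_within) / (ms_between + (k0 - 1) * ms_within)


# Hypothetical scores for two hospitals, not data from this study.
example = {"hospital_a": [1.0, 2.0, 3.0], "hospital_b": [4.0, 5.0, 6.0]}
icc = icc_oneway(example)
```

A value near 0 means that knowing a respondent's hospital says little about the score, which is the situation in which a single-level ANOVA is a defensible simplification.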

Results

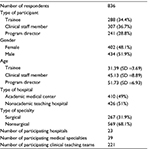

In total, 836 clinical teaching team members were included in this study: 288 (34.4%) trainees, 307 (36.7%) clinical staff members, and 241 (28.8%) program directors (Table 2). Respondents were working either at an academic medical center (49%) or at a nonacademic teaching hospital (51%), and about one third of the respondents were working in a surgical specialty. In total, the respondents represent 221 clinical teaching teams in 23 teaching hospitals, thereby representing 30.0% of clinical teaching teams (n=736) and 37.1% of teaching hospitals (n=62) in the Netherlands, respectively. About half of the respondents were female.

Table 2 Descriptive characteristics of the respondents

Statistical analysis

A two-way ANOVA was performed for the individual score on change-related behavior (0–100), the team’s score on change-related behavior (0–100), and the 10 separate subscales of STORC. The two factors in the ANOVA were group of respondents and type of hospital. The group-by-type interaction was very small for all response variables (partial η² values <0.01) and, after correction for multiple testing, not statistically significant at the conventional α=0.05 significance level. Table 3 presents the main effects of group and type.
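As a reminder of what the effect size above expresses, partial η² is the sum of squares of an effect divided by the sum of that effect's and the error sums of squares. The sketch below uses the standard definition; the function name and the numbers in the example are hypothetical and are not taken from Table 3.

```python
def partial_eta_squared(ss_effect: float, ss_error: float) -> float:
    """Partial eta squared: SS_effect / (SS_effect + SS_error)."""
    if ss_effect < 0 or ss_error < 0:
        raise ValueError("sums of squares cannot be negative")
    if ss_effect + ss_error == 0:
        raise ValueError("effect and error sums of squares are both zero")
    return ss_effect / (ss_effect + ss_error)


# Hypothetical interaction effect: 2 units of variation against 398 of error.
interaction_effect_size = partial_eta_squared(2.0, 398.0)
```

In this hypothetical case the interaction accounts for 0.5% of the variation not explained by the other effects, which would fall under the <0.01 threshold mentioned above.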

Group of respondent

In general, looking at the three groups of respondents in our study, program directors gave higher scores on almost all of the subscales of STORC (Figure 1A). Their scores on 7 subscales differ significantly from the scores of clinical staff members and trainees (Table 3). Studying the scores on the different subscales of STORC in more depth revealed a similar pattern for all groups of respondents (Figure 1A). The subscale “formal leader,” consisting of items regarding whether the program director has the authority to lead and accept responsibility for the success of the change process, scored higher than the other scales. High scores were also given on the subscale “staff culture,” which includes items about teamwork and clinical staff’s receptiveness to changes, as well as on the subscale “appropriateness.” At the other end, the subscales “management support and leadership,” “project resources,” and “implementation plan” had the lowest scores in all respondent groups.

Type of hospital

When comparing responses from nonacademic teaching hospitals and academic medical centers, respondents in nonacademic teaching hospitals showed higher scores on almost all of the subscales (Figure 1B). For 7 subscales, their scores were significantly higher than the scores of respondents in academic medical centers (Table 3). Further analysis of the different subscales of STORC showed that a similar pattern can be recognized when comparing respondents based on hospital type and on group of respondent: again high scores were given on the subscales “formal leader” and “staff culture” and low scores on “implementation plan” and “project resources” (Figure 1B).

Change-related behavior

When comparing change-related behavior between the groups, program directors judged their own reaction to change more positively than trainees and clinical staff members did. In contrast, when asked to judge their team’s change-related behavior, program directors were significantly more pessimistic than their colleagues (Figure 2A; Table 3). Looking at the different types of hospitals, respondents in nonacademic teaching hospitals judged their own reaction to change as well as their clinical teaching team’s reaction to change significantly more positively than their academic counterparts (Figure 2B; Table 3).

Discussion

In this study, we used a change management perspective to understand how clinical teaching teams deal with a curriculum change such as the introduction of CBME. By looking at the team’s “state” of readiness for change as well as their change-related behavior, insights into leadership roles, teamwork, shared commitment, perceived support, and behavioral reactions to change were gathered.

Results show that the program directors are clearly seen as the “doctor in the lead” of the educational change, both by their own judgment and by that of their colleagues. First, this is supported by high scores on the subscale “formal leader” throughout the entire sample. One of the core components of ORC is the belief that formal leaders are committed to the success of the change and take responsibility for it.19 Previous research in PGME had shown that the implementation process is accelerated in the presence of good leaders who are seen as role models and as entrepreneurs and who are able to inspire their team.18 Previous research on general innovations in healthcare service and organization has also shown that strong leadership may be especially helpful in encouraging clinical team members to break through convergent thinking and routines.24 Second, program directors judge their own change-related behavior as significantly more supportive of the change than that of their other team members. However, according to the program directors, team members do tend to comply and therefore show commitment to change.22 At the least, this gives the impression that program directors feel that they invest more effort than their colleagues. Whether they actually think this is appropriate or not cannot be determined by the present study.

Besides the role of the program director, the subscale “staff culture” was highly rated as well, which is reassuring for two reasons. First, team dynamics such as motivation, teamwork, and visionary staff are factors that contribute to successful change, as was previously shown in healthcare innovations.24 More in particular, these factors combined with strong leadership increase the capacity to absorb knowledge, ie, to identify, interpret, and share new knowledge and subsequently link it to the team’s existing knowledge base in order to put it to appropriate use.5,24 Second, in the philosophy of CBME, teaching is not the responsibility of the program director alone, but rather of the entire clinical staff.25 The results show that clinical staff members do feel and share a sense of responsibility for the improvement of training and that they work together as a team.

Based on the scores on the subscale “appropriateness,” which reflects the belief that a specific change is correct for the situation being addressed,19 CBME indeed seems to meet the needs in PGME and therefore is accepted as a necessary and correct innovation. This is also supported by the fact that most team members showed behavioral compliance at the least or, in other words, were committed to this change. It is unclear whether this commitment is merely based on a desire to provide support or on a sense of obligation. However, scores representing actual resistant behavior, either passive or active, were rarely seen. In other words, respondents did comply and were supportive of the current curriculum change.

However, the lowest scores were found on the subscales “management support and leadership,” “project resources,” and “implementation plan.” These subscales represent components that are clearly recognizable as being related to change management. As was stated earlier, the knowledge and use of change management strategies are lacking in change processes in PGME.2 Not surprisingly, these subscales affirm this shortcoming, which becomes evident in, for instance, the absence of descriptions of tasks and timelines, and the shortage of evaluation cycles, training facilities, and financial resources.

When looking at the differences between responses from academic hospitals and nonacademic teaching hospitals, the latter seem to have an advantage. Possible reasons could be differences in department size, in culture, and in the balance between education, patient care, and research. First, in nonacademic teaching hospitals, departments are usually smaller, which might lead to more efficient communication and decision-making processes.18 Possibly, it might also cause team members to feel a stronger sense of a shared responsibility for implementing the proposed change,18 thus promoting teamwork when implementing change. Earlier research looking at readiness for organizational change in healthcare has also shown that a socially supportive workplace may play an important role in the team members’ ability to cope with stress resulting from change.26 This underscores the importance of a shared responsibility and teamwork.

Second, the academic cultural environment rewards individual accomplishments due to a stronger individualistic and competitive environment.27–29 In addition, the primary focus tends to be more on pursuing an active career in research rather than an active career in medical education.29–32 Potentially, this could impede gaining sufficient support and shared efforts to implement educational change.

In sum, clinical teaching teams appear to comply with the implementation of curriculum change if the proposed change is seen as a correct innovation. In that case, program directors receive and take the responsibility for the job that needs to be done, but they lack a fully equipped toolbox of change management principles to actually get that job done as efficiently as possible. Too little guidance from appropriate change models and implementation strategies slows down the implementation process, mainly because opportunities for advanced assessment and planning are missed.18,24

Strengths, limitations, and future research

Our findings extend the existing literature about implementation processes in medical education,6,18,33 since this study was the first to particularly explore implementation processes in PGME from a change management perspective. The inclusion of 836 medical doctors from 23 hospitals allowed for a thorough assessment of change readiness and change-related behavior in this field. This sample size allowed us to assess differences between program directors, clinical staff members, and trainees. However, due to our method of recruitment, we were not informed how many colleagues each program director had invited to participate. Another limitation is that both questionnaires used in this study were distributed in English, as we assumed that the English language comprehension of all participants would be sufficient to participate. We cannot rule out the possibility that some participants might have misunderstood the items due to a language barrier. Nevertheless, these effects can be expected to be minimal since our findings show clear trends and are in accordance with change management principles deduced from other fields19 and healthcare settings.24,26

A more in-depth analysis of the implementation of curriculum change in PGME is justified to further explore the way these changes occur in clinical teaching teams, which will strengthen our understanding of these processes and improve implementation efforts in this field.

Conclusion

The present analysis of readiness for change in clinical teaching teams brought to light that program directors are clearly in the lead when it comes to the implementation of educational innovation. Clinical teaching team members tend to work together as a team, sharing responsibility in the implementation process. However, results also reinforce the need for change management support in change processes in PGME in order to enhance the efficiency of the process itself as well as to improve the chances for success.

Acknowledgments

The authors wish to thank all the clinical teaching teams that participated for their input and the time they invested and Lisette van Hulst for her editing assistance.

Author contributions

LB and MJ participated in data collection, after which LB and JL performed the data analysis. LB drafted the manuscript. All authors contributed toward data analysis, drafting and revising the paper and agree to be accountable for all aspects of the work.

Disclosure

The authors report no conflicts of interest in this work.

References

1. Weiner BJ, Amick H, Lee SY. Conceptualization and measurement of organizational readiness for change: a review of the literature in health services research and other fields. Med Care Res Rev. 2008;65:379–436.

2. Bank L, Jippes M, van Luijk S, den Rooyen C, Scherpbier A, Scheele F. Specialty Training’s Organizational Readiness for curriculum Change (STORC): development of a questionnaire in a Delphi study. BMC Med Educ. 2015;15:127.

3. dictionary.cambridge.org [homepage on the Internet]. Cambridge: Cambridge University Press; 2017. Available from: https://dictionary.cambridge.org/dictionary/english/innovation. Accessed July 03, 2017.

4. businessdictionary.com [homepage on the Internet]. Fairfax: 2017 WebFinance Inc. Available from: http://www.businessdictionary.com/definition/innovation.html. Accessed July 03, 2017.

5. Williams I. Organizational readiness for innovation in health care: some lessons from the recent literature. Health Serv Manage Res. 2011;24:213–218.

6. Lillevang G, Bugge L, Beck H, Joost-Rethans J, Ringsted C. Evaluation of a national process of reforming curricula in postgraduate medical education. Med Teach. 2009;31:e260–e266.

7. Frank JR, Danoff D. The CanMEDS initiative: implementing an outcomes-based framework of physician competencies. Med Teach. 2007;29:642–647.

8. Scheele F, Teunissen P, Van Luijk S, et al. Introducing competency-based postgraduate medical education in the Netherlands. Med Teach. 2008;30:248–253.

9. Renting N, Dornan T, Gans RO, Borleffs JC, Cohen-Schotanus J, Jaarsma AD. What supervisors say in their feedback: construction of CanMEDS roles in workplace settings. Adv Health Sci Educ Theory Pract. 2016;21:375–387.

10. Carraccio C, Englander R, Van Melle E, et al. Advancing competency-based medical education: a charter for clinician-educators. Acad Med. 2016;91:645–649.

11. Borleffs JC, Mourits MJ, Scheele F. CanMEDS 2015: nog betere dokters? [CanMEDS 2015: better doctors?]. Ned Tijdschr Geneeskd. 2016;160:D406. Dutch.

12. Schultz K, Griffiths J. Implementing competency-based medical education in a postgraduate family medicine residency training program: a stepwise approach, facilitating factors, and processes or steps that would have been helpful. Acad Med. 2016;91:685–689.

13. Chou S, Cole G, McLaughlin K, Lockyer J. CanMEDS evaluation in Canadian postgraduate training programmes: tools used and programme director satisfaction. Med Educ. 2008;42:879–886.

14. Scheele F, Van Luijk S, Mulder H, et al. Is the modernisation of postgraduate medical training in the Netherlands successful? Views of the NVMO Special Interest Group on Postgraduate Medical Education. Med Teach. 2014;36:116–120.

15. Hassan IS, Kuriry H, Ansari LA, et al. Competency-structured case discussion in the morning meeting: enhancing CanMEDS integration in daily practice. Adv Med Educ Pract. 2015;6:353–358.

16. Ringsted C, Hansen TL, Davis D, Scherpbier A. Are some of the challenging aspects of the CanMEDS roles valid outside Canada? Med Educ. 2006;40:807–815.

17. Zibrowski EM, Singh SI, Goldszmidt MA, et al. The sum of the parts detracts from the intended whole: competencies and in-training assessments. Med Educ. 2009;43:741–748.

18. Jippes E, Van Luijk SJ, Pols J, Achterkamp MC, Brand PL, Van Engelen JM. Facilitators and barriers to a nationwide implementation of competency-based postgraduate medical curricula: a qualitative study. Med Teach. 2012;34:e589–e602.

19. Holt DT, Helfrich CD, Hall CG, Weiner BJ. Are you ready? How health professionals can comprehensively conceptualize readiness for change. J Gen Intern Med. 2010;25:50–55.

20. Kotter JP. Leading Change. Boston, MA: Harvard Business School Press; 1996.

21. Weiner BJ. A theory of organizational readiness for change. Implement Sci. 2009;4:67.

22. Herscovitch L, Meyer JP. Commitment to organizational change: extension of a three-component model. J Appl Psychol. 2002;87:474–487.

23. Leppink J. Data analysis in medical education research: a multilevel perspective. Perspect Med Educ. 2015;4:14–24.

24. Greenhalgh T, Robert G, Macfarlane F, Bate P, Kyriakidou O. Diffusion of innovations in service organizations: systematic review and recommendations. Milbank Q. 2004;82:581–629.

25. Frank JR; Royal College of Physicians and Surgeons of Canada. The CanMEDS 2005 Physician Competency Framework: Better Standards, Better Physicians, Better Care. Ottawa, ON: Royal College of Physicians and Surgeons of Canada; 2005.

26. Cunningham CE, Woodward CA, Shannon HS, et al. Readiness for organizational change: A longitudinal study of workplace, psychological and behavioural correlates. J Occup Organ Psychol. 2002;75:377–392.

27. Pololi L, Conrad P, Knight S, Carr P. A study of the relational aspects of the culture of academic medicine. Acad Med. 2009;84:106–114.

28. Krupat E, Pololi L, Schnell ER, Kern DE. Changing the culture of academic medicine: the C-Change learning action network and its impact at participating medical schools. Acad Med. 2013;88:1252–1258.

29. Lowenstein SR, Fernandez G, Crane LA. Medical school faculty discontent: prevalence and predictors of intent to leave academic careers. BMC Med Educ. 2007;7:37.

30. Pololi L, Kern DE, Carr P, Conrad P, Knight S. The culture of academic medicine: faculty perceptions of the lack of alignment between individual and institutional values. J Gen Intern Med. 2009;24:1289–1295.

31. Fairchild DG, Benjamin EM, Gifford DR, Huot SJ. Physician leadership: enhancing the career development of academic physician administrators and leaders. Acad Med. 2004;79:214–218.

32. Beasley BW, Simon SD, Wright SM. A time to be promoted. The Prospective Study of Promotion in Academia (Prospective Study of Promotion in Academia). J Gen Intern Med. 2006;21:123–129.

33. Jippes M, Driessen EW, Broers NJ, Majoor GD, Gijselaers WH, van der Vleuten CP. A medical school’s organizational readiness for curriculum change (MORC): development and validation of a questionnaire. Acad Med. 2013;88:1346–1356.

34. Bank L, Jippes M, Leppink J, et al. Specialty training’s organizational readiness for curriculum change (STORC): validation of a questionnaire. Adv Med Educ Pract. In press 2017.

© 2017 The Author(s). This work is published and licensed by Dove Medical Press Limited. The full terms of this license are available at https://www.dovepress.com/terms.php and incorporate the Creative Commons Attribution - Non Commercial (unported, v3.0) License.

By accessing the work you hereby accept the Terms. Non-commercial uses of the work are permitted without any further permission from Dove Medical Press Limited, provided the work is properly attributed. For permission for commercial use of this work, please see paragraphs 4.2 and 5 of our Terms.
