Innovation and Entrepreneurship in Health, Volume 2

Organization-wide approaches to patient safety

Authors Wheeler DS

Received 20 April 2015

Accepted for publication 3 July 2015

Published 14 August 2015 Volume 2015:2 Pages 49–57

DOI https://doi.org/10.2147/IEH.S60793

Checked for plagiarism Yes

Review by Single anonymous peer review

Peer reviewer comments 3

Editor who approved publication: Professor Rubin Pillay

Derek S Wheeler1,2

1Cincinnati Children's Hospital Medical Center, 2Department of Pediatrics, University of Cincinnati College of Medicine, Cincinnati, OH, USA

Abstract: The Institute of Medicine’s report, To Err is Human, raised public awareness of medical errors and medical harm. However, even 10 years after the release of this report, studies showed that safety had not significantly improved in health care. In order to address the ongoing concerns with medical errors and harm, many health care organizations have started to learn from other industries, including the automobile industry, the nuclear power industry, commercial aviation, and the military. Many of these industries are so-called high reliability organizations (HROs), which are defined as organizations that possess certain cultural characteristics that allow them to operate in highly dangerous environments with near-perfect safety records. In this context, health care organizations should learn from HROs and try to adopt some of these characteristics in order to improve safety, ultimately becoming HROs themselves. While it is clear that HROs are not the definitive or even the only answer, many hospitals that have adopted techniques, skills, knowledge, and behaviors of HROs have started to make significant progress in improving patient safety. The purpose of this review is to highlight some of the approaches that HROs use to improve safety, with a specific emphasis on how health care organizations can apply some of these approaches to improve patient safety.

Keywords: high reliability organization, high reliability theory, normal accident theory, quality improvement, patient safety

Introduction

By now the US Institute of Medicine’s seminal report, To Err is Human,1 is so well known that it has become one of the most frequently cited reports in the patient safety literature. While the concept of harm has been appreciated since the time of Hippocrates, who was perhaps the first to coin the term iatrogenesis (from the Greek meaning “originating from a physician”), it was not until the publication of the Institute of Medicine’s report in late 1999 that medical errors and harm truly came to the forefront.2 This report, which was largely based upon the results of the Harvard Medical Practice Study,3,4 as well as a follow-up study performed in Utah and Colorado,5 estimated that there were between 44,000 and 98,000 deaths every year in the USA as a result of medical errors. The analogy of a full jumbo jet crashing every day for a year made a significant impact on the lay media and arguably triggered the subsequent quality improvement and patient safety movement.2 These findings were later replicated in other countries as well,6–10 focusing attention on an issue of global scale. Moreover, patients in both high income and low income countries appear to be equally affected by medical errors.11 Even hospitalized children were shown to be at risk for iatrogenic injury.7

While the importance of the Harvard Medical Practice Study,3,4 the Utah and Colorado study,5 and To Err is Human1 is not disputed, it is important to recognize one of the major drawbacks to the “44,000 to 98,000 deaths” statistic that has been so often quoted. The definition of “adverse event” in these studies excluded hospital-acquired infections (HAI), specifically central line-associated bloodstream infections (CLA-BSIs), ventilator-associated respiratory infections (which include ventilator-associated pneumonia, ventilator-associated tracheobronchitis, and nosocomial sinusitis), surgical site infections, and catheter-associated urinary tract infections. All of these infections have been associated with significant increases in morbidity, mortality, and health care costs in both children12–15 and adults.16,17 Moreover, the Centers for Disease Control and Prevention estimates that approximately 99,000 patients die every year of HAI in the USA alone.18 If HAI are included in the definition of medical harm, and by all accounts they should be,19,20 the “44,000 to 98,000 deaths” statistic grossly underestimates the true toll.

Another important issue should also be mentioned here. Most studies suggest that between 3% and 6% of all hospital deaths are preventable.6,21–23 More than half of these preventable deaths occurred in patients who were at the end of life.21,23 These patients certainly deserve quality care too; however, there is a stark difference when comparing years of life lost between patients in their last 6 months of life versus those with considerably more years of life ahead. As such, while the “44,000 to 98,000 deaths” figure worked well as an eye-catching statistic that inspired the patient safety movement, preventable deaths may not depict the true extent of the gravity of medical errors.2 Approximately 20% of HAI16,24 and slightly more than half of adverse events are considered preventable.25

Between 5 and 10 years after the publication of To Err is Human, follow-up reports showed that patient safety had not significantly improved.26–29 The lack of significant progress called into question many of the transformational changes that had been put into place and cast doubt on many of the initiatives and techniques borrowed from industries outside health care. Fortunately, preliminary estimates from a recently completed update on annual hospital-acquired conditions by the Agency for Healthcare Research and Quality were far more encouraging. These estimates from calendar year 2013 showed that the rate of hospital-acquired conditions declined by 9% from 2012 to 2013 and by slightly over 17% from 2010 to 2013. The lead investigators in this study further estimated that 50,000 fewer patients died in the hospital as a result of this decrease, at a total cost savings of approximately US$12 billion. As it turns out, the quality movement has made substantial gains in improving patient safety, though it is clear that we still have a long journey ahead.30

High reliability theory (HRT) versus normal accident theory (NAT)

Historically, most efforts at improving patient safety focused on changing individual behavior, ie, “getting rid of all the bad apples”.31–33 In other words, only “bad physicians” or “bad nurses” made the kinds of errors that resulted in medical harm.34 The so-called “good physicians” and “good nurses” were the ones who never made mistakes. Unfortunately, by focusing on the individual, health care systems and hospitals neglected the underlying systems issues that were truly the root cause of medical harm.1,35,36 As it turns out, blaming well-intentioned individuals for their mistakes is not a very effective way to learn from errors and prevent them from occurring in the future. It is not that physicians, nurses, and other health care providers do not make mistakes. As the 18th century English poet Alexander Pope stated, “To err is human”. Studies from the highway traffic safety and aviation safety literature suggest that the majority of accidents (some statistics would say that as many as 70% of all accidents) are, in fact, associated with human error.37–40 The key issue is to fully acknowledge the fact that humans will make mistakes and to therefore design systems that will identify these errors early and prevent them from escalating to the point of causing harm. By analyzing the systems issues that cause accidents rather than blaming individuals, commercial aviation and other industries have been able to dramatically improve their safety record.41

Virtually all accidents are associated with systems factors. Two prevailing schools of thought have helped safety experts focus on these systems factors – HRT and NAT. HRT is based on the premise that errors can be prevented through top leadership commitment to a culture of safety and reliability. HRT was initially developed by a group of researchers from the University of California, Berkeley (Todd LaPorte, Gene Rochlin, and Karlene Roberts), based upon their initial work with aircraft carrier flight operations (in partnership with Rear Admiral Tom Mercer, on the USS Carl Vinson), the Federal Aviation Administration’s air traffic control, and nuclear power plant operations (largely at Pacific Gas and Electric’s Diablo Canyon facility in southern California).42–47 The theory was further refined by Schulman48 and Weick and Sutcliffe.49 The fundamental concept in HRT is that there are so-called high reliability organizations (HROs), which are defined as organizations that possess certain cultural characteristics that allow them to operate in highly dangerous environments with near-perfect safety records. In this context, organizations should learn from HROs and try to adopt some of these characteristics in order to improve safety, by ultimately becoming HROs themselves. To this end, an entire consultative industry has developed around the belief that organizations can improve quality and safety by adopting HRO characteristics.50

In contrast, NAT takes a more pessimistic viewpoint. NAT was developed largely by Perrow, following his thorough analysis of the Three Mile Island nuclear power plant accident.51 NAT assumes that accidents are inevitable (it is indeed “normal” to experience accidents), due to the inherent complexity of some organizations. In a complex system, it is difficult to predict all of the ways that a system can fail. Complex systems are often non-linear, interdependent, and highly connected. NAT also predicts that systems will fail due to a property called tight coupling. Tight coupling causes a small defect or failure to quickly scale out of control – in this kind of system, it is difficult to stop an impending disaster once a chain of events has been initiated. Perrow would definitely consider health organizations to be complex and tightly coupled, suggesting that accidents are inevitable – indeed, they are normal. Unfortunately, Perrow’s NAT does not offer much in the way of helpful advice on how to address the fact that accidents are inevitable. The strength of NAT is that it provides a framework with which to view complex systems and the associated risks of system failure. Rather than viewing HRT and NAT as mutually exclusive, several authorities now recognize that these two theories provide complementary insight into how systems factors can be designed in such a way to minimize the level of risk in order to improve safety.52–54 Indeed, some of the elements of HRT described below address some of the points raised by Perrow’s NAT.

HROs

From a systems engineering perspective, reliability is defined as the absence of undesirable or unwanted process variation, often resulting in error-free operation over a sustained period of time.55,56 Reliability is commonly expressed as an inverse of a system’s failure or defect rate. For example, if a system experiences one defect out of every ten chances, the defect rate is 10% (1/10) and the reliability is expressed as 10⁻¹.55,56 In health care, the rate of CLA-BSIs is commonly expressed as the number of infections per 1,000 central line days (the total number of central line days for all patients in the hospital in a certain period of time, typically a month or year). If a hospital has a CLA-BSI rate of one infection per 1,000 central line days, the reliability of this particular system would be denoted as 10⁻³. Table 1 provides a comparison of different reliability measurements for processes in a number of industries and health care.

Table 1 Levels of reliability
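The power-of-ten notation described above can be illustrated with a short calculation. The following is a minimal sketch, not a method taken from the cited sources: the function names `defect_rate` and `reliability_exponent` are hypothetical, and rounding to the nearest order of magnitude is an assumption made here for rates that are not exact powers of ten.

```python
import math


def defect_rate(defects: int, opportunities: int) -> float:
    """Defects divided by opportunities (eg, infections per central line day)."""
    if opportunities <= 0:
        raise ValueError("opportunities must be positive")
    return defects / opportunities


def reliability_exponent(rate: float) -> int:
    """Return k such that the defect rate is roughly 10**k.

    Rounding to the nearest whole order of magnitude is an assumption
    of this sketch, not something specified in the text.
    """
    if rate <= 0:
        raise ValueError("rate must be positive")
    return round(math.log10(rate))


# The two worked examples from the text.
one_in_ten = defect_rate(1, 10)        # one defect in every ten chances
clabsi = defect_rate(1, 1000)          # one CLA-BSI per 1,000 central line days

for label, rate in [("one in ten", one_in_ten), ("CLA-BSI", clabsi)]:
    print(f"{label}: defect rate {rate:.3%}, reliability 10^{reliability_exponent(rate)}")
```

Under these assumptions, the one-in-ten process maps to 10^-1 and the CLA-BSI example maps to 10^-3, matching the figures given in the text.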

As stated above, HROs are defined as hazardous organizations that operate with exemplary safety records over extended periods of time.42,43,45,49 The processes and systems within an HRO typically operate in the range of 10⁻⁵ or 10⁻⁶ (Table 2). Roberts suggested that HROs could be identified by the following simple question, “How many times could this organization have failed, resulting in catastrophic consequences, but did not?” If the answer to this question is “countless times” (or something on that scale and order), the organization was likely to be an HRO.42,43 The typical HRO is an organization that exists in a hostile environment or at least one that is rich in the potential for errors. Indeed, most HROs are characterized by complex systems that are tightly coupled (mutually interdependent, highly connected, often time-dependent systems with significant redundancy – see discussion on NAT above). The nature of these environments is such that learning through either experimentation or trial-and-error is almost impossible. For example, trying to change the manner in which an aircraft lands on a rolling, pitching flight deck in heavy seas at night is probably ill-advised. Finally, HROs often utilize complex technologies. However, what sets HROs apart from less reliable organizations are five important characteristics that were described by Weick and Sutcliffe.49 “Risk” is really a function of the probability of error and the consequence of that error. HROs minimize risk by reducing the probability of error through: i) a preoccupation with failure, ii) a sensitivity to operations, and iii) a reluctance to simplify. HROs minimize the consequence of errors through: i) a commitment to resilience, and ii) a deference to expertise.49,57

Table 2 System characteristics as they relate to differing levels of reliability
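The statement that risk is a function of the probability of an error and its consequence can be made concrete with a small sketch. The text does not specify the functional form; the multiplicative (expected-loss) form used below is a common convention and is an assumption here, as are the function name `risk` and all of the illustrative numbers.

```python
def risk(probability: float, consequence: float) -> float:
    """Expected-loss style risk: probability of error times its consequence.

    The multiplicative form is an assumption of this sketch; the text says
    only that risk depends on both quantities.
    """
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    if consequence < 0:
        raise ValueError("consequence must be non-negative")
    return probability * consequence


# Hypothetical numbers, chosen only to show the two levers described in the text.
baseline = risk(probability=1e-3, consequence=100.0)

# Lever 1: lower the probability of error (preoccupation with failure,
# sensitivity to operations, reluctance to simplify).
prevention = risk(probability=1e-5, consequence=100.0)

# Lever 2: lower the consequence of an error once it occurs
# (commitment to resilience, deference to expertise).
containment = risk(probability=1e-3, consequence=10.0)

print(f"baseline={baseline:.4f} prevention={prevention:.4f} containment={containment:.4f}")
```

Either lever lowers the product, which is why the five characteristics are grouped into those that reduce the probability of error and those that reduce its consequence.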

Preoccupation with failure

HROs continuously monitor critical processes and proactively conduct risk assessments in order to prevent errors. They focus on their failures as much as (if not more than) on their successes. HROs do not hide their mistakes, but rather view their errors as “opportunities for improvement” and act accordingly. As Thomas J Watson, founder of International Business Machines (IBM), stated, “If you want to increase your success rate, double your failure rate”. Individuals in the HRO will report their mistakes, even when nobody else notices that a mistake has been made, and their managers reward the individuals for reporting their mistakes rather than punishing or blaming them.48,58 For example, Navy pilots are evaluated by one of their peers (the “landing signal officer”) every time that they land a plane on the flight deck of an aircraft carrier. In many cases, the landing is recorded on video and analyzed and critiqued in detail.42,46 The equivalent in health care would be if every surgical procedure performed by an individual surgeon was monitored, recorded on video, and critiqued by a fellow surgeon. Again, as discussed above, most health care organizations have not progressed far enough beyond the deeply ingrained culture of blame to be able to accomplish something that is similar to, but orders of magnitude above and beyond, peer review.59–63

Sensitivity to operations

HROs focus on what happens on the front-line, or sharp end (the personnel or parts of the system that are closest to the action). The individuals on the front-line are provided with the necessary resources to accomplish their work. If a problem does occur, these individuals recognize the problem and are able to contain it before the problem propagates through the rest of the system. Front-line managers have the knowledge and skills to provide assistance whenever necessary. They constantly assess the potential threats to completing the tasks that must be completed.

Situation awareness is defined as the recognition or perception of what is happening, the comprehension of its meaning, and the projection of how what is currently happening will impact the future.64,65 In this context, sensitivity to operations essentially means that individuals in HROs have situation awareness. During the wars in Korea and Vietnam, the US Air Force described pilots who always seemed to know what was going on around them during aerial combat – these pilots had the uncanny ability to observe their opponent’s move and anticipate the next one in a mere fraction of a second.66 These pilots had what was called the ace factor.67

Wayne Gretzky, one of the greatest hockey players in the history of the National Hockey League (his nickname is “The Great One”) was once asked what made him such a great hockey player. Gretzky replied that he skated “where the puck is going, not where it’s been”. Skating to where the puck is going is what situation awareness is all about. There are many examples of situation awareness in health care today.68–71 For example, similar to the ace factor described above, every physician and nurse can usually recall an instance in which a nurse or physician looked at a patient and was quickly able to tell that the patient was going to become critically ill, even before anyone else was worried about the patient.72 “It was like the nurse had a sixth sense of what was going to happen”, many would say. Again, here is a perfect example of situation awareness at its best.

Reluctance to simplify

Individuals in HROs take absolutely nothing for granted. As discussed above, HROs encourage a questioning attitude. Moreover, the simplest explanation is not accepted by HROs – individuals in HROs dig deeper for the real answer to why a particular incident occurred. HROs encourage diversity in experience and opinion. HROs are so-called learning organizations41,54,73–77 – they create environments that are conducive to learning from mistakes and refining processes until they are executed nearly flawlessly. HROs move beyond what is called first-order problem-solving to higher orders of problem-solving.75,78,79 As an example, consider a physician who is placing a central line in a critically ill patient. If all the necessary equipment is not readily available, the physician will likely go find the rest of the equipment and supplies and bring it to the patient’s bedside, so that the central line can be placed. While the initial problem has been solved (through first-order problem-solving), the root cause of the problem (equipment and supplies are not placed in the same area) has not been adequately addressed. HROs would move to second-order problem-solving and create a system where all of the necessary equipment is readily available when needed.80

The US Army was one of the first organizations to systematically review and capture lessons learned on a routine basis, through a process called the “after action review” (AAR). The AAR was originally designed to maximize learning from battlefield simulations,81 though many other organizations have adopted this process as a way to maximize organizational learning.82–84 HROs utilize AARs and other similar techniques in order to take full advantage of learning opportunities, improve key processes, and sustain nearly perfect performance. Many health organizations have adopted a similar technique, called the “root-cause analysis”85 to systematically review events of harm in the hospital setting.86

Commitment to resilience

HROs are resilient systems – they recover quickly from setbacks or other difficult situations. HROs are able to do so through continuous training and re-training. Front-line personnel are trained through simulation and other innovative techniques to complete their jobs with minimal variation in performance. HROs recognize that not every mistake can be prevented. The key to high reliability is to develop strategies, techniques, and systems that will prevent these small mistakes from becoming catastrophic, system-wide failures. For example, every day at sea, everyone on the flight deck of a US Navy aircraft carrier will stand shoulder-to-shoulder and walk from one end of the flight deck to the other, looking for foreign object debris (FOD), in what is called an “FOD walk”. Even small pieces of debris can be deadly if they are sucked up into a jet engine. In health care, there are several potentially analogous examples to the FOD walk, though the lack of widespread implementation of these techniques has limited their utility. For example, Gawande87 and Pronovost and Vohr88 popularized the use of checklists in the operating room and intensive care unit, respectively.89,90 The surgical safety checklist was designed to improve team communication and reduce complications – implementation of this checklist has significantly reduced complications and improved outcomes.91 Daily goal sheets,92,93 standardized order sets, clinical pathways, protocols, structured communication during multi-disciplinary rounds,94,95 and standardized hand-offs96–100 are additional techniques that are analogous to the FOD walk and may improve care delivery in the hospital setting.

Deference to expertise

HROs push decision making to the individuals at the sharp end with the most knowledge, experience, and expertise. The individuals who are most qualified to make a decision are the ones who make the key decisions. Experience and expertise are valued more than hierarchical rank. Individuals in an HRO will take ownership of a problem or issue until it is resolved.

The concept of deference to expertise is not new. One of the major arguments against health care organizations becoming HROs is the fact that health care does not have the hierarchical, command and control leadership structure that is believed to exist in US Navy nuclear-powered aircraft carriers. While it is true that most individuals in the military know to follow orders, the military has, in fact, become less hierarchical to some degree. The fog of war is often used to describe the rapidly changing, ambiguous, volatile, unpredictable nature of fighting on the battlefield. As a famous Marine Corps general once said, “Once the shooting starts, all plans fall apart”. Moreover, the command-and-control structure becomes very difficult to maintain in the middle of a battle. For these reasons, the military has adopted a doctrine called “commander’s intent”, which was first used by the German military during World War II, although it was called “auftragstaktik”.101,102 The concept here is that front-line leaders are provided with mission-type orders that describe the overall goals and objectives of a particular mission, the strategy that will be used, the resources that will be available, and the overall battle plan. These orders also include a description of what the battlefield commander wants to specifically accomplish – the commander’s intent. Based on these orders, the front-line leader can operate with some autonomy to accomplish the overall goals and objectives of the battlefield commander.103 The United States Marine Corps104 and the Australian Army105 have extended this concept with the definition of the strategic corporal, which shares many of the features of the concepts of commander’s intent, auftragstaktik, and deference to expertise (see below).

There are a few examples of deference to expertise in health care today. For example, rapid response systems have been designed as early warning systems to detect hospitalized patients who are clinically deteriorating. These systems were designed to minimize cardiac arrests outside of the intensive care unit – in many systems, bedside nurses (and in some cases, even family members) are empowered to activate the rapid response system without seeking approval from another provider.106,107 The key here is that if a decision needs to be made quickly, it should be made by the individual who has the most experience, knowledge, and expertise. In many cases in health care, as in HROs, that individual is on the sharp end.

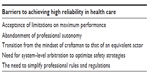

Potential barriers to high reliability health care

Some authors have argued that health care organizations will never become HROs.108,109 Amalberti et al110 have listed five system barriers to achieving high reliability in health care today (Table 3). The current health care delivery system is highly fragmented and exists in silos in which individual providers are isolated. The nature of the current system makes learning from errors extremely difficult. Moreover, there is too much variation and not enough standardization, which further compounds the risk of errors as well as waste and inefficiency. Finally, the relative lack of transparency, as well as the fear of litigation, has been a major barrier to learning from errors.111–113 These barriers are not insurmountable, but overcoming them will require a concerted effort, leadership support, and engagement of the front-line providers.114

Table 3 Barriers to achieving high reliability in health care

Conclusion

There are probably many different approaches to improving patient safety that have not been discussed here. Some authors have questioned whether hospitals can ever become HROs.108 However, there is a growing consensus that adopting some of the behaviors from other HROs will improve patient safety. Some of the recent statistics on patient safety suggest that the HRO approach is on the right track.30 The key point here is that hospitals can no longer ignore the fact that some hospitals are, in fact, moving toward becoming HROs. As Albert Einstein said, “Insanity is doing the same thing over and over again and expecting different results”. With Einstein’s thought in mind, health care organizations need to learn from other industries and continue to borrow, adopt, and/or modify some of the techniques, skills, and knowledge that these industries use to improve quality and safety.

Disclosure

The author reports no conflicts of interest in this work.

References

Medicine Io. To Err is Human: Building a Safer Health Care System. Washington DC: Institute of Medicine and National Academy Press; 1999. | |

Shojania KG. Deaths due to medical error: Jumbo jets or just small propeller planes? BMJ Qual Saf. 2012;21(9):709–712. | |

Brennan TA, Leape LL, Laird NM, et al. Incidence of adverse events and negligence in hospitalized patients. Results of the Harvard Medical Practice Study I. N Engl J Med. 1991;324(6):370–376. | |

Leape LL, Brennan TA, Laird N, et al. The nature of adverse events in hospitalized patients. Results of the Harvard Medical Practice Study II. N Engl J Med. 1991;324(6):377–384. | |

Thomas EJ, Studdert DM, Burstin HR, et al. Incidence and types of adverse events and negligent care in Utah and Colorado. Med Care. 2000;38(3):261–271. | |

Zegers M, de Bruijne MC, Wagner C, et al. Adverse events and potentially preventable deaths in Dutch hospitals: Results of a retrospective patient record review study. Qual Saf Health Care. 2009;18(4):297–302. | |

Woods D, Thomas EJ, Holl J, Altman S, Brennan T. Adverse events and preventable adverse events in children. Pediatrics. 2005;115(1):155–160. | |

Vincent C, Neale G, Woloshynowych M. Adverse events in British hospitals: Preliminary retrospective record review. BMJ. 2001; 322(7285):517–519. | |

Davis P, Lay-Yee R, Briant R, Ali W, Scott A, Schug S. Adverse events in New Zealand public hospitals I: Occurrence and impact. N Z Med J. 2002;115(1167):U271. | |

Baker GR, Norton PG, Flintoft V, et al. The Canadian Adverse Events Study: The incidence of adverse events among hospital patients in Canada. CMAJ. 2004;170(11):1678–1686. | |

Jha AK, Larizgoitia I, Audera-Lopez C, Prasopa-Plaizier N, Waters H, Bates DW. The global burden of unsafe medical care: Analytic modelling of observational studies. BMJ Qual Saf. 2013;22(10):809–815. | |

Sparling KW, Ryckman FC, Schoettker PJ, et al. Financial impact of failing to prevent surgical site infections. Qual Manag Health Care. 2007;16(3):219–225. | |

Nowak JE, Brilli RJ, Lake MR, et al. Reducing catheter-associated bloodstream infections in the pediatric intensive care unit: Business case for quality improvement. Pediatr Crit Care Med. 2010;11(5):579–587. | |

Brilli RJ, Sparling KW, Lake MR, et al. The business case for preventing ventilator-associated pneumonia in pediatric intensive care unit patients. Jt Comm J Qual Patient Saf. 2008;34(11):629–638. | |

Wheeler DS, Whitt JD, Lake M, Butcher J, Schulte M, Stalets E. A case-control study on the impact of ventilator-associated tracheobronchitis in the PICU. Pediatr Crit Care Med. 2015;16(6):565–571. | |

Umscheid CA, Mitchell MD, Doshi JA, Agarwal R, Williams K, Brennan PJ. Estimating the proportion of healthcare-associated infections that are reasonably preventable and the related mortality and costs. Infect Control Hosp Epidemiol. 2011;32(2):101–114. | |

Zimlichman E, Henderson D, Tamir O, et al. Health care-associated infections: A meta-analysis of costs and financial impact on the US health care system. JAMA Intern Med. 2013;173(22):2039–2046. | |

Klevens RM, Edwards JR, Richards CL, et al. Estimating health care-associated infections and deaths in U.S. hospitals, 2002. Public Health Rep. 2007;122(2):160–166. | |

Crandall WV, Davis JT, McClead R, Brilli RJ. Is preventable harm the right patient safety metric? Pediatr Clin North Am. 2012;59(6):1279–1292. | |

Brilli RJ, McClead RE Jr, Davis T, Stoverock L, Rayburn A, Berry JC. The Preventable Harm Index: An effective motivator to facilitate the drive to zero. J Pediatr. 2010;157(4):681–683. | |

Hayward RA, Hofer TP. Estimating hospital deaths due to medical errors: Preventability is in the eye of the reviewer. JAMA. 2001;286(4):415–420. | |

Briant R, Buchanan J, Lay-Yee R, Davis P. Representative case series from New Zealand public hospital admissions in 1998 – III: Adverse events and death. N Z Med J. 2006;119(1231):U1909. | |

Hogan H, Healey F, Neale G, Thomson R, Vincent C, Black N. Preventable deaths due to problems in care in English acute hospitals: A retrospective case record review study. BMJ Qual Saf. 2012;21(9):737–745. | |

Harbarth S, Sax H, Gastmeier P. The preventable proportion of nosocomial infections: An overview of published reports. J Hosp Infect. 2003;54(4):258–266. | |

Smits M, Zegers M, Groenewegen PP, et al. Exploring the causes of adverse events in hospitals and potential prevention strategies. Qual Saf Health Care. 2010;19(5):e5. | |

Scalise D. 5 years after IOM … the evolving state of patient safety. Hosp Health Netw. 2004;78(10):59–62. | |

Leape LL, Berwick DM. Five years after To Err is Human: What have we learned? JAMA. 2005;293(19):2384–2390. | |

Landrigan CP, Parry GJ, Bones CB, Hackbarth AD, Goldmann DA, Sharek PJ. Temporal trends in rates of patient harm resulting from medical care. N Engl J Med. 2010;363(22):2124–2134. | |

Classen DC, Resar R, Griffin F, et al. ‘Global trigger tool’ shows that adverse events in hospitals may be ten times greater than previously measured. Health Aff (Millwood). 2011;30(4):581–589. | |

ahrq.gov [homepage on the Internet]. Interim Update on 2013 Annual Hospital-Acquired Condition Rate and Estimates of Cost Savings and Deaths Averted From 2010 to 2013. Washington, DC: US Department of Health and Human Services; 2015 [cited April 19, 2015]. Available from: http://www.ahrq.gov/professionals/quality-patient-safety/pfp/interimhac2013-ap2.html. Accessed July 14, 2015. | |

Kennedy D. Analysis of sharp-end, frontline human error: Beyond throwing out “bad apples”. J Nurs Care Qual. 2004;19(2):116–122. | |

Hsia DC. Medicare quality improvement: Bad apples or bad systems? JAMA. 2003;289(3):354–356. | |

Canadian Health Services Research Foundation. We can eliminate errors in health care by getting rid of the “bad apples”. J Health Serv Res Policy. 2006;11(1):63–64.

Nicholson D, Hersh W, Gandhi TK, Weingart SN, Bates DW. Medication errors: not just a few “bad apples”. J Clin Outcomes Manag. 2006;13(2):114–115.

Berwick DM. A primer on leading the improvement of systems. BMJ. 1996;312(7031):619–622.

Woodhouse S, Burney B, Coste K. To err is human: Improving patient safety through failure mode and effect analysis. Clin Leadership Manage Rev. 2004;18(1):32–36.

Brehmer B. Variable errors set a limit to adaptation. Ergonomics. 1990;33(10–11):325–332.

McFadden KL, Hosmane BS. Operations safety: An assessment of a commercial aviation safety program. Journal of Operations Management. 2001;19:579–591.

Li WC, Harris D. Pilot error and its relationship with higher organizational levels: HFACS analysis of 523 accidents. Aviat Space Environ Med. 2006;77(10):1056–1061.

Shappell S, Detwiler C, Holcomb K, Hackworth C, Boquet A, Wiegmann DA. Human error and commercial aviation accidents: An analysis using the human factors analysis and classification system. Hum Factors. 2007;49(2):227–242.

Kaissi A. “Learning” from other industries: Lessons and challenges for health care organizations. Health Care Manag (Frederick). 2012;31(1):65–74.

Rochlin G, La Porte T, Roberts K. The self-designing high reliability organization: Aircraft carrier flight operations at sea. Naval War College Review. 1987;42:76–91.

Roberts KH. Some characteristics of one type of high reliability organization. Organization Science. 1990;1:160–176.

Weick KE, Roberts KH. Collective mind in organizations: Heedful interrelating on flight decks. Administrative Science Quarterly. 1993;38:357–381.

Roberts KH. Cultural characteristics of reliability enhancing organizations. Journal of Managerial Issues. 1993;5:165–181.

Roberts K, Rousseau D, La Porte T. The culture of high reliability: Quantitative and qualitative assessment aboard nuclear powered aircraft carriers. J High Technol Manage Res. 1994;5:141–161.

Rochlin GI. Reliable organizations: Present research and future directions. Journal of Contingencies Crisis Management. 1996;4(2):55–59.

Schulman PR. Heroes, organizations, and high reliability. Journal of Contingencies Crisis Management. 1996;4(2):72–82.

Weick KE, Sutcliffe KM. Managing the Unexpected: Assuring High Performance in an Age of Complexity. San Francisco, CA: Jossey-Bass; 2007.

Boin A, Schulman P. Assessing NASA’s safety culture: The limits and possibilities of high-reliability theory. Public Administration Review. 2008;68(6):1050–1062.

Perrow C. Normal Accidents: Living with High Risk Technologies. Princeton, NJ: Princeton University Press; 1984.

Tamuz M, Harrison MI. Improving patient safety in hospitals: Contributions of high-reliability theory and normal accident theory. Health Serv Res. 2006;41(4 Pt 2):1654–1676.

Shrivastava S, Sonpar K, Pazzaglia F. Normal accident theory versus high reliability theory: A resolution and call for an open systems view of accidents. Human Relations. 2009;62:1357–1390.

Cooke DL, Rohleder TR. Learning from incidents: From normal accidents to high reliability. System Dynamics Review. 2006;22:213–239.

Niedner MF, Muething SE, Sutcliffe KM. The high-reliability pediatric intensive care unit. Pediatr Clin North Am. 2013;60(3):563–580.

Luria JW, Muething SE, Schoettker PJ, Kotagal UR. Reliability science and patient safety. Pediatr Clin North Am. 2006;53(6):1121–1133.

Sutcliffe KM. High reliability organizations (HROs). Best Pract Res Clin Anaesthesiol. 2011;25(2):133–144.

Schulman PR. General attributes of safe organisations. Qual Saf Health Care. 2004;13 Suppl 2:ii39–ii44.

Helmreich RL, Merritt A. Culture at Work in Aviation and Medicine. Aldershot: Ashgate; 1998.

Sexton JB, Thomas EJ, Helmreich RL. Error, stress, and teamwork in medicine and aviation: Cross sectional surveys. BMJ. 2000;320(7237):745–749.

Gaba DM. Structural and organizational issues in patient safety: A comparison of health care to other high-hazard industries. Calif Manage Rev. 2001;43:83–102.

Gaba DM, Singer SJ, Sinaiko AD, Bowen JD, Ciavarelli AP. Differences in safety climate between hospital personnel and Naval aviators. Hum Factors. 2003;45(2):173–185.

Loreto M, Kahn D, Glanc P. Survey of radiologist attitudes and perceptions regarding the incorporation of a departmental peer review system. J Am Coll Radiol. 2014;11(11):1034–1037.

Jones DG, Endsley MR. Sources of situation awareness errors in aviation. Aviat Space Environ Med. 1996;67(6):507–512.

Jones DG, Endsley MR. Overcoming representational errors in complex environments. Hum Factors. 2000;42(3):367–378.

Watts BD. Situation Awareness in Air-to-Air Combat and Friction. Washington DC: Institute of National Strategic Studies; 2004.

Spick M. The Ace Factor: Air Combat and the Role of Situation Awareness. Annapolis: Naval Institute Press; 1988.

Wright MC, Taekman JM, Endsley MR. Objective measures of situation awareness in a simulated medical environment. Qual Saf Health Care. 2004;13 Suppl 1:i65–i71.

Schulz CM, Endsley MR, Kochs EF, Gelb AW, Wagner KJ. Situation awareness in anesthesia: Concept and research. Anesthesiology. 2013;118(3):729–742.

Brady PW, Wheeler DS, Muething SE, Kotagal UR. Situation awareness: A new model for predicting and preventing patient deterioration. Hosp Pediatr. 2014;4(3):143–146.

Brady PW, Muething S, Kotagal U, et al. Improving situation awareness to reduce unrecognized clinical deterioration and serious safety events. Pediatrics. 2013;131(1):e298–e308.

Crandall B, Getchell-Reiter K. Critical decision method: A technique for eliciting concrete assessment indicators from the intuition of NICU nurses. ANS Adv Nurs Sci. 1993;16(1):42–51.

Resar RK. Making noncatastrophic health care processes reliable: Learning to walk before running in creating high-reliability organizations. Health Serv Res. 2006;41(4 Pt 2):1677–1689.

Mulholland P, Zdrahal Z, Domingue J. Supporting continuous learning in a large organization: The role of group and organizational perspectives. Appl Ergon. 2005;36(2):127–134.

Garvin DA, Edmondson AC, Gino F. Is yours a learning organization? Harv Bus Rev. 2008;86(3):109–116, 134.

Garvin DA. Building a learning organization. Harv Bus Rev. 1993;71(4):78–91.

Firth-Cozens J. Cultures for improving patient safety through learning: The role of teamwork. Qual Health Care. 2001;10 Suppl 2:ii26–ii31.

Tucker AL, Edmondson AC, Spear S. When problem solving prevents organizational learning. Journal of Organizational Change Management. 2002;15(2):122–137.

Edmondson AC. Learning from failure in health care: Frequent opportunities, pervasive barriers. Qual Saf Health Care. 2004;13 Suppl 2:ii3–ii9.

Pronovost P, Needham D, Berenholtz S, et al. An intervention to decrease catheter-related bloodstream infections in the ICU. N Engl J Med. 2006;355(26):2725–2732.

Headquarters, Department of the Army. A Leader’s Guide to After-Action Reviews. Washington DC: Department of the Army; 1993.

Darling M, Parry C, Moore J. Learning in the thick of it. Harv Bus Rev. 2005;83(7):84–92, 192.

Cronin G, Andrews S. After action reviews: A new model for learning. Emerg Nurse. 2009;17(3):32–35.

Allen JA, Baran BE, Scott CW. After-action reviews: A venue for the promotion of safety climate. Accid Anal Prev. 2010;42(2):750–757.

Shaqdan K, Aran S, Daftari Besheli L, Abujudeh H. Root-cause analysis and health failure mode and effect analysis: Two leading techniques in health care quality assessment. J Am Coll Radiol. 2014;11(6):572–579.

Muething SE, Goudie A, Schoettker PJ, et al. Quality improvement initiative to reduce serious safety events and improve patient safety. Pediatrics. 2012;130(2):e423–e431.

Gawande A. The Checklist Manifesto. New York: Metropolitan Books; 2009.

Pronovost P, Vohr E. Safe Patients, Smart Hospitals: How One Doctor’s Checklist can Help Us Change Health Care from The Inside Out. New York: Hudson Street Press; 2010.

Gawande A. The checklist: If something so simple can transform intensive care, what else can it do? New Yorker. 2007;10:86–101.

Hales BM, Pronovost PJ. The checklist – a tool for error management and performance improvement. J Crit Care. 2006;21(3):231–235.

Haynes AB, Weiser TG, Berry WR, et al. A surgical safety checklist to reduce morbidity and mortality in a global population. N Engl J Med. 2009;360(5):491–499.

Phipps LM, Thomas NJ. The use of a daily goals sheet to improve communication in the paediatric intensive care unit. Intensive Crit Care Nurs. 2007;23(5):264–271.

Agarwal S, Frankel L, Tourner S, McMillan A, Sharek PJ. Improving communication in a pediatric intensive care unit using daily patient goal sheets. J Crit Care. 2008;23(2):227–235.

Schwartz JM, Nelson KL, Saliski M, Hunt EA, Pronovost PJ. The daily goals communication sheet: A simple and novel tool for improved communication and care. Jt Comm J Qual Patient Saf. 2008;34(10):608–613, 561.

Vats A, Goin KH, Villareal MC, Yilmaz T, Fortenberry JD, Keskinocak P. The impact of a lean rounding process in a pediatric intensive care unit. Crit Care Med. 2012;40(2):608–617.

Catchpole KR, de Leval MR, McEwan A, et al. Patient handover from surgery to intensive care: Using Formula 1 pit-stop and aviation models to improve safety and quality. Paediatr Anaesth. 2007;17(5):470–478.

Joy BF, Elliot E, Hardy C, Sullivan C, Backer CL, Kane JM. Standardized multidisciplinary protocol improves handover of cardiac surgery patients to the intensive care unit. Pediatr Crit Care Med. 2011;12(3):304–308.

Zavalkoff SR, Razack SI, Lavoie J, Dancea AB. Handover after pediatric heart surgery: A simple tool improves information exchange. Pediatr Crit Care Med. 2011;12(3):309–313.

Chen JG, Wright MC, Smith PB, Jaggers J, Mistry KP. Adaptation of a postoperative handoff communication process for children with heart disease: A quantitative study. Am J Med Qual. 2011;26(5):380–386.

Starmer AJ, Spector ND, Srivastava R, et al. Changes in medical errors after implementation of a handoff program. N Engl J Med. 2014;371(19):1803–1812.

Bashista RJ. Auftragstaktik: It’s more than just a word. ARMOR. 1994;103:19.

Goal Systems International. Dettmer HW. Business and the blitzkrieg. Port Angeles, WA: Goal Systems International; 2005. Available from: http://www.goalsys.com/systemsthinking/documents/Part-2-BusinessandtheBlitzkrieg.pdf. Accessed July 14, 2015.

Pech RJ, Durden G. Manoeuvre warfare: A new military paradigm for business decision making. Management Decision. 2003;41(2):168–179.

au.af.mil [homepage on the Internet]. Krulak CC. The strategic corporal: Leadership in the three block war. Marines Magazine; 1999. Available from: http://www.au.af.mil/au/awc/awcgate/usmc/strategic_corporal.htm. Accessed July 14, 2015.

Liddy L. The strategic corporal: Some requirements in training and education. Australian Army Journal. 2005;2(2):139–147.

Brilli RJ, Gibson R, Luria JW, et al. Implementation of a medical emergency team in a large pediatric teaching hospital prevents respiratory and cardiopulmonary arrests outside the intensive care unit. Pediatr Crit Care Med. 2007;8(3):236–247.

Brady PW, Zix J, Brilli R, et al. Developing and evaluating the success of a family activated medical emergency team: A quality improvement project. BMJ Qual Saf. 2015;24(3):203–211.

Bagnara S, Parlangeli O, Tartaglia R. Are hospitals becoming high reliability organizations? Appl Ergon. 2010;41(5):713–718.

Nembhard IM, Alexander JA, Hoff TJ, Ramanujam R. Why does the quality of health care continue to lag? Insights from management research. Academy of Management Perspectives. 2009;23:24–42.

Amalberti R, Auroy Y, Berwick D, Barach P. Five system barriers to achieving ultrasafe health care. Ann Intern Med. 2005;142(9):756–764.

Mello MM, Senecal SK, Kuznetsov Y, Cohn JS. Implementing hospital-based communication-and-resolution programs: Lessons learned in New York City. Health Aff (Millwood). 2014;33(1):30–38.

Mello MM, Boothman RC, McDonald T, et al. Communication-and-resolution programs: The challenges and lessons learned from six early adopters. Health Aff (Millwood). 2014;33(1):20–29.

Bell SK, Smulowitz PB, Woodward AC, et al. Disclosure, apology, and offer programs: Stakeholders’ views of barriers to and strategies for broad implementation. Milbank Q. 2012;90(4):682–705.

Chassin MR, Loeb JM. High-reliability health care: Getting there from here. Milbank Q. 2013;91(3):459–490.

© 2015 The Author(s). This work is published and licensed by Dove Medical Press Limited. The full terms of this license are available at https://www.dovepress.com/terms.php and incorporate the Creative Commons Attribution - Non Commercial (unported, v3.0) License.

By accessing the work you hereby accept the Terms. Non-commercial uses of the work are permitted without any further permission from Dove Medical Press Limited, provided the work is properly attributed. For permission for commercial use of this work, please see paragraphs 4.2 and 5 of our Terms.