Journal of Healthcare Leadership, Volume 8

Design, implementation, and demographic differences of HEAL: a self-report health care leadership instrument

Authors Murphy KR, McManigle JE, Wildman-Tobriner BM, Little Jones A, Dekker TJ, Little BA, Doty JP, Taylor DC

Received 5 June 2016

Accepted for publication 15 July 2016

Published 20 October 2016 Volume 2016:8 Pages 51–59

DOI https://doi.org/10.2147/JHL.S114360

Checked for plagiarism Yes

Review by Single anonymous peer review

Editor who approved publication: Professor Russell Taichman

Kelly R Murphy, John E McManigle, Benjamin M Wildman-Tobriner, Amy Little Jones, Travis J Dekker, Barrett A Little, Joseph P Doty, Dean C Taylor

Duke Healthcare Leadership Program, Duke University School of Medicine, Durham, NC, USA

Abstract: The medical community has recognized the importance of leadership skills among its members. While numerous leadership assessment tools exist at present, few are specifically tailored to the unique health care environment. The study team designed a 24-item survey (Healthcare Evaluation & Assessment of Leadership [HEAL]) to measure leadership competency based on the core competencies and core principle of the Duke Healthcare Leadership Model. A novel digital platform was created for use on handheld devices to facilitate its distribution and completion. This pilot phase involved 126 health care professionals self-assessing their leadership abilities. The study aimed to determine both the content validity of the survey and the feasibility of its implementation and use. The survey was easy to complete on the digital platform, and there were no technical problems with survey use or data collection. With regard to reliability, initial survey results revealed that each core leadership tenet nearly met or exceeded the reliability cutoff of 0.7. In self-assessment of leadership, women scored themselves higher than men in questions related to patient centeredness (P=0.016). When stratified by age, younger providers rated themselves lower with regard to emotional intelligence and integrity. There were no differences in self-assessment when stratified by medical specialty. While only a pilot study, initial data suggest that HEAL is a reliable and easy-to-administer survey for health care leadership assessment. Differences in responses by sex and age with respect to patient centeredness, integrity, and emotional intelligence raise questions about how providers view themselves amid complex medical teams. As the survey is refined and further administered, HEAL will be used not only as a self-assessment tool but also in “360” evaluation formats.

Keywords: emotional intelligence, patient centeredness, sex, specialty, age, leadership assessment

Introduction

The medical community increasingly values competency in leadership in its health care providers.1–8 While the concept of a “leader” has been described extensively across disciplines, it has yet to be fully defined with respect to health care. In part, this stems from the diverse competencies and complex interactions inherent to health care, a potentially hierarchical setting in which both “formal” and “informal” leaders are present. Health care teams consist of a myriad of provider types (eg, medical doctors, registered nurses, and physician assistants) and levels of proficiency (from student to independent professional), making a standardized definition of leadership that applies to all health care providers problematic. Whether a person holds a formal title such as “chairman of medicine” or an informal position such as “upper level resident,” each provider is charged with the responsibility of displaying leadership for colleagues and for patients.9

Leadership skills in this domain are necessary for coordination of the increasingly intricate care that patients receive in modern medicine. Whether through modeling, organizing, motivating, or otherwise, both formal and informal leaders manage individual patient interactions, small treatment teams, larger departments, hospitals, or even entire health systems. Within teams, degrees of hierarchy dictate some leadership boundaries, but a spectrum of leadership is demanded of each person in health care – from the student who must show communication skills in patient presentations, to the nurse who must convey the needs of the patient to the physician, and to the therapist who must motivate the patient toward goals of rehabilitation. Providers must know when to take charge but equally must know when to show deference to either a colleague or a patient.

While the degree of complexity may be similar to fields outside of medicine, the “life and death” decisions and emphasis on patient autonomy further underscore the uniqueness and need for medical leadership. Patient outcomes, patient satisfaction, and employee success may be related to having strong leaders, and critical moments of care such as emergent codes, goals of care conversations, and trainee missteps present opportunities to highlight the leadership abilities, or lack thereof, of a provider. Inherently, there can be knowledge differentials between providers and patients – patients often seek the influence of the provider who is well learned in specific aspects of medicine to guide them and make appropriate recommendations. The patient thus relies on the communication skills and integrity of the provider to minimize the knowledge differential, thereby empowering the patient toward understanding critical aspects of their disease and treatment options. The need for leaders in medicine who neither neglect this responsibility nor take advantage of this power differential is imperative.

Acknowledging the importance of specifically physician leaders as both formal and informal leaders, medical schools increasingly incorporate leadership into their curriculum through focused seminars and class settings.3,7,10,13–16 Some residency programs similarly integrate leadership teaching and competency measures into their training. There are no data, however, to discern if this additional training improves skills. Identifying a model specific to health care leadership that can apply to both formal and informal leaders is essential to evaluate providers on leadership ability and to develop skills that nurture leadership in the health care setting. A simple, validated, clinically relevant, and generalizable means of measuring leadership competency would address this need by providing a basis upon which clinicians are guided through professional development in leadership.7,17 Furthermore, leadership skills reflect aspects of personality and character that often vary according to age and sex.18–24 Specifically, self-reported ratings show significant differences by sex; men often overreport and women often underreport their abilities.25 To date, it is not known whether there are differences in leadership strengths and weaknesses by specialty or provider pathway.

To further understand the concept of leadership in health care, the study team identified an established model upon which to formulate an assessment of leadership competencies in health care. The Duke Healthcare Leadership Program at Duke University Medical Center created one such model that represents the key principles of serving as a leader in health care based on extensive literature review, focus groups, and iterations of the model from medical provider feedback (Figure 1). The model contains five core competencies (critical thinking, emotional intelligence, teamwork, selfless service, and integrity), which surround the central core principle of patient centeredness. The Duke Healthcare Leadership Model establishes a framework for the essential characteristics and skills of a medical leader in both formal and informal leadership roles. As the name indicates, the model is generalizable to all providers in the health care setting and can be applied to any level of training or care setting. The model predicates what it means to be a leader in medicine in order to 1) cultivate a culture of leadership, 2) provide a springboard for skill development strategies, 3) allow subsequent assessment of such predefined tenets, and 4) assess the impact of the leadership training and propagative efforts.

Figure 1 Duke Healthcare Leadership Model.

The primary purpose of this study was to design an instrument to assess the tenets of the Duke Healthcare Leadership Model, initially through self-report, but with flexibility to apply in peer and multirater evaluations. The secondary purpose of this study was to investigate possible differences in self-reported leadership competency by specialty and sex, as well as across age groups, according to the self-reported data from instrument administration. The investigators hypothesized differences in self-reported leadership by specialty and sex, predicting that providers in nonmedicine specialties and men would self-report higher than their medicine and female colleagues. By advancing the understanding and utilization of this instrument, the team aimed to lay the foundation for future leadership evaluation and eventually more customized and effective leadership training to promote a more tangible understanding of leadership in health care settings.

Methods

Content creation

After reviewing the Duke Healthcare Leadership Model,16 the study team designed a self-assessment instrument that allowed individuals to appraise their proficiency on each of the six tenets of the model. Fifty questions were formulated that related to the five core competencies and one core principle. Efforts were made to keep each item as focused and simple as possible. The instrument was named the Healthcare Evaluation & Assessment of Leadership (HEAL) and was designed to be applicable across various health care settings and health care providers. In this study, the instrument’s content validity and construct validity were specifically evaluated with respect to inpatient physicians, medical students, and physician assistants, with future plans to extend analysis to additional health care providers and settings. The study was reviewed by the institutional review board of Duke University School of Medicine and was determined to be exempt from further review.

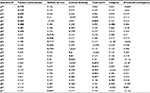

Initial steps of validating the instrument’s content involved a focus group of ~40 Duke University physician medical leaders at an alumni conference. In addition, two steering questions were included per item (“Is this question relevant to healthcare leadership?” and “Is this question clearly worded?”) to evaluate the item’s usefulness with respect to medical leadership and clarity of wording. After initial review by the focus group, 25 questions with low scores were eliminated (based on the second steering question), leaving 25 questions. Three of the competencies had five questions each, while emotional intelligence had six questions and selfless service had four. Five questions, distributed across the competencies, incorporated aspects of the “patient centeredness” core principle. Table 1 presents the results of the focus group’s efforts at identifying questions specific to the five competencies.

In addition to these leadership evaluation questions, each respondent filling in the questionnaire was prompted to answer demographic questions (age, sex, job title, clinical field, degrees earned, job duties, team size, and number of direct reports). To preserve anonymity, demographic questions accepted multiple-choice, binned response options.

Interface creation and design

The instrument was delivered via a custom web interface programmed using open-source tools including PHP Version 5.6 (PHP Group) on the server side and JavaScript (Ecma International, Geneva, Switzerland) on the client side. The open-source jQuery Mobile framework (jQuery Foundation, Boston, MA, USA) was used to facilitate a mobile device-friendly survey interface, and the instrument was supported by a MySQL (Oracle Corporation, Morrisville, NC, USA) database backend. The interface was hosted on a private virtual machine (Bluehost, Orem, UT, USA) running the Apache web server (Version 2.2.31; The Apache Software Foundation, Los Angeles, CA, USA) on a GNU/Linux operating system (kernel Version 3.12).

Upon reaching the instrument website, users were invited to enter a “ticket code” that permitted access to the set of questions. The “ticket code” also made the instrument easier to use on mobile devices. After the ticket code was entered, the web system presented the respondent with demographic questions, followed by leadership evaluation questions. The leadership evaluation questions were presented in a random order, and each was rated on a visual analog scale anchored from “disagree strongly” to “agree strongly” (Figure 2). Answers were stored in the database as integers between -50 (disagree strongly) and 50 (agree strongly). Each question could also be answered with “not applicable.” At the conclusion of the survey, respondents were invited to submit feedback.
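The scoring and ordering rules described above can be illustrated with a minimal Python sketch. This is not the study's PHP and JavaScript implementation; the function names, the representation of a slider position as a fraction between 0 and 1, and the use of `None` for “not applicable” are assumptions made for illustration only.

```python
import random

# Stored range stated in the text: -50 (disagree strongly) to 50 (agree strongly).
SCALE_MIN, SCALE_MAX = -50, 50


def slider_to_score(fraction):
    """Map a slider position in [0.0, 1.0] to an integer in [-50, 50].

    A "not applicable" answer is represented here as None (an assumption)
    and is passed through unchanged.
    """
    if fraction is None:
        return None
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("slider position must lie between 0 and 1")
    return round(SCALE_MIN + fraction * (SCALE_MAX - SCALE_MIN))


def presentation_order(question_ids, seed=None):
    """Return a shuffled copy of the question ids for one respondent."""
    rng = random.Random(seed)
    order = list(question_ids)  # copy so the caller's list is untouched
    rng.shuffle(order)
    return order
```

Shuffling a copy per respondent keeps the master question list stable, so stored answers can still be keyed by question number regardless of the order in which they were shown.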

Figure 2 Instrument interface with example question.

Responses were stored in the database as they were submitted, allowing analysis of partial responses. A custom program written in Python (Python Software Foundation, Beaverton, OR, USA) transformed responses into tabular form for analysis.
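As a rough illustration of the transformation step, the sketch below pivots one-row-per-answer records into one row per respondent. The record layout `(ticket_code, question_id, score)` and the function name are hypothetical; the study's actual database schema and Python program are not described in the text.

```python
def to_table(rows):
    """Pivot (ticket_code, question_id, score) records into a table.

    Returns (header, table). Questions a respondent never answered appear
    as None, so partial responses are kept rather than dropped.
    """
    rows = list(rows)  # allow any iterable, since the rows are read twice
    question_ids = sorted({question for _, question, _ in rows})

    by_ticket = {}
    for ticket, question, score in rows:
        by_ticket.setdefault(ticket, {})[question] = score

    header = ["ticket"] + question_ids
    table = [
        [ticket] + [answers.get(question) for question in question_ids]
        for ticket, answers in sorted(by_ticket.items())
    ]
    return header, table
```

For example, two answers from one ticket and one answer from another yield two table rows, with `None` marking the unanswered question in the second row.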

Survey distribution

Over the course of 3 months, members of the study team provided printed handouts of the instrument’s weblink to treatment teams at teaching conferences within the Duke University Hospital. Regardless of team rotation, a provider was only able to complete the survey one time over this period. Respondents were allowed to complete it immediately or at a later time, but the study team was not able to observe the responses. All information was anonymized by the use of “ticket codes,” and no names were collected. Personal, de-identified information collected included training level, sex, specialty, and race. The instrument was distributed to physicians of varying training levels, physician assistants, and medical students. It was not given at this stage of analysis to nurses or other members of the health care team, such as pharmacists, social workers, and physical therapists.

Statistics

Routine descriptive statistics were used to summarize the data. Reliability analysis was completed with Cronbach’s alpha with an a priori cutoff of 0.70. Incomplete responses were excluded from analysis in the competencies from which they were missing. The study team completed a factor analysis (principal component analysis) to examine factors for independence and contribution to variance. Varimax rotation with Kaiser normalization allowed evaluation of the factors in a rotated component matrix. Items that loaded on more than one factor, or that were the only item to load on a factor, were identified for additional subjective evaluation in order to determine whether rewording or exclusion was necessary. Factor loadings of ≥0.4 were considered for interpretive purposes. Univariate analysis of variance was used to compare the aggregate scores on each of the six tenets by sex, age, and specialty with the a priori significance set to P<0.05.
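For reference, the standard definition of Cronbach’s alpha for a scale of k items is given below. The notation is ours rather than the study's: σ_i² denotes the variance of item i across respondents and σ_T² the variance of each respondent's total score over the k items.

$$
\alpha = \frac{k}{k-1}\left(1 - \frac{\sum_{i=1}^{k}\sigma_i^{2}}{\sigma_T^{2}}\right)
$$

Alpha approaches 1 when the items vary together, so that the variance of the total greatly exceeds the sum of the item variances, and falls toward 0 when the items are unrelated.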

Results

Demographics

In all, 200 invitations were distributed to participate, and a total of 126 unique health care providers completed the instrument (63% response rate), of whom 50% were female. Specialties of internal medicine, pediatrics, surgery, and radiology were represented. Clinician types included physician assistants (2%), residents (79%), clinical-year medical students (7%), and attending physicians (11%). The most common specialties were internal medicine (37%), radiology (34%), and pediatrics (17%).

Item inclusion

Survey respondents provided feedback on question wording and content. From this, the study team arrived at a 24-question survey that uses the question wording of the second iteration of the survey (Table 1). Among all questions, patient centeredness was an overlying theme in five of the 24 questions.

Reliability and factor analysis

Using Cronbach’s alpha, each competency nearly met or exceeded the reliability cutoff of 0.7: emotional intelligence 0.664, selfless service 0.689, critical thinking 0.691, patient centeredness 0.725, teamwork 0.726, and integrity 0.738. Six factors explained 62% of the variance. These factors loaded items in a rotated component matrix (Table 2). Items that loaded to multiple factors were further compared against their reliability factors. One item within emotional intelligence, “I am aware of my personal limitations” was the only item to load in factor 6 and also provided the greatest improvement in reliability when excluded. With exclusion of this item, Cronbach’s alpha for emotional intelligence increased to 0.67. As such, this item was considered for exclusion. No other items improved reliability with elimination and were thus left in the survey.
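The “alpha if item deleted” check described above can be sketched in plain Python. This is a minimal illustration, not the statistical software used in the study; the function names and the row-per-respondent input layout are assumptions, and respondents with any missing item (`None`) are dropped, mirroring the exclusion of incomplete responses stated in the Methods.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for rows of item scores (one row per respondent).

    Rows containing None are excluded (complete-case analysis).
    """
    complete = [row for row in responses if None not in row]
    if len(complete) < 2:
        raise ValueError("need at least two complete respondents")
    k = len(complete[0])
    if k < 2:
        raise ValueError("need at least two items")
    item_variances = [variance(column) for column in zip(*complete)]
    total_variance = variance([sum(row) for row in complete])
    # Raises ZeroDivisionError if every respondent has the same total score.
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


def alpha_if_item_deleted(responses):
    """Return {item_index: alpha recomputed without that item}."""
    k = len(responses[0])
    result = {}
    for drop in range(k):
        reduced = [
            [score for index, score in enumerate(row) if index != drop]
            for row in responses
        ]
        result[drop] = cronbach_alpha(reduced)
    return result
```

An item whose removal raises alpha above the full-scale value is a candidate for rewording or exclusion, which is the reasoning applied to the “personal limitations” item.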

Table 2 Principal component analysis summary. Notes: Refer to Table 1 for verbal descriptions of each question number. Factor loadings in bold (≥0.4) were considered for interpretive purposes. Factor loading on one category reflects concept independence for that particular question, whereas multiple factor loadings ≥0.4 suggest the question embodies several leadership categories. Concept independence was considered desirable for validity purposes.

Self-assessment scores

Sex differences

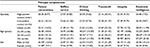

Results of the self-assessment scores are presented in Table 3. There was a statistically significant sex difference (P=0.016) in self-assessment scores on the core principle of patient centeredness (questions 64, 71, 73, 78, and 82; Table 1), with women (31.08±9.01) assessing themselves higher than men (26.44±11.83). A similar, though not statistically significant (P=0.056), difference emerged between the sexes for self-assessment of selfless service with women (29.66±9.99) rating themselves higher than men (25.87±11.83).

Table 3 Mean (standard deviation) of the category scores by patient contact, age, and sex. Note: *P≤0.05.

Age differences

Respondent ages were grouped as 25–29 years (n=56), 30–34 years (n=37), and 35+ years (n=16). Seventeen responses were excluded for missing age information. The youngest group had lower emotional intelligence self-assessments with a mean of 23.25 (SD 8.69) compared to both older groups (30–34 years: 28.64 [SD 10.10] and 35+ years: 27.25 [SD 8.18]). The oldest age group had slightly higher self-assessments of integrity with a mean of 30.92 (SD 8.04) compared to the middle-age group’s mean of 29.09 (SD 8.34). Both older groups self-reported much higher integrity scores than the youngest age group (22.19 [SD 10.86]). For critical thinking, the middle-age group had the highest self-reported values (26.41 [SD 7.71]) compared to both older providers (22.64 [SD 11.25]) and younger providers (18.24 [SD 10.39]). In analysis of variance, there were statistically significant age differences for three of the core competencies: critical thinking (F=4.17; P=0.018), integrity (F=8.22; P<0.001), and emotional intelligence (F=8.02; P=0.001). Across all tenets, the youngest age group reported lower self-ratings than the older age groups (Table 3).
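The F statistics above come from a one-way analysis of variance across the three age groups. As a minimal sketch of how such a statistic is computed from raw scores (the function name and input layout are assumptions, and this is not the software used in the study):

```python
from statistics import mean


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of groups of scores."""
    groups = [list(group) for group in groups]
    k = len(groups)
    n_total = sum(len(group) for group in groups)
    if k < 2 or n_total <= k:
        raise ValueError("need at least two groups and more scores than groups")

    grand_mean = sum(sum(group) for group in groups) / n_total
    group_means = [mean(group) for group in groups]

    # Between-group sum of squares: group means around the grand mean.
    ss_between = sum(
        len(group) * (m - grand_mean) ** 2
        for group, m in zip(groups, group_means)
    )
    # Within-group sum of squares: scores around their own group mean.
    ss_within = sum(
        (score - m) ** 2
        for group, m in zip(groups, group_means)
        for score in group
    )

    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within
```

With three age groups the between-group degrees of freedom are 2. Converting F to a P value requires the F distribution, which the Python standard library does not provide; a statistics package would be needed for that step.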

Specialty differences

For analysis purposes, specialties were grouped in a clinically relevant way as high patient-contact providers (internal medicine and pediatrics, n=37) and low patient-contact providers (radiology, n=34). Surgical providers (n=14) and those who were “other” or “no response” (n=12) were excluded due to low sample size. There were no statistically significant differences according to the grouping of specialties analyzed.

Discussion

Displaying leadership as a health care provider constitutes a necessary component of providing effective patient care.3,5,8 This health care leadership should be considered distinct from leadership in business, law, and government because of two key differences: the patient–provider interaction and the service-oriented nature of health care.4,9,11,12,26 While providers must assert their experience and knowledge to care for a patient, they must also respect patient autonomy – a principle increasingly emphasized in 21st century medicine. Similarly, the most senior member of a treatment team must defer at times to the trainees to foster their independence as providers and their leadership development. At other times, providers differ in the amount of time they interact with the patient, which can dictate leadership contexts. For example, a nurse might frequently exert leadership over a physician because nurses are more often at the bedside and can advocate the wishes of the patient to the physician. The complexity and dynamic nature of leadership in medicine could be described in countless ways – at any moment a medical provider must be ready to lead and at any moment ready to follow and show deference to another provider or, more importantly, the patient. Whether or not they assume a specified leadership position, health care providers inevitably serve as both formal and informal leaders throughout their careers, such that a health care leadership model must account for both forms of leadership to be widely applicable and effective.3 As advances in leadership training continue to improve the skills of trainees, it is necessary to provide leadership feedback to ensure this training is effective and that providers continue to display leadership throughout their careers.

The surplus of surveys and evaluations in the medical profession and time constraints make the speed of completion a high priority in designing a leadership instrument. From focus group input on “survey burnout” and well-established health care provider burnout from increased paperwork and documentation demands,27,28 the investigators aimed to design a survey that could be completed quickly and during clinical shifts. Completion time is an especially important consideration for surveys that assume a “360” format. As such, creating a reliable and valid health care leadership instrument that was efficient and applicable to all training levels and provider types was of highest priority. Previous work by the Duke Healthcare Leadership Program established the core competencies and principle of a health care leader (Figure 1).16 Using these core competencies and the core principle of patient centeredness, a 25-question leadership survey was designed, which was widely distributed to physicians, medical students, and physician assistants of inpatient provider teams within the Duke University Medical Center. From these responses, self-assessed leadership qualities were evaluated, as well as the reliability of each question in relation to other items in each of the leadership components. Reliability and factor analysis allowed the elimination of one question, resulting in a final 24-question iteration of the survey.

Importantly, the results showed a sex difference in the self-assessment of the core principle, patient centeredness, and a marginally nonsignificant difference in the competency of selfless service, with women rating themselves higher in these categories compared to men. Leadership differences by sex have been well established in the literature.13,21,29–36 Dickson et al1 showed only sex to be associated with leadership competency in medicine, and similar to this study, did not find differences according to specialty. Differences in sex have also been reported in self-assessed abilities, including those of intelligence,37 research talent,18 empathy,19 and clinical skills.23,26 Women typically underpredict their leadership abilities compared to men.25 The finding that women self-assessed their patient centeredness higher than men may reflect several possibilities. Women may be truly more patient centered, perceive themselves as more patient centered, or value patient centeredness more than men. Women might value placing the patient first more highly and therefore rate it as more important. Another explanation could be the ability of women to prioritize the patient in interactions based on published sex differences according to emotional skills, medical communication, and personality.38–42 McKinley et al22 reported sex differences in emotional intelligence of resident physicians. In the present study, there were no sex differences based on emotional intelligence. Similar to the findings of McKinley et al, these results indicate that components of patient centeredness and selfless service reasonably contribute to overall emotional intelligence in health care.

The study also reports age differences among several constructs. The younger group (25–29 years) had lower self-report across all constructs, supporting leadership as a characteristic that develops longitudinally with increased experience and exposure to settings necessitating leadership. The self-assessment of integrity was significantly higher in the oldest age grouping (35+ years), with younger providers having lower ratings compared to both older age groupings. This finding could be a result of young trainees feeling removed from the decision-making process as several of the integrity items involve “acting decisively” and “navigating difficult decisions.” It further underscores, however, the need to educate and empower young trainees to choose the path of integrity over attempts to appear talented or competent. While the youngest study participants may be confident in their ability to be honest and trustworthy, they may have less self-reported efficacy in decision making since they are not the individuals responsible for making decisions at this juncture of their career. Additional studies would be needed to elucidate the item-by-item differences by age to better understand what is driving lower self-reported integrity in younger providers.

The middle-age group (30–34 years) reported higher self-rated competency in critical thinking and emotional intelligence than the other age groups. Clinically, such self-efficacy understandably aligns with training differences, where the ability to make clinically sound judgments develops over time with clinical experience and knowledge acquisition. Ratings from the older age group were similar to middle-age group for emotional intelligence, suggesting it is a skill that develops with experience. The self-report of critical thinking, however, was drastically higher in the middle-age group compared to youngest and oldest age groups. This group of providers (30–34 years) lies at a convenient juncture between gained clinical experience and proximity to training. Comprising mainly upper-level residents, fellows, and young attending physicians, confidence in critical thinking might be explained by the items surrounding “evidence based medicine” and “up-to-date in my field.” While the youngest providers may not have the benefit of longitudinal insight to implement intuition, older providers might be less accustomed to the most recent findings in their field by relying on orthodox teachings and practice methods to lead their teams as opposed to the most up-to-date evidence-based findings.

This study was limited by a relatively small sample size and the lack of diverse specialties analyzed and may be subject to selection bias. Future studies across both specialties and experience within the health care system will help identify gaps and needs for specific leadership training within health care education. Although the described findings support previous findings that show no difference in leadership based on specialty,1 additional studies that explore potential specialty leadership differences are warranted.

Another potential limitation is the length of the instrument. The initial version of the instrument included 50 questions that would have undoubtedly given more robust data that might have improved the reliability factors. Yet, a lengthy instrument would be unrealistic to implement within busy care teams and would be especially unrealistic for implementation in widespread clinical practice. Furthermore, the lengthy survey could cause habituation bias based on multiple survey questions testing similar aspects of leadership.

While self-assessment has been shown to be important in clinical development,43–47 another limitation of this study is the absence of peer-assessed data.48,49 Comparing self-assessment with the reports of others would allow us to determine whether differences in self-efficacy might be driving the age differences, and to some degree, the sex differences. Future studies utilizing this survey would allow for identification of gaps between self-identified leadership strengths and weaknesses and those observed by all who work with the individual. Specifically within health care, where formal leadership training rarely occurs, the investigators believe that leadership self-assessment might be inflated compared with assessments made by other members of the team. Performance evaluation often includes 360° metrics that gather the opinions and feedback from those at every position relative to the person being evaluated.50–53 Differences are often reported between self-evaluation and evaluation by others.46,49,54 Self-perception of leadership competency as it compares to the perception of others can offer valuable information in the training and accountability of health care providers as it pertains to their leadership abilities. The study team intends to expand the implementation of the HEAL instrument to interprofessional teams. Differentiating results by training level, as well as comparing physicians with nonphysician care providers, would more appropriately reflect stage of leadership development than does age alone.

Conclusion

The present study describes the design and implementation process of a self-reported HEAL instrument derived from a leadership model previously developed. The pilot supports the instrument’s preliminary validity. The findings of age and sex differences support both the study hypothesis and the leadership literature, despite inability to reject the null hypothesis for differences in self-reported leadership competency among specialties. The importance of providing reliable leadership feedback in an expedited way for health care providers justifies further exploration of the HEAL instrument.

Acknowledgments

The authors would like to thank Doctor Kathleen Ponder for advising the research team on this project as well as Doctor Kelly Hannum for advice and statistical assistance. They also acknowledge the assistance of Donald T Kirkendall, a contracted medical editor, for his help in the preparation of this manuscript.

Disclosure

The authors report no conflicts of interest in this work.

© 2016 The Author(s). This work is published and licensed by Dove Medical Press Limited. The full terms of this license are available at https://www.dovepress.com/terms.php and incorporate the Creative Commons Attribution - Non Commercial (unported, v3.0) License.

By accessing the work you hereby accept the Terms. Non-commercial uses of the work are permitted without any further permission from Dove Medical Press Limited, provided the work is properly attributed. For permission for commercial use of this work, please see paragraphs 4.2 and 5 of our Terms.
