Reports in Medical Imaging, Volume 14

An Overview of Current Trends, Techniques, Prospects, and Pitfalls of Artificial Intelligence in Breast Imaging

Authors Goyal S

Received 4 December 2020

Accepted for publication 22 February 2021

Published 11 March 2021 Volume 2021:14 Pages 15–25

DOI https://doi.org/10.2147/RMI.S295205

Checked for plagiarism Yes

Review by Single anonymous peer review

Peer reviewer comments 3

Editor who approved publication: Professor Tarik Massoud

Video abstract presented by Swati Goyal.

Swati Goyal

Department of Radiodiagnosis, Government Medical College & Hospital, Bhopal, Madhya Pradesh, India

Correspondence: Swati Goyal D/16 Upant Colony, Bhopal, Madhya Pradesh, 462016 Tel +91 9424427774

Email [email protected]

Abstract: This review article discusses current trends, techniques, and promising uses of artificial intelligence (AI) in breast imaging, along with the pitfalls that may hinder its progress. It includes only the basic, commonly used terminology that physicians need to know. AI is not just a computerized approach but an interface between humans and machines. Apart from reducing workload and improving diagnostic accuracy, machine learning techniques augment radiologists’ productivity and give them more time for patient care and clinical work. Inadequate data input with suboptimal pattern recognition, data extraction challenges, legal implications, and exorbitant costs are a few of the pitfalls that AI algorithms still face in analyzing data and producing appropriate outcomes. Various machine learning approaches are used to construct prediction models for clinical decision support and for improving patient management. Since AI is still in its fledgling state, with many limitations for clinical implementation, clinical support and feedback are needed to avoid algorithmic errors. Hence, machine learning and human insight complement each other in revolutionizing breast imaging.

Keywords: machine learning, augmented intelligence, ANN, CNN, CAD, GANs

Introduction

Artificial intelligence (AI) has become a buzzword that stirs both extreme enthusiasm and grave concern in the contemporary medical community about its capacity to surpass human potential. Though AI still lacks a standard definition, in 1955, John McCarthy considered artificial intelligence a multi-disciplinary field and defined it as “the science and engineering of making intelligent machines” in a workshop at the Dartmouth Summer Research Project on AI.1 Radiologists have for many years used computer-aided detection (CADe) and computer-aided diagnosis (CAD) in imaging, especially breast imaging, to assist in the early detection and diagnosis of lesions. However, the imprecision and unpredictability of CAD systems have shown the need for more clinical studies and for the development of further imaging applications. Hence, there is tremendous potential for evolution in the applicability of AI to radiological images.2

A Brief Overview of AI

AI is a blanket term that encompasses various subdomains, such as artificial neural networks (ANNs), natural language processing (NLP), and deep learning (DL), used for predictive modeling.3 Machine learning refers to the techniques used to build artificially intelligent models and falls into three categories. It is supervised if the machine is trained on data with corresponding labels, such as biopsy-confirmed cancers and benign lesions. It is unsupervised if no labeling is done and the algorithm is expected to find patterns by itself. It is semi-supervised if a combination of labeled and unlabeled images is used.4, 5 Supervised learning addresses two types of problems, depending on whether the outputs are real values (regression) or categories (classification); linear or logistic regression, random forests, and support vector machines are typical methods. Unsupervised learning is likewise divided into three categories: association, which finds a set of rules that describe the data; clustering, which finds groups of similar items in the data (for example, k-means clustering); and dimensionality reduction, which is used for big data visualization, text mining, and image recognition. Neural networks are another family of algorithms with multiple subtypes, including convolutional networks, recurrent networks, and deep feedforward networks.6, 7
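
The difference between supervised and unsupervised learning can be illustrated with a minimal sketch. The single feature, its values, and the labels below are invented for illustration and are not taken from any cited study; a nearest-centroid rule stands in for a supervised classifier and a one-dimensional k-means for clustering.

```python
from statistics import mean

# Hypothetical data: one feature per lesion (e.g. a normalised irregularity score).
features = [0.10, 0.15, 0.20, 0.80, 0.85, 0.90]
labels = ["benign", "benign", "benign", "malignant", "malignant", "malignant"]


def fit_supervised(xs, ys):
    """Supervised: learn one centroid per label from labelled examples."""
    return {lab: mean(x for x, y in zip(xs, ys) if y == lab) for lab in set(ys)}


def predict(centroids, x):
    """Assign the label whose centroid lies closest to x."""
    return min(centroids, key=lambda lab: abs(centroids[lab] - x))


def cluster_unsupervised(xs, iterations=10):
    """Unsupervised: 1-D k-means with k=2; the labels are never consulted."""
    centers = [min(xs), max(xs)]
    for _ in range(iterations):
        groups = [[], []]
        for x in xs:
            nearest = 0 if abs(x - centers[0]) <= abs(x - centers[1]) else 1
            groups[nearest].append(x)
        centers = [mean(g) if g else c for g, c in zip(groups, centers)]
    return [0 if abs(x - centers[0]) <= abs(x - centers[1]) else 1 for x in xs]


centroids = fit_supervised(features, labels)
print(predict(centroids, 0.7))         # a label learned from the training labels
print(cluster_unsupervised(features))  # cluster ids only, not diagnoses
```

The supervised model returns a named class because it was shown labels, whereas the clustering step only returns group indices that a human must still interpret.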

Using different algorithms specific to particular tasks, computers can organize and scrutinize large amounts of data, including text, medical images, and medical records, in far less time than manual processing requires. The creation of synthetic images from an available database to train an algorithm is a further step toward meeting the challenges posed by the shortage of annotated image data. Augmented intelligence, in which human intellect is enhanced with the help of artificial intelligence, uses a multi-perspective, team-based approach to build an alliance between technology and physicians in order to handle vast amounts of imaging data, accelerate workflow, and improve patient care.8 Modern high-speed computers with cloud computing and enhanced storage capacities have facilitated the progress of the various AI techniques. Dissecting these networks to look at their weights and biases might even help identify patterns, including some that experts had not noticed earlier.3, 9 Besides, unlike humans, computers do not become tired, bored, or distracted toward the end of a long day of, say, reading mammograms.10

This review article discusses the current trends, techniques, and prospects of AI in breast imaging, including its shortcomings.

Materials and Methods

A thorough literature search was conducted to determine the prevailing status of AI in radiodiagnosis, with specific reference to breast imaging. Approval from an institutional review board was not required for this literature review, as no human data were used. Online databases were searched for English-language articles on AI published in the last two and a half decades, including original research, literature reviews, meta-analyses, and systematic reviews. Although the search was wide-ranging owing to the dynamic nature of the topic, the author is aware that not all of the published data could be included, though the pivotal issues have been covered.

Articles published mainly during the last decade were considered in order to summarize recent advances, novel techniques, and future scope, along with the prior work that contributed to the framework of current progress. The following terms were used as major and minor MeSH headings and keywords to narrow the search: artificial intelligence, AI, AI in medical imaging, AI in radiology, AI in breast imaging, computer-aided diagnosis, computer-aided detection, CAD, neural network, NN, artificial neural network, ANN, convolutional neural network, CNN, machine learning, ML, transfer learning, deep learning, DL, generative adversarial networks (GANs), radiomics, natural language processing, NLP, and augmented intelligence.

Results

The initial literature search revealed more than a million articles related to AI in medicine. After applying the search filters defined above, more than a thousand articles relevant to AI in radiology, primarily breast imaging, were found, of which I went through 296. Most of these discussed the current trends, applications, and drawbacks of AI in breast imaging. Various articles exhaustively delineated AI and the techniques used to construct deep learning algorithms for most medical specialties, including imaging. AI techniques, along with augmented intelligence, CAD, and radiomics, are explained clearly and accessibly in the exhaustive articles by Currie et al,4 Arieno et al,8 Giger11 and Ribeiro et al.12 Augmented intelligence has been considered a personalized tool to aid radiologists and upgrade patient management.8 Also, Hosny et al,13 Yi et al,9 Soffer et al,14 Ayer et al15 and Baker et al16 in their articles stress the significance of deep learning, convolutional neural networks, artificial neural networks, computer-aided diagnosis, generative adversarial networks (GANs), and radiomics for radiologists, as their profession mainly consists of assessing visual data. Langlotz et al17 discussed the need for publicly available, well-annotated, and reusable datasets to evaluate various AI algorithms and techniques. A study published over two decades ago used Cohen kappa analysis to assess the inter- and intraobserver variability of radiologists’ interpretations and ANN predictions, and reported improved accuracy of mammographic interpretation of breast lesions.16

The following paragraphs delineate the analytical results of some of the studies regarding the use of subsets of AI (ANNs, CNNs, radiomics, transfer learning, and CAD with AI) in breast imaging.

Marchevsky et al suggested that AI applications in breast imaging research are being driven by clinical interest in upgrading patient care. Their study used neural networks and logistic regression to infer nodal status in 279 patients with breast cancer according to prognostic determinants such as age, family history, axillary nodal involvement, receptors, and tumor type, grade, and size in the surgical specimen. The best neural network model was trained with 224 cases using 19 input neurons. The results (specificity of 97.2%, sensitivity of 80.0%, positive predictive value of 93.8%, and negative predictive value of 87.5%) were satisfactory, with 49 of 55 (89.0%) unknown cases classified accurately.18 A 2007 article supports using an artificial neural network as a machine learning technique for predicting metastatic involvement of axillary lymph nodes in patients with breast cancer, possibly replacing the more invasive sentinel node imaging.19 In 2012, Saritas20 conducted a study to determine whether an ANN could predict breast cancer from patient age and the imaging features of a breast lesion (ie, shape, margin, and density). Confusion matrix and receiver operating characteristic (ROC) analyses were used to evaluate the ANN model, which showed a sensitivity of 90.2% and a specificity of 81.4%. A study on 800 patients’ biopsies yielded a disease prediction of 90.5%, thus validating the process. These studies might be useful for undiagnosed patients who are hesitant to undergo further testing, as the estimated probability of malignancy can help decide the need for biopsy. The BI-RADS (Breast Imaging-Reporting and Data System) assessment has been compared with radiologists’ interpretations by using artificial neural networks.
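
For readers less familiar with the metrics quoted above, the following minimal sketch shows how sensitivity, specificity, and predictive values are derived from a confusion matrix. The counts are hypothetical and chosen only for easy arithmetic; they do not reproduce the data of any cited study.

```python
def diagnostic_metrics(tp, fp, tn, fn):
    """Return common diagnostic accuracy measures as fractions between 0 and 1."""
    return {
        "sensitivity": tp / (tp + fn),  # malignant cases correctly flagged
        "specificity": tn / (tn + fp),  # benign cases correctly cleared
        "ppv": tp / (tp + fp),          # flagged cases that are truly malignant
        "npv": tn / (tn + fn),          # cleared cases that are truly benign
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
    }


# Hypothetical counts for 100 lesions (assumption, not study data).
metrics = diagnostic_metrics(tp=40, fp=5, tn=45, fn=10)
for name, value in metrics.items():
    print(f"{name}: {value:.1%}")
```

Note that the predictive values depend on how common malignancy is in the test set, so they are not directly comparable across studies with different case mixes.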

Mohamed et al21 used deep learning to classify mammograms as showing scattered densities or heterogeneously dense breasts and thereby help assign a BI-RADS category. The CNN model was trained from scratch on their mammographic images and achieved an AUC of 0.942. Rodríguez-Ruiz et al22 concluded that radiologists detect breast cancer more effectively with the help of AI than when reading images unaided. A total of 240 digital mammography images (100 normal, 100 malignant, and 40 false-positive cases) were used as the input dataset. DL (CNN) feature classifiers and image analysis algorithms were used to delineate microcalcifications and soft tissue lesions. The results for AUC (0.89 vs 0.87, P = 0.002), sensitivity (86% vs 83%, P = 0.046), and specificity (79% vs 77%, P = 0.06) supported the AI system as an aid to radiologists. Qiu et al23 demonstrated the feasibility of applying an 8-layer DL network, with three pairs of convolutional and max-pooling layers for automatic feature extraction and a multilayer perceptron (MLP) classifier for feature categorization, to process 560 ROIs (regions of interest) extracted from digital mammograms. An overall AUC of 0.790 ± 0.019 was obtained by applying the DL-based CADx scheme to differentiate benign from malignant lesions.
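
Since AUC is the headline figure in most of these studies, a short sketch of what it measures may help. It uses the pairwise interpretation of AUC: the probability that a randomly chosen malignant case receives a higher model score than a randomly chosen benign case. The scores below are invented for illustration.

```python
def auc(scores_positive, scores_negative):
    """AUC as the fraction of positive/negative pairs ranked correctly (ties count half)."""
    wins = 0.0
    for p in scores_positive:
        for n in scores_negative:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(scores_positive) * len(scores_negative))


# Hypothetical model outputs (assumption, not study data).
malignant_scores = [0.9, 0.8, 0.6, 0.4]
benign_scores = [0.7, 0.3, 0.2, 0.1]
print(auc(malignant_scores, benign_scores))  # 14 of 16 pairs ranked correctly -> 0.875
```

An AUC of 0.5 corresponds to chance-level ranking and 1.0 to perfect separation, which is why values such as 0.79 and 0.94 indicate quite different levels of discrimination.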

In a retrospective study, Lee et al created a radiomics score based on ultrasound texture feature analysis to discriminate 186 pathologically proven triple-negative breast cancers from 715 benign fibroadenomas. Triple-negative invasive breast carcinomas might appear round or oval, enhancing, with circumscribed margins and without spiculation. They can be mistaken for benign cysts or fibroadenomas on ultrasound if imaging parameters are poorly adjusted. Three different ultrasound machines were used to image the lesions and to check whether discrepancies in the results were attributable to differences in image quality between the systems. A radiology resident selected an image for each lesion and outlined the margin of the mass to indicate the ROI, which reflects the supervised nature of the study. Around 730 features were extracted, including 14 pixel intensity-based features, 132 textural features, and 584 wavelet-based features. After proper training, validation, and testing of the algorithms, the radiomics score showed a substantial difference (p < 0.001) between the cancers and fibroadenomas for all three ultrasound systems, with high diagnostic efficiency (AUC 0.910).24

The study by Huynh et al25 inferred that transfer learning could improve the current CADx methods by using 219 breast lesions (607 full-field digital mammographic images) and comparing support vector machine classifiers based on the CNN-extracted image features and their previous computer-extracted features (AUC 0.86) to differentiate benign and malignant lesions.

Zelst et al26 inferred that CAD software for automated breast ultrasound (ABUS) images helps radiologists detect cancer when screening for breast cancer. The study selected 90 patients; after follow-up, 40 were normal, 20 were malignant, and 30 were benign. The average area under the curve (AUC) was 0.77 without CAD and improved to 0.84 (p = 0.001) with CAD. However, no significant difference in AUC was discerned for the experienced radiologists with and without CAD.

Applying machine learning, Cai et al27 combined the morphology of DWI with the kinetic features of contrast-enhanced MRI to classify lesions as benign or malignant, using semi-automated segmentation on 234 cases (85 benign and 149 malignant). A sensitivity of 0.85, specificity of 0.89, accuracy of 0.93, and AUC of 90.9% were reported for classifying the breast lesions as benign or malignant.

In a study of 3811 patients conducted by Jerez et al,28 neural networks predicted breast cancer relapse in high-risk intervals significantly more accurately (p < 0.0001) than the Cox proportional hazards model. Five input covariates (age, tumor size, lymph node status, tumor grade, and type of treatment) together with survival time (months) were studied with a three-layer neural network model built in software: an input layer in which each node corresponded to a prognostic factor plus one node for time, one hidden layer, and one output layer.

A recent study conducted by McKinney et al29 used two large datasets from the United States (US) and United Kingdom (UK) and reported an absolute reduction of 5.7%/1.2% (US/UK) in false positives and 9.4%/2.7% (US/UK) in false negatives, hence improving the accuracy and efficiency of breast cancer screening. The AUC-ROC for the AI system exceeded that of an average radiologist by 11.5%.

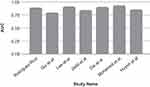

Of the 12 studies described above, AUC (Figure 1) of 7 studies and sensitivity and specificity (Figure 2) of 4 studies were compared by graphical representation. As evident from the bar diagrams, the study by Mohamed et al achieved the highest AUC. Maximum sensitivity was achieved by Saritas, and maximum specificity by Marchevsky et al (Figures 1 and 2).

Figure 1 Comparison of AUC of various studies.

Figure 2 Comparison of sensitivity and specificity of various studies.

Discussion

Rapidly evolving changes in healthcare delivery systems, combined with the recent state-of-the-art advances in breast imaging, have necessitated a review of the current trends and techniques used for AI in breast imaging. Various international radiologic organizations, such as the American College of Radiology (ACR) and the Canadian, Indian, and European associations of radiologists, are progressively and efficiently integrating AI techniques.30–32

Current Trends and Techniques Used for AI in Imaging

Neural networks are gaining popularity because they form a series of algorithms that simulate the way the human brain perceives relationships within a dataset. They extract image features and identify patterns, sometimes even better than humans can, provided an adequate amount of high-quality data is available.11 Deep learning is preferred because it is performed by neural networks with multiple hidden layers that can determine both simple and complex features such as lines, texture, edges, intensity, shapes, and lesions.33 In the following paragraphs, the application of various AI techniques (Figure 3) is discussed briefly.

Figure 3 Various techniques of artificial intelligence.

Convolutional neural networks (CNNs), a type of deep, feedforward artificial neural network (ANN), automatically learn spatial hierarchies of features through backpropagation. They consist of multiple building blocks, namely convolutional layers, pooling layers, non-linearity layers, and fully connected layers, which transform an input image into an output such as a lesion classification. They are most commonly applied to image recognition since they are designed for feature extraction, and their implementation may make the detection and classification of lesions much faster and more efficient than with conventional ANNs. Radiological images can be used to train CNNs because pixel values, orientation, and relation to other pixels can be analyzed, which helps CNNs comprehend lines, curves, and objects within images. Once trained, a CNN can classify microcalcifications as either benign or malignant on images it has not seen before.3, 8, 32, 35 The automatic hierarchical feature learning ability of deep CNNs also helps reduce false-positive rates in breast cancer screening.36 However, owing to small sample sizes and variable presentation, it is a herculean task to train CNNs from scratch on medical images. Hence comes the use of transfer learning, another approach to machine learning in which the knowledge of a previously trained model is applied to a new and different task: the model is pretrained on data in a generalized, broad-spectrum way and then fine-tuned on a particular region of interest for the new task.25, 37

The combination of convolutional neural network (CNN) and transfer learning can shorten the data training time, reduce the amount of data and processor requirements, and improve diagnostic accuracy.38
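
The convolution, non-linearity, and pooling building blocks of a CNN can be sketched in a few lines of plain Python. This is a toy illustration under stated assumptions: the 5×5 image and the hand-set edge kernel are invented, a real CNN learns its kernel weights through backpropagation, and the fully connected classification layer is omitted.

```python
def conv2d(image, kernel):
    """'Valid' 2-D convolution as used in deep learning (no kernel flip, no padding)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Non-linearity layer: negative responses are set to zero."""
    return [[max(0, v) for v in row] for row in feature_map]


def max_pool(feature_map, size=2):
    """Pooling layer: keep the strongest response in each size x size window."""
    return [
        [
            max(feature_map[i + a][j + b] for a in range(size) for b in range(size))
            for j in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for i in range(0, len(feature_map) - size + 1, size)
    ]


# Toy image: dark left side (0), bright right side (1), i.e. one vertical edge.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hand-set kernel that responds to dark-to-bright vertical edges (assumption).
kernel = [[-1, 1], [-1, 1]]

pooled = max_pool(relu(conv2d(image, kernel)))
print(pooled)  # the edge survives pooling in the left column: [[2, 0], [2, 0]]
```

Stacking several such stages lets later layers respond to combinations of edges, which is the spatial hierarchy of features referred to above.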

Another AI technique commonly used for language translation and data extraction from text, such as radiology reports, is natural language processing (NLP).39 It can be used to analyze what such reports say and make predictions based on that.

Representation learning is another subset of ML in which input features are not defined clearly, and the computer algorithm trains itself to become proficient and provide classification as the output. While this method gives results only if an adequate amount of high-quality data is available, feature representation learning techniques help reduce the time to train data and are promising ways to facilitate computer-aided-diagnosis systems for breast lesions.40 Radiomics, yet another subfield of AI, aids in extracting vast amounts of features, sometimes even indiscernible to the human eye, from radiographic medical images from different imaging modalities, such as mammography, sonography, and MRI, using data characterization algorithms. It operates similarly to computer-aided diagnosis (CAD) and associates these radiological images with digital pathology images, enhancing our knowledge of imaging-pathology concurrence.11

Another term trending these days is augmented intelligence, an amalgamation of machine intelligence and human ingenuity. It has been described as a sequential approach to artificial intelligence in which humans and machines are interknitted in a constant learning and development loop.8 Hybrid-augmented intelligence has been recommended to address the high levels of human inconsistency and uncertainty by introducing human-in-the-loop human-computer alliances or embedding cognitive models in the machine learning system.41

Taking a step further, AI algorithms have been developed that apply generative adversarial networks (GANs) to create new synthetic mammographic images from digital databases; these images are used to train other algorithms and improve the performance of CNN classifiers. GANs seem especially promising given the dearth and high cost of publicly available annotated data. GANs create synthetic images through the pairing of neural networks, helping bridge these gaps. A GAN contains a generator, which produces a new dataset of fake images based on the input training set, and a discriminator, which evaluates those images for their authenticity. Hence the novel data generated by GANs can be used to improve research, education, and patient management.9, 42
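
The interplay between generator and discriminator is often written as a two-player minimax game. The expression below is the commonly cited formulation from the original GAN literature, given here only as background; the studies cited in this review may use variants of it. Here $G$ is the generator, $D$ the discriminator, $x$ a real image, and $z$ the random noise fed to the generator.

```latex
\min_{G} \max_{D} \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\bigl[\log D(x)\bigr]
  + \mathbb{E}_{z \sim p_{z}(z)}\bigl[\log\bigl(1 - D(G(z))\bigr)\bigr]
```

The discriminator tries to maximize this value by scoring real images near 1 and synthetic images near 0, while the generator tries to minimize it by producing images that the discriminator scores as real.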

Workflow

AI is now omnipresent in our digital lives, in the form of spam detection, voice recognition, video recommendation, and face identification algorithms, among many others. However, in spite of its potential, it has not yet seen widespread use in the medical field. After appropriate training, natural language processing could, for example, serve as a second opinion that flags errors in the final report. If we know how, where, and when to use AI, parts of the radiological workflow could be significantly improved, including patient scheduling, image acquisition, post-processing image segmentation and quantification, automated detection and interpretation of findings, automated radiation dose estimation, automated data integration and analysis, and automated image quality evaluation, to name a few.2, 7, 43

Next, we shall discuss the sequence of steps (Figure 4), namely data collection, processing, categorization, diagnosis, and management, involved in lesion detection and management using AI.

Figure 4 Various steps involved in the lesion detection using AI.

Data Collection and Processing by AI Algorithms

Diverse data from multiple vendors or different institutions should be used to prepare and train algorithms in order to prevent bias. Proper scrutiny of the data is crucial, since it might reveal a fault in the clinical history or inadequate image quality; such problems can be addressed by segmentation, feature extraction, and computational analysis. Feature extraction is a fundamental element of dimensionality reduction and a mathematical way of obtaining the relevant constituents from the original input image, using methods such as principal component analysis (PCA) and linear discriminant analysis. Segmentation simplifies the representation of images into a meaningful and easily analyzable form by methods such as edge detection, thresholding, and clustering.33 Neural network techniques make it possible to process images, primary source data, text, and quantifiable data. Data should be properly scrubbed to de-identify any protected health information and prevent legal and ethical issues. Overall, a well-annotated and curated image dataset is required to enable radiomics to discern subtle differences between benign and malignant pathologies and to train the appropriate algorithms.11, 44 Inadequate quality or quantity of data may hinder the study, even if we use AI techniques to plan patient management.45, 46
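
As a minimal sketch of segmentation followed by feature extraction, the example below applies a fixed global threshold to a tiny image and then computes two simple features of the segmented region. The pixel values and the threshold of 50 are hypothetical; clinical pipelines typically use more robust, data-driven methods.

```python
def threshold_segment(image, threshold):
    """Segmentation by thresholding: 1 marks pixels brighter than the threshold."""
    return [[1 if pixel > threshold else 0 for pixel in row] for row in image]


def region_area(mask):
    """Feature 1: number of pixels inside the segmented region."""
    return sum(sum(row) for row in mask)


def region_mean_intensity(image, mask):
    """Feature 2: mean intensity of the pixels inside the segmented region."""
    values = [p for img_row, m_row in zip(image, mask)
              for p, m in zip(img_row, m_row) if m]
    return sum(values) / len(values)


# Hypothetical 4x4 grey-level patch with a bright 2x2 "lesion" in the centre.
image = [
    [10, 12, 11, 13],
    [11, 90, 95, 12],
    [10, 92, 97, 11],
    [12, 11, 10, 13],
]
mask = threshold_segment(image, threshold=50)
print(mask)
print(region_area(mask), region_mean_intensity(image, mask))
```

The two numbers printed at the end are the kind of low-dimensional summary that feature extraction produces from a full image before a classifier is trained.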

There should be three datasets: a training dataset to train the model, a validation dataset to evaluate whether changes to the model improve it or make it worse, and a test dataset, which should be entirely separate from the training dataset and used only for final evaluation. To avoid overfitting and improve the algorithms, successive iterations of training and validation may be needed.11, 33, 47, 48
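
The three-way split described above can be sketched as follows. The 70/15/15 proportions, the fixed seed, and the use of integer case identifiers are assumptions made for illustration; splitting by patient rather than by image is one way to keep images from the same patient out of both the training and test sets.

```python
import random


def split_dataset(case_ids, train_pct=70, val_pct=15, seed=0):
    """Shuffle case identifiers and split them into train, validation, and test lists."""
    ids = list(case_ids)
    random.Random(seed).shuffle(ids)  # seeded so the split is reproducible
    n_train = len(ids) * train_pct // 100
    n_val = len(ids) * val_pct // 100
    train = ids[:n_train]
    validation = ids[n_train:n_train + n_val]
    test = ids[n_train + n_val:]  # everything left over is held out for final evaluation
    return train, validation, test


# Hypothetical dataset of 100 patients identified by the numbers 0..99.
train_ids, val_ids, test_ids = split_dataset(range(100))
print(len(train_ids), len(val_ids), len(test_ids))  # 70 15 15
```

The test identifiers should be set aside once and not consulted during the repeated training and validation iterations.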

Categorizing Images and Data

The system of categorizing images and its integration into AI data to differentiate benign and malignant breast lesions is unpredictable due to inadequate image quality, overlapping diagnostic criteria, the difference in the breast density in patients of various age groups, and apprehension of false-positive cases.22, 49

The BI-RADS lexicons of the American College of Radiology have evaluated and quantified the probability of malignancy as class 4 and its smaller subdivisions by assessing the feature analysis and identifying the image pattern using AI algorithms.50, 51 Deep learning CNN tools have also been described to classify mammograms according to the breast’s density and thus established the use of BI-RADS–based structured reporting and helped the radiologists and physicians in the management of these lesions.29, 52

Diagnosis and Management

AI can expand the physician’s potential to collect, understand, and interpret enormous amounts of patient-specific data. Progress in medical image analysis, such as radiomics, data computation, and machine learning, has augmented our understanding of disease processes and their management.53 A well-designed AI algorithm with appropriately selected techniques can influence patient management positively. A user-friendly computer model that can compare the output of the CNN with the radiologist’s imaging assessment has been recommended for further evaluation. Furthermore, information technology experts can be consulted to resolve problems related to algorithmic failure.17

A radiomics-based approach using CNNs with radiology and pathology images as input data can facilitate the recognition of patterns differentiating various lesions and their different probabilities of improvement rate and, hence, can help the breast imagers better demonstrate the need for changing or maintaining current clinical management.54, 55 AI with ANNs and DL algorithms can be used to access the available reporting systems, databases, and electronic medical records to predict and analyze the detection rates of breast lesions, differentiate benign from malignant lesions, and assess them as per BIRADS for further management decisions.56 The case studies can be used as a future dataset, with the objective of the output being the diagnosis of lesions for appropriate patient management and documentation of guidelines.57 Moreover, interdependent analyses of large amounts of data and integrated input from a range of medical specialties like imaging, pathology, surgery, and oncology can help ascertain the best management protocol for patients.46, 56

Advantages of AI

The AI-based strategy reduces radiologists’ workload for breast cancer screening programs without affecting the sensitivity attained by the radiologist.58 AI offers superior quality of work for radiologists and patients with faster turnaround, better results, and improved diagnostic certainty (Figure 5). By using machine learning techniques that augment their productivity, radiologists get more time for other patient care activities.59 AI systems can accurately diagnose a proportion of the screening population as non-cancerous and reduce false positives, hence augmenting the efficiency of screening mammography.60 Factors such as high patient volume, complex diagnostic evaluations, responsibility for biopsies, and direct patient communication make AI beneficial in improving the workflow without dropping out the human factor, which is crucial for both the patients and breast imagers. AI helps the radiologists recognize their virtues and constraints, and eventually, deliver the best patient care.33, 34, 47, 56, 58

Figure 5 Advantages of AI.

Standardized views, availability of images for comparison, a systematized reporting format, and classifiable outcomes are the properties that make screening mammography suitable for training AI algorithms. AI may help provide a second opinion, generate automatic reports for typical cases, predict the likelihood of malignancy, and triage patients. It is particularly useful in densely populated and developing countries with an inequitable supply of medical resources, where the use of AI algorithms can mitigate the excessive workload and shortage of doctors to a certain degree.61

Challenges with AI

AI algorithms might be confused by situations such as inadequate data input or suboptimal pattern recognition, which may result from an atypical or rare lesion (Figure 6). Features frequently encountered during imaging, such as tumor heterogeneity, a broader disease spectrum with atypia and carcinoma in situ, inter- and intraobserver variability in diagnosing lesions, and subjective assessment of breast density, along with substandard image quality, might impair the performance of the neural network.11, 20, 27, 39, 40 Data extraction challenges include lack of standardization, non-uniformity of stored data fields, erroneous data, limited annotated data, and variation in image quality. Obtaining all data from the same source or equipment may also result in overfitting, whereby a model is unable to generalize patterns beyond the training set.13, 47, 48, 62 So, while preparing a well-annotated and curated dataset to train the algorithms is costly and time-consuming, it is also highly advantageous.44 Apart from these, ethical and legal issues regarding decision-making constitute a significant concern.6

Figure 6 Various challenges faced by AI.

Inadequate availability of training data sets and overfitting act as major stumbling blocks and can be dealt with by data augmentation, an approach to artificially create the data by increasing the number of training images using image rotation and image flipping.14
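
Data augmentation by flipping and rotation can be sketched with plain nested lists standing in for image arrays. The 2×2 "image" is a toy example; in practice the same transformations would be applied to full-resolution images, with each variant keeping the label of the original.

```python
def flip_horizontal(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]


def flip_vertical(image):
    """Mirror the image top to bottom."""
    return [row[:] for row in image[::-1]]


def rotate_90_clockwise(image):
    """Rotate the image by 90 degrees clockwise."""
    return [list(column) for column in zip(*image[::-1])]


def augment(image):
    """Return the original image plus two flips and three rotations (six variants)."""
    variants = [image, flip_horizontal(image), flip_vertical(image)]
    rotated = image
    for _ in range(3):
        rotated = rotate_90_clockwise(rotated)
        variants.append(rotated)
    return variants


image = [[1, 2],
         [3, 4]]
for variant in augment(image):
    print(variant)
```

One labeled image thus yields six training examples, although the variants are correlated and do not replace genuinely new cases.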

Future Perspectives

Radiomics and the creation of synthetic images via GANs are an evolving subset of artificial intelligence that converts images into mineable high-dimensional data to help analyze and predict further management. In the coming times, combining imaging and non-imaging data in electronic medical records will result in analysis and prediction of clinical diagnosis, hence improving the clinical decision making.7

Efforts are being made to improve diagnostic accuracy through deep learning-based CAD-enhanced synthetic mammography and deep learning-based CAD systems that classify breasts according to density and tailor patient management.63

Summary

A literature review can only explore what has already been reported, and therefore there is an element of publication bias even before the search has begun. The current literature delineates far-reaching progress in the knowledge and insight of AI in medical imaging, specifically in radiodiagnosis and breast imaging. AI has a tremendous capability of contributing to the categorization and training of data, including high-quality images. Breast lesions can be predicted more effectively by increasing the study’s sample size, adding suitable input parameters, and using appropriate AI techniques. The recent trends of radiomics, augmented intelligence, and the creation of synthetic images via generative adversarial networks (GANs) will also prepare radiologists to collaborate with machines and hence improve diagnosis, management, and workplace productivity in breast imaging practices.8, 20, 56, 63, 64

Conclusion

Computers, employing various algorithms, can systematize and analyze massive data, including text, medical images, and medical records, in exponentially less time than required for manual processing. With the availability of high-quality imaging data in substantial numbers, the creation of synthetic images, the availability of high-speed computers, cloud computing, and efficient computer programs, AI algorithms can help facilitate radiologists’ workflow, increase diagnosing capacity, and improve patient management.

Clinical support and feedback will be invaluable in developing AI to its full potential in revolutionizing breast imaging.

Abbreviations

AI, artificial intelligence; NN, neural network; ANN, artificial neural network; CNN, convolutional neural network; CADe, computer-aided detection; CAD, computer-aided diagnosis; ML, machine learning; DL, deep learning; GAN, generative adversarial network.

Acknowledgments

The author thanks Rushank Goyal, CEO, Betsos, Madhya Pradesh, India, for his contributions in delineating various AI techniques and revising the article.

Disclosure

The author reports no conflicts of interest in this work.

References

1. Monett D, Lewis CW, Thórisson KR, et al. Special issue “On defining artificial intelligence”—commentaries and author’s response. J Artif Gen Intell. 2020;11(2):1–100. doi:10.2478/jagi-2020-0003

2. Doi K. Computer-aided diagnosis in medical imaging: historical review, current status and future potential. Comput Med Imaging Graph. 2007;31(4–5):198–211. doi:10.1016/j.compmedimag.2007.02.002

3. Pesapane F, Codari M, Sardanelli F. Artificial intelligence in medical imaging: threat or opportunity? Radiologists again at the forefront of innovation in medicine. Eur Radiol Exp. 2018;2:1–10. doi:10.1186/s41747-018-0061-6

4. Currie G, Hawk KE, Rohren E, Vial A, Klein R. Machine learning and deep learning in medical imaging: intelligent imaging. J Med Imaging Radiat Sci. 2019;50(4):477–487. doi:10.1016/j.jmir.2019.09.005

5. Alloghani M, Al-Jumeily D, Mustafina J, et al. A systematic review on supervised and unsupervised machine learning algorithms for data science. In: Berry M, Mohamed A, Yap B, editors. Supervised and Unsupervised Learning for Data Science. Unsupervised and Semi-Supervised Learning. Cham: Springer; 2020. doi:10.1007/978-3-030-22475-2_1

6. Kann BH, Thompson R, Thomas CR, Dicker A, Aneja S. Artificial intelligence in oncology: current applications and future directions. Oncology. 2019;33(2):46–53.

7. Choy G, Khalilzadeh O, Michalski M, et al. Current applications and future impact of machine learning in radiology. Radiology. 2018;288(2):318–328. doi:10.1148/radiol.2018171820

8. Arieno A, Chan A, Destounis SV. A review of the role of augmented intelligence in breast imaging: from automated breast density assessment to risk stratification. Am J Roentgenol. 2019;212(2):259–270. doi:10.2214/AJR.18.20391

9. Yi X, Walia E, Babyn P. Generative adversarial network in medical imaging: a review. Med Image Anal. 2019;58:101552. doi:10.1016/j.media.2019.101552

10. Grady D. A.I. is learning to read mammograms. The New York Times website. Available from: www.nytimes.com/2020/01/01/health/breast-cancer-mammogram-artificial-intelligence. Published January 1, 2020.

11. Giger ML. Machine learning in medical imaging. J Am Coll Radiol. 2018;15(3 Pt B):512–520. doi:10.1016/j.jacr.2017.12.028

12. Ribeiro MT, Singh S, Guestrin C. “Why should I trust you?”: explaining the predictions of any classifier. arXiv website. Available from: arxiv.org/abs/1602.04938.

13. Hosny A, Parmar C, Quackenbush J, Schwartz LH, Aerts HJWL. Artificial intelligence in radiology. Nat Rev Cancer. 2018;18(8):500–510. doi:10.1038/s41568-018-0016-5

14. Soffer S, Ben-Cohen A, Shimon O, Amitai MM, Greenspan H, Klang E. Convolutional neural networks for radiologic images: a radiologist’s guide. Radiology. 2019;290(3):590–606. doi:10.1148/radiol.2018180547

15. Ayer T, Chen Q, Burnside ES. Artificial neural networks in mammography interpretation and diagnostic decision making. Comput Math Methods Med. 2013;2013:832509. doi:10.1155/2013/832509

16. Baker JA, Kornguth PJ, Lo JY, Floyd CE. Artificial neural network: improving the quality of breast biopsy recommendations. Radiology. 1996;198(1):131–135. doi:10.1148/radiology.198.1.8539365

17. Langlotz CP, Allen B, Erickson BJ, et al. A roadmap for foundational research on artificial intelligence in medical imaging: from the 2018 NIH/RSNA/ACR/the academy workshop. Radiology. 2019;291(3):781–791. doi:10.1148/radiol.2019190613

18. Marchevsky AM, Shah S, Patel S. Reasoning with uncertainty in pathology: artificial neural networks and logistic regression as tools for prediction of lymph node status in breast cancer patients. Mod Pathol. 1999;12(5):505–513.

19. Tez S, Yoldaş O, Kiliç YA, Dizen H, Tez M. Artificial neural networks for prediction of lymph node status in breast cancer patients. Med Hypotheses. 2007;68(4):922–923. doi:10.1016/j.mehy.2006.09.028

20. Saritas I. Prediction of breast cancer using artificial neural networks. J Med Syst. 2012;36(5):2901–2907. doi:10.1007/s10916-011-9768-0

21. Mohamed AA, Berg WA, Peng H, Luo Y, Jankowitz RC, Wu S. A deep learning method for classifying mammographic breast density categories. Med Phys. 2018;45:314–321. doi:10.1002/mp.12683

22. Rodríguez-Ruiz A, Krupinski E, Mordang JJ, et al. Detection of breast cancer with mammography: effect of an artificial intelligence support system. Radiology. 2019;290(2):305–314. doi:10.1148/radiol.2018181371

23. Qiu Y, Yan S, Gundreddy RR, et al. A new approach to develop computer-aided diagnosis scheme of breast mass classification using deep learning technology. J Xray Sci Technol. 2017;25(5):751–763. doi:10.3233/XST-16226

24. Lee SE, Han K, Kwak JY, et al. Radiomics of US texture features in differential diagnosis between triple-negative breast cancer and fibroadenoma. Sci Rep. 2018;8(1):13546. doi:10.1038/s41598-018-31906-4

25. Huynh BQ, Li H, Giger ML. Digital mammographic tumor classification using transfer learning from deep convolutional neural networks. J Med Imag. 2016;3(3):034501. doi:10.1117/1.JMI.3.3.034501

26. van Zelst JCM, Tan T, Platel B, et al. Improved cancer detection in automated breast ultrasound by radiologists using computer aided detection. Eur J Radiol. 2017;89:54–59. doi:10.1016/j.ejrad.2017.01.021

27. Cai H, Peng Y, Ou C, Chen M, Li L. Diagnosis of breast masses from dynamic contrast-enhanced and diffusion-weighted MR: a machine learning approach. PLoS One. 2014;9(1):e87387. doi:10.1371/journal.pone.0087387

28. Jerez JM, Franco L, Alba E, et al. Improvement of breast cancer relapse prediction in high risk intervals using artificial neural networks. Breast Cancer Res Treat. 2005;94(3):265–272. doi:10.1007/s10549-005-9013-y

29. McKinney SM, Sieniek M, Godbole V, et al. International evaluation of an AI system for breast cancer screening. Nature. 2020;577(7788):89–94. doi:10.1038/s41586-019-1799-6

30. Tang A, Tam R, Cadrin-Chênevert A, et al. Canadian association of radiologists white paper on artificial intelligence in radiology. Can Assoc Radiol J. 2018;69(2):120–135. doi:10.1016/j.carj.2018.02.002

31. McGinty GB, Allen B. The ACR data science institute and AI advisory group: harnessing the power of artificial intelligence to improve patient care. J Am Coll Radiol. 2018;15(3 Pt B):577–579. doi:10.1016/j.jacr.2017.12.024

32. Kakileti ST, Madhu HJ, Manjunath G, Wee L, Dekker A, Sampangi S. Personalized risk prediction for breast cancer pre-screening using artificial intelligence and thermal radiomics. Artif Intell Med. 2020;105:101854. doi:10.1016/j.artmed.2020.101854

33. Chartrand G, Cheng PM, Vorontsov E, et al. Deep learning: a primer for radiologists. Radiographics. 2017;37(7):2113–2131. doi:10.1148/rg.2017170077

34. Fuchsjäger M. Is the future of breast imaging with AI? Eur Radiol. 2019;29(9):4822–4824. doi:10.1007/s00330-019-06286-6

35. Yamashita R, Nishio M, Do RKG, et al. Convolutional neural networks: an overview and application in radiology. Insights Imaging. 2018;9:611–629. doi:10.1007/s13244-018-0639-9

36. Le EPV, Wang Y, Huang Y, Hickman S, Gilbert FJ. Artificial intelligence in breast imaging. Clin Radiol. 2019;74(5):357–366. doi:10.1016/j.crad.2019.02.006

37. Shin HC, Roth HR, Gao M, et al. Deep convolutional neural networks for computer-aided detection: CNN architectures, dataset characteristics and transfer learning. IEEE Trans Med Imaging. 2016;35(5):1285–1298. doi:10.1109/TMI.2016.2528162

38. Christodoulidis S, Anthimopoulos M, Ebner L, et al. Multisource transfer learning with convolutional neural networks for lung pattern analysis. IEEE J Biomed Health Inform. 2017;21(1):76–84. doi:10.1109/JBHI.2016.2636929

39. Zech J, Pain M, Titano J, et al. Natural language based machine learning models for the annotation of clinical radiology reports. Radiology. 2018;287:570–580. doi:10.1148/radiol.2018171093

40. Shen D, Wu G, Suk HI. Deep learning in medical image analysis. Annu Rev Biomed Eng. 2017;19:221–248. doi:10.1146/annurev-bioeng-071516-044442

41. Zheng N, Liu Z, Ren P, et al. Hybrid-augmented intelligence: collaboration and cognition. Front Inf Technol Electronic Eng. 2017;18:153–179. doi:10.1631/FITEE.1700053

42. Sorin V, Barash Y, Konen E, Klang E. Creating artificial images for radiology applications using generative adversarial networks (GANs) - a systematic review. Acad Radiol. 2020;27(8):1175–1185. doi:10.1016/j.acra.2019.12.024

43. Liew C. The future of radiology augmented with artificial intelligence: a strategy for success. Eur J Radiol. 2018;102:152–156. doi:10.1016/j.ejrad.2018.03.019

44. Willemink MJ, Koszek WA, Hardell C, et al. Preparing medical imaging data for machine learning. Radiology. 2020;295(1):4–15. doi:10.1148/radiol.2020192224

45. Price WN, Cohen IG. Privacy in the age of medical big data. Nat Med. 2019;25(1):37–43. doi:10.1038/s41591-018-0272-7

46. Bi WL, Hosny A, Schabath MB, et al. Artificial intelligence in cancer imaging: clinical challenges and applications. CA Cancer J Clin. 2019;69(2):127–157. doi:10.3322/caac.21552

47. Bahl M. Artificial intelligence: a primer for breast imaging radiologists. J Breast Imaging. 2020;2(4):304–314. doi:10.1093/jbi/wbaa033

48. Bluemke DA, Moy L, Bredella MA, et al. Assessing radiology research on artificial intelligence: a brief guide for authors, reviewers, and readers-from the radiology editorial board. Radiology. 2020;294(3):487–489. doi:10.1148/radiol.2019192515

49. Reiner BI. Quantitative analysis of uncertainty in medical reporting: creating a standardized and objective methodology. J Digit Imaging. 2018;31(2):145–149. doi:10.1007/s10278-017-0041-z

50. Burnside ES, Sickles EA, Bassett LW, et al. The ACR BI-RADS experience: learning from history. J Am Coll Radiol. 2009;6(12):851–860. doi:10.1016/j.jacr.2009.07.023

51. Nam SY, Ko EY, Han BK, Shin JH, Ko ES, Hahn SY. Breast imaging reporting and data system category 3 lesions detected on whole-breast screening ultrasound. J Breast Cancer. 2016;19(3):301–307. doi:10.4048/jbc.2016.19.3.301

52. Stavros AT, Freitas AG, deMello GGN, et al. Ultrasound positive predictive values by BI-RADS categories 3–5 for solid masses: an independent reader study. Eur Radiol. 2017;27:4307–4315. doi:10.1007/s00330-017-4835-7

53. Shaikh F, Dehmeshki J, Bisdas S, et al. Artificial intelligence-based clinical decision support systems using advanced medical imaging and radiomics. Curr Probl Diagn Radiol. 2020.

54. Bahl M, Barzilay R, Yedidia AB, Locascio NJ, Yu L, Lehman CD. High-risk breast lesions: a machine learning model to predict pathologic upgrade and reduce unnecessary surgical excision. Radiology. 2018;286(3):810–818. doi:10.1148/radiol.2017170549

55. Kopans DB. Deep learning or fundamental descriptors? Radiology. 2018;287(2):728–729. doi:10.1148/radiol.2017173053

56. Mendelson EB. Artificial intelligence in breast imaging: potentials and limitations. Am J Roentgenol. 2019;212(2):293–299. doi:10.2214/AJR.18.20532

57. Hamidinekoo A, Denton E, Rampun A, Honnor K, Zwiggelaar R. Deep learning in mammography and breast histology, an overview and future trends. Med Image Anal. 2018;47:45–67. doi:10.1016/j.media.2018.03.006

58. Morgan MB, Mates JL. Applications of artificial intelligence in breast imaging. Radiol Clin North Am. 2021;59(1):139–148. doi:10.1016/j.rcl.2020.08.007

59. Thrall JH, Li X, Li Q, et al. Artificial intelligence and machine learning in radiology: opportunities, challenges, pitfalls, and criteria for success. J Am Coll Radiol. 2018;15(3 Pt B):504–508. doi:10.1016/j.jacr.2017.12.026

60. Lång K, Dustler M, Dahlblom V, et al. Identifying normal mammograms in a large screening population using artificial intelligence. Eur Radiol. 2020. doi:10.1007/s00330-020-07165-1

61. Yao X, Qu Y, Wu T. Application of artificial intelligence in breast medical imaging diagnosis. Am J Cancer Res Rev. 2020;4:12. doi:10.28933/ajocrr-2020-03-1505

62. Scott IA, Cook D, Coiera EW, Richards B. Machine learning in clinical practice: prospects and pitfalls. Med J Aust. 2019;211(5):203–205.e1. doi:10.5694/mja2.50294

63. Gao Y, Geras KJ, Lewin AA, et al. New frontiers: an update on computer-aided diagnosis for breast imaging in the age of artificial intelligence. Am J Roentgenol. 2019;212(2):300–307. doi:10.2214/AJR.18.20392

64. Chiwome L, Okojie OM, Rahman AKMJ, Javed F, Hamid P. Artificial intelligence: is it armageddon for breast radiologists? Cureus. 2020;12(6):e8923.

© 2021 The Author(s). This work is published and licensed by Dove Medical Press Limited. The full terms of this license are available at https://www.dovepress.com/terms.php and incorporate the Creative Commons Attribution - Non Commercial (unported, v3.0) License.

By accessing the work you hereby accept the Terms. Non-commercial uses of the work are permitted without any further permission from Dove Medical Press Limited, provided the work is properly attributed. For permission for commercial use of this work, please see paragraphs 4.2 and 5 of our Terms.
