Advances in Medical Education and Practice, Volume 6

Perceptions of postgraduate trainees on the impact of objective structured clinical examinations on their study behavior and clinical practice

Authors Opoka R, Kiguli S, Ssemata A, Govaerts M, Driessen E

Received 19 December 2014

Accepted for publication 18 March 2015

Published 3 June 2015; Volume 2015:6, Pages 431–437

DOI https://doi.org/10.2147/AMEP.S79557

Checked for plagiarism Yes

Review by Single anonymous peer review


Editor who approved publication: Dr Md Anwarul Azim Majumder

Robert O Opoka,1 Sarah Kiguli,1 Andrew S Ssemata,2 Marjan Govaerts,3 Erik W Driessen3

1Department of Paediatrics and Child Health, 2Department of Psychiatry, College of Health Sciences, Makerere University, Kampala, Uganda; 3Faculty of Health, Medicine and Life Sciences, Maastricht University, the Netherlands

Background: The objective structured clinical examination (OSCE) is a commonly used method of assessing clinical competence at various levels, including at the postgraduate level. How the OSCE impacts on learning in higher education is poorly described. In this study, we evaluated the perceptions of postgraduate trainees regarding the impact of the OSCE on their study and clinical behavior.

Methods: We employed an explorative qualitative research design, conducting focus group discussions with 27 of the 41 pediatric postgraduate trainees at the College of Health Sciences, Makerere University. A semi-structured tool was used to obtain the views and experiences of the trainees. Transcripts from the discussions were analyzed iteratively using thematic content analysis.

Results: The trainees reported the OSCEs as a fair and appropriate tool for assessing clinical competency at the postgraduate level. However, they noted that whereas OSCEs assess a broad range of skills and competencies relevant to their training, there were areas that they did not adequately assess. In particular, OSCEs did not adequately assess in-depth clinical knowledge or detailed history-taking skills. Overall, the majority of the trainees reported that the OSCEs inspired them to study widely and improve their procedural and communication skills.

Conclusion: OSCEs are a useful tool for assessing clinical competencies in postgraduate education. However, the perceived limitations in their ability to assess complex skills raise concerns about their use as a standalone mode of assessment at the postgraduate level. Future studies should evaluate how use of OSCEs in combination with other assessment tools impacts on learning.

Keywords: objective structured clinical examination, assessment, higher education, perceptions, clinical practice, study, learning behavior

Introduction

The objective structured clinical examination (OSCE) is a popular method used in many medical education institutions and licensure boards at all levels (undergraduate, postgraduate, and continuing medical education).1 One of the reasons for the popularity of the OSCE is that it is user-friendly compared with other methods of clinical examination, like the long and short case formats.2,3 The main strengths of the OSCE are that it can assess a wide range of skills and competencies in a comprehensive, consistent, and standardized manner.4

However, the major challenges of the OSCE are that it is labor-intensive to prepare and costly to organize, especially if professional actors are used at the stations.5 These shortcomings appear to be outweighed by its strengths, making the OSCE a popular method of assessing competencies.6

Like any good assessment tool, the OSCE not only assesses competency but has also been shown to influence various aspects of student learning.7 However, evidence on how the OSCE affects student behavior remains inconclusive, especially at the postgraduate training level.8,9

In this study, we set out to understand the influence of a summative assessment like the OSCE on the process of student learning and practice. The theoretical framework for the impact of assessment proposed by Cilliers et al10 was adopted because it provides a comprehensive understanding of how assessment affects student behavior and learning. The model proposes that the impact of assessment on students’ behavior is influenced by four factors: appraisal of impact, ie, how likely the consequences of assessments (good or bad) are to happen and what the magnitude of the consequences is likely to be; appraisal of response, ie, the efficacy, cost, and value attached to the learning response required to achieve a particular outcome in assessment; perceived self-efficacy, ie, a sense of what students are able to achieve in a given time frame (students calculate the magnitude, distribution, and nature of their learning efforts to achieve their predetermined assessment goals); and contextual factors, ie, the level of motivation to calibrate behavior to the normative beliefs of referents (people whose opinion an individual values).

The purpose of this study was to explore postgraduate trainees’ perceptions of the impact of OSCEs on their training. Understanding the impact of assessment on the learning behavior of postgraduate trainees will help us design appropriate assessment tools for clinical clerkships in graduate training.

Materials and methods

Study setting

The study was conducted at the College of Health Sciences, Makerere University, Kampala, Uganda. The college trains both undergraduates and postgraduates in various specialties. The postgraduate program is an academic course leading to the award of a master’s degree in a particular specialty. Trainees are doctors who have already completed their undergraduate degree in medicine and have usually practiced for a few years as general practitioners before joining the course. The course is 3 years long, and each year of study is divided into two semesters.

How OSCEs are conducted at the College of Health Sciences

The OSCEs usually consist of 12–17 stations, each lasting 10 minutes. The examination team discusses and decides on the number of stations and the skills to be examined at each station. A variety of skills are examined, including physical examination and clinical reasoning, communication, history-taking, teaching, procedural skills, resuscitation, and interpretation of laboratory results. Real patients and caregivers are used at the stations for history-taking and demonstration of clinical signs. To reduce fatigue, more than one patient or caregiver with a similar history or similar physical signs is used for each station. For history-taking, caregivers are asked to adhere to a particular storyline. Manikins are used for the resuscitation stations. Nurses or other health workers are asked to “act” or role-play at the communication and teaching stations; these “actors” are briefed to maintain consistent behavior throughout. Usually eight to ten of the stations are manned by a faculty member who observes and scores the candidate’s performance, and the rest are unmanned. The unmanned stations mainly assess the reading and interpretation of investigation results relevant to patient management, and at these stations the trainees write down their answers. Written instructions are provided for the trainees to perform particular tasks at each station. Predesigned checklists are used to score the stations, and the examiners discuss the checklists and how to score them before the examination.

Study design

We used an exploratory qualitative research design employing focus group discussions (FGDs) and thematic content analysis to explore trainees’ perceptions and experiences. Qualitative methodology was chosen because it is the most appropriate naturalistic method of inquiry in a situation where there is little pre-existing knowledge.11

Participants

All postgraduate trainees in the Department of Pediatrics and Child Health were considered eligible. At the time of the study, there were 41 trainees in the three classes (years) of the postgraduate program, 29 (71%) of whom were female. The trainees were from various backgrounds, having received their undergraduate training from different medical schools within and outside Uganda. A number of the first-year students had never experienced an OSCE-type examination before joining the program.

Focus group discussions

FGDs were conducted with trainees who consented to participate. Separate FGDs were held for each year of study to encourage the trainees to open up and express their views freely among their peers. A total of five FGDs were conducted: two for year 3, one each for years 1 and 2, and one combined FGD for years 1 and 2. Each FGD had five or six members, and in total 27 of the 41 trainees (65.9%) participated in the discussions.

All the FGDs were conducted by the same moderator (ASS) and note-taker using an open-ended FGD guide (Supplementary materials). Each FGD lasted about 45–60 minutes and was held in the trainees’ reading rooms at a convenient time when the participants were less occupied, ie, after ward rounds. All FGDs were conducted in English and audio-recorded.

Data collection procedure

An initial round of three FGDs, one for each year of the program (years 1, 2, and 3), was conducted. Key broad themes and categories were identified, and these became the main focus of the subsequent FGDs. A second round of two additional FGDs (one for year 3 and one combining years 1 and 2) was conducted to explore the findings from the first round further. After a total of five FGDs, saturation was reached, with no new concepts being raised. After each FGD, the audio recordings were transcribed verbatim and coded, incorporating the summarized field notes.

Data analysis

The audio recordings and annotated transcripts were reviewed together with the field notes to ensure that no relevant information was missed during transcription and to identify key emotions in the discussions that were not easily captured in the transcripts. ROO and ASS each coded the data separately, then discussed the codes and refined them iteratively. The codes were clustered both during data collection and at the end, leading to the emergence of key themes. Ethical approval was obtained from the School of Medicine research and ethics committee. Study participants agreed to take part in the study by signing an informed consent form.

Results

From the five FGDs, we explored the trainees’ views and perceptions. The findings are presented under the three broad themes that emerged, namely, the appropriateness of OSCEs for assessing clinical competence at the postgraduate level, their impact on trainees’ behavior, and their role in the trainees’ motivation to learn.

Appropriateness of OSCEs in assessing clinical competences

Many of the trainees thought OSCEs are a fair and appropriate way of assessing their clinical competencies because they all go through the same experience in terms of questions, cases, time allocation, and examiners. Having many examiners at different assessment points was also seen to reduce examiner bias.

Year 3 FGD 2: “For OSCEs you all get an equal chance to pass through the same examiners, to do the same exams, there is really no malice, no bias because you are all doing the same questions, same supervisor, same time, same everything so you get an equal opportunity.”

Assesses relevant skills and competencies

Trainees highlighted that the OSCE evaluated a wide range of areas and competencies, such as communication, teaching, and interpretation of results. Importantly, the trainees acknowledged that the skill set (physical examination, history-taking, test interpretation, procedural skills, clinical reasoning, and communication skills) was relevant to what they do on the wards and will do later as pediatricians.

Year 1 FGD 1: “(OSCEs) assess what I have been doing on the wards and what I will do when I qualify. They help to assess the skills that you are supposed to have.”

A number of the trainees thought there were areas that OSCEs did not sufficiently assess, in particular, history-taking skills and depth of knowledge. The time allocated to each station (about 10 minutes) was not enough for detailed history-taking, which is essential in making proper differential diagnoses. Similarly, 10 minutes was insufficient for the deeper discussion and articulation of knowledge expected of a postgraduate. In the trainees’ view, this did not encourage in-depth study or learning.

Year 1 FGD 1: “I think the time is limited. You cannot explain, yet at postgraduate level you are expected to explain – to throw more light about something. Before you even start explaining time is up!”

How the OSCE impacts on trainee behavior

According to the framework of Cilliers et al,10 which was used in this study, the impact of assessment on learning is mediated by four determinants. From the data, three of the determinants, ie, likelihood of impact, appraisal of response, and perceived self-efficacy, were applicable. For the purpose of this study, the responses were classified as having an impact on either clinical or study behavior.

Likelihood of impact

Study behavior

The trainees noted that they were compelled to read widely and cover a broad range of material since the OSCE assesses a broad range of skills.

Years 1 and 2 FGD 4: “With the OSCEs you know that you will be examined in all systems, so you go back and develop yourself in all those systems.”

Clinical behavior

The OSCEs had a clear impact on communication skills, which were frequently assessed. The trainees appreciated the need to be good listeners and to always check the understanding of patients (and students) when giving information.

Year 3, FGD 2: “Then again communication skills have also improved. At [the communication] you are required to first get to know what she [mother of the child] knows then later fill in the gaps. I didn’t know that but now am better. They just think you are being a good doctor because you listened to them and asked them what they knew.”

The likelihood of the OSCEs having an impact seemed to work differently for different students. A few of the trainees reported that OSCEs had not had a significant impact on their behavior. This is probably because it is not always possible to practice the skills demanded by OSCEs during clinical work.

Year 1 FGD 1: “I don’t think OSCEs have affected my study behavior. When you actually look at the way we actually behave in acute [on the wards], and how we behave in an exam, two different personalities, too different.”

Appraisal of response

In the framework devised by Cilliers et al, three aspects are considered, ie, efficacy, cost, and value of response measured against personal goals. The efficacy of response was applicable as the trainees tried to match their learning to what was likely to be assessed and adapted their learning behavior according to the expectations of the OSCEs.

Year 3 FGD 5: “I have gone back to that old tradition of doing things the way it’s supposed to be done because I know it will be examined in an OSCE and of course in our training how you do something is very important.”

Perceived self-efficacy

Perceived self-efficacy is the perception of being able to exert some control over a situation. In terms of study behavior, the unanimous opinion was that anyone who prepared for the OSCEs should be able to pass the examination. However, two differing views emerged about the time needed to prepare. Some trainees felt that preparation required a long time and that OSCE skills had to be integrated into their daily practice, whereas others felt one could pass an OSCE with little effort because OSCEs were quite predictable.

Year 3 FGD 2: “The OSCEs become like a routine. I know I will get the chest, I know I will get an x-ray or a lab exam, so basically I can just work around that and ensure I pass. It’s hard to fail OSCEs.”

Motivation for graduate learning

One of the key findings of this study not covered by the framework of Cilliers et al10 was the inherent motivation for graduate training as a driver for learning. Some of the trainees were highly motivated to learn and improve their clinical skills regardless of their views on OSCEs. For these trainees, the motivation to learn was to acquire the knowledge and skills they need to become specialists rather than to simply pass an examination.

Year 1 FGD 1: “By the time somebody commits to come for a specialist training, there is a particular interest that they are drawn to rather than the exams. I do not think OSCEs are going to define who the person is going to be because will you do the exam all the time?”

Discussion

Overall, the trainees thought the OSCEs were a fair and appropriate way of assessing clinical competencies. This is consistent with the literature, which shows that OSCEs are perceived to be a fair and unbiased examination, accepted by both students and faculty.12–14 Standardization and objectivity made the OSCEs particularly acceptable to trainees. However, the literature shows that standardization of the OSCE per se does not make it a reliable and equitable tool.15,16 It is the appropriate sampling of the domains assessed at the stations that makes the OSCE reliable.15 This is an aspect the trainees did not consider.

In this study, the majority of trainees reported that the OSCE had positively impacted on their study and clinical behavior. This is consistent with the literature: OSCEs have previously been reported to improve students’ clinical performance,13 and procedural, communication, and physical examination skills.1,17

It has previously been thought that the effects of summative assessments like OSCEs are most pronounced in the period before the assessment.18 In this study, however, although the OSCEs were held at a predetermined time (at the end of the semester), the trainees did not report a marked change in behavior as the examinations approached. This is probably because the trainees perceived that the OSCEs inspired them to acquire skills that were necessary for their daily clinical work.12

In the OSCEs, trainees are expected to demonstrate particular skill sets at each station.6 This may be different from the reality of clinical practice, which the OSCE was designed to approximate in the first place.4 In real settings, patients present with their conditions and are managed as a whole rather than in discrete phases.4,19 In our study, the fragmented approach of testing employed by OSCEs had both a positive and negative impact on the trainees. On the positive side, the trainees reported that the short timed session encouraged them to think fast and make quick decisions about patients, which can be an important skill especially in emergency and busy situations. However, the fragmented timed approach of assessment did not encourage in-depth learning. With the OSCEs, students are not motivated to practice complex skills that require longer times, given that OSCEs only assess skills that can be performed within a short time (10 minutes). One possible way of addressing this weakness of the OSCE is to increase the time spent at the stations to allow for more in-depth assessment of clinical skills.

The other issue is the transferability of skills assessed in the OSCEs to real-life clinical settings.20 In our study, some trainees felt that the skills and competencies were transferable because OSCEs compelled trainees to practice these skills on patients. On the other hand, some trainees reported that because conditions on the wards were different from those in examinations, the skills demonstrated in the OSCE were different from the skills required to practice on the wards. Specifically, on the wards trainees encountered congestion and high demands for attention from caretakers, which differ from the controlled, structured environment of OSCEs.

To improve the authenticity of the OSCEs, it is important to create an environment that resembles the real clinical setting.21 In our setting, we try to do this by using real patients with actual clinical features or simulated patients (actors who simulate the clinical features of patients) at the OSCE stations.22 Another option is to use the OSCE in conjunction with other work-based assessment tools.21 Work-based assessments have been shown to have acceptable reliability and validity and to reflect reality by testing students where patient care takes place.23 However, how work-based assessment affects trainee learning and whether it results in the adoption of deep learning approaches or better clinical care is not well described.24,25

The trend in medical education is to use multiple rather than single tools for assessing competence.19 In our study setting, in addition to OSCEs, other assessment tools like direct observations and rating of student interaction with patients on the wards, mini-clinical evaluation exercises, and case-based discussions are used. Given that all these assessments are done concurrently, it is difficult to separate the impact of one from the other. It is possible that some of the trainees did not consider other assessments when making comments about the impact of OSCEs on their learning and clinical practice. Future studies should focus on how assessment programs impact on student learning rather than the impact of single assessment tools like the OSCE.

This study has limitations. First, it was designed to describe the impact of OSCEs from the perspective of the trainees and relied solely on the views and experiences of the trainees themselves. It would have been helpful if other stakeholders, such as their teachers and other clinicians, had corroborated the trainees’ views and perceptions. Second, OSCEs vary depending on the number and design of the stations, the skills assessed, the time allowed at each station, and the way the stations are scored.3 Therefore, the impact of the OSCE as an assessment tool may vary according to the design of the OSCE used.6

Conclusion and further research

This study found that trainees perceive OSCEs as a useful tool in assessing their clinical competencies at the postgraduate level. The use of OSCEs for summative assessments positively impacted on both the study and clinical behavior of the trainees. The impact of the OSCE on the trainees’ behavior is moderated by some factors in the theoretical framework proposed by Cilliers et al.10 Future work should explore how OSCEs can be combined with the other tools to maximally impact on student learning. More studies need to be conducted to establish theoretical frameworks for how assessments affect the behaviors of graduate trainees.

Acknowledgments

We thank all the senior house officers from the Department of Paediatrics and Child Health, Makerere University, who participated and freely shared their views and experiences with us. We acknowledge the contributions of Mabel Mayende and Carol Akullu in assisting with the focus group work as well as those of Jolly Nankunda and Joseph Rujumba for useful suggestions to improve this research. A summary of this work was presented at a medical education conference in Kampala in June 2014. The study was supported in part by a MEPI grant (5R24TW008886) awarded to a consortium of Ugandan Medical Schools (MESAU).

Author contributions

ROO, SK, MG, and ED were involved in the design of this study. ROO wrote the first draft of the paper. ASS conducted the focus group discussions. All authors contributed to data analysis and revising the paper, and agree to be accountable for all aspects of the work.

Disclosure

The authors report no conflicts of interest in this work.

References

1. Carraccio C, Englander R. The Objective Structured Clinical Examination – a step in the direction of competency-based evaluation. Arch Pediatr Adolesc Med. 2000;154:736–741.

2. Harden RM, Stevenson M, Wilson DW, Wilson GM. Assessment of clinical competence using objective structured examination. BMJ. 1975;1:447–451.

3. Harden RM, Gleeson FA. Assessment of clinical competence using an objective structured clinical examination (OSCE). Med Educ. 1979;13:41–54.

4. Turner JL, Dankoski ME. Objective structured clinical exams: a critical review. Fam Med. 2008;40:574–578.

5. Cusimano CA, Cohen R, Tucker W, Murnaghan J, Kodama R. A comparative analysis of the costs of administration of an OSCE. Acad Med. 1994;69:571–576.

6. Hodges B. OSCE! Variations on a theme by Harden. Med Educ. 2003;37:1134–1140.

7. Newble DI. Techniques for measuring clinical competence: objective structured clinical examinations. Med Educ. 2004;38:199–203.

8. Norman G, Neville A, Blake JM, Mueller B. Assessment steers learning down the right road: impact of progress testing on licensing examination performance. Med Teach. 2010;32:496–499.

9. Al-Kadri HM, Al-Moamary MS, Roberts C, Van der Vleuten CP. Exploring assessment factors contributing to students’ study strategies: literature review. Med Teach. 2012;34:S42–S50.

10. Cilliers FJ, Schuwirth LW, Adendorff HJ, Herman N, van der Vleuten CP. The mechanism of impact of summative assessment on medical students’ learning. Adv Health Sci Educ Theory Pract. 2010;15:695–715.

11. Silverman D. Doing Qualitative Research: A Practical Handbook. London, UK: Sage Publications Ltd; 2000.

12. Newble DI. Eight years’ experience with a structured clinical examination. Med Educ. 1988;22:200–204.

13. Duerson MG, Romrell LJ, Stevens CB. Impacting faculty teaching and student performance: nine years’ experience with Objective Structured Clinical Examination. Teach Learn Med. 2000;12:176–182.

14. Pierre RB, Wierenga A, Barton M, Branday JM, Christie CD. Student evaluation of an OSCE in Paediatrics at the University of the West Indies, Jamaica. BMC Med Educ. 2004;4:22.

15. van der Vleuten CP, Schuwirth LW, Driessen EW, et al. A model for programmatic assessment fit for purpose. Med Teach. 2012;34:205–214.

16. Schuwirth L, Ash J. Assessing tomorrow’s learners: in competency-based education only a radically different holistic method of assessment will work. Six things we could forget. Med Teach. 2013;5:341–345.

17. Cohen R, Reznick RK, Taylor BR, Provan J, Rothman A. Reliability and validity of the objective structured clinical examination in assessing surgical residents. Am J Surg. 1990;160:302–305.

18. Cilliers FJ, Schuwirth LW, Herman N, Adendorff HJ, van der Vleuten CP. A model of pre-assessment learning effects of summative assessment in medical education. Adv Health Sci Educ Theory Pract. 2012;17:39–53.

19. van der Vleuten CP, Schuwirth LW. Assessing professional competence: from methods to programmes. Med Educ. 2005;39:309–317.

20. Rethans JJ, Norcini JJ, Barón-Maldonado M, et al. The relationship between competence and performance: implications for assessing practice performance. Med Educ. 2002;36:901–909.

21. Marwaha S. Objective Structured Clinical Examinations (OSCEs), psychiatry and the Clinical assessment of Skills and Competencies (CASC). Same evidence, different judgement. BMC Psychiatry. 2011;11:85.

22. Gormley GJ, McCusker D, Booley MA, McNeice A. The use of real patients in OSCEs: a survey of medical students’ predictions and opinions. Med Teach. 2011;33:684.

23. Norcini JJ. Setting standards on educational tests. Med Educ. 2003;37:464–469.

24. Al-Kadri HM, Al-Kadi MT, Van Der Vleuten CP. Workplace-based assessment and students’ approaches to learning: a qualitative inquiry. Med Teach. 2013;35:S31–S38.

25. Miller A, Archer J. Impact of workplace based assessment on doctors’ education and performance: a systematic review. BMJ. 2010;341:c5064.

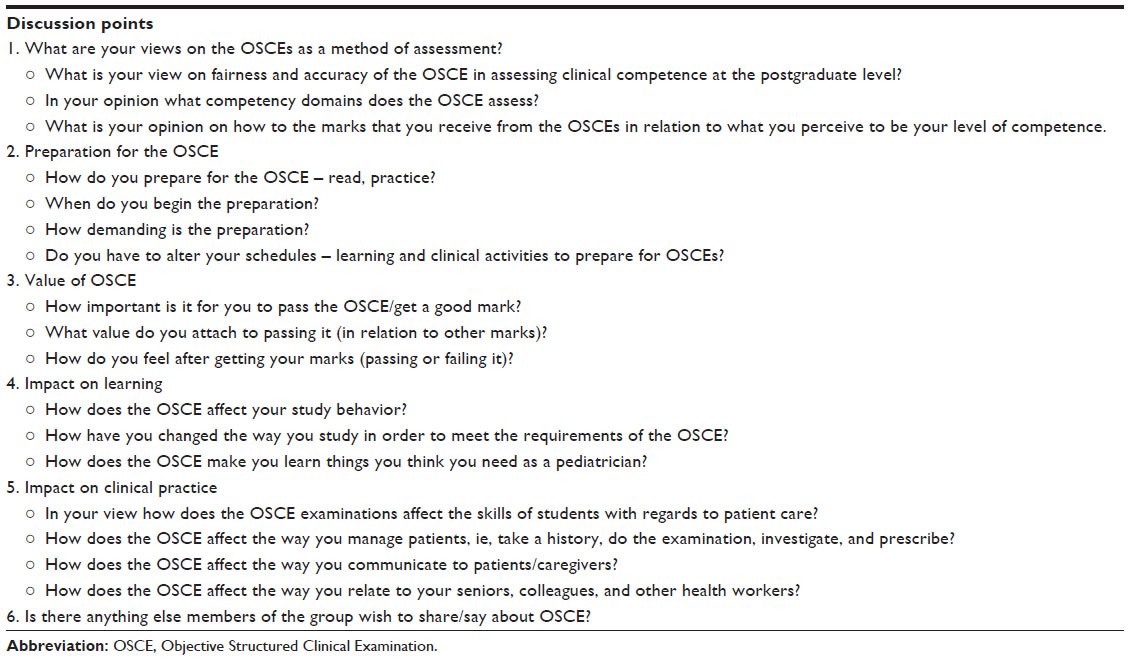

Supplementary materials

Focus group discussion guide

Introduction

Assessment drives learning, encouraging both desirable and undesirable learning strategies. The OSCE is a commonly accepted method for assessing clinical competencies. The aim of this study is to explore what impact the OSCE as a method of assessment has on your day-to-day study and clinical behavior.

© 2015 The Author(s). This work is published and licensed by Dove Medical Press Limited. The full terms of this license are available at https://www.dovepress.com/terms.php and incorporate the Creative Commons Attribution - Non Commercial (unported, v3.0) License.

By accessing the work you hereby accept the Terms. Non-commercial uses of the work are permitted without any further permission from Dove Medical Press Limited, provided the work is properly attributed. For permission for commercial use of this work, please see paragraphs 4.2 and 5 of our Terms.
