Medical Devices: Evidence and Research, Volume 12

Undermining a common language: smartphone applications for eye emergencies

Authors: Charlesworth JM, Davidson MA

Received 5 September 2018

Accepted for publication 26 October 2018

Published 15 January 2019; Volume 2019:12, Pages 21–40

DOI https://doi.org/10.2147/MDER.S186529

Checked for plagiarism Yes

Review by Single anonymous peer review

Peer reviewer comments 2

Editor who approved publication: Dr Scott Fraser

Jennifer M Charlesworth,1,2 Myriam A Davidson2

1School of Medicine, National University of Ireland, Galway, Ireland; 2AM Charlesworth & Associates Science and Technology Consultants, Ottawa, ON, Canada

Background: Emergency room physicians are frequently called upon to assess eye injuries and vision problems in the absence of specialized ophthalmologic equipment. Technological applications that can be used on mobile devices are only now becoming available.

Objective: To review the literature on the evidence of clinical effectiveness of smartphone applications for visual acuity assessment marketed by two providers (Google Play and iTunes).

Methods: The websites of two mobile technology vendors (iTunes and Google Play) in Canada and Ireland were searched on three separate occasions using the terms “eye”, “ocular”, “ophthalmology”, “optometry”, “vision”, and “visual assessment” to determine which applications were currently available. Four medical databases (Cochrane, Embase, PubMed, Medline) were then searched with the same terms combined with “mobile” OR “smart phone” for English-language papers published in 2010–2017.

Results: A total of 5,024 Canadian and 2,571 Irish applications were initially identified; after screening, 44 were retained. Twelve relevant articles were identified in the health literature. After screening, only one validation study referred to one of our identified applications, and it only partially validated that application for clinical use.

Conclusion: Mobile device applications in their current state are not suitable for emergency room ophthalmologic assessment, because systematic validation is lacking.

Keywords: visual assessment, visual acuity and emergency medicine, epidemiology, methodology, ophthalmology, ocular

Background

The clinical utility of available smartphone applications for emergency health care providers who evaluate ophthalmologic complaints has not yet been established. Emergency room physicians evaluate a variety of ophthalmologic emergencies, including acute glaucoma, retinal detachment, and episcleritis/scleritis. These emergencies can threaten vision and require careful visual examination. A quick, accessible, portable electronic tool that evaluates vision in patients of all ages at the bedside is needed.1–4 Before use, however, such tools must be rigorously evaluated: transferring a tool from paper to a smartphone does not guarantee that its reliability and validity remain intact.5–7

Visual acuity (VA) tools (eg, Eye Handbook, Visual Acuity XL) are available on smartphones and are used to varying degrees in emergency departments. VA tests give clinicians an estimate of a patient’s ability to perceive spatial detail,8 and are one component of a full assessment. VA is the easiest and most important test for bedside evaluation, because it is associated with both quality of life and the degree of limitation in instrumental activities of daily living, especially in the geriatric population.9–11

Evaluation of VA faces a number of challenges12–14 because it comprises detection acuity (the ability to detect whether a visual stimulus is present or absent), resolution acuity (the ability to resolve spatial detail from the background), and recognition acuity (the ability to identify and recognize a target). This evaluation can be especially difficult in young children13 or the elderly.15

Today, eye care professionals use the Bailey–Lovie chart14 and the Early Treatment Diabetic Retinopathy Study (ETDRS) chart.16 Both have standard letter optotypes (letter-like images), with five optotypes per line. These tools correlate well with ocular pathology in the adult population and are the gold standard for VA. In research, VA is now expressed in logarithm of the minimum angle of resolution (logMAR) units rather than as Snellen fractions (eg, 20/40 in feet, equivalent to 6/12 in meters), although the latter are still often used in emergency departments.13,17
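To make the relationship between the two notations concrete, the sketch below converts a Snellen fraction to logMAR (logMAR is log10 of the minimum angle of resolution, and MAR is the Snellen denominator divided by the numerator) and applies per-letter scoring on a five-letter-per-line chart in which each line steps by 0.1 logMAR, so each letter is worth 0.02 logMAR. The function names are hypothetical, and the scoring rule is one common convention assumed for illustration, not a procedure taken from this study.

```python
import math


def snellen_to_logmar(test_distance: float, letter_distance: float) -> float:
    """Convert a Snellen fraction (test distance / letter distance) to logMAR.

    MAR, the minimum angle of resolution in arcminutes, equals the letter
    distance (the distance at which the letter subtends 5 arcmin) divided by
    the test distance, so 20/40 and 6/12 both give MAR = 2.
    """
    if test_distance <= 0 or letter_distance <= 0:
        raise ValueError("Snellen distances must be positive")
    return math.log10(letter_distance / test_distance)


def letter_by_letter_logmar(top_line_logmar: float, letters_correct: int,
                            letters_per_line: int = 5,
                            line_step: float = 0.1) -> float:
    """Per-letter logMAR score on a chart with a constant 0.1 logMAR line step.

    Assumes testing starts at the top (largest) line and every letter read
    correctly, on any line, earns line_step / letters_per_line (0.02 logMAR).
    Reading no letters scores one line step worse than the top line.
    """
    per_letter = line_step / letters_per_line
    return top_line_logmar + line_step - per_letter * letters_correct


print(round(snellen_to_logmar(20, 40), 2))         # 0.3
print(round(snellen_to_logmar(6, 12), 2))          # 0.3 (same acuity, metric notation)
print(round(snellen_to_logmar(20, 20), 2))         # 0.0
print(round(letter_by_letter_logmar(1.0, 53), 2))  # 0.04: ten full lines plus 3 letters
```

Per-letter scoring is shown because it uses the full resolution of a five-optotype line; line-by-line scoring would round the 53-letter result to a whole line.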

There are smartphone applications for VA tests that could replace the older paper versions. The problem is that these applications may not have undergone the rigorous methodological assessment necessary for either screening out pathological conditions or arriving at an accurate diagnosis. Previous reviews of smartphone applications in other areas of medicine have demonstrated substantial variation in quality.17–20 Quality is especially important in acute care settings, where urgent treatment decisions need to be made. The aim of this paper was to assess the evidence for the usefulness and validity of selected smartphone applications intended for ophthalmologic assessment of acute eye emergencies.

Methods

This systematic review identified relevant smartphone applications in Canada and Ireland in two steps. First, we searched the websites of two mobile technology vendors (iTunes and Google Play) on three separate occasions between November 2014 and July 2017 using the search terms “eye”, “ocular”, “ophthalmology”, “optometry”, “vision”, and “visual assessment”. Second, we searched four medical databases (Cochrane, Embase, PubMed, Medline) for research papers on the applications we had identified, using the same search terms with the addition of “mobile” or “smartphone”. We included only papers written in English and published from 2010 to 2017. Throughout the analysis, the two authors extracted data independently, and disagreements about relevance were resolved by discussion. This systematic review thus evaluates existing smartphone applications marketed to health care professionals in Canada and Ireland for the determination of VA.
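The database query combines the subject terms with the mobile-device terms. The sketch below shows one way to assemble such a Boolean string with explicit grouping, so that AND joins the two blocks rather than a single term; some engines (PubMed, for instance, processes Boolean operators left to right) would otherwise read “terms AND mobile OR smartphone” as “(terms AND mobile) OR smartphone”. The generic quoting and parenthesis syntax is an assumption: the actual strategy is given in Figure S1, and each database has its own field tags, which are not modeled here.

```python
# Search terms as listed in the Methods; the device terms are the added block.
SUBJECT_TERMS = ["eye", "ocular", "ophthalmology", "optometry", "vision",
                 "visual assessment"]
DEVICE_TERMS = ["mobile", "smartphone"]


def or_group(terms: list[str]) -> str:
    """Join terms with OR, quoting each so multi-word phrases stay intact."""
    return "(" + " OR ".join(f'"{t}"' for t in terms) + ")"


def build_query(subject_terms: list[str], device_terms: list[str]) -> str:
    """Combine the subject block and the device block with AND.

    Parentheses make the intended precedence explicit regardless of how a
    particular search engine orders AND/OR evaluation.
    """
    return f"{or_group(subject_terms)} AND {or_group(device_terms)}"


print(build_query(SUBJECT_TERMS, DEVICE_TERMS))
```

In practice each database would also need its own field restrictions (title/abstract, language, date limits), which this sketch leaves out.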

Application search

Identification of mobile applications

An iterative search of the online application stores for iPhone (iTunes App Store) and Android (Google Play) devices was conducted in both countries, with the search terms (Figure S1) adapted for each store. Both authors independently reviewed applications for inclusion on the basis of a priori criteria (Figure S2). The final update was completed in November 2017. The search was limited to these two stores because, according to the International Data Corporation Worldwide Quarterly Mobile Phone Tracker (May 2017), they account for the majority (99.7%) of smartphone user platforms and hold most of the market share in the two target regions.21

Selection criteria

English-language applications marketed to health care professionals for the evaluation of vision were screened by title and description. Applications aimed at education/knowledge dissemination, games, self-monitoring, multimedia/graphics, recreational health and/or fitness, business, travel, weather, or sports, as well as clinically outdated applications, were excluded. Where it was unclear whether an application should be included, any linked websites were reviewed further.
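The a priori inclusion and exclusion rules can be expressed as a simple filter. This is illustrative only: in the study, screening was done by two reviewers reading titles and descriptions, not by code. The record fields, category labels, and the function name screen_app are hypothetical, as is the choice to flag a vision test with an unclear target audience for further review.

```python
from dataclasses import dataclass

# Exclusion categories from the selection criteria (labels are hypothetical).
EXCLUDED_CATEGORIES = {
    "education", "game", "self-monitoring", "multimedia", "recreational health",
    "fitness", "business", "travel", "weather", "sports",
}


@dataclass
class AppListing:
    title: str
    language: str                 # listing language, e.g. "en"
    targets_professionals: bool   # marketed to health care professionals?
    assesses_vision: bool         # stated purpose is evaluation of vision
    category: str                 # reviewer-assigned category label
    clinically_outdated: bool = False


def screen_app(app: AppListing) -> str:
    """Return 'include', 'exclude', or 'review' (unclear: check linked website)."""
    if app.language != "en":
        return "exclude"
    if app.category in EXCLUDED_CATEGORIES or app.clinically_outdated:
        return "exclude"
    if app.assesses_vision and app.targets_professionals:
        return "include"
    if app.assesses_vision:
        # Assumed rule: a vision test with an unclear audience needs more review.
        return "review"
    return "exclude"


print(screen_app(AppListing("Hypothetical chart app", "en", True, True, "medical")))  # include
print(screen_app(AppListing("Hypothetical eye game", "en", False, False, "game")))    # exclude
```

Keeping a "review" outcome, rather than forcing a yes/no decision, mirrors the Methods’ provision for checking linked websites when a listing was ambiguous.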

Data extraction and encoding

Data elements extracted included year of release, affiliation (academic, commercial), stated target audience, content source, and cross-platform availability (for use with tablets and/or computers). A preliminary coding system was developed based on the first application store.
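One way to hold these data elements is a fixed record per application, which also makes descriptive percentages easy to recompute. The field names and the percent_by helper are hypothetical; the study’s own coding system is not reproduced here. The placeholder records mirror only the provider counts reported in the Results (18 companies, 5 individuals, 1 unlisted, of 24); all other field values are dummies.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class ExtractionRecord:
    name: str
    release_year: int
    affiliation: str        # e.g. "academic", "commercial", "individual", "none listed"
    stated_target: str      # target audience as stated in the listing
    content_source: str
    cross_platform: bool    # also available for tablets and/or computers


def percent_by(records: list[ExtractionRecord], field: str) -> dict[object, float]:
    """Percentage of records for each value of `field`, rounded to one decimal."""
    counts = Counter(getattr(r, field) for r in records)
    total = len(records)
    return {value: round(100 * n / total, 1) for value, n in counts.items()}


# Placeholder data set: only the affiliation counts follow the Results text.
records = (
    [ExtractionRecord(f"company{i}", 2016, "commercial", "clinicians", "vendor", True)
     for i in range(18)]
    + [ExtractionRecord(f"individual{i}", 2016, "individual", "clinicians", "vendor", False)
       for i in range(5)]
    + [ExtractionRecord("unlisted", 2016, "none listed", "clinicians", "vendor", False)]
)
print(percent_by(records, "affiliation"))
# {'commercial': 75.0, 'individual': 20.8, 'none listed': 4.2}
```

Rounded to whole numbers, these give the 75%, 21%, and 4% reported for providers.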

Health literature search

A systematic search of the four major databases (Figure 1) was conducted for the period January 1, 2010 to July 31, 2016. The search strategy was developed in consultation with a medical librarian and a methodological search professional (Figure S1), and was supplemented by a review of relevant reference material to identify any missed literature.

Figure 1 Identification of relevant smartphone studies.

Identification of articles for literature review

Selection criteria, data extraction, and coding

Twelve relevant articles were identified in the literature using the processes described (Figure 1). Additional exclusion criteria for articles included preclinical studies or those addressing clinically specialized ophthalmologic/neurological populations not seen in the emergency room (Table S1).

Results

Applications

A total of 44 applications were retained in the final data set after screening of 7,595 applications. In the Canadian iTunes store, 2,526 applications met our initial search criteria. Of those, 927 were unique and suitable for detailed review (Figure 2): 229 were screened by title, of which 21 were selected on the basis of their description. Similar selections were made in Google Play. The results were combined and four additional duplicates were removed to obtain a final data set of 24 applications, whose characteristics are summarized in Table 1. The Irish iTunes store had substantially fewer applications and duplicates: 1,100 applications were identified and 307 duplicates removed, leaving 793 applications to be screened by title (Figure 3).

Figure 2 Selection of Canadian smartphone applications.

Table 1a Results of systematic application review, Canada (n=24)

Figure 3 Selection of Irish smartphone applications.

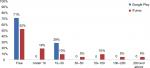

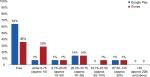

Across the two Canadian stores, Google Play on average had a much lower percentage of on-target applications than iTunes (0.5% vs 2.3%), and iTunes had a much higher percentage of paid applications than Google Play (52% vs 29%; Figure 4). Applications in the Google Play store were more likely to be free and were less expensive overall; this trend was also seen in the Irish stores (Figure 5). In the iTunes store, only a single application cost more than $200, but 19% of the identified applications cost $50 or more (Figure 4). Of the applications available on both platforms, 50% (n=2) were free on both; one was more expensive in the iTunes store, and one was more expensive in Google Play. The same pattern was seen in the Irish stores.

Figure 4 Cost of Canadian smartphone applications by platform.

Figure 5 Cost of Irish smartphone applications by platform.

None of the listed providers are academic institutions. One application (4%) referred to an academic affiliation in the form of a validation study from the University of Auckland. The majority (75%, n=18) of the identified applications originated from companies. Individuals provided the remaining 21% (n=5) of applications, while 4% (n=1) of applications did not list any provider.

Eighteen of 24 applications in the two Canadian stores vs 22 of 24 in the two Irish stores (75% vs 92%) explicitly indicated which health care professionals they were marketed to. Targeted professionals included medical doctors, ophthalmologists, opticians, and medical students, among others. However, 58% of these applications in Canadian stores vs 42% in Irish stores included some sort of disclaimer, eg, “Should not be used as a primary visual acuity measuring tool, it can provide a handy rough vision screen when a chart is not available, or it can be used to complement static, wall-based Snellen charts” (Eye Chart HD by Dok LLC).

In the Canadian stores, four applications (17%) claimed to be validated, although on investigation none had used large-scale head-to-head studies under clinical conditions or tested a variety of modern smartphones or tablets. In the Irish stores, even fewer applications (two of 24, 8%) claimed some type of validation. Of the four Canadian applications claiming validation, Visual Acuity XL showed the most methodological rigor, as evidenced by its stated affiliation with the University of Auckland and a 2013 validation study by Black et al.22 All four were available in the iTunes store, but only one, Pocket Eye Exam by Nomad, was also available on the Google Play platform. In the Irish stores, applications claiming validation appeared only in the iTunes store (Table 2).

Of note, applications varied by country, although 58% (14 of 24) were present in both countries; each country had ten applications not available in the other. Moreover, within a given country, applications were not necessarily available on both platforms. For example, KyberVision Japan’s Visual Acuity XL, the most robustly validated application, was available in both countries, but only on the iTunes platform.

Overall, only 21% (n=5) of applications in the Canadian stores were affiliated with academic institutions; given the limited information available, this was our best proxy measure for academic rigor. Only one application, Visual Acuity XL, explicitly mentioned a validation study. In the Irish stores, the same four applications were affiliated with academic institutions. The fifth Canadian application referred to a research group without explicitly naming an affiliated academic institution, but it was retained by consensus of the investigators.

Systematic review of the literature

Twelve relevant articles were retained from an initial 5,648 identified by the systematic literature search (Figure 1). Only ten of 15 assessed methods of validation for smartphone applications. Two review articles discussed a variety of applications, but did not identify primary data sources. In 2014, Cheng et al23 briefly discussed some of the challenges in this area. Most articles compared one to three applications on a specific smartphone or tablet. One article examined the validity of the Eye Handbook against a conventional near vision card and found that the application tended to overestimate near VA by an average of 0.11 logMAR (P<0.0001).
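A mean difference such as the 0.11 logMAR reported here is usually interpreted alongside the spread of the paired differences; for scale, 0.11 logMAR is slightly more than one chart line (0.1 logMAR, five letters). The sketch below computes the mean bias and 95% limits of agreement (the Bland–Altman approach: mean difference ± 1.96 SD of the differences) for paired logMAR scores. It is offered as a commonly used agreement method, not necessarily the analysis used in the cited study, and the sample values are invented.

```python
import statistics


def limits_of_agreement(app: list[float], chart: list[float]) -> tuple[float, float, float]:
    """Return (mean bias, lower limit, upper limit) for paired logMAR scores.

    Differences are app minus chart, so a negative bias means the app reports
    a lower logMAR, i.e. better acuity, than the reference chart.
    """
    if len(app) != len(chart) or len(app) < 2:
        raise ValueError("need at least two paired measurements")
    diffs = [a - c for a, c in zip(app, chart)]
    bias = statistics.mean(diffs)
    sd = statistics.stdev(diffs)  # sample standard deviation of the differences
    return bias, bias - 1.96 * sd, bias + 1.96 * sd


# Invented paired measurements for four eyes (logMAR).
app_scores = [0.0, 0.1, 0.1, 0.3]
chart_scores = [0.1, 0.2, 0.3, 0.4]
print([round(x, 3) for x in limits_of_agreement(app_scores, chart_scores)])
# [-0.125, -0.223, -0.027]
```

A bias with narrow limits could in principle be corrected for, whereas wide limits mean individual readings cannot be trusted even when the average is close; a single mean difference does not distinguish the two.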

Given the rate at which mobile technology changes and is upgraded,21 the specific applications reviewed here are likely already obsolete. Moreover, the identified applications and the health literature correspond poorly and seldom cross-reference each other: only one application cited a validation study. Furthermore, when literature is referenced, it may not be accessible to the consumer because of copyright restrictions on journals that are not open access. Although the Eye Handbook did have some validation in the health literature,24,25 this was not mentioned on the application’s online store page (Table 3). This makes it impossible for busy health care professionals to distinguish a validated application from the others.

Discussion

This systematic review demonstrates that despite the availability of many mobile device applications for ophthalmologic assessment, they are either unsuitable for the emergency room or lack systematic validation. A total of 5,024 Canadian and 2,571 Irish applications were identified on Google Play and iTunes as potentially usable in ocular emergency diagnostics. Fewer than 1% of these (n=44) were unique and on target as potentially suitable. Five applications were affiliated with an academic institution: four available in the stores of both countries and one available only in a Canadian store. Only a single application explicitly cited a validation study in its online store listing, and by current standards of best practice this validation could only be described as partial.

When searched in the academic literature, three applications (Visual Acuity XL, Eye Handbook, and AmblyoCare) had some evidence of validation. The Eye Handbook was validated by a single study26 on the iPhone 5, which did not address glare; in studies of other applications, glare has been shown to make results unreliable.27 Black et al22 used a cross-sectional design with a convenience sample of 85 healthy volunteers to show that a first-generation iPad running Visual Acuity XL could reproduce gold-standard eye chart results, but only with careful attention to positioning and with modifications to the tablet’s screen to avoid glare. Outside these standardized conditions, iPad results were significantly poorer than those of standard paper-based/wall-mounted eye charts.28

Arora et al28 noted the advantage of preliminary screening with the Eye Handbook application for home monitoring or public health data, but considered it not yet useful in the clinical setting. These authors did not comment on the reliability or validity of the Eye Handbook’s adaptation of the gold-standard ETDRS chart into a now-obsolete application for the iPod touch and iPhone 3G. Nor did they address how screen glare, or the size of the device and hence the patient’s required distance from it, affected test validity.

The online store text for the AmblyoCare28 application indicates that it is registered as a medical device with the Austrian medical board. However, our literature review found no original research data for this application, so the limits of its clinical use are unclear.28 Consequently, no transparent validation of this tool is accessible to health care professionals.

The difficulty with all these applications is that adapting a visual chart, eg, a Snellen chart, to the varying screen sizes and properties of different smartphones does not ensure that the size and font of the optotypes, or the required viewing distance, preserve the diagnostic properties of the original paper chart.5,7,29,30 One may argue that these tools could provide a rough estimate of vision sufficient for an emergency clinician. We would respond, however, that results from tools that have not been validated cannot be meaningfully compared with known benchmarks when making treatment decisions about a patient’s eyesight. For example, an application’s classification of someone’s sight as “normal” is not comparable to a measured acuity of logMAR 0.0 (Snellen 20/20) on a standardized chart. Such results may lead to treatment decisions based on faulty information.
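The dependence on screen properties can be made concrete. A letter of a given logMAR subtends 5 × MAR arcminutes at the eye, so its physical height depends on the viewing distance, and the number of pixels available to draw it depends on the screen’s pixel density. The sketch below computes both; the 326 pixels-per-inch value is an assumed example, not a property of any device reviewed here, and the function names are hypothetical.

```python
import math

ARCMIN_PER_DEGREE = 60.0
MM_PER_INCH = 25.4


def letter_height_mm(viewing_distance_mm: float, logmar: float) -> float:
    """Physical height of an optotype that subtends 5 x MAR arcminutes."""
    mar_arcmin = 10 ** logmar                      # MAR in arcminutes
    angle_deg = 5 * mar_arcmin / ARCMIN_PER_DEGREE
    # Height subtending the full angle at this distance: 2 * d * tan(angle / 2).
    return 2 * viewing_distance_mm * math.tan(math.radians(angle_deg) / 2)


def letter_height_px(viewing_distance_mm: float, logmar: float, ppi: float) -> float:
    """The same height expressed in pixels on a display with `ppi` pixels per inch."""
    return letter_height_mm(viewing_distance_mm, logmar) / MM_PER_INCH * ppi


ASSUMED_PPI = 326  # example value only

for distance_mm in (6000, 3000, 400):            # 6 m, 3 m, and a 40 cm near test
    h_mm = letter_height_mm(distance_mm, 0.0)    # logMAR 0.0 (Snellen 20/20, 6/6)
    h_px = letter_height_px(distance_mm, 0.0, ASSUMED_PPI)
    print(f"{distance_mm / 1000:.1f} m: {h_mm:.2f} mm, {h_px:.1f} px")
# 6.0 m: 8.73 mm, 112.0 px
# 3.0 m: 4.36 mm, 56.0 px
# 0.4 m: 0.58 mm, 7.5 px
```

At the near distance, a logMAR 0.0 letter spans fewer than eight pixels on this assumed screen, so each of the five stroke-width units of a Sloan-style optotype is about 1.5 pixels wide; pixel rounding or anti-aliasing alone could then change the optotype’s appearance. Whether that matters for a given device is exactly what validation under clinical conditions would need to establish.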

Limitations

We did not examine smaller electronic markets, and our analysis considered only each application’s store description. Applications for non-English-speaking markets were excluded from the review. We did not address variation in regulatory requirements across global markets. Unlike some similar studies, we did not seek the opinions of professionals in this field.

Conclusion

We conclude that efficient regulation and standardization of valid clinical tools for smartphones are needed.18,31,32 This is a major challenge. One possible solution could come from the business world. Rather than relying on free, advertising-supported applications offered to individual health care professionals, a model that rewards individual developers of low-quality applications, a business case could be developed to pool resources, first nationally and perhaps eventually internationally, to fund high-quality applications that can be used globally. This would consolidate funding and expertise into a high-quality, validated application of international value, on a par with paper tools.

Despite the bright future for smartphone technology, mobile device applications in their current state are not suitable for emergency room ophthalmologic assessment. Clinicians also need education about measurement science and the limits of technological validation. The importance of high-quality electronic diagnostic tools for patients, and the challenges introduced by nonvalidated tools, need to be communicated to all health professionals.

Data-sharing statement

Most data generated or analyzed during this study are included in this published article and its supplementary information files. The original data sets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

Acknowledgments

This work would not have been possible without the generous support of the following dedicated individuals. Thank you: Dr Mary V Seeman, Mrs Jennifer Desmarais, Mr Tobias Feih, Dr Joshua Chan, Ms Chelsea Lefaivre, Ms Johanna Tremblay, Ms Jennifer Lay, Dr Amanda Carrigan, Dr Clarissa Potter, Dr Gerald Lane, and Dr Vinnie Krishnan.

Author contributions

JMC conceived and designed the study and supervised the conduct of the study and data collection. Both authors collected, analyzed, and managed the data, performed quality control, provided statistical advice, drafted the manuscript and contributed substantially to its revision, gave final approval of the version to be published, and agree to be accountable for all aspects of the work.

Disclosure

The authors report no conflicts of interest in this work.

References

Bourges JL, Boutron I, Monnet D, Brézin AP. Consensus on Severity for Ocular Emergency: The BAsic SEverity Score for Common OculaR Emergencies [BaSe SCOrE]. J Ophthalmol. 2015;2015:576983.

Collignon NJ. Emergencies in glaucoma: a review. Bull Soc Belge Ophtalmol. 2005;296(296):71–81.

Muth CC. Eye Emergencies. JAMA. 2017;318(7):676.

Tarff A, Behrens A. Ocular Emergencies: Red Eye. Med Clin North Am. 2017;101(3):615–639.

Bellamy N, Campbell J, Hill J, Band P. A comparative study of telephone versus onsite completion of the WOMAC 3.0 osteoarthritis index. J Rheumatol. 2002;29(4):783–786.

Bond M, Davis A, Lohmander S, Hawker G. Responsiveness of the OARSI-OMERACT osteoarthritis pain and function measures. Osteoarthritis Cartilage. 2012;20(6):541–547.

Hawker GA, Davis AM, French MR, et al. Development and preliminary psychometric testing of a new OA pain measure-an OARSI/OMERACT initiative. Osteoarthritis Cartilage. 2008;16(4):409–414.

Kniestedt C, Stamper RL. Visual acuity and its measurement. Ophthalmol Clin North Am. 2003;16(2):155–170.

Chou R, Dana T, Bougatsos C, Grusing S, Blazina I. Screening for Impaired Visual Acuity in Older Adults: Updated Evidence Report and Systematic Review for the US Preventive Services Task Force. JAMA. 2016;315(9):915–933.

Matthews K, Nazroo J, Whillans J. The consequences of self-reported vision change in later-life: evidence from the English Longitudinal Study of Ageing. Public Health. 2017;142:7–14.

Hochberg C, Maul E, Chan ES, et al. Association of vision loss in glaucoma and age-related macular degeneration with IADL disability. Invest Ophthalmol Vis Sci. 2012;53(6):3201–3206.

Gerra G, Zaimovic A, Gerra ML, et al. Pharmacology and toxicology of Cannabis derivatives and endocannabinoid agonists. Recent Pat CNS Drug Discov. 2010;5(1):46–52.

Sonksen PM, Salt AT, Sargent J. Re: the measurement of visual acuity in children: an evidence-based update. Clin Exp Optom. 2014;97(4):369.

Bailey IL, Lovie JE. New design principles for visual acuity letter charts. Am J Optom Physiol Opt. 1976;53(11):740–745.

Abdolali F, Zoroofi RA, Otake Y, Sato Y. Automatic segmentation of maxillofacial cysts in cone beam CT images. Comput Biol Med. 2016;72:108–119.

Elliott DB, Whitaker D, Bonette L. Differences in the legibility of letters at contrast threshold using the Pelli-Robson chart. Ophthalmic Physiol Opt. 1990;10(4):323–326.

Anstice NS, Thompson B. The measurement of visual acuity in children: an evidence-based update. Clin Exp Optom. 2014;97(1):3–11.

Bender JL, Yue RY, To MJ, Deacken L, Jadad AR. A lot of action, but not in the right direction: systematic review and content analysis of smartphone applications for the prevention, detection, and management of cancer. J Med Internet Res. 2013;15(12):e287.

Lalloo C, Shah U, Birnie KA, et al. Commercially available smartphone apps to support postoperative pain self-management: scoping review. JMIR Mhealth Uhealth. 2017;5(10):e162.

Larsen ME, Nicholas J, Christensen H. A Systematic assessment of smartphone tools for suicide prevention. PLoS One. 2016;11(4):e0152285.

Smartphone OS Market Share, 2017 Q1. Smartphone OS 2017. Available from: https://www.idc.com/promo/smartphone-market-share/os. Accessed November 17, 2017.

Black JM, Jacobs RJ, Phillips G, et al. An assessment of the iPad as a testing platform for distance visual acuity in adults. BMJ Open. 2013;3(6):e002730.

Cheng NM, Chakrabarti R, Kam JK. iPhone applications for eye care professionals: a review of current capabilities and concerns. Telemed J E Health. 2014;20(4):385–387.

Perera C, Chakrabarti R. Response to: ‘Comment on The Eye Phone Study: reliability and accuracy of assessing Snellen visual acuity using smartphone technology’. Eye. 2015;29(12):1628.

Perera C, Chakrabarti R, Islam A, Crowston J. The eye phone study (EPS): Reliability and accuracy of assessing snellen visual acuity using smart-phone technology. Clinical and Experimental Ophthalmology. 2012. Conference abstract: 44th Annual Scientific Congress of the Royal Australian and New Zealand College of Ophthalmologists (RANZCO 2012); November 24–28, 2012; Melbourne, VIC, Australia.

Tofigh S, Shortridge E, Elkeeb A, Godley BF. Effectiveness of a smartphone application for testing near visual acuity. Eye. 2015;29(11):1464–1468.

Black JM, Hess RF, Cooperstock JR, To L, Thompson B. The measurement and treatment of suppression in amblyopia. J Vis Exp. 2012;70:e3927.

Arora KS, Chang DS, Supakontanasan W, Lakkur M, Friedman DS. Assessment of a rapid method to determine approximate visual acuity in large surveys and other such settings. Am J Ophthalmol. 2014;157(6):1315–1321.

Bastawrous A, Rono HK, Livingstone IA, et al. Development and Validation of a Smartphone-Based Visual Acuity Test (Peek Acuity) for Clinical Practice and Community-Based Fieldwork. JAMA Ophthalmol. 2015;133(8):930–937.

Sullivan GM. A primer on the validity of assessment instruments. J Grad Med Educ. 2011;3(2):119–120.

Gagnon L. Time to rein in the “Wild West” of medical apps. CMAJ. 2014;186(8):E247.

Cole BL. Measuring visual acuity is not as simple as it seems. Clin Exp Optom. 2014;97(1):1–2.

Aslam TM, Parry NR, Murray IJ, Salleh M, Col CD, Mirza N, et al. Development and testing of an automated computer tablet-based method for self-testing of high and low contrast near visual acuity in ophthalmic patients. Graefes Arch Clin Exp Ophthalmol. 2016;254(5):891–899.

Brady CJ, Eghrari AO, Labrique AB. Smartphone-Based Visual Acuity Measurement for Screening and Clinical Assessment. JAMA. 2015;314(24):2682–2683.

Chacon A, Rabin J, Yu D, Johnston S, Bradshaw T. Quantification of color vision using a tablet display. Aerosp Med Hum Perform. 2015;86(1):56–58.

Kollbaum PS, Jansen ME, Kollbaum EJ, Bullimore MA. Validation of an iPad test of letter contrast sensitivity. Optom Vis Sci. 2014;91(3):291–296.

Perera C, Chakrabarti R, Islam FM, Crowston J. The Eye Phone Study: reliability and accuracy of assessing Snellen visual acuity using smartphone technology. Eye (Lond). 2015;29(7):888–894. doi: 10.1038/eye.2015.60. Epub 2015 May 1.

Phung L, Gregori NZ, Ortiz A, Shi W, Schiffman JC. Reproducibility and comparison of visual acuity obtained with Sightbook mobile application to near card and Snellen chart. Retina. 2016;36(5):1009–1020.

Toner KN, Lynn MJ, Candy TR, Hutchinson AK. The Handy Eye Check: a mobile medical application to test visual acuity in children. J AAPOS. 2014;18(3):258–260.

Zhang ZT, Zhang SC, Huang XG, Liang LY. A pilot trial of the iPad tablet computer as a portable device for visual acuity testing. J Telemed Telecare. 2013;19(1):55–59.

© 2019 The Author(s). This work is published and licensed by Dove Medical Press Limited. The full terms of this license are available at https://www.dovepress.com/terms.php and incorporate the Creative Commons Attribution - Non Commercial (unported, v3.0) License.

By accessing the work you hereby accept the Terms. Non-commercial uses of the work are permitted without any further permission from Dove Medical Press Limited, provided the work is properly attributed. For permission for commercial use of this work, please see paragraphs 4.2 and 5 of our Terms.
