Advances in Medical Education and Practice, Volume 7

Portfolio as a tool to evaluate clinical competences of traumatology in medical students

Authors Santonja-Medina F, García-Sanz MP, Martínez F, Bo Rueda D, Garcia-Estan J

Received 29 June 2015

Accepted for publication 5 October 2015

Published 11 February 2016 Volume 2016:7 Pages 57–61

DOI https://doi.org/10.2147/AMEP.S91401

Checked for plagiarism Yes

Review by Single anonymous peer review

Peer reviewer comments 3

Editor who approved publication: Dr Md Anwarul Azim Majumder

Fernando Santonja-Medina,1,2 M Paz García-Sanz,3 Francisco Martínez-Martínez,1,2 David Bó,1,2,4 Joaquín García-Estañ,5

1Faculty of Medicine, Department of Traumatology, 2Faculty of Medicine, University Hospital Virgen de la Arrixaca, 3Faculty of Medicine, Department of Education, 4Faculty of Medicine, University Hospital Morales Meseguer, 5Faculty of Medicine, Department of Physiology, University of Murcia, Murcia, Spain

Abstract: This article investigates whether a reflective portfolio is instrumental in determining the level of acquisition of clinical competences in traumatology, a subject taught in the 5th year of the medical degree. A total of 131 students used the portfolio during their clinical rotation in traumatology. The portfolios were evaluated blind by four professors, who recorded the presence (yes/no) of 23 learning outcomes. The reliability of the portfolio was moderate according to the kappa index (0.48), but the scores given by the different evaluators were very similar. In terms of mean percentage, 59.8% of the students obtained all the established competences, and only 13 of the 23 learning outcomes (56.5%) were fulfilled by >50% of the students. Our study suggests that the portfolio may be an important tool for quantitatively analyzing the acquisition of traumatology competences by medical students, thus allowing the implementation of methods to improve the teaching of the subject.

Keywords: competence-based education, evaluation, assessment, teaching methodologies

Introduction

Since 2008, all medical schools in Spain have implemented new medical curricula that follow the requirements of the so-called Bologna process. These plans encourage a shift from a professor-centered classical scheme related mostly to the acquisition of theoretical knowledge to a competence-based process, in which competences form the basis of the teaching–learning equation.1,2

Competences are not easy to evaluate3,4 with traditional tools because these are often not designed to determine whether a competence has been acquired by students5 or to provide formative evaluation.6 One of the new tools used for this purpose is the portfolio,7 considered a method that allows continuous and formative assessment and promotes critical reflection8–11 and self-regulation.12,13 Many authors have described the benefits of using portfolios in medical education,14–18 but limitations regarding their use19–21 and their reliability and validity have also been reported.22,23

Although portfolios have been used in different clinical medical subject areas,24–28 to the best of our knowledge, there are no reports on the reliability of the portfolio for assessing the acquisition of competences in surgical areas or subjects such as traumatology. Thus, in the current article, we report the application of a portfolio tool to assess the level of acquisition of clinical skills and competences among medical students at the Medical School of the University of Murcia (Spain) during the clinical rotations in traumatology and orthopedic surgery. An important part of the study is also devoted to analyzing the reliability of the portfolio.29

Methods

A portfolio was designed to assess the level of acquisition of clinical competences, according to the competences and learning outcomes assigned to traumatology in the new study plan of the degree of medicine of the Medical School of the University of Murcia. The list of competences and the learning outcomes are shown in Table 1. This study was approved by the ethics committee of the Universidad de Murcia and written informed consent was obtained from all participants.

Table 1 Frequency and percentage of achievement of clinical competences

Academic structure

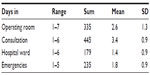

Traumatology is a compulsory subject in the 5th year of the degree. It consists of 55 hours of theoretical classes (28 hours in the classroom, 14 hours of seminars, 10 hours of clinical cases, and 3 hours of cooperative learning); 21 hours of laboratory skills classes in seven sessions; and 2 weeks of hospital rotation (50 hours, 10 days). During these 50 hours, students rotate in the traumatology service of the university hospitals between the hospital ward, medical consultation, operating rooms, and emergency services. Students are assigned one-to-one to professors in the hospital. Table 2 shows the number of days students spent in each of these rotations.

Table 2 Days spent by students during the traumatology rotation

Portfolio structure

The portfolio was given to all the students during the course (n=131) in a booklet format. After filling in their identification data, the students read about the legal and ethical issues related to the need to maintain medical confidentiality and signed their agreement. Each day, before leaving the hospital, the students were expected to write down their reflections on the clinical activities observed during the day. In addition, the portfolio had to be signed by the assigned professor every day. The students were given enough space to include everything needed, but there were three main prompts:

- Annotate what you have seen today.

- Comment on the issues you have observed during the day (patient care, clinical signs, pathologies, diagnoses obtained, treatments performed, communication with patient and family).

- Write up the most important thing you have learned today.

Portfolio evaluation

The portfolios were evaluated by four professors, all of them medical doctors: three were professors of traumatology and one was a colleague from a different area (physiology). A checklist with 23 indicators was built to help the evaluators assign the activities written up by the students to a learning outcome. These learning outcomes were selected from a total of ten competences of the subject. All the portfolios underwent blind evaluation. All the students agreed to take part and participated enthusiastically in the study.

Statistical analysis

Data analysis was performed using the Statistical Package for the Social Sciences, Version 19. Cohen’s kappa coefficient was used as a statistical measure of interevaluator agreement for qualitative items.29 Frequencies, percentages, means, and standard deviations were also obtained to describe the results.
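As an illustration of the agreement measure, the following is a minimal Python sketch of Cohen’s kappa for two evaluators marking the same items. It is not the authors’ SPSS procedure; the function name `cohen_kappa` and the yes/no marks are hypothetical and serve only to show the calculation.

```python
from collections import Counter


def cohen_kappa(ratings_a, ratings_b):
    """Cohen's kappa for two raters scoring the same items with categorical labels."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("both raters must score the same non-empty set of items")
    n = len(ratings_a)
    # Observed agreement: share of items given the same label by both raters.
    p_observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n
    # Chance agreement: product of the raters' marginal label frequencies, summed over labels.
    freq_a = Counter(ratings_a)
    freq_b = Counter(ratings_b)
    p_chance = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_chance == 1.0:
        # Both raters used one identical label throughout; kappa is formally
        # undefined here, and returning 1.0 is a convention chosen for this sketch.
        return 1.0
    return (p_observed - p_chance) / (1 - p_chance)


# Hypothetical yes/no marks from two evaluators for one learning outcome in ten portfolios.
evaluator_1 = ["yes", "yes", "no", "yes", "no", "no", "yes", "yes", "no", "yes"]
evaluator_2 = ["yes", "no", "no", "yes", "no", "yes", "yes", "yes", "no", "yes"]
kappa = cohen_kappa(evaluator_1, evaluator_2)
```

In this invented example the evaluators agree on 8 of 10 portfolios, but because agreement of 0.52 would be expected by chance alone, the resulting kappa is lower than the raw agreement.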

Results

A total of 138 comparisons (six pairs of evaluators for each of the 23 learning outcomes) were made to obtain the kappa index among the four evaluators. The distribution of frequencies and percentages of agreement, according to the classification of Landis and Koch,29 is shown in Table 3. As can be seen, of the 138 comparisons, 83 had kappa indexes >0.4 and 44 of them had values >0.6, which indicates that on 31.9% of occasions the agreement between evaluators was substantial or better.
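The arithmetic behind these figures can be sketched in a few lines of Python. The snippet counts the evaluator pairs, reproduces the 138 comparisons, and maps a kappa value to the Landis and Koch categories; the constant and function names are illustrative assumptions, and only the counts (4 evaluators, 23 outcomes, 44 comparisons >0.6) come from the text.

```python
from itertools import combinations

N_EVALUATORS = 4
N_LEARNING_OUTCOMES = 23

# Every unordered pair of evaluators is compared once per learning outcome.
evaluator_pairs = list(combinations(range(1, N_EVALUATORS + 1), 2))
n_comparisons = len(evaluator_pairs) * N_LEARNING_OUTCOMES  # 6 pairs x 23 outcomes


def landis_koch_label(kappa):
    """Map a kappa value to the agreement category of Landis and Koch (1977)."""
    if kappa < 0:
        return "poor"
    if kappa <= 0.20:
        return "slight"
    if kappa <= 0.40:
        return "fair"
    if kappa <= 0.60:
        return "moderate"
    if kappa <= 0.80:
        return "substantial"
    return "almost perfect"


# Share of comparisons with kappa > 0.6, using the count reported in the text.
share_above_0_6 = round(100 * 44 / n_comparisons, 1)
```

Under this classification, values >0.6 fall in the "substantial" or "almost perfect" bands, and values between 0.41 and 0.60 are "moderate".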

Table 3 Distribution of frequencies and percentages of kappa values in every category

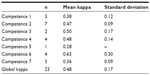

Table 4 shows the mean and standard deviation of the kappa index of the four evaluators. The global kappa is 0.48, indicating that the portfolio has moderate reliability according to Landis and Koch.29 As an additional way of assessing the reliability of the portfolio, 6 months after the initial evaluation, two of the evaluators reviewed the portfolios again (second revision or reference). Table 5 shows the level of agreement obtained by each evaluator when both revisions were analyzed. Overall, the differences among evaluators are small, as the kappa indexes show that evaluators 1 and 4 have substantial agreement in both revisions, whereas evaluators 2 and 3 have a moderate level of agreement.

Table 4 Mean of kappa indexes of the four evaluators

The level of achievement of the traumatology competences is shown in Table 1. The data show the mean percentage of the four evaluations as well as the number of times the procedures were performed. A total of 59.8% of the students acquired the competences as expected during their hospital stay. In all, 13 of the 23 learning outcomes (56.5%) were acquired by >50% of the students. Competence 5 (87.4%) and competence 1 (81.4%) show the highest values, whereas the rest show an acceptable level, except competence 7, with a value of only 26%.
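A rough sketch of how such achievement figures could be derived is given below, assuming each evaluator’s checklist is stored as one yes/no mark per student. The function names and the example marks are hypothetical; only the counts 13 and 23 are taken from the text.

```python
def mean_achievement(marks_by_evaluator):
    """Mean percentage of students credited with an outcome, averaged over evaluators.

    marks_by_evaluator: one list of booleans per evaluator, one entry per student.
    """
    percentages = [100 * sum(marks) / len(marks) for marks in marks_by_evaluator]
    return sum(percentages) / len(percentages)


def share_of_outcomes_above(achievement_by_outcome, threshold=50.0):
    """Percentage of learning outcomes whose mean achievement exceeds the threshold."""
    above = sum(1 for value in achievement_by_outcome if value > threshold)
    return 100 * above / len(achievement_by_outcome)


# Hypothetical marks for one outcome: four evaluators, five students each.
example_marks = [
    [True, True, False, True, False],   # evaluator 1 credits 3 of 5 students
    [True, True, False, True, True],    # evaluator 2 credits 4 of 5 students
    [True, False, False, True, False],  # evaluator 3 credits 2 of 5 students
    [True, True, False, True, False],   # evaluator 4 credits 3 of 5 students
]
example_mean = mean_achievement(example_marks)

# Reported result: 13 of the 23 learning outcomes exceeded 50%.
reported_share = round(100 * 13 / 23, 1)
```

Averaging the per-evaluator percentages, rather than pooling all marks, gives each evaluator equal weight; with the same number of students per evaluator, as here, the two approaches coincide.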

Discussion

The current article shows the results obtained by applying a portfolio to medical students as a tool to obtain information regarding the level of acquisition of the clinical competences of the subject traumatology. One of the important aspects of any portfolio is its reliability, that is, the consistency and accuracy of the assessment tool in measuring students’ performance. Our results agree reasonably well with those found by other authors,30,31 although higher values (0.8) have been described in some studies of nursing students.32 To check that the results were not obtained by chance, a second review of the portfolios took place 6 months after the initial evaluation in order to see whether the reliability index could be improved. As observed in Table 5, the maximum level of agreement among evaluators is found in competence 6 (observe and assist in surgical treatments). A moderate agreement exists in competences 2, 3, and 4, whereas competences 1, 5, and 7 show a low level of agreement among evaluators.

The results in Table 1 provide important information regarding the level of acquisition of the competences analyzed. Two of them, competences 1 and 5, related to diagnosis and treatment, obtained the highest values, with a high frequency of occurrence. The next competence in terms of level of acquisition is number 3 (70%), probably because of the high number of radiographic images viewed, in contrast to magnetic resonance images, for which doctors are likely to rely more on the radiologist’s report without actually interpreting the images themselves. Competence 6 also has a moderate level of acquisition (55.8%), in spite of the fact that almost all students (99.2%) reported attending operating rooms (2.6 ± 1.3 days). This is likely because surgeons do not invite all the students to scrub in and be prepared to help, an activity we recommend to all our professors. A similar reason may lie behind the moderate level achieved in competences 2 (47.1%) and 4 (51.5%), which shows that not all students are able to examine patients or perform maneuvers with them, possibly because of time limitations in the ward or consultation. We believe that this should be corrected and that doctors should allow more time for students to perform, under appropriate supervision, examination or treatment of patients. Finally, competence 7 has the lowest level. This is likely related to the fact that students are not allowed to sign discharge reports or other forms, although it is important that they learn from their professors how to do so. In any case, this is the first time that we have obtained this quantitative information regarding the clinical stays of medical students, and it will help us to analyze our teaching results and to develop new strategies to enhance the level of clinical competence the students should reach.

A problem identified in the application of this portfolio is that many of the learning outcomes were checked without taking into account the number of times they were carried out. The rest of the learning outcomes were quantitative, so we could obtain the number of times the student performed them. For instance, students attended the operating rooms 5.5 ± 3.3 times (range, 1–26) and viewed a mean of 2.3 magnetic resonance images (range, 1–14). We believe it is important that portfolios are designed to collect quantitative information as well, since quantitative and qualitative information together capture the real essence of the learning process. A drawback we have seen in applying the portfolio to clinical students is that a random factor affects the acquisition of competences: the students rotate between different hospitals and at different times of the year, so what they see depends on the medical problems present on the days they are assigned to the hospital, and they may not encounter all the problems selected in the portfolio. Clearly, the greater the number of days of hospital stay, the better, although this is not always possible. A better selection of the learning outcomes would also be helpful. Moreover, the portfolio is designed to be an evaluation tool that should be used in conjunction with others (written or oral exams, laboratory evaluations) because no single tool is able to assess all the competences.33,34

Conclusion

In conclusion, the portfolio is an important tool that has allowed us to obtain qualitative and quantitative data regarding the degree of acquisition of traumatology competences by medical students. Although some aspects need to be improved, such as the inclusion of more quantitative elements, a more comprehensive selection of learning outcomes, and combination with other tools, we believe that the use of this kind of tool by clinical teachers will be useful to ascertain the degree of practical competence reached by their students.

Acknowledgments

The authors would like to thank all the medical students who participated enthusiastically in the study and also all the tutors who reviewed the portfolios during the rotations.

Author contributions

FSM and JGE designed the portfolios, and the analysis of the portfolios was performed by FSM, FMM, DB, and JGE. The statistical analysis and first draft were made by MPGS, and the final writing and editing were mainly done by JGE, although all the authors have checked and approved the final version. All authors contributed toward data analysis, drafting, and revising the paper and agree to be accountable for all aspects of the work.

Disclosure

The authors report no conflicts of interest in this work.

References

1. Bok HG, Teunissen PW, Favier RP, et al. Programmatic assessment of competency-based workplace learning: when theory meets practice. BMC Med Educ. 2013;13:123.

2. Steinhaeuser J, Chenot JF, Roos M, Ledig T, Joos S. Competence-based curriculum development for general practice in Germany: a stepwise peer-based approach instead of reinventing the wheel. BMC Res Notes. 2013;6:314.

3. Chaytor AT, Spence J, Armstrong A, McLachlan JC. Do students learn to be more conscientious at medical school? BMC Med Educ. 2012;12:54.

4. Perlman RL, Christner J, Ross PT, Lypson ML. A successful faculty development program for implementing a sociocultural ePortfolio assessment tool. Acad Med. 2014;89:257–262.

5. Duque G, Finkelstein A, Roberts A, Tabatabai D, Gold SL, Winer LR. Learning while evaluating: the use of an electronic evaluation portfolio in a geriatric medicine clerkship. BMC Med Educ. 2006;6:4.

6. Hanan MFAK, Mohamed SAM, van der Vleuten C. Students’ and teachers’ perceptions of clinical assessment program: a qualitative study in a PBL curriculum. BMC Res Notes. 2009;2:263.

7. Altman DC, Tavabie A, Kidd MR. The use of reflective and reasoned portfolios by doctors. J Eval Clin Pract. 2012;18:182–185.

8. McNeill H, Brown JM, Shaw NJ. First year specialist trainees’ engagement with reflective practice in the e-portfolio. Adv Health Sci Educ. 2010;15:547–558.

9. O’Sullivan AJ, Howe AC, Miles S, et al. Does a summative portfolio foster the development of capabilities such as reflective practice and understanding ethics? An evaluation from two medical schools. Med Teach. 2012;34:e21–e28.

10. Hall P, Byszewski A, Sutherland S, Stodel EJ. Developing a sustainable electronic portfolio (ePortfolio) program that fosters reflective practice and incorporates CanMEDS competencies into the undergraduate medical curriculum. Acad Med. 2012;87:744–751.

11. Dannefer EF. Beyond assessment of learning toward assessment for learning: educating tomorrow’s physicians. Med Teach. 2013;35:560–563.

12. Dannefer EF, Prayson RA. Supporting students in self-regulation: use of formative feedback and portfolios in a problem-based learning setting. Med Teach. 2013;35:655–660.

13. Van Schaik S, Plant J, O’Sullivan P. Promoting self-directed learning through portfolios in undergraduate medical education: the mentors’ perspective. Med Teach. 2013;35:139–144.

14. Mathers NJ, Challis MC, Howe AC, Field NJ. Portfolios in continuing medical education-effective and efficient? Med Educ. 1999;33:521–530.

15. Driessen E, Van Tartwijk J, Overeem K, Vermunt JD, Van der Vleuten CP. Conditions for successful reflective use of portfolios in undergraduate medical education. Med Educ. 2005;39:1230–1235.

16. Buckley S, Coleman J, Khan K. Best evidence on the educational effects of undergraduate portfolios. Clin Teach. 2010;7:187–191.

17. Dannefer EF, Bierer SB, Gladding SP. Evidence within a portfolio-based assessment program: what do medical students select to document their performance? Med Teach. 2012;34:215–220.

18. Wong A, Trollope-Kumar K. Reflections: an inquiry into medical students’ professional identity formation. Med Educ. 2014;48:489–501.

19. Davis MH, Ponnamperuma GG. Examiner perceptions of a portfolio assessment process. Med Teach. 2010;32:e211–e215.

20. Johnson S, Cai A, Riley P, Millar LM, McConkey H, Bannister C. A survey of core medical trainees’ opinions on the e-portfolio record of educational activities: beneficial and cost-effective? J R Coll Physicians Edinb. 2012;42:15–20.

21. Vance G, Williamson A, Frearson R, et al. Evaluation of an established learning portfolio. Clin Teach. 2013;10:21–26.

22. Driessen E, Van Tartwijk J, Van der Vleuten CPM, Wass V. Portfolios in medical education: why do they meet with mixed success? A systematic review. Med Educ. 2007;41:1224–1233.

23. Michels NR, Denekens JB, Driessen EW, Van Gaal LF, Bossaert LL, De Winter BY. A Delphi study to construct a CanMEDS competence based inventory applicable for workplace assessment. BMC Med Educ. 2012;12:86.

24. Sánchez Gómez S, Ostos EMC, Solano JMM, Salado TFH. An electronic portfolio for quantitative assessment of surgical skills in undergraduate medical education. BMC Med Educ. 2013;13:65.

25. Jones RW. Anaesthesiology training within Australia and New Zealand. J Med Sci. 2008;28:1–7.

26. Haldane T. “Portfolios” as a method of assessment in medical education. Gastroenterol Hepatol Bed Bench. 2014;7:89–93.

27. Ingrassia A. Portfolio-based learning in medical education. Adv Psych Treat. 2013;19:329–336.

28. Mubuuke AG, Kiguli-Malwadde E, Kiguli S, Businge F. A student portfolio: the golden key to reflective, experiential, and evidence-based learning. J Med Imag Rad Sci. 2010;41:72–78.

29. Landis JR, Koch GG. The measurement of observer agreement for categorical data. Biometrics. 1977;33:159–174.

30. Rees CE, Sheard CE. The reliability of assessment criteria for undergraduate medical students’ communication skills portfolios: the Nottingham experience. Med Educ. 2004;38:138–144.

31. Moonen-van Loon JMW, Overeem K, Donkers HHLM, van der Vleuten CPM, Driessen EW. Composite reliability of a workplace-based assessment toolbox for postgraduate medical education. Adv Health Sci Educ. 2013;18:1087–1102.

32. Karlowicz KA. Development and testing of a portfolio evaluation scoring tool. Nurse Educ. 2010;49:78–86.

33. Gerard FM. Évaluer des Compétences. Guide Pratique. Bruxelles. De Boeck; 2008.

34. Ilic D. Assessing competency in evidence based practice: strengths and limitations of current tools in practice. BMC Med Educ. 2009;9:53.

© 2016 The Author(s). This work is published and licensed by Dove Medical Press Limited. The full terms of this license are available at https://www.dovepress.com/terms.php and incorporate the Creative Commons Attribution - Non Commercial (unported, v3.0) License.

By accessing the work you hereby accept the Terms. Non-commercial uses of the work are permitted without any further permission from Dove Medical Press Limited, provided the work is properly attributed. For permission for commercial use of this work, please see paragraphs 4.2 and 5 of our Terms.
