Journal of Multidisciplinary Healthcare, Volume 12

Evaluation of the research capacity and culture of allied health professionals in a large regional public health service

Authors Matus J, Wenke R, Hughes I, Mickan S

Received 29 June 2018

Accepted for publication 2 November 2018

Published 14 January 2019 Volume 2019:12 Pages 83–96

DOI https://doi.org/10.2147/JMDH.S178696

Checked for plagiarism Yes

Review by Single anonymous peer review

Editor who approved publication: Dr Scott Fraser

Janine Matus,1 Rachel Wenke,1 Ian Hughes,1 Sharon Mickan1,2

1Allied Health, Gold Coast Health, Gold Coast, QLD, Australia; 2School of Allied Health Sciences, Griffith University, Gold Coast, QLD, Australia

Purpose: The first aim of this study was to evaluate the current research capacity and culture among allied health professionals (AHPs) working in a large regional health service. The second aim of this study was to undertake principal component analyses (PCAs) to determine key components influencing our research capacity and culture.

Patients and methods: As part of a cross-sectional observational study, the Research Capacity and Culture (RCC) tool was administered to AHPs working in Gold Coast Health to measure self-reported research capacity and culture across Organization, Team, and Individual domains, including barriers to and motivators for performing research. An exploratory PCA was performed to identify key components influencing research capacity and culture in each of the three domains, and the results were compared with the findings of a previous study performed in a large metropolitan health district.

Results: This study found moderate levels of research capacity and culture across all domains, with higher scores (median, IQR) reported for the Organization domain (7, 5–8) compared to the Team (6, 3–8) and Individual domains (5, 2–7). Two components were identified in each domain. Components in the Organization domain included “research culture” and “research infrastructure”; components in the Team domain included “valuing and sharing research” and “supporting research”; and components in the Individual domain included “skills for conducting research” and “skills for searching and critiquing the literature”. These components were found to be highly correlated with each other, with correlations between components within each domain ranging from 0.459 to 0.702.

Conclusion: The results of this study reinforce the need for an integrated “whole of system” approach to research capacity building. Ongoing investment in tailored support and infrastructure is required to maintain current areas of strength and to strengthen identified areas of weakness at the level of organizations, teams, and individual AHPs, and consideration should also be given to how support across these three levels is integrated.

Keywords: research culture, infrastructure, organization, team, individual

Introduction

Building research capacity and embedding research into core business have been recognized internationally as a priority for health care organizations due to the benefits these bring for patients, clinicians, organizations, and society more broadly.1,2 For example, clinician engagement in research supports the production and translation of research evidence to positively influence health care policy and practice.3–5 A recent systematic review found that among health care organizations in the USA, UK, and Germany, higher levels of research activity were positively associated with increased organizational efficiency, improved staff satisfaction, reduced staff turnover, improved patient satisfaction, and decreased patient mortality rates.6

Research capacity building has been defined as “a process of individual and institutional development which leads to higher levels of skills and greater ability to perform useful research”.7 In a health care setting, useful research generates knowledge that translates into sustainable benefits for patients, clinicians, and the community.8 Multiple factors have been found to influence research capacity within an organization, including its culture.9,10

Organizations seeking to improve their research capacity and culture require valid, reliable, and resource-efficient methods of measuring change over time, so that the outcomes of research capacity building initiatives can be rigorously evaluated. Cooke5 suggested that a framework for evaluating research capacity building should assess individual, team, and organizational levels and include both output and process measures. Output measures capture the ultimate goals of research activity, such as the creation of useful research evidence that informs practice and leads to improved health outcomes; they may include changes in the number of peer-reviewed journal publications and conference presentations, higher degree research qualifications, and the amount of competitive grant funding.11 In contrast, process measures may capture smaller steps toward achieving these outputs, such as organizational culture shifts; changes in clinicians’ research experience, knowledge, skills, attitudes, and confidence; partnerships; and the number of grant applications and research protocols developed.5,12 It has been suggested that process measures may be more sensitive and could complement output measures,13 especially among research-emergent professions, which may find it difficult to attract competitive grants and publish in peer-reviewed journals.12

Allied health professionals (AHPs), including physiotherapists, occupational therapists, speech pathologists, psychologists, dieticians, social workers, and podiatrists, represent a substantial proportion of the clinical workforce who are neither doctors nor nurses.14 Although AHPs have reported that they are motivated and interested in conducting research,15–18 their research culture and engagement remain limited due to a number of barriers.10 Multiple studies have evaluated these barriers as well as the overall research capacity, culture, and engagement of AHPs using a range of tools, including the Research Spider;12,15,19–22 the Research Knowledge, Attitudes and Practices of Research Survey; the Edmonton Research Orientation Survey; and the Barriers to Utilization Scale.10 However, these tools evaluate research capacity only at an individual level and not at team or organizational levels, and therefore fail to provide the recommended “whole of organization” approach.5

In contrast to the other available tools, the Research Capacity and Culture (RCC) tool measures indicators of research capacity and culture at the level of individuals as well as teams and organizations. It was developed for the purposes of conducting needs assessments and of planning and evaluating research capacity building interventions.23 The RCC tool has been validated in an Australian health care context with data from 134 AHPs, demonstrating strong internal consistency (Cronbach’s alpha of 0.95, 0.96, and 0.96 for the Organization, Team, and Individual domains, respectively), factor loadings of 0.58–0.89, 0.65–0.89, and 0.59–0.93 for the same three domains, and high test–retest reliability (intraclass correlation coefficient of 0.77–0.83).23 Several studies have used the RCC tool to measure the research capacity and culture of AHPs.24–33 Most of these studies used the RCC tool to measure baseline levels of skill, success, barriers and motivators, and research activity and to identify priorities and inform recommendations for future research capacity building initiatives, while one study used it to evaluate changes before and after a team-based research capacity building intervention.28

Alison et al24 recently used the RCC tool with 276 AHPs in a large Australian metropolitan health service and conducted an exploratory factor analysis (principal component analysis [PCA]) to identify key factors (components) influencing their research engagement. They identified two components within the Organization domain (“infrastructure for research” and “research culture”), two components within the Team domain (“research orientation” and “research support”), and one component within the Individual domain (“research skill of the individual”). Because the study used a sample of AHPs from a single health service, the generalizability of its results to other health services is limited. Further use of the RCC tool and PCA across different organizational contexts could help confirm which components are consistent across contexts.

The first aim of our study was to evaluate the current research capacity and culture among AHPs working in a large regional health service using the RCC tool. This formed part of a needs assessment to inform the development of tailored allied health research capacity building strategies to address local strengths, limitations, and priorities within this health service.

The second aim of our study was to undertake a PCA to identify key components influencing allied health research capacity and culture at the level of individuals, teams, and organizations.

Patients and methods

This study was designed as a cross-sectional observational study. Ethical approval was obtained from the human research ethics committee of the Gold Coast Hospital and Health Service – HREC/10/QGC/177.

Sample

All 875 AHPs and 97 allied health assistants employed by Gold Coast Health (GCH) in April 2017 were invited to participate in a survey consisting of the RCC tool. Students and non-AHPs including medical and nursing staff were excluded. GCH is a publicly funded large regional health service located in Southeast Queensland, Australia, which includes two hospital facilities (750 and 364 beds) as well as outpatient and community-based services.

Survey tool

A survey consisting of the RCC tool was administered via a secure online platform (SurveyMonkey®) and on paper. The tool includes 52 questions that examine participants’ self-reported success or skill in a range of areas related to research capacity or culture across three domains: Organization (18 questions), Team (19 questions), and Individual (15 questions). Each question uses a 10-point numeric scale on which 1 is the lowest possible level of skill or success and 10 is the highest. An “unsure” option is also available. Consistent with previous studies, scores are further categorized as “low” (less than 4), “moderate” (between 4 and 6.9), or “high” (7 and above).24,27 The RCC tool also includes questions about perceived barriers to and motivators for undertaking research. From a list of 18 barriers and 18 motivators, participants can indicate which apply to themselves as individuals and within their team, and can add any other items not included in the list. Finally, the tool includes questions about participants’ demographics, work role, professional qualifications, and formal research engagement, and about which research activities are required and supported in their current work role.23 For the purpose of our study, the “Team” construct in the RCC tool was defined as the participants’ professional group, for example, physiotherapy.
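
The scoring rules above can be illustrated with a short Python sketch. This is not the authors' analysis code (the analyses used Stata and SPSS); it assumes each item's responses are stored as integers from 1 to 10 with the string "unsure" for the unsure option, excludes "unsure" from the median and IQR, and applies the low/moderate/high cut-points to the item median. The quartile method (`statistics.quantiles` with `method="inclusive"`) is also an assumption: statistical packages interpolate quartiles differently, so IQRs computed this way may not match published tables exactly.

```python
from statistics import median, quantiles

UNSURE = "unsure"  # assumed encoding of the RCC tool's "unsure" option


def categorize(score: float) -> str:
    """Map a score on the 1-10 scale to the low/moderate/high bands."""
    if score < 4:
        return "low"
    if score < 7:  # "moderate" covers 4 to 6.9
        return "moderate"
    return "high"


def summarize_item(responses: list) -> dict:
    """Median, IQR, category, and share of unsure responses for one item."""
    numeric = [r for r in responses if r != UNSURE]
    q1, _, q3 = quantiles(numeric, n=4, method="inclusive")
    med = median(numeric)
    return {
        "median": med,
        "iqr": (q1, q3),
        "category": categorize(med),
        "unsure_pct": 100 * (len(responses) - len(numeric)) / len(responses),
    }


# Hypothetical responses to a single item (not study data).
item = [8, 7, 9, 6, "unsure", 8, 5, 9, 7, "unsure"]
print(summarize_item(item))
```

Reporting the share of "unsure" responses alongside each median makes it visible when a summary rests on a reduced subset of respondents.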

Procedures

The survey was made available for 6 weeks from early April 2017. This timeframe was a pragmatic choice, as it fell within two key months after the summer holidays and before increased service activity over winter. The survey took ~15 minutes to complete. The survey was widely promoted across all allied health professions using comprehensive and systematic communication strategies, including phone calls and emails to all heads of professions, email broadcasts, newsletters, and presentations at allied health leadership meetings, to enlist support from senior managers and heads of professions. Upon accessing the survey, potential participants were invited to read an electronic participant information sheet and to provide voluntary informed consent to participate.

Statistical analyses

Statistical analyses were conducted using Stata 14 (StataCorp LP, College Station, TX, USA) and IBM SPSS Statistics 24 (IBM Corporation, Armonk, NY, USA). Likert-scale items were summarized by the median and IQR. A Skillings–Mack test, which is a generalization of the Friedman test and takes into consideration that the same participants completed questions relating to the Organization, Team, and Individual domains,34 was performed to determine whether the median responses to questions differed significantly between domains. Where a significant Skillings–Mack test was obtained, post hoc Wilcoxon signed-rank tests were performed to identify specifically which domains differed from each other. All procedures and analyses were done to replicate those performed by Alison et al,24 so that our results are directly comparable to theirs. Similar to Alison et al, an exploratory PCA was performed within each of the Organization, Team, and Individual domains to identify potential underlying themes represented by the groups of questions. Note that while Alison et al used the term factor analysis, they performed a PCA; in this study, we use the term component rather than factor. The PCAs were performed on Spearman rank correlation matrices of responses to questions asked in each domain. An oblique rotation (direct oblimin) was used because, from the results of Alison et al,24 the components were expected to be correlated. Components were retained based on Kaiser’s criterion of the associated eigenvalues being larger than 1.0 and on inspection of scree plots. A component correlation matrix is presented for each PCA to demonstrate correlations between components. In addition, as required following oblique rotation PCA, the regression coefficients for each component for each item (ie, question) and the correlations between each component and each item are reported. In PCA, these are commonly referred to as the pattern matrix and structure matrix, respectively.
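
The component-extraction step described above (PCA on a Spearman rank correlation matrix, with retention by Kaiser's criterion) can be sketched in plain Python. This is an illustrative sketch only, not the Stata/SPSS procedure used in the study: it assumes complete cases (one list of scores per item, "unsure" responses already removed, every item with some variation), computes eigenvalues with the cyclic Jacobi method, and stops before the direct oblimin rotation, which is not shown. The function names are hypothetical.

```python
import math


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def pearson(x, y):
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def spearman_matrix(items):
    """Spearman correlation matrix: Pearson correlation of the average ranks."""
    ranked = [average_ranks(col) for col in items]
    p = len(items)
    return [[1.0 if i == j else pearson(ranked[i], ranked[j]) for j in range(p)]
            for i in range(p)]


def jacobi_eigenvalues(matrix, tol=1e-12, max_sweeps=100):
    """Eigenvalues of a symmetric matrix via cyclic Jacobi rotations, largest first."""
    a = [row[:] for row in matrix]
    n = len(a)
    for _ in range(max_sweeps):
        off = sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j)
        if off < tol:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if a[p][q] == 0.0:
                    continue
                theta = (a[q][q] - a[p][p]) / (2 * a[p][q])
                t = (1.0 if theta >= 0 else -1.0) / (abs(theta) + math.sqrt(theta * theta + 1))
                c = 1 / math.sqrt(t * t + 1)
                s = t * c
                for k in range(n):  # update columns p and q: A <- A J
                    akp, akq = a[k][p], a[k][q]
                    a[k][p], a[k][q] = c * akp - s * akq, s * akp + c * akq
                for k in range(n):  # update rows p and q: A <- J^T A
                    apk, aqk = a[p][k], a[q][k]
                    a[p][k], a[q][k] = c * apk - s * aqk, s * apk + c * aqk
    return sorted((a[i][i] for i in range(n)), reverse=True)


def kaiser_summary(items):
    """Eigenvalues, % of total variance, and number retained (eigenvalue > 1)."""
    eigenvalues = jacobi_eigenvalues(spearman_matrix(items))
    p = len(items)  # the trace of a correlation matrix equals the number of items
    pct = [100 * ev / p for ev in eigenvalues]
    retained = sum(1 for ev in eigenvalues if ev > 1.0)
    return eigenvalues, pct, retained


# Hypothetical scores: four items (rows) from eight respondents (not study data).
items = [
    [2, 3, 5, 6, 7, 8, 8, 9],
    [1, 3, 4, 6, 6, 8, 9, 9],
    [5, 2, 7, 3, 8, 4, 9, 6],
    [6, 1, 7, 4, 9, 3, 8, 5],
]
eigenvalues, pct, retained = kaiser_summary(items)
print([round(ev, 3) for ev in eigenvalues], [round(v, 1) for v in pct], retained)
```

A scree plot would simply chart the sorted eigenvalues against component number; in practice a library routine (for example, a symmetric eigensolver) would replace the hand-written Jacobi loop.
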
Cronbach’s alpha scores, measuring internal consistency of items within a component, were calculated for clusters of items representing each retained component. For each retained component, the initial eigenvalue and the percentage of total variance of the items that the component explained (as derived from these) are also presented.
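
Cronbach's alpha for a cluster of k items is k/(k − 1) × (1 − sum of item variances / variance of the summed score). The following minimal Python sketch assumes complete cases and that the assignment of items to each retained component is already known; the function name is hypothetical, and the 0.9 threshold in the usage line reflects the "excellent" convention applied to the components in this study.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for a cluster of items.

    items: one list of scores per item, all from the same respondents in the
    same order (complete cases only).
    """
    k = len(items)
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    totals = [sum(scores) for scores in zip(*items)]  # each respondent's summed score
    sum_item_var = sum(variance(col) for col in items)
    return k / (k - 1) * (1 - sum_item_var / variance(totals))


# Hypothetical scores for three items assigned to one component (not study data).
component_items = [
    [7, 8, 5, 9, 6, 8],
    [6, 8, 5, 9, 7, 8],
    [7, 9, 4, 8, 6, 7],
]
alpha = cronbach_alpha(component_items)
print(f"alpha = {alpha:.3f}", "excellent" if alpha > 0.9 else "below 0.9")
```

Sample and population variances give the same alpha here because the denominators cancel in the ratio.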

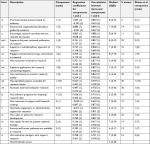

Results

A total of 369 AHPs accessed the survey during the data collection period. Of these, four did not consent to participate in the survey, 63 provided consent but did not answer any of the questions, 44 answered the questions incompletely, and 258 completed the entire survey. In total, data from 302 AHPs were available for analysis, representing 30% of the estimated total allied health workforce within our health service. As shown in Table 1, most participants were working in hospital-based services. The sample included a range of pay grades and numbers of years of experience. Most participants had completed at least an undergraduate degree, with 35% also having completed a postgraduate qualification by coursework and 19.4% having completed a research higher degree. An additional 7.1% of participants were enrolled in a research higher degree or other professional development relating to research.

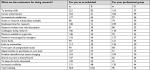

Fewer than one-third of participants (30%) considered research activities to be part of their role description, while another one-third (32%) were unsure. The most commonly reported provisions for undertaking research activities included access to the library, software programs, research mentoring, and research training. Of the 62% of participants who reported having undertaken some research activities over the preceding 20 months, the most common activities included collecting data, completing a literature review, and writing an ethics application. Further details are listed in Table S1.

Overall, the most commonly reported barriers at both the individual and team levels included a lack of time, funding, and suitable backfill; other competing work priorities; a lack of research skills; and concerns about the impact that engaging in research would have on work–life balance. The most commonly reported motivators included skill development, increased job satisfaction, career advancement, enhanced professional credibility, and addressing identified problems in practice. Other motivators included having support from mentors, dedicated time for research, encouragement from managers, and links to universities. Further details are listed in Tables S2 and S3.

On average, participants reported moderate-to-high levels of skill and success across all three domains, with the highest scores in the Organization domain and the lowest scores in the Individual domain. The Skillings–Mack test detected significant differences between the overall median scores of the domains (P=0.001). Post hoc Wilcoxon signed-rank tests demonstrated that the Organization domain had a significantly higher overall median (IQR) score of 7 (5–8) than the Team domain score of 6 (3–8) (z=12.2, P=0.001), and that the overall median score for the Team domain was significantly higher than that for the Individual domain, 5 (2–7) (z=12.8, P=0.001).
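
The post hoc comparisons reported above use the Wilcoxon signed-rank test. The following Python sketch shows the normal-approximation form that yields a z statistic. It is illustrative only: it assumes one paired summary score per participant for each of the two domains being compared (how the pairs were formed in the original analysis is not specified here), drops zero differences, and applies the usual tie correction to the variance. Statistical packages differ in how they treat zero differences and ties, so results may not match Stata or SPSS exactly. The omnibus Skillings–Mack test is not shown, and the function name is hypothetical.

```python
import math


def wilcoxon_signed_rank_z(x, y):
    """Paired Wilcoxon signed-rank test using the normal approximation.

    Returns (z, two-sided P). Zero differences are dropped, tied |differences|
    get average ranks, and the variance includes the usual tie correction.
    A positive z means x tends to be larger than y.
    """
    diffs = [a - b for a, b in zip(x, y) if a != b]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    mags = [abs(d) for d in diffs]
    order = sorted(range(n), key=lambda i: mags[i])
    ranks = [0.0] * n
    tie_term = 0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and mags[order[j + 1]] == mags[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # average rank for the tie group
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean = n * (n + 1) / 4
    var = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (w_plus - mean) / math.sqrt(var)
    p_two_sided = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_two_sided


# Hypothetical per-participant domain scores (not study data).
organization = [7, 8, 6, 9, 7, 5, 8, 7, 6, 8]
team = [6, 7, 6, 7, 5, 4, 8, 5, 6, 6]
z, p = wilcoxon_signed_rank_z(organization, team)
print(f"z = {z:.2f}, P = {p:.4f}")
```
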

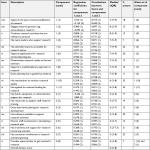

As illustrated in Table 2, participants reported their organization’s strengths to include promoting evidence-based clinical practice (8, 6–9), encouraging research activities that are relevant to practice (8, 6–9), engaging external partners (8, 7–9), supporting the peer-reviewed publication of research (8, 5–9), and resourcing staff research training (7, 5–8). In contrast, areas of relative weakness were reported to include having funds, equipment, and administrative support for research (6, 3–7) and having staff career pathways available in research (6, 3–7). As illustrated in Table 3, similar areas of strength and relative weakness were also reported in the Team domain. Although there was variability between professional groups, a notable area of weakness was a lack of funds, equipment, and administrative support for research (4, 2–6).

As illustrated in Table 4, participants considered themselves as individuals to be the most skilled at finding relevant literature (7, 6–8), critically reviewing the literature (7, 5–8), and using research evidence to inform practice (7, 4–8). In contrast, they considered themselves to be the least skilled at securing research funding (3, 1–4), writing an ethics application (3, 1–6), and writing for publication in peer-reviewed journals (3, 2–6).

The data were considered suitable for exploratory PCA, as the sample size of 277–299 participants, depending on the domain, exceeded the recommended 10:1 ratio of participants to items (up to 19 items in each domain).35 Similarly, for each domain, a majority of inter-item correlations (Spearman’s rank) were >0.3, as is recommended for PCA.35 In fact, only four correlations, all in the Individual domain, were less than 0.3. Results from Kaiser–Meyer–Olkin (KMO) tests (Organization 0.94, Team 0.95, Individual 0.93), which estimate the proportion of variance among items that may be due to a common source, also confirmed the appropriateness (KMO score >0.6) of performing a PCA. Bartlett’s test of sphericity, which tests the hypothesis that the correlation matrix is an identity matrix (ie, the items are unrelated and thus unsuitable for structure detection), was also performed; P values of <0.001 for each domain also indicated that the data were suitable for PCA. Exploratory PCAs were conducted separately for the Organization, Team, and Individual domains of the RCC tool. Both Kaiser’s criterion (retention of components with an eigenvalue of ≥1.0) and the scree test suggested retention of two components in each domain. As the retained components were highly correlated in each case (see the legends of Tables 2–4), a direct oblimin (oblique) rotation was used in the final analysis.
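
The suitability checks and retention rule described above can be written out in their standard textbook forms. These are given as a sketch; the exact implementations in Stata and SPSS may differ in detail. Here $n$ is the number of participants, $p$ the number of items in a domain, $R$ the $p \times p$ (here Spearman) correlation matrix with entries $r_{ij}$, $a_{ij}$ the partial correlation between items $i$ and $j$ controlling for all other items, and $\lambda_k$ the $k$th eigenvalue of $R$. The last line is a conservative check of the participant-to-item ratio, pairing the smallest domain sample (277) with the largest item count (19).

$$
\chi^2_{\text{Bartlett}} = -\left(n - 1 - \frac{2p + 5}{6}\right)\ln\lvert R\rvert, \qquad \text{df} = \frac{p(p-1)}{2}
$$

$$
\text{KMO} = \frac{\sum_{i \ne j} r_{ij}^2}{\sum_{i \ne j} r_{ij}^2 + \sum_{i \ne j} a_{ij}^2}
$$

$$
\text{retain component } k \text{ if } \lambda_k > 1, \qquad \text{variance explained by component } k = \frac{100\,\lambda_k}{p}\,\%, \quad \text{since } \sum_{k=1}^{p} \lambda_k = \operatorname{tr}(R) = p
$$

$$
\frac{n_{\min}}{p_{\max}} = \frac{277}{19} \approx 14.6 > 10
$$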

Comparison with another health service

Our PCA resulted in the identification of two components for each of the three domains of the RCC tool, including the Organization, Team, and Individual domains (Tables 2–4). For the Organization domain, our component 1 shared four of the top 5 ranked items in component 2 of Alison et al,24 namely, promoting clinical practice based on evidence, ensuring that organizational planning is guided by evidence, encouraging research activities that are relevant to practice, and supporting the peer-reviewed publication of research. As such, we have named our component 1 “research culture” in line with Alison et al. Similarly, the top 2 ranked items in our component 2 were the same as those in component 1 of Alison et al, namely, having resources to support staff research training and having funds, equipment, and administrative support for research. Thus, we named our component 2 “research infrastructure” as did Alison et al (Table 2).

For the Team domain, the items in our component 1 were similar to those in component 1 of Alison et al, although the ranking of items was somewhat different. The top ranked items in our component 1 were publishing and disseminating research, engaging external partners, conducting research that is relevant to practice, having experts accessible for advice, and having team leaders who support research. While Alison et al referred to their component 1 as research orientation, we have named ours “valuing and sharing research” to reflect these differences. The ranking of items in our component 2 was almost identical to that in component 2 of Alison et al. Furthermore, our component 2 shared three of the top 4 ranked items in component 2 of Alison et al, namely, having funds, equipment, and administrative support for research; having adequate resources to support staff research training; and doing team-level planning for research development. As such, we have named our component 2 “supporting research” in line with Alison et al (Table 3).

In our study, the Individual domain items resolved into two components, whereas Alison et al detected only one component, which they named research skill of the individual. The top ranked items in our component 1 relate to skills for planning, conducting and reporting research projects including securing research funding, writing an ethics application/research protocol, and writing for publication, while the items in our component 2 relate to skills for using research evidence including finding and critically reviewing the literature. Accordingly, we have named our component 1 “skills for conducting research” and component 2 “skills for searching and critiquing the literature” (Table 4).

Cronbach’s alpha for each component is listed in Tables 2–4. Each score is greater than 0.9 indicating “excellent” internal consistency for the items within each component. The initial eigenvalues and the percentage of the variance explained for each component are also shown.

Discussion

This study first aimed to evaluate the current research capacity and culture of AHPs working within a large regional health service and second to identify key components that influence research capacity and culture among AHPs. In relation to the first aim, participants generally reported moderate-to-high levels of skill and success across the Organization, Team, and Individual domains, with the highest scores in the Organization domain and the lowest scores in the Individual domain. These findings, including the trend of lower scores in the Individual domain compared to the Team and Organization domains, are consistent with findings of previous research.23–25,27,29,30,32,33,36 In relation to the second aim, two key components were found to represent the Organization domain influences on research capacity and culture, which we named “research culture” and “research infrastructure”. Two components were found to represent the Team domain, namely, “valuing and sharing research” and “supporting research”, and two components were found to represent the Individual domain, namely, “skills for conducting research” and “skills for searching and critiquing the literature”. It should be noted, however, that the components within each domain were highly correlated; that is, the concepts described by each component overlapped with those described by the other. For example, “research culture” cannot be viewed as being distinct from “research infrastructure”. This suggests that research capacity and culture, as measured by the RCC tool, are highly interrelated.

Compared to other studies that have used the RCC tool with multidisciplinary samples of AHPs working in publicly funded health services in Queensland,23,28 Victoria,36 and New South Wales,24 our scores were similar or higher across the Organization and Team domains and similar across the Individual domain. For example, our participants reported a higher level of engagement with external partners in both the Team domain (median 8 [5–9] compared to 5 [3–8],24 5 [3–7],36 and 3 [1–7])23 and the Organization domain (median 8 [7–9] compared to 7 [4–8],24 6 [4–8],36 and 5.5 [3.5–8]).23 Our participants also reported having better access to research training and experts who can provide research advice, both within their teams and their organization.23,24,36 Over the past 3 years, our organization has invested considerably in research development initiatives, which may explain these results. These initiatives included the appointment of a professor of allied health and allied health research fellows, the creation of a research council and strategic research advisory committee, and increased staffing of the research office to support research development, ethics, and governance. A range of research training, mentoring, and local grant initiatives have also been implemented, which may have contributed to the higher scores reported by GCH AHPs. Furthermore, the main hospital in our health service is situated adjacent to a university, which may enhance opportunities for research collaborations with external academics. The variation in RCC tool scores seen between organizations may therefore reflect differences in their developmental level with regard to building research infrastructure and culture.

Despite some variation in RCC tool scores, the motivators and barriers reported in our study are consistent with those reported in other studies.10,12,19,25,31 Understanding the nature of barriers, motivators, and enablers to engaging in research can help inform which research capacity building strategies should be prioritized at individual, team, and organization levels. Motivators have also been found to be more strongly associated with research activity than barriers.32 While it is relevant to address both barriers and motivators, it might therefore be especially effective to focus on maximizing motivators, including increasing skills, career advancement, and job satisfaction.

Some of the components identified in our study are similar to those identified by Alison et al,24 particularly within the Organization and Team domains. Interestingly, our study identified two components in the Individual domain, whereas Alison et al identified only one component. The two items in our second component (“skills for searching and critiquing the literature”) generally scored higher than the items in our first component (“skills for conducting research”), which is consistent with findings of previous studies using the RCC tool with AHPs.23,24,28,33,36 This may be because skills for searching and appraising the literature are more readily used in practice and taught as part of undergraduate university degrees compared to other skills required for planning, conducting, and reporting research projects. Indeed, previous studies have found that AHPs are generally more interested and experienced in performing earlier stages of the research process such as finding and critically reviewing the literature as compared to later stages of the research process.15,16 This is appropriate given that the goal of research capacity building is for all health professionals to be using research evidence to inform their practice, while some are also participating in or leading research projects.37

A potential reason for some of the differences in our item rankings for components in the Individual and Team domains may be related to the differences in the nature of the samples used in the two studies. For example, while the sample of Alison et al had almost one-third of participants as physiotherapists and ≤4% of the sample as speech pathologists or psychologists, our study’s sample included >20% of participants from these latter two professions. As professional background has been found to influence RCC tool results,24,32 the variation between the two samples may therefore have contributed to the differing results for the Individual and Team domains and hence the differences in the nature of the components extracted.

Limitations of the RCC tool

While the RCC tool is the only validated tool to evaluate individual, team, and organizational research capacity and culture, it does have certain limitations. First, the tool is a self-report measure and may be prone to social desirability bias, whereby participants may rate themselves more positively than their true performance warrants.38 As such, the tool should ideally be used together with objective measures of research engagement and output. Second, the tool has been repeatedly modified by the inclusion and deletion of items,24–33 without a rationale being provided for deviating from the original validated version.23 Third, previous studies used different descriptive statistics to report their results. While some studies reported mean and SD values,25,27–29,32 others reported median and IQR values23,24,30,33,36 or a combination of both.28 Finally, previous studies had variable response rates, ranging from 6%33 to 60%,30 and some did not report their response rates. These inconsistencies make it difficult to reliably compare results between studies.

Limitations of this study and future directions

This study and most previous studies using the RCC tool have achieved response rates of less than one-third.26,27,29,32,33 One reason for this may be that the RCC tool is quite time-consuming to administer due to its large number of items. As the PCA results from our study and the study of Alison et al24 indicate some redundancy in items, future research may use these results to explore the development of a shortened version of the tool, which may improve its usability and participant response rates. It would be interesting to see whether similar redundancies in items are seen across a wider range of organizational contexts.

Another potential limitation of our study was the high proportion of unsure responses to items, particularly in the Organization domain (up to 47.2%) and Team domain (up to 39.4%). This is consistent with the findings of Friesen and Comino,27 who reported up to 54.6% and 60.8% unsure responses to items in the Organization and Team domains, respectively, and of Alison et al,24 who reported up to 30% and 25% unsure responses to items in the Organization and Team domains, respectively. This may be attributable to survey fatigue due to the large number of items in the RCC tool, or it may reflect participants’ lack of awareness of research capacity and culture at the organization and team levels. As the survey was voluntary, there is also the potential for self-selection bias, whereby more research-interested AHPs completed the survey, which may limit generalizability to other AHPs. While the RCC tool has to date been validated only with AHPs, future research may aim to validate the tool with other health workforces, including medical and nursing staff.

Recommendations for health care organizations

The present study may offer some insights for health care organizations seeking to build their research capacity and culture. Our findings highlight the need for a whole-of-system approach to research capacity building, in which multi-layered strategies are required at the level of individuals, teams, and organizations, consistent with previous recommendations.5,9,28,31,33,39 At both the organizational and team levels, consideration should be given to developing research infrastructure, including research support, as well as aspects of research culture.

Similar barriers and motivators are commonly reported across studies, and these should be addressed at the organizational, team, and individual levels. Any research capacity building intervention also needs to consider the developmental level of the organization and its teams, to ensure that research development goals are both feasible and strategic. Frameworks that support strategic research capacity building may be useful for this step.5,28,40 Additionally, greater attention to motivators, as opposed to barriers, may result in better progress. Given the high proportions of unsure responses in our study and other related studies,24,27 organizations should consider how they disseminate information to teams about research infrastructure and culture developments, to increase clinicians’ awareness, visibility, and uptake of available resources and supports. Specifically, the more positive ratings in the Organization domain compared with the Team domain, and particularly the Individual domain, suggest that the health service may need to consider how interventions and infrastructure at higher levels are being integrated and filtered down to clinicians and managers working “at the coalface” of health care services. For example, at the organizational level, the items pertaining to support for research training and to having funds and equipment to support research were rated 7 and 6, respectively, whereas at the team level the same items were rated notably lower, at 5 and 4, respectively. A more bottom-up approach may therefore be needed to ensure that organizational infrastructure reaches the team and individual levels. Consideration should also be given to addressing the areas rated as least successful at the individual level, including writing for publication, applying for grant funding, and writing an ethics application. As most of these skills are developed through doing research, creating opportunities for clinicians to gain greater exposure to research experiences, including being part of an established research team and receiving mentoring to undertake specific research tasks, may help to build them.

Conclusion

To develop the research capacity of the allied health workforce, ongoing support and investment in resources and infrastructure are required to implement tailored strategies targeting individuals, teams, and organizations. Our study highlighted the disparity that can occur between the perceived success of research at the organizational level and that at the team and individual levels. Health services must consider how a positive research infrastructure and culture at the organizational level are being integrated into, and used by, AHP teams and individuals. This may mean ensuring greater visibility of organizational initiatives and using organizational resources to create opportunities for clinicians to build their research skills and experience by doing research. The RCC tool provides useful information about existing research capacity and culture as part of a needs assessment, both to inform tailored research capacity building interventions and to establish a baseline for evaluating the effectiveness of such interventions. This study provides evidence of some consistency in the components of research capacity and culture between two health services. Future research is needed to investigate whether the RCC tool can feasibly be shortened by removing redundant questions, which could improve its usability.

Ethics approval and informed consent

Ethical approval was obtained from the human research ethics committee of the Gold Coast Hospital and Health Service (HREC/10/QGC/177). All participants were provided with a participant information sheet and gave informed consent to participate in the study. Potential participants were informed that their participation was completely voluntary and that non-participation would not carry any penalty or consequence, or influence their employment or standing within the organization in any way.

Data sharing statement

The final manuscript contains all pertinent data extracted from the studies included in the review. Access to raw data may be negotiated with the authors upon request.

Acknowledgment

The authors wish to thank Leanne Bisset for contributing to the original conceptualization of this project and Ashlea Walker for assisting with data acquisition.

Author contributions

SM and RW conceptualized and designed the project. RW and JM completed the protocol and developed an online version of the survey tool. JM led the data acquisition and descriptive analysis. IH led the statistical factor analysis. All authors contributed to interpreting the data, data analysis, drafting and critically revising the article, gave final approval of the manuscript version to be published, and agree to be accountable for all aspects of the work.

Disclosure

The authors report no conflicts of interest in this work.

References

1. Australian Government Department of Health and Ageing. Strategic Review of Health and Medical Research Final Report. Canberra: Commonwealth of Australia; 2013.

2. Hanney S, Boaz A, Jones T, Soper B. Engagement in research: an innovative three-stage review of the benefits for health-care performance. Health Serv Deliv Res. 2013;1(8):1–152.

3. Blevins D, Farmer MS, Edlund C, Sullivan G, Kirchner JE. Collaborative research between clinicians and researchers: a multiple case study of implementation. Implement Sci. 2010;5(1):76.

4. Bornmann L. What is societal impact of research and how can it be assessed? A literature survey. J Am Soc Inf Sci Technol. 2013;64(2):217–233.

5. Cooke J. A framework to evaluate research capacity building in health care. BMC Fam Pract. 2005;6(1):1–11.

6. Harding K, Lynch L, Porter J, Taylor NF. Organisational benefits of a strong research culture in a health service: a systematic review. Aust Health Rev. 2017;41(1):45–53.

7. Trostle J. Research capacity building in international health: definitions, evaluations and strategies for success. Soc Sci Med. 1992;35(11):1321–1324.

8. Pickstone C, Nancarrow S, Cooke J, et al. Building research capacity in the allied health professions. Evid Policy. 2008;4(1):53–68.

9. Golenko X, Pager S, Holden L. A thematic analysis of the role of the organisation in building allied health research capacity: a senior managers’ perspective. BMC Health Serv Res. 2012;12:276.

10. Borkowski D, McKinstry C, Cotchett M, Williams C, Haines T. Research culture in allied health: a systematic review. Aust J Prim Health. 2016;22(4):294–303.

11. Patel VM, Ashrafian H, Ahmed K, et al. How has healthcare research performance been assessed? A systematic review. J R Soc Med. 2011;104(6):251–261.

12. Pighills AC, Plummer D, Harvey D, Pain T. Positioning occupational therapy as a discipline on the research continuum: results of a cross-sectional survey of research experience. Aust Occup Ther J. 2013;60(4):241–251.

13. Carter YH, Shaw S, Sibbald B. Primary care research networks: an evolving model meriting national evaluation. Br J Gen Pract. 2000;50(460):859–860.

14. Turnbull C, Grimmer-Somers K, Kumar S, May E, Law D, Ashworth E. Allied, scientific and complementary health professionals: a new model for Australian allied health. Aust Health Rev. 2009;33(1):27–37.

15. Finch E, Cornwell P, Ward EC, McPhail SM. Factors influencing research engagement: research interest, confidence and experience in an Australian speech-language pathology workforce. BMC Health Serv Res. 2013;13:144.

16. Stephens D, Taylor N, Leggat SG. Research experience and research interests of allied health professionals. J Allied Health. 2009;38(4):107–111.

17. Harvey D, Plummer D, Nielsen I, Adams R, Pain T. Becoming a clinician researcher in allied health. Aust Health Rev. 2016;40(5):562–569.

18. Ried K, Farmer EA, Weston KM. Bursaries, writing grants and fellowships: a strategy to develop research capacity in primary health care. BMC Fam Pract. 2007;8:19.

19. Harding KE, Stephens D, Taylor NF, Chu E, Wilby A. Development and evaluation of an allied health research training scheme. J Allied Health. 2010;39(4):143–148.

20. Harvey D, Plummer D, Pighills A, Pain T. Practitioner research capacity: a survey of social workers in northern Queensland. Aust Soc Work. 2013;66(4):540–554.

21. Pain T, Plummer D, Pighills A, Harvey D. Comparison of research experience and support needs of rural versus regional allied health professionals. Aust J Rural Health. 2015;23(5):277–285.

22. Smith H, Wright D, Morgan S, Dunleavey J, Moore M. The ‘Research Spider’: a simple method of assessing research experience. Prim Health Care Res Dev. 2002;3(3):139–140.

23. Holden L, Pager S, Golenko X, Ware RS. Validation of the research capacity and culture (RCC) tool: measuring RCC at individual, team and organisation levels. Aust J Prim Health. 2012;18(1):62–67.

24. Alison JA, Zafiropoulos B, Heard R. Key factors influencing allied health research capacity in a large Australian metropolitan health district. J Multidiscip Healthc. 2017;10:277–291.

25. Borkowski D, McKinstry C, Cotchett M. Research culture in a regional allied health setting. Aust J Prim Health. 2017;23(3):300–306.

26. Elphinston RA, Pager S. Untapped potential: psychologists leading research in clinical practice. Aust Psychol. 2015;50(2):115–121.

27. Friesen EL, Comino EJ. Research culture and capacity in community health services: results of a structured survey of staff. Aust J Prim Health. 2017;23(2):123–131.

28. Holden L, Pager S, Golenko X, Ware RS, Weare R. Evaluating a team-based approach to research capacity building using a matched-pairs study design. BMC Fam Pract. 2012;13:16.

29. Howard AJ, Ferguson M, Wilkinson P, Campbell KL. Involvement in research activities and factors influencing research capacity among dietitians. J Hum Nutr Diet. 2013;26(Suppl 1):180–187.

30. Lazzarini PA, Geraghty J, Kinnear EM, Butterworth M, Ward D. Research capacity and culture in podiatry: early observations within Queensland Health. J Foot Ankle Res. 2013;6(1):1.

31. Pager S, Holden L, Golenko X. Motivators, enablers, and barriers to building allied health research capacity. J Multidiscip Healthc. 2012;5:53–59.

32. Wenke RJ, Mickan S, Bisset L. A cross sectional observational study of research activity of allied health teams: is there a link with self-reported success, motivators and barriers to undertaking research? BMC Health Serv Res. 2017;17(1):114.

33. Williams CM, Lazzarini PA. The research capacity and culture of Australian podiatrists. J Foot Ankle Res. 2015;8:11.

34. Chatfield M, Mander A. The Skillings-Mack test (Friedman test when there are missing data). Stata J. 2009;9(2):299–305.

35. Pallant J. SPSS Survival Manual: A Step by Step Guide to Data Analysis Using IBM SPSS. 6th ed. Sydney: Allen and Unwin; 2016.

36. Williams C, Miyazaki K, Borkowski D, McKinstry C, Cotchett M, Haines T. Research capacity and culture of the Victorian public health allied health workforce is influenced by key research support staff and location. Aust Health Rev. 2015;39(3):303–311.

37. Del Mar C, Askew D. Building family/general practice research capacity. Ann Fam Med. 2004;2(Suppl 2):S35–S40.

38. van de Mortel TF. Faking it: social desirability response bias in self-report research. Aust J Adv Nurs. 2008;25(4):40–48.

39. Farmer E, Weston K. A conceptual model for capacity building in Australian primary health care research. Aust Fam Physician. 2002;31(12):1139–1142.

40. Whitworth A, Haining S, Stringer H. Enhancing research capacity across healthcare and higher education sectors: development and evaluation of an integrated model. BMC Health Serv Res. 2012;12:287.

Supplementary materials

Table S1 Research engagement and support for research

Table S2 Barriers to undertaking research (n=277)
Abbreviation: N/A, not available.

Table S3 Motivators for undertaking research (n=271)
Abbreviation: N/A, not available.

© 2019 The Author(s). This work is published and licensed by Dove Medical Press Limited. The full terms of this license are available at https://www.dovepress.com/terms.php and incorporate the Creative Commons Attribution - Non Commercial (unported, v3.0) License.

By accessing the work you hereby accept the Terms. Non-commercial uses of the work are permitted without any further permission from Dove Medical Press Limited, provided the work is properly attributed. For permission for commercial use of this work, please see paragraphs 4.2 and 5 of our Terms.
