Advances in Medical Education and Practice, Volume 12

Evaluation of Educational Workshops for Family Medicine Residents Using the Kirkpatrick Framework

Authors Almeneessier AS , AlYousefi NA , AlWatban LF , Alodhayani AA, Alzahrani AM , Alwalan SI , AlSaad SZ, Alonezan AF

Received 22 September 2020

Accepted for publication 3 March 2021

Published 19 April 2021 Volume 2021:12 Pages 371–382

DOI https://doi.org/10.2147/AMEP.S283379

Checked for plagiarism Yes

Review by Single anonymous peer review

Peer reviewer comments 2

Editor who approved publication: Prof. Dr. Balakrishnan Nair

Aljohara S Almeneessier,1,2 Nada A AlYousefi,1,2 Lemmese F AlWatban,1,2 Abdulaziz A Alodhayani,1,2 Ahmed M Alzahrani,1,2 Saleh I Alwalan,1 Samaher Z AlSaad,1 Anas F Alonezan1

1Department of Family and Community Medicine, College of Medicine, King Saud University (KSU), Riyadh, Kingdom of Saudi Arabia; 2King Saud University Medical City, King Saud University, Riyadh, Kingdom of Saudi Arabia

Correspondence: Aljohara S Almeneessier

Department of Family and Community Medicine, College of Medicine, King Saud University (KSU), Riyadh, Kingdom of Saudi Arabia

Tel +96611505274489


Background/Objectives: Practicing independently in an ambulatory care setting demands mastering the knowledge and skills of commonly performed minor procedures. Educational hands-on activities are one way to ensure competent family medicine practitioners. This study aims to evaluate a minor procedure workshop for family medicine trainees using the Kirkpatrick model for short- and long-term workshop effectiveness and to identify facilitators and obstacles faced by the trainees during their practices to gain procedural skills.

Methods: A cross-sectional study was conducted at four time points: during the workshop (pre- and post-workshop), 12 weeks after the workshop to evaluate short-term effectiveness and change of behavior, and 12 months after the workshop to evaluate long-term effectiveness. Statistical Package for Social Sciences 22 was used for data analysis.

Results: Forty postgraduate trainees (R1–R4) attended the workshop and participated in the survey. Overall, the workshop was well received and highly rated by the trainees, and the pre-workshop confidence level was lower than the post-workshop confidence level. The obstetric and gynecological procedures workshop met the expectations of 100% of the trainees, with a 97% satisfaction rate, followed by dermatology (97.5%, 90%), orthopedics (95%, 87%), general surgery (97.5%, 84%), and the combined ophthalmology and otorhinolaryngology workshop (82.5%, 74%). At 12 weeks, 24 postgraduate trainees (R2–R4) responded to the survey, and low competency was reported for procedures that are uncommon in practice. At 12 months, only 16 trainees (R3–R4) responded to the survey. The learning effect was higher immediately after the workshop and varied with the passage of time. Changes in the competency level were noticed, but the effect of the number of procedures performed was not statistically significant (P > 0.05).

Conclusion: Safe practice of family medicine in an ambulatory health-care setting requires mastery of minor office procedure skills. Evaluating educational workshops is important to ensure effective outcomes and to identify the trainee, supervisor, institutional, and patient factors that facilitate or hinder the performance of minor procedures in a family medicine clinic.

Keywords: educational activity, evaluation, family practice, minor procedures, simulation

Introduction

Managing patients safely in ambulatory health-care settings demands a wealth of knowledge and skills in minor office procedures, among other professional qualities such as interpersonal communication skills and ethics. A minor clinical procedure is any procedure that is carried out in an ambulatory health-care setting by a trained health-care provider such as a doctor or nurse. Minor office procedures include examination procedures such as ophthalmoscopy or pelvic examination; diagnostic procedures such as Pap smear or skin scraping; and surgical procedures under local anesthesia such as wound suturing or male circumcision.

The Saudi Board of Family Medicine recommends more than fifty essential minor office procedures (Appendix 1) as requirements for a competent family physician.1 Lack of training is one of the reasons why family medicine residents do not perform minor office procedures as part of daily clinical activities. A study on minor surgical procedures among Saudi family physicians found that, despite a high level of interest, there was a low level of knowledge and confidence to conduct different minor procedures as part of daily clinic activities.2 This lack of confidence is due to infrequent training and practice of procedures during daily professional activities.2 Goertzen reported that family medicine residents acquire different levels of procedural competencies on the basis of distinct training sites and instructor experiences.3,4 Office procedure training should be structured, started early with medical students, and emphasized during continuous professional education courses.4–6 Assessment should be through observation and an objective structured clinical examination (OSCE) as part of the evaluation of residents.5

A study of 146 residents documented that 80% of the respondents could independently perform five of the 69 evaluated procedures.7 This finding points to the need for interventional teaching activities to achieve competency in minor office procedures. Retention of technical skills has been reported to vary between ten weeks8 and ten months,6 raising the need for structured, regular interventional training programs.

An interventional educational workshop is one method of training residents to master procedural skills. Learner satisfaction and the desired change in behavior are positive indicators of educational program success. Evaluating an interventional educational program is as crucial as planning and conducting it. The lack of educational program evaluation may mislead the focus of the program, its implementation, and the beneficiaries.9 The Kirkpatrick model is a common tool to measure the effect of educational activities across four levels (reaction–learning–behavior–result).10 The first level measures the reaction, acceptance, and satisfaction of learners with the educational activity. The second level evaluates the learning that results from an interventional educational program; learning is best measured before and after the educational activity. The amount of learning means little if it is not reflected as a desired behavioral change in learners, which leads to the third level of the model, when the acquired knowledge and skills are confidently practiced in daily work. The fourth level (result) is the major and ultimate impact of the educational activity, reflected in organizational changes and benefits.

The Kirkpatrick evaluation model has, however, been criticized for at least three limitations: (1) not considering other interpersonal and institutional factors that contribute to training and training transfer; (2) assuming a linear relationship between a positive reaction and a positive end result, although high satisfaction does not necessarily mean that a sufficient amount of learning was acquired; and (3) assuming that the importance of collected evaluation data increases with advancing model levels.11,12 Level four of the Kirkpatrick model may not provide information on improving the structure and process of training programs.11 The majority of program evaluations that used the Kirkpatrick model focused on level 1 (72%) and level 2 (31%) and to a lesser extent level 3 (20%) and level 4 (12%).12 For the purpose of our study, the authors focused on levels one, two, and three of the Kirkpatrick evaluation model.

We conducted this study with the following objectives: to evaluate a simulated educational activity in the form of a workshop on minor office procedures; to measure trainees’ satisfaction and learning as a result of this activity; to identify facilitators and obstacles faced by the trainees when practicing minor procedural skills; and to measure the workshop outcome skills retained within one year after the workshop.

Methodology

Method

A cross-sectional survey was conducted to evaluate an educational training workshop using the Kirkpatrick model. The study was approved by the College of Medicine Institutional Research Board (IRB No. E-19-3860).

Setting

The setting of this study was the Clinical Skill Simulation Centre at the College of Medicine at King Saud University.

Participants

Of the fifty residents enrolled in the family medicine program, forty attended the five-day, thirty-hour workshop. All family medicine residents (R1–R4) who attended the workshop were invited to participate in this study (n = 40). Consent was obtained from all the residents to enroll in this survey and complete a daily questionnaire for each mini-workshop.

Educational Workshop

A five-day workshop with hands-on instruction was conducted to train the residents on minor office procedures commonly performed in family medicine practice. The procedures were selected on the basis of a needs assessment of the residents, who were asked to select and rank office procedures as most important, important, or less important. The procedures ranked most important were matched with the Saudi-Med recommendations of family medicine program competency outcomes.1 The final selection was then made on the basis of the resources available at the clinical skill simulation center. The office procedures were categorized by specialty as follows: general surgery (suturing and laceration repair, neonatal circumcision, incision and drainage (I & D) of superficial abscesses, excision of ingrown nails, proctoscopy, wound care); OB/GYN (pelvic exam, Pap smear, IUCD insertion and removal, obstetric ultrasound (US), endometrial biopsy (Bx)); orthopedics (soft tissue injection, large joint aspiration and injection, small joint aspiration and injection, splinting and immobilization (upper and lower limb), closed reduction (joint dislocations)); dermatology (cryotherapy for warts, punch skin biopsy, shave skin biopsy, scraping for mycology, cryosurgery of skin tumours); ophthalmology (fundoscopy examination, corneal foreign body (FB) removal, slit-lamp examination); and otorhinolaryngology (anterior nasal packing for epistaxis, ear FB/wax removal, nasal FB removal). The sessions were conducted over five days. The instructors were consultants from the specialties listed above. The educational workshop activity started each day with 45-minute theoretical lectures covering the description of the procedures and their indications and contraindications, to prepare the trainees for the minor procedure workshop. The theoretical lectures were delivered to the trainees as one group before they were divided into small groups for a 60-minute hands-on training session for each procedure. The clinical skill center provided low-fidelity simulators, simulated patients, and the instruments needed for the training (Figure 1A–D).


Instrument

The instrument employed in this survey was developed and validated for program evaluation (unpublished thesis) with good internal consistency (Cronbach’s alpha = 0.85). The original questionnaire was developed through informal meetings with master program stakeholders (director, instructors, trainees and alumni), validated by two PhD instructors of medical education, and piloted on trainees who were not included in the survey. Minor changes were applied to the current questionnaire to suit the workshop,3,13,14 and it was piloted on five service residents working in ambulatory health care clinics. The self-administered questionnaire was piloted for readability, comprehension and timing.
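As a reminder of what the internal consistency figure summarizes, the following is a minimal sketch of the standard Cronbach's alpha calculation. The function name `cronbach_alpha` and the item scores are hypothetical illustrations; they are not the study's instrument or data, and the authors' own calculation was presumably done in statistical software.

```python
import statistics

def cronbach_alpha(item_scores):
    """Cronbach's alpha for a list of items, each a list with one score per respondent."""
    k = len(item_scores)
    if k < 2:
        raise ValueError("at least two items are required")
    n = len(item_scores[0])
    if n < 2 or any(len(item) != n for item in item_scores):
        raise ValueError("each item needs the same number (>= 2) of respondents")
    # Sum of the sample variances of the individual items.
    item_variance_sum = sum(statistics.variance(item) for item in item_scores)
    # Sample variance of each respondent's total score across items.
    totals = [sum(scores) for scores in zip(*item_scores)]
    total_variance = statistics.variance(totals)
    if total_variance == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - item_variance_sum / total_variance)

# Hypothetical 5-point Likert responses: three items, six respondents.
items = [
    [4, 5, 3, 4, 2, 5],
    [4, 4, 3, 5, 2, 5],
    [3, 5, 2, 4, 1, 4],
]
alpha = cronbach_alpha(items)
print(f"Cronbach's alpha = {alpha:.2f}")
```

Values closer to 1 indicate that the items vary together across respondents, which is the sense in which a value of 0.85 is described as good internal consistency.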

For the measurement of the first and second levels of the Kirkpatrick model (reaction and learning), a self-administered questionnaire utilizing a 5-point Likert scale (1 = strongly disagree, 2 = disagree, 3 = neutral, 4 = agree, 5 = strongly agree) was used (Appendix 2). The questionnaires were distributed on day one through day five to measure the reaction of the participants and the effectiveness of each workshop and to measure the overall perception and impact of the workshop. The effectiveness of individual mini-workshops was assessed by measuring, for each procedure, the usefulness of the teaching activity, teaching methods, amount learned, simulation, and overall score. Each category was rated on a Likert scale: 1 = extremely not effective, 2 = not effective, 3 = neutral, 4 = effective, 5 = extremely effective. Learning was measured by pre- and post-workshop self-assessment questionnaires asking how confident the participant felt before and after each procedure. The responses were measured on a Likert scale as follows: 1 = extremely not confident, 2 = not confident, 3 = somewhat confident, 4 = confident, 5 = extremely confident.

The third level of the Kirkpatrick model was measured through emailed questionnaires filled out by the participants 12 weeks after the completion of the workshop. Each participant received a post-workshop questionnaire that measured the number of procedures done and the competency level and identified any factors that facilitated or hindered the performance of the learned skills. These factors were related to trainee, supervisor, setting, and institution. The competency level was defined as follows: 1 = not competent (guided by the supervisor), 2 = somewhat competent (closely observed by the supervisor), 3 = competent (supervisor was present but did not intervene), and 4 = extremely competent (supervisor was not present in the same clinic).

Another follow-up email was sent to the participants 12 months after the completion of the workshop to measure the number of procedures, retained competency, facilitators, and barriers that prevent the practice and performance of the gained skills. Level three was measured twice to assess the persistence of gained competencies. Measuring level four was not feasible because most of the trainees left the organization after completing the family medicine program.

Statistical Analysis

The data were analyzed by using Statistical Package for Social Sciences (SPSS 22; IBM Corp., New York, NY, USA). The continuous variables were expressed as mean ± standard deviation, and the categorical variables were expressed as percentages. The paired t-test and one-way ANOVA were employed for continuous variables. A chi-square test was used for categorical variables. A linear mixed model was used for repeated-measures analysis; “time” was identified as the predictor variable significantly affecting the outcome. For the purpose of this study, the repeated measures were confidence and competency, reflecting the workshop outcome at four points in time (pre- and post-workshop, twelve weeks and twelve months after the workshop). Confidence and competency (outcome) levels were rescaled to 0–10 using the formula $Y = \dfrac{X - X_{\min}}{X_{\text{range}}} \times n$, where $Y$ is the adjusted variable, $X$ is the original variable, $X_{\min}$ is the minimum observed value on the original variable, $X_{\text{range}}$ is the difference between the maximum potential score and the minimum potential score on the original variable, and $n$ is the upper limit of the rescaled variable. Harrison et al recommend selecting a fixed-effect model for small sample sizes, unbalanced data, and when there is no intention to generalize the result to the general population.15 Based on this recommendation, the authors selected a fixed-effect model that includes two predictor variables, time and amount learned, with the main effect of “time” and the interaction effect of “time*learned”. The graph was produced with Microsoft Excel, and a p-value < 0.05 was considered statistically significant.
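The rescaling step described above can be sketched in a few lines. This is an illustration only: the function name `rescale` is hypothetical, and it assumes that the minimum observed value equals the lowest point of each scale (1 for both the 5-point confidence scale and the 4-point competency scale), which the text does not state explicitly.

```python
def rescale(x, x_min, x_max, n=10.0):
    """Map a score from the range [x_min, x_max] onto [0, n]."""
    x_range = x_max - x_min
    if x_range <= 0:
        raise ValueError("x_max must be greater than x_min")
    return (x - x_min) / x_range * n

# 5-point confidence scale (1 to 5) and 4-point competency scale (1 to 4),
# both placed on a common 0-10 scale so they can be compared over time.
confidence_rescaled = [rescale(x, 1, 5) for x in range(1, 6)]
competency_rescaled = [rescale(x, 1, 4) for x in range(1, 5)]

print(confidence_rescaled)
print(competency_rescaled)
```

Putting both instruments on the same 0–10 range is what allows the pre- and post-workshop confidence scores and the 12-week and 12-month competency scores to be treated as one repeated outcome.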

Results

Forty trainees in the family medicine program attended the office procedure workshop and responded to the survey with a 100% response rate. Twenty-four (60%) were female residents, and 16 (40%) were male residents. The age range was between 25 and 30 with a mean age of 27.4 (±1.17). The distribution of the residents according to program level was as follows: seven residents from the first year (R1) and 11 residents from each of the second, third, and fourth years (R2, R3, and R4) of the family medicine training program.

Participants’ Reaction and Satisfaction

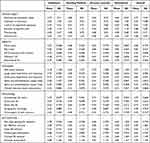

Generally, the five-day workshop met the expectations of all the participants (100%), with 95% thinking that the workshop objectives were in line with their learning objectives. A total of 97.5% of the trainees felt that they gained valuable knowledge from this workshop, although almost one-third (32.5%) of the participants were not comfortable with the duration of the sessions. The same percentage (32.5%) anticipated barriers in putting the acquired skills into practice. Table 1 details the trainee perception and anticipated impact of the five-day training workshop. Overall, 73% felt that they were very skillful after attending the workshop, while 27% felt that they were skillful to some extent. Fourth-year residents acknowledged being less skillful in some procedures than first- and second-year residents, but there was no statistically significant difference between the residents in different program levels (R1–R4) (p = 0.09). The obstetric and gynecological procedures workshop met the expectations of 100% of the trainees, with a 97% satisfaction rate, followed by dermatology (97.5%, 90%), orthopedics (95%, 87%), general surgery (97.5%, 84%), and the combined ophthalmology and otorhinolaryngology workshop (82.5%, 74%). Table 2 illustrates the trainees’ reaction towards each individual procedure educational activity: usefulness, teaching methods, amount learned, simulation, and overall reaction towards the procedure activity.

Table 1 Overall Perception and Impact of the Workshop (Kirkpatrick; Reaction)

Table 2 The Benefit and Effectiveness of the Educational Workshop Activities (Kirkpatrick; Reaction)

Confidence Before and After Conducting the Minor Procedure Workshop (Learning Assessment)

The self-assessment of confidence was measured prior to the workshop and immediately after each procedure. The self-reported confidence level was low prior to the workshop and noticeably increased after the workshop across the five major specialty domains, with a statistically significant difference (p-value < 0.001) for each procedure. Procedures such as neonatal circumcision, IUCD insertion and removal, cryosurgery for skin tumors, and corneal foreign body removal were rated at the non-confident level (Likert scale mean < 2) before the workshop, and trainee confidence increased to Likert scale means of 3.3, 4.15, 4.13, and 3.1, respectively, a difference that is statistically significant (P < 0.001).
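The pre/post comparison above rests on a paired t-test, in which each trainee's post-workshop rating is compared with that same trainee's pre-workshop rating. The sketch below shows the arithmetic using only the Python standard library; the function name `paired_t` and the ratings are hypothetical and are not the study data.

```python
import math
import statistics

def paired_t(pre, post):
    """Return (mean difference, t statistic, degrees of freedom) for paired scores."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("pre and post need the same length (>= 2)")
    diffs = [after - before for before, after in zip(pre, post)]
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)
    if sd_diff == 0:
        raise ValueError("all paired differences are identical")
    # Standard error of the mean difference, then the t statistic.
    t_stat = mean_diff / (sd_diff / math.sqrt(len(diffs)))
    return mean_diff, t_stat, len(diffs) - 1

# Hypothetical 5-point confidence ratings for eight trainees on one procedure.
pre = [1, 2, 1, 2, 1, 3, 2, 1]
post = [3, 4, 3, 3, 4, 4, 4, 3]

mean_diff, t_stat, df = paired_t(pre, post)
print(f"mean change = {mean_diff:.3f}, t = {t_stat:.2f}, df = {df}")
```

The p-value is then read from the t distribution with the returned degrees of freedom, a step that statistical packages such as SPSS perform automatically.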

Individual Specialty Mini-Workshop Evaluation

General Surgery Sessions

Immediately after the general surgery procedure workshop, 30 (75%) trainees felt very skillful, while nine (22.5%) trainees thought that they were skillful to some extent. One trainee reported the need for further training. There was no statistically significant difference between trainees at different training levels (R1–R4) (p = 0.68). The surgery workshop met the expectations of 39 (97.5%) of the trainees who attended. The overall satisfaction was 84%. The lowest confidence level reported by the trainees was for neonatal circumcision (1.48 ± 0.72), and the highest was for the wound care procedure (3.5 ± 1.15). Although the change in the level of confidence in performing the ingrown toenail procedure before and after the workshop was minor, it was statistically significant (P < 0.001); the overall satisfaction for this educational session was the lowest (3.88 ± 0.82) among the sessions for general surgery procedures.

OB/GYN Sessions

Thirty-three (82.5%) trainees reported feeling very skillful immediately after the workshop, while seven (17.5%) reported feeling skillful to some extent. There was no statistically significant difference in feeling skillful between trainees (R1–R4) (p = 0.77), and the OB/GYN workshop met the expectations of 40 (100%) of the trainees who attended. The overall satisfaction was 97%. Intrauterine contraceptive device (IUCD) insertion and removal and obstetric ultrasound both scored the lowest level of confidence (1.98 ± 0.8) prior to the workshop. Both educational sessions were well received by the trainees.

Orthopedic

Feeling very skillful immediately after the workshop was reported by 22 (55%) trainees, while feeling skillful to some extent was reported by 18 (45%) trainees, with no statistically significant difference between trainees (R1–R4) (p = 0.29). The orthopedic workshop met the expectations of 38 (95%) of the trainees who attended, and the overall satisfaction was 87%. The lowest level of confidence prior to the workshop was reported for the closed reduction of joint dislocation (1.95 ± 1.01). However, the educational session on closed reduction of joint dislocation had a high satisfaction score, similar to the rest of the orthopedic procedure sessions.

Dermatology

Feeling very skillful immediately after the workshop was reported by 29 (72.5%) trainees, while feeling skillful to some extent was reported by 11 (27.5%) trainees, with no statistically significant difference between residents (R1–R4) (p = 0.43). The dermatology workshop met the expectations of 39 (97.5%) of the trainees who attended, and the overall satisfaction was 90%. The lowest confidence level before the workshop was reported for the cryosurgery of skin tumors (1.83 ± 0.81). The overall satisfaction for the cryosurgery of skin tumors session was 4.43 ± 0.75, similar to the rest of the dermatology procedure educational sessions.

Ophthalmology and Otorhinolaryngology (ENT)

Feeling very skillful immediately after the workshop was reported by 19 (47.5%) trainees. Nineteen (47.5%) trainees reported that they felt skillful to some extent, and two trainees reported a low degree of skill and a need for further training. There was no statistically significant difference between residents (R1–R4) (p = 0.072). The combined ophthalmology and otorhinolaryngology (ENT) workshop met the expectations of 33 (82.5%) of the trainees who attended. The overall satisfaction was 76% for ENT and 72% for ophthalmology, and the combined overall satisfaction for both specialties on day five was 74%. Procedure confidence was lowest for corneal foreign body removal and the use of a slit lamp (1.98 ± 1.02 and 1.98 ± 1, respectively), which increased to 3.13 ± 1.2 and 3.18 ± 1.03, respectively (p-value < 0.001). The individual procedure educational sessions were well received by the trainees.

Change of Trainees’ Behavior Within One Year of Training

For the purpose of this study, we selected a fixed-effect model15 with predictor variables “time” and “time*learned” to measure the change in trainees’ perceived skills for each procedure as the workshop outcome over the twelve-month period. Data analysis details for each procedure are listed in Tables S1–S4.

Only 24 residents responded to the 12-week post-workshop survey and completed the questionnaire (60% of the studied sample). The response rate at twelve months after the workshop was 40% of the studied sample (16 trainees).

In general, there was some improvement in the level of performance of the workshop procedures compared with the pre-workshop level. For example, the suturing and laceration repair procedure showed an improvement at one year compared with 12 weeks (1.25 vs 0.07) with each unit change in time*learned. The intercept estimate was 7.5 (SE = 0.69, t = 10.85, p < 0.001, 95% CI: 6.13, 8.87). With “time” as the predictor, the estimate at 12 weeks was 0.18 (SE = 0.9, t = 0.19, p = 0.84, 95% CI: −1.62, 1.97). With the interaction “time*learned” as the predictor, the estimate at 12 weeks was 0.07 (SE = 0.9, t = 0.08, p = 0.9, 95% CI: −1.7, 1.87), and at one year it was 1.25 (SE = 1.12, t = 1.10, p = 0.27, 95% CI: −0.99, 3.49). On the other hand, Pap smear performance showed a reduction at 12 weeks (−5) and only minimal improvement (0.18) at one year with each unit change in time*learned. The intercept estimate was 8.57 (SE = 0.59, t = 14.32, p < 0.001, 95% CI: 7.38, 9.75). With “time” as the predictor, the estimate at 12 weeks was −0.44 (SE = 0.78, t = −0.57, p = 0.56, 95% CI: −1.99, 1.10). With the interaction “time*learned” as the predictor, the estimate at 12 weeks was −5.0 (SE = 1.23, t = −4.07, p < 0.001, 95% CI: −4.07, −2.57), and at one year it was 0.18 (SE = 1.69, t = 0.11, p = 0.91, 95% CI: −3.176, 3.53). Table S3 shows more details of the changes in performance for all the workshop procedures.
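For readers who want to relate the reported estimates, standard errors, t values, and confidence intervals to one another, the sketch below shows the usual arithmetic: the test statistic is the estimate divided by its standard error, and an approximate 95% interval is the estimate plus or minus 1.96 standard errors. The function name `wald_summary` is hypothetical, and the normal multiplier 1.96 is an assumption; the intervals in the text presumably use a t-based multiplier, so small differences in the second decimal are expected.

```python
def wald_summary(estimate, se, z=1.96):
    """Return (test statistic, lower bound, upper bound) for one coefficient."""
    if se <= 0:
        raise ValueError("standard error must be positive")
    statistic = estimate / se
    margin = z * se  # normal approximation; an assumption, not the authors' method
    return statistic, estimate - margin, estimate + margin

# Estimate and SE as reported in the text for the suturing intercept.
stat, lower, upper = wald_summary(7.5, 0.69)
print(f"suturing intercept: t = {stat:.2f}, 95% CI approx. ({lower:.2f}, {upper:.2f})")

# Estimate and SE as reported for the Pap smear time*learned term at 12 weeks.
pap_stat, pap_lower, pap_upper = wald_summary(-5.0, 1.23)
print(f"Pap smear interaction: t = {pap_stat:.2f}, "
      f"95% CI approx. ({pap_lower:.2f}, {pap_upper:.2f})")
```

Under this approximation the Pap smear interaction term has a lower bound of roughly −7.4, whereas the printed lower bound (−4.07) coincides with the reported t value, so that interval is best checked against Table S3.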

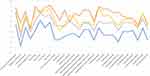

The intended outcome of mastering minor procedures was below the desired level in four procedures: neonatal circumcision, ingrown toenail, cryosurgery for skin tumors, and slit-lamp examination. Table 3 and Figure 2 display the details of the self-reported level of learned skill for the different procedures before and after each procedure training and at 12 weeks and 12 months after the workshop.

Figure 2 Minor procedures’ learning outcome over time (blue: pre-workshop, red: post-workshop, grey: 12 weeks post-workshop, orange: 12 months post-workshop).

Five office procedures, namely, neonatal circumcision, closed reduction of joint dislocations (elbow/shoulder), cryosurgery of skin tumors, nose foreign body removal, and corneal foreign body removal, were reported as not performed during the 12 weeks after the workshop; the number of procedures performed increased slightly after that period (Table S5). The increased competency due to an increased number of different procedures was not statistically significant (P > 0.05). The difference between male (mean 655 ± 57.8) and female trainees (mean 610 ± 88.2) was not statistically significant (P = 0.25).

Facilitators and Barriers to Gained Skills Practice

The facilitators and barriers to the implementation of the gained skills fell into the following groups: factors related to the training, confidence of trainees, the skills and attitudes of supervisors, clinic setting and materials, and institutional policy. The trainees agreed that training and confidence are facilitators for implementing the gained skills. A skillful supervisor with a positive attitude and a suitable clinical setting with available resources also facilitate the performance of procedures and enhance gained skills. At 12 weeks, the trainees did not consider institutional policies and procedures or fees for service as factors impacting minor office procedures. At 12 months, however, institutional policies prohibiting procedure performance in the clinic setting or restricting office procedures to a certified specialist were considered to be one of the obstacles to performing minor procedures in the clinic, while the service fee was still not considered an obstacle. Patient-related factors were a major issue mentioned by one trainee, namely patient acceptance and a preference for specialists, rather than a family physician or a trainee, to perform the procedures. Table S6 shows the different perceptions of facilitator and obstacle factors according to training program level.

Discussion

This extensive workshop is the first at a national level to serve local program trainees. It adopted a competency-based medical education strategy to align with the Saudi Board of Family Medicine intended outcomes.1 Competency-based medical education has been the trend in medical schools over the past two decades, and it denotes trainee ability or competency as an outcome of education.16 In our study, we also utilized the available resources in our clinical skill simulation center to conduct the workshop and to teach our trainees various technical skills. The use of simulation in training has been shown to be an effective approach to acquiring long-lasting skills17 when performed and calibrated under supervision.18

Family medicine trainees in this study were highly satisfied and reacted positively to the workshop, and their involvement with the workshop started at the planning stage. The high acceptance and satisfaction with the activity can be explained on the basis of the principles of adult learning.19 The trainees were actively involved in planning the activity and in the needs assessment process. They were motivated, and they knew why they attended the activity, which is relevant to their future practice. The educational objectives of the workshop also matched the learning objectives of the trainees, and the educational activity was built on their previous experience and education. The graduate students had passed the stage of novice trainees20 and carried with them a body of knowledge and skills that needed to be revised and improved as they advanced in the training program. A second reason for the highly positive reaction was that the family medicine program invested heavily in this workshop by hosting 30 specialist instructors and utilizing low-fidelity simulation resources, all offered free of charge to our trainees. Nearly half of the trainees appreciated receiving this workshop free of charge, plus the bonus of the course being accredited for 30 CME hours.

Learning and the change of behavior in our cohort sample were evident. The low confidence ratings on self-assessment scales prior to the workshop increased measurably after the trainees attended, gained knowledge, and received hands-on training. Studies have shown that self-assessment among practicing physicians can be inaccurate, with physicians overestimating themselves relative to an external rater,21 a phenomenon known as the Dunning–Kruger effect.22 The modified Dunning–Kruger effect explains self-assessment in terms of task difficulty: with easy tasks, participants tend to overestimate their abilities or skills, whereas with difficult tasks, good performers tend to greatly underestimate their abilities, and poor performers tend to underestimate to a lesser extent. This was evident in our cohort when the senior trainees at the R4 level rated their skills in some procedures lower than the trainees at the R1–R2 training levels did. Another observation was that the female trainees reported being less competent than the male trainees. A study has shown that female surgeons tend to underestimate their technical skills on self-assessment compared with expert ratings.23

Competency increased over time as more procedures were performed, although for some procedures the competency levels remained almost the same or declined at 12 weeks and 12 months. For example, suturing and laceration repair improved at the post-workshop level: a one-unit increase in the predictor “time” corresponds to a 0.18 increase in performance of the suturing procedure, while a one-unit increase in the predictor “time*learned” corresponds to a 0.07 increase in performance at 12 weeks and a 1.25 increase at one year after the workshop. In contrast, performance of the Pap smear procedure decreased at 12 weeks and increased after one year (−5.0, 0.18), compared with −0.52 for obstetric ultrasound at one year. These changes cannot be attributed solely to the workshop; the effects of the environment and personal maturity cannot be ignored. The number of procedures performed was lower than expected, which may be determined by the types of problems that patients present with at the clinic. Surprisingly, the number of fundoscopy procedures was lower than it should be. Roberts et al reported that only three of 72 physicians performed fundoscopy routinely on all patients and that 30 physicians admitted to insufficient training in the fundoscopy examination.24 Competency in corneal foreign body removal decreased between 12 weeks (1.08) and 12 months (0.7), which could be explained by the low number of cases presenting in clinical practice. In addition, during our workshop the ophthalmologist used a slit lamp for corneal foreign body removal, which may have given the trainees the false impression that sophisticated equipment is needed to perform the procedure. In fact, corneal foreign body removal can be managed in the general practice setting with simple equipment.25 Institutional policies and procedures, supervisors' qualities and attitudes, and the availability of resources within a suitable environment all play a role in enhancing and maintaining skillful performance of procedures.
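As a reading aid for the suturing coefficients reported above, the arithmetic can be sketched in a few lines of Python. This is only an illustration under stated assumptions: it assumes a linear predictor in which the “time” main effect and the “time*learned” interaction add together, and the function name `expected_change` and its `learned` indicator are hypothetical. The study's full model specification is not reproduced here.

```python
# Illustrative sketch only. ASSUMPTION: a linear predictor with a "time" main
# effect plus a "time*learned" interaction term; names here are hypothetical
# and do not come from the study's analysis code.

def expected_change(time_coef, interaction_coef, time_units=1, learned=1):
    """Expected change in the performance outcome for `time_units` steps of time.

    `learned` is an assumed 0/1 indicator that switches the interaction on.
    """
    return time_coef * time_units + interaction_coef * time_units * learned


# Suturing coefficients as reported in the text.
SUTURING_TIME = 0.18
SUTURING_INTERACTION = {"12 weeks": 0.07, "12 months": 1.25}

for period, interaction in SUTURING_INTERACTION.items():
    change = expected_change(SUTURING_TIME, interaction)
    print(f"suturing, {period}: expected change per unit of time = {change:.2f}")
```

Under these assumptions, setting `learned=0` leaves only the main effect of time, which shows how much of the combined change the interaction term contributes.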

Limitations

This workshop faced two major limitations, namely, time and resources. An exhaustive five-day workshop (8 am to 4 pm) left the residents stressed and fatigued. The timing was a challenge because the workshop was inserted into the program schedule and could not be spread over different weeks. Resources for some procedures were unavailable; the enthusiastic instructors solved this by making models or bringing their own equipment to run their allocated sessions. Another limitation was that assessment of competency by assessors after the workshop was not feasible owing to lack of time and resources.

Conclusion

In this evaluation, we satisfied Kirkpatrick model levels one, two, and three, and we studied the factors influencing the use of the gained skills. Well-trained, confident trainees, a skillful supervisor with a positive attitude, the availability of resources (setting and materials), and an institutional policy that allows and supports performing minor procedures in the family practice setting all facilitate procedure performance and increase trainee competency.

We planned and conducted an extensive workshop on minor office procedures that was well received by our trainees. The success of this workshop rested on the active involvement of the stakeholders in planning to include this highly relevant educational activity in the trainees' training program and future practice. Adopting a competency-based medical educational workshop to train our residents in technical skills, together with the availability of resources, facilitated the success of this workshop.

Acknowledgment

The authors would like to thank the Deanship of Scientific Research and RSSU at King Saud University for their technical support, as well as Dr Yasser Alaska and the clinical skill simulation center staff for technical support. They would also like to extend their gratitude to all the residents who participated in the survey.

Author Contributions

All authors made a significant contribution to the work reported, whether that is in the conception, study design, execution, acquisition of data, analysis and interpretation, or in all these areas; took part in drafting, revising or critically reviewing the article; gave final approval of the version to be published; have agreed on the journal to which the article has been submitted; and agree to be accountable for all aspects of the work.

Funding

This study was funded by the College of Medicine Research Center, Deanship of Scientific Research, King Saud University, Riyadh, KSA.

Disclosure

The authors declare no conflicts of interest.

References

1. SCFHS S. Saudi Board for Family Medicine Curriculum; 2020.

2. Andijany MA, AlAteeq MA. Family medicine residents in central Saudi Arabia: how much do they know and how confident are they in performing minor surgical procedures? Saudi Med J. 2019;40(2):168–176. doi:10.15537/smj.2019.2.23914

3. Goertzen J. Learning procedural skills in family medicine residency: comparison of rural and urban programs. Can Family Physician. 2006;52(5):622.

4. Kelly M, Everard KM, Nixon L, Chessman A. Untapped potential: performance of procedural skills in the family medicine clerkship. Fam Med. 2017;49(6):437–442.

5. Rivet C, Wetmore S. Evaluation of procedural skills in family medicine training. Can Family Physician. 2006;52(5):561.

6. Malone E. Challenges & issues: evidence-based clinical skills teaching and learning: what do we really know? J Vet Med Educ. 2019;46(3):379–398. doi:10.3138/jvme.0717-094r1

7. Garcia-Rodriguez JA, Dickinson JA, Perez G, et al. Procedural knowledge and skills of residents entering Canadian family medicine programs in Alberta. Fam Med. 2018;50(1):10–21. doi:10.22454/FamMed.2018.968199

8. Stone H, Crane J, Johnston K, Craig C. Retention of vaginal breech delivery skills taught in simulation. J Obstetrics Gynaecol Can. 2018;40(2):205–210. doi:10.1016/j.jogc.2017.06.029

9. Campbell C, Hendry P, Delva D, Danilovich N, Kitto S. Implementing competency-based medical education in family medicine: a scoping review on residency programs and family practices in Canada and the United States. Fam Med. 2020;52(4):8. doi:10.22454/FamMed.2020.594402

10. Yardley S, Dornan T. Kirkpatrick’s levels and education ‘evidence’. Med Educ. 2012;46(1):97–106. doi:10.1111/j.1365-2923.2011.04076.x

11. Bates R. A critical analysis of evaluation practice: the Kirkpatrick model and the principle of beneficence. Eval Program Plann. 2004;27(3):341–347. doi:10.1016/j.evalprogplan.2004.04.011

12. Reio TG, Rocco TS, Smith DH, Chang EA. Critique of Kirkpatrick’s evaluation model. New Horiz Adult Educ Human Resour Dev. 2017;29(2):35–53. doi:10.1002/nha3.20178

13. Bukhari AA. The clinical utility of eye exam simulator in enhancing the competency of family physician residents in screening for diabetic retinopathy. Saudi Med J. 2014;35(11):1361–1366.

14. Clanton J, Gardner A, Cheung M, Mellert L, Evancho-Chapman M, George RL. The relationship between confidence and competence in the development of surgical skills. J Surg Educ. 2014;71(3):405–412. doi:10.1016/j.jsurg.2013.08.009

15. Harrison XA, Donaldson L, Correa-Cano ME, et al. A brief introduction to mixed effects modelling and multi-model inference in ecology. PeerJ. 2018;6:e4794. doi:10.7717/peerj.4794

16. Frank JR, Mungroo R, Ahmad Y, Wang M, De Rossi S, Horsley T. Toward a definition of competency-based education in medicine: a systematic review of published definitions. Med Teach. 2010;32(8):631–637. doi:10.3109/0142159X.2010.500898

17. McGaghie WC, Issenberg SB, Barsuk JH, Wayne DB. A critical review of simulation-based mastery learning with translational outcomes. Med Educ. 2014;48(4):375–385. doi:10.1111/medu.12391

18. Satava R. Role of simulation in postgraduate medical education. J Health Specialties. 2015;3(1):12–16. doi:10.4103/1658-600X.150753

19. Bryan RL, Kreuter MW, Brownson RC. Integrating adult learning principles into training for public health practice. Health Promot Pract. 2009;10(4):557–563. doi:10.1177/1524839907308117

20. Kothari LG, Shah K, Barach P. Simulation based medical education in graduate medical education training and assessment programs. Prog Pediatr Cardiol. 2017;44:33–42. doi:10.1016/j.ppedcard.2017.02.001

21. Davis DA, Mazmanian PE, Fordis M, Van Harrison R, Thorpe KE, Perrier L. Accuracy of physician self-assessment compared with observed measures of competence: a systematic review. JAMA. 2006;296(9):1094–1102. doi:10.1001/jama.296.9.1094

22. Schlösser T, Dunning D, Johnson KL, Kruger J. How unaware are the unskilled? Empirical tests of the “signal extraction” counterexplanation for the Dunning–Kruger effect in self-evaluation of performance. J Econ Psychol. 2013;39:85–100. doi:10.1016/j.joep.2013.07.004

23. Miller BL, Azari D, Gerber RC, Radwin R, Le BV. Evidence that female urologists and urology trainees tend to underrate surgical skills on self-assessment. J Surg Res. 2020;254:255–260. doi:10.1016/j.jss.2020.04.027

24. Roberts E, Morgan R, King D, Clerkin L. Funduscopy: a forgotten art? Postgrad Med J. 1999;75(883):282–284. doi:10.1136/pgmj.75.883.282

25. Fraenkel A, Lee L, Lee G. Managing corneal foreign bodies in office-based general practice. Aust Fam Physician. 2017;46:89–93.

© 2021 The Author(s). This work is published and licensed by Dove Medical Press Limited. The full terms of this license are available at https://www.dovepress.com/terms.php and incorporate the Creative Commons Attribution - Non Commercial (unported, v3.0) License.

By accessing the work you hereby accept the Terms. Non-commercial uses of the work are permitted without any further permission from Dove Medical Press Limited, provided the work is properly attributed. For permission for commercial use of this work, please see paragraphs 4.2 and 5 of our Terms.
