Advances in Medical Education and Practice, Volume 7

An evidence-based laparoscopic simulation curriculum shortens the clinical learning curve and reduces surgical adverse events

Authors De Win G, Van Bruwaene S, Kulkarni J, Van Calster B, Aggarwal R, Allen C, Lissens A, De Ridder D, Miserez M

Received 7 December 2015

Accepted for publication 12 April 2016

Published 30 June 2016 Volume 2016:7 Pages 357–370

DOI https://doi.org/10.2147/AMEP.S102000

Checked for plagiarism Yes

Review by Single anonymous peer review

Editor who approved publication: Dr Md Anwarul Azim Majumder

Gunter De Win,1,2 Siska Van Bruwaene,3,4 Jyotsna Kulkarni,5 Ben Van Calster,6 Rajesh Aggarwal,7,8 Christopher Allen,9 Ann Lissens,4 Dirk De Ridder,3 Marc Miserez4,10

1Department of Urology, Antwerp University Hospital, 2Faculty of Health Sciences, University of Antwerp, Antwerp, 3Department of Urology, University Hospitals of KU Leuven, 4Centre for Surgical Technologies, KU Leuven, Leuven, Belgium; 5Kulkarni Endo Surgery Institute, Pune, India; 6Department of Development and Regeneration, KU Leuven, Leuven, Belgium; 7Department of Surgery, Faculty of Medicine, 8Steinberg Centre for Simulation and Interactive Learning, Faculty of Medicine, McGill University, Montreal, QC, Canada; 9School of Arts and Sciences, University of Pennsylvania, Philadelphia, PA, USA; 10Department of Abdominal Surgery, University Hospitals Leuven, Leuven, Belgium

Background: Surgical simulation is becoming increasingly important in surgical education. However, the method of simulation to be incorporated into a surgical curriculum is unclear. We compared the effectiveness of a proficiency-based preclinical simulation training in laparoscopy with conventional surgical training and conventional surgical training interspersed with standard simulation sessions.

Materials and methods: In this prospective single-blinded trial, 30 final-year medical students were randomized into three groups, which differed in the way they were exposed to laparoscopic simulation training. The control group received only clinical training during residency, whereas the interval group received clinical training in combination with simulation training. The Center for Surgical Technologies Preclinical Training Program (CST PTP) group received a proficiency-based preclinical simulation course during the final year of medical school but was not exposed to any extra simulation training during surgical residency. After 6 months of surgical residency, the influence on the learning curve while performing five consecutive human laparoscopic cholecystectomies was evaluated with motion tracking, time, Global Operative Assessment of Laparoscopic Skills, and number of adverse events (perforation of gall bladder, bleeding, and damage to liver tissue).

Results: The odds of adverse events were 4.5 (95% confidence interval 1.3–15.3) and 3.9 (95% confidence interval 1.5–9.7) times lower for the CST PTP group compared with the control and interval groups. For raw time, corrected time, movements, path length, and Global Operative Assessment of Laparoscopic Skills, the CST PTP trainees nearly always started at a better level and were never outperformed by the other trainees.

Conclusion: Proficiency-based preclinical training has a positive impact on the learning curve of a laparoscopic cholecystectomy and diminishes adverse events.

Keywords: laparoscopy, simulation, learning curve, transfer of skills

Introduction

The apprentice–tutor model was useful for training surgeons for many years, but the complexity of surgical technology in the 21st century, owing to the increased use of laparoscopy, endoscopy, and robotics, as well as the restriction in working hours has led to an exponential demand for surgical skill training outside the operating room. Many research articles provide evidence of the added value of simulation.1,2 There is little evidence demonstrating general transferability of skills from the laboratory to the live operative setting; however, a very recent study showed that a curriculum of individualized deliberate practice on a virtual reality simulator improves technical performance in the operating room.3 In addition, a week of simulation training seemed to have a positive clinical impact on the transition from student to intern.4

However, most studies focused on a specific simulator or a specific laparoscopic task or procedure.

Despite these data, where different training models and different training regimes were used, the optimal way of incorporating simulation training in a surgical curriculum remains unclear.5–7

The most prevalent way of integrating simulation into the surgical curriculum worldwide is to offer several days of simulation training during surgical residency. This type of training is hereafter called interval simulation training. Some centers organize structured preclinical simulation training with an examination before surgical residency can start. An example of this is the well-validated Fundamentals of Laparoscopic Surgery in the US.8

We previously showed the benefit of preclinical structured simulation training over interval simulation training and no simulation training when students were evaluated with standard laboratory simulation exercises.9

However, the ultimate goal of a simulation program is to show transferability to the clinical setting, which means that improvement in real operative performance has to be detected after simulation training.

The goal of this pilot study was to compare the effect of interval and proficiency-based preclinical simulation programs on the real-life laparoscopic cholecystectomy learning curve of first-year residents with the learning curve of residents who were conventionally trained without any simulation training.

Materials and methods

Study design and creation of study groups

We performed a single-blind trial in KU Leuven Academic Medical Center and its affiliated hospitals. The Medical Educational Committee for Masters in Medicine approved this study. The use of animals for the training was approved by the Animal Ethics Committee of the KU Leuven. All experiments were performed following KU Leuven institutional and national guidelines and regulations. Informed consent from the students was not sought because this training program was part of their curriculum and all the students agreed.

Students in their last year of medical school and applying for a surgical residency in the subsequent academic year received either standard residency-based intraoperative learning through mentorship (control), intraoperative learning through mentorship interspersed with three 6-hour simulation training sessions (18 hours in total) in a laparoscopy skills laboratory (interval) during their residency, or preclinical laparoscopy training as a student following the multimodal, structured, and proficiency-based Center for Surgical Technologies Preclinical Training Program (CST PTP)10 course (also 18 hours in total) before 6 months of starting their residency.

A total of 30 final-year medical students (age 23–26 years) without earlier laparoscopic training experience were recruited for this study. All the students were allocated into three study groups after testing for spatial ability (Schlauchfiguren test), handedness (Oldfield questionnaire), and baseline laparoscopic psychomotor abilities (Southwestern drills).11–13 Instead of pure randomization, students from the same real clinical study group were kept together. No simulation training, other than the training corresponding to their study group, was allowed until the end of this study. The simulation training standardly incorporated in our residency program was given only at the end of the study period.

Training program

Control group

During their last months as medical students and their first year as residents in general surgery, no further laparoscopic or endoscopic simulation training was allowed. Their training continued in the traditional mentored method, in which the trainees were exposed to procedures under the guidance of an experienced surgeon.

Interval group

The clinical training was comparable with that of the control group. Furthermore, every 2 months during the first half-year, the trainees received 1 day of laparoscopy training in the laboratory (6 hours). Deliberate practice between the training sessions and after the last training session was allowed and tracked.

The three training sessions (a total of 18 hours) were structured as follows:

- Day 1: basic psychomotor exercises were practiced. Students learned the laparoscopic skills testing and training model exercises, checkerboard rope passing, and bean drop exercises from the Southwestern drills, together with the paperclip exercise and a needle trajectory exercise as described elsewhere.13–15

- Day 2: students practiced laparoscopic stitching and suturing on a skin pad and chicken skin. The Szabo suturing technique was learned, and polyfilament and monofilament sutures were used.16

- Day 3: hemostasis and dissection techniques were learned. During the morning sessions, students practiced on the cholecystectomy model in a pulsatile organ perfusion (POP) trainer.17 In the afternoon, students practiced a nephrectomy on a living rabbit model.18 During every training session, feedback on the students’ performance was given.

Preclinical group (CST PTP)

During their last months as medical students, subjects received structured, proficiency-based laparoscopy training following the CST PTP as described previously.10 The total amount of supervised laboratory training was 18 hours (as in the interval group), but the training was organized into three training blocks. Each training block consisted of four daily lessons of 1.5 hours. Training block one focused on psychomotor training, block two on laparoscopic stitching and suturing, and block three on laparoscopic dissection techniques and hemostasis. Deliberate practice was allowed. Block three consisted of an appendectomy exercise, a nephrectomy model, and a laparoscopic cholecystectomy model. The first two exercises were open for deliberate practice, whereas the cholecystectomy was not. The laparoscopic cholecystectomy (CCE) exercise was practiced only once (as in the interval training) and was as such not proficiency based. For all the other exercises, students had to show proficiency before the next training block was taught and to obtain a training certificate. After graduation, the students started their training as surgical residents. Their training continued in the traditional mentored method, that is, in the same way as in the control group. Neither further laparoscopic or endoscopic training nor deliberate practice was allowed.

Assessment

To assess the general exposure to surgical procedures among the study groups, during residency, all the procedures performed by the trainees, either as an operator or as an assistant operator, were logged in an online portfolio (www.medbook.be).19

At the beginning of their residency, all residents performed a cholecystectomy on a pulsatile organ perfusion trainer. The procedure was recorded, and afterward a single blinded observer calculated a Global Operative Assessment of Laparoscopic Skills (GOALS) score.20 The GOALS score is a validated global rating scale for laparoscopy and consists of five separate items (depth perception, bimanual dexterity, efficiency, tissue handling, and autonomy). Each of these items receives a score between 1 and 5.
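The item structure of the GOALS scale can be illustrated with a short sketch. It assumes that the overall score is the plain sum of the item ratings, which the text above does not state explicitly; the function and item names are hypothetical. The optional `omit` argument reflects the blinded video rating described later, in which the autonomy item was skipped.

```python
from typing import Iterable, Mapping

# The five GOALS items named in the text (identifiers are illustrative).
GOALS_ITEMS = (
    "depth_perception",
    "bimanual_dexterity",
    "efficiency",
    "tissue_handling",
    "autonomy",
)


def goals_total(ratings: Mapping[str, int], omit: Iterable[str] = ()) -> int:
    """Sum the item ratings (each 1-5), skipping any items listed in `omit`.

    Assumption: the total is an unweighted sum of the rated items.
    """
    skipped = set(omit)
    total = 0
    for item in GOALS_ITEMS:
        if item in skipped:
            continue
        score = ratings[item]
        if not 1 <= score <= 5:
            raise ValueError(f"{item} must be between 1 and 5, got {score}")
        total += score
    return total


# Example: a trainee rated 3 on every item.
example = {item: 3 for item in GOALS_ITEMS}
full_score = goals_total(example)                       # all five items
video_score = goals_total(example, omit=["autonomy"])   # four items only
```

Under this assumption the full scale ranges from 5 to 25 and the four-item version from 4 to 20.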

After 6 months of clinical training and assisting with at least five laparoscopic CCEs, all residents received a step-by-step explanation of a laparoscopic cholecystectomy on the Websurg platform.21,22 After that, their clinical CCE learning curve was registered.

Therefore, during their first five laparoscopic CCEs, residents were the first operators for the clip and cut of the cystic artery and duct and the dissection of the fundus of the gallbladder. Residents performed as much of these parts of the operation as the supervising staff surgeon deemed appropriate. This protocol was discussed intensively with all the participating staff surgeons in the different affiliated hospitals to ensure a standardized approach. Because some supervisors considered the dissection of Calot’s triangle as difficult for a first-year resident, that part of the operation was done by the staff surgeon himself/herself.

The inclusion criteria for patients eligible for this study are similar to those of an earlier study23 and are listed in Table 1. The study design is shown in Figure 1.

Table 1 Patient inclusion criteria for laparoscopic cholecystectomy within this study protocol. Abbreviation: BMI, body mass index.

Figure 1 Three training groups and flow diagram of the study. Abbreviations: CST PTP, Center for Surgical Technologies Preclinical Training Program; h, hours; CCE, cholecystectomies.

All cases were video recorded. A trained investigator, not blinded to randomization status, was present during the entire procedure as an independent observer in order to oversee video recording, record operative times, and measure the frequency and type (manual or verbal) of corrective interventions by the supervisor. The Imperial College Surgical Assessment Device, a motion-tracking device, was installed to track and calculate the number of movements and the distance traveled by the resident’s hands (path length). Motion data have been shown to correlate with the surgeon’s experience.24

Every trainee worked with his/her personal supervisor in his/her own training hospital. In all, 20 hospitals and 31 supervisors participated. All supervisors received specific information about the study protocol and were told to let the resident perform as much of the clip and cut task and the fundus dissection as was considered safe for the patient. The supervisors were allowed to give verbal feedback if necessary and could take over if they judged that the resident was not progressing safely.

The recording of operative time and motion data was standardized. Once Calot’s triangle was dissected by the supervisor, the resident took over the operation to clip and cut the artery and the duct. From the moment the resident entered his/her first instrument until both artery and duct were cut, time and motion data were measured. After completing this task, from the moment the resident started the fundus dissection until the gallbladder was completely dissected from the liver bed, time and motion data were registered. The time during which the supervisor took over was also registered.

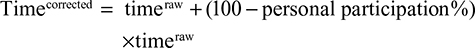

An earlier study noted that the operative time for poorly performing residents could be shorter than the time for better trainees because the supervising surgeon would perform a greater proportion of the procedure. It was estimated that a staff surgeon would be twice as fast as a resident. Therefore, the corrected time was introduced as an outcome parameter based on the following calculation:25

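The formula itself is not reproduced in this text. As a hedged reading only, and not necessarily the exact expression used in the cited study, the stated assumption that a staff surgeon works twice as fast as a resident implies that every minute operated by the supervisor would have cost the resident about two minutes. With the hypothetical symbols $t_{\text{res}}$ (time the resident operated), $t_{\text{sup}}$ (time the supervisor took over), and $t_{\text{raw}} = t_{\text{res}} + t_{\text{sup}}$ (recorded raw time), this gives:

```latex
t_{\text{corrected}} \;=\; t_{\text{res}} + 2\,t_{\text{sup}}
                     \;=\; t_{\text{raw}} + t_{\text{sup}}
```

For example, under this reading a task recorded at 10 minutes, of which the supervisor performed 3 minutes, would have a corrected time of 13 minutes.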

The recorded videos were edited and blinded, with clip and cut and fundus dissection as separate fragments. One expert in laparoscopic cholecystectomy, who had performed >7,000 laparoscopic CCEs and was an experienced teacher, was trained in assessing videos with the GOALS global rating scale. She scored the videos and was blinded to the randomization status. The scoring of the videos was done online with a simple web application (www.surgicalskillsrating.com). On this web platform, the expert filled in a GOALS score for each video fragment.26 Because it was difficult to rate autonomy on a blinded video, this part of the GOALS score was skipped, in analogy with the study by Beyer et al.27 This was possible because the validity of each separate item of the GOALS score has been shown. With a sliding bar, the blinded rater completed a visual analog scale of range 0–10 for the difficulty of the case.

The videos were presented in a random order and not in the chronological order of the CCEs performed by the resident. In this way, the observer was blinded to the identity of the trainee; his/her study group; if it was the first, second, third, fourth, or fifth CCE he/she performed; and which clip and cut fragment corresponded to which fundus dissection fragment.

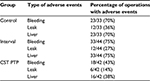

For fundus dissection, another blinded observer registered intraoperative adverse events using the following scale: bleeding (no, minor, moderate, major), liver damage (no, minor, moderate, major), and bile spillage (yes/no; Table 2). We used this scale to capture smaller adverse events because it would not have been possible to detect a significant difference in complications using the Clavien–Dindo classification for cholecystectomy, a procedure with a reported overall complication rate of ∼2%.

| Table 2 Description of adverse events |

Statistics

Binary variables are summarized through counts and proportions; continuous variables are summarized through the median and range.

We analyzed the primary outcome and learning curves for the three study groups with fixed-effects longitudinal regression models. Dependent variables of interest relating to operative performance were time, corrected time, GOALS, path length, and number of movements. Independent (predictor) variables were study group (control, interval, CST PTP), session as a longitudinal repeated measure (1, 2, 3, 4, and 5), and the interaction between study group and session to allow group-specific learning effects. For path length and number of movements, handedness was also included as a repeated measure (left vs right). The missing values were not imputed, given that unavailability of measurements occurred completely at random due to non-study-specific reasons (eg, loss of motion tracking data or video data due to equipment failure). Overall, 21% of the values were missing (21% in the control group, 17% in the interval group, 25% in the CST PTP group). Based on checks of model assumptions, dependent variables were transformed logarithmically. Consequently, regression coefficients can be interpreted in terms of percent change, eg, if the regression coefficient for CST PTP vs control is 0.10, then exp(0.10) = 1.11, which suggests on average 11% higher values for CST PTP vs control subjects. We assumed a linear effect of session number due to the limited sample size. As output, we report 1) the expected value at baseline for each group as well as the percent difference between the CST PTP group and the control and interval groups and 2) the learning effect for each group (the percent change in the dependent variable per extra session) together with the percent difference between the CST PTP group and the control and interval groups.
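The percent-change interpretation of coefficients on the log scale can be made concrete with a minimal sketch. The function names and the numbers in the usage lines are illustrative only and are not taken from the study data; the second helper assumes, as the text does, a linear effect of session number on the log scale with session 1 as the baseline.

```python
import math


def percent_change(coef: float) -> float:
    """Percent change in the outcome implied by a coefficient on the log scale."""
    return (math.exp(coef) - 1.0) * 100.0


def expected_value(baseline: float, coef_per_session: float, session: int) -> float:
    """Expected outcome at a given session under a log-linear learning effect.

    `baseline` is the expected value at session 1; each extra session
    multiplies the outcome by exp(coef_per_session).
    """
    return baseline * math.exp(coef_per_session * (session - 1))


# A coefficient of 0.10 corresponds to a multiplicative factor of exp(0.10).
factor = math.exp(0.10)
change = percent_change(0.10)

# Illustrative learning curve: 10% improvement (shorter time) per operation.
per_session = math.log(0.90)
third_operation = expected_value(100.0, per_session, 3)
```

Here `factor` is about 1.105, which the text rounds to 1.11 and reports as roughly 11% higher values.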

We analyzed the presence of adverse events with multivariable logistic regression using the following independent variables: group (control, interval, and CST PTP), number of operation (1–5), interaction between group and session of operation, and type of adverse event (bleeding vs bile leak vs liver damage). The number of operations and type of adverse events are repeated measures variables, resulting in 15 observations per subject. Based on this model, we estimated the marginal difference in adverse event rates between groups, ie, with the other covariates averaged out. A similar approach was followed to analyze the manual and oral interventions of the supervisor. This analysis used the following independent variables: group (control, interval, and CST PTP), number of operations (1–5, repeated measures), interaction between group and number of operations, clip and cut vs fundus (binary, repeated measures), and type of feedback (manual vs oral, repeated measures).
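The repeated-measures layout behind the adverse event model, five operations times three event types per resident, can be sketched as follows. This is only an illustration of the data structure: the field names are hypothetical, and each event is reduced to a binary indicator, which is an assumption about how the graded bleeding and liver damage scales were entered into the logistic model.

```python
from itertools import product

OPERATIONS = range(1, 6)                                # operations 1 to 5
EVENT_TYPES = ("bleeding", "bile_leak", "liver_damage")  # illustrative labels


def long_format(resident_id, group, events):
    """Build one row per (operation, event type) for a single resident.

    `events` is a set of (operation, event_type) pairs in which an
    adverse event was observed; all other combinations are coded 0.
    """
    return [
        {
            "resident": resident_id,
            "group": group,
            "operation": op,
            "event_type": ev,
            "occurred": int((op, ev) in events),
        }
        for op, ev in product(OPERATIONS, EVENT_TYPES)
    ]


# Hypothetical resident with bleeding during the first operation only.
rows = long_format("R01", "control", {(1, "bleeding")})
n_rows = len(rows)
```

Each resident with complete data contributes 15 rows; residents with missing operations would contribute fewer.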

Instead of using P-values to evaluate the results, we focused on the estimation of effect sizes with confidence intervals (CIs).28,29 The statistical analysis was performed using SAS 9.3 (SAS Institute Inc., Cary, NC, USA).

Results

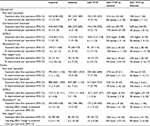

The three study groups were similar with respect to baseline characteristics, the result of the POP cholecystectomy exercise at the beginning of residency, and exposure to laparoscopic procedures during their first 6 months of clinical training (Table 3).

Although they were allowed to practice after initial instruction, none of the students in the interval group did deliberate practice during their first 6 months of residency or during the next 6 months. The mean preclinical deliberate practice time in the CST PTP group (before the start of their residency) was 5 hours and 7 minutes.

Two of the CST PTP residents who got a training certificate were not allowed to take part in the clinical evaluation study because of administrative reasons. All residents performed at least three laparoscopic CCEs. For the fourth laparoscopic CCE, there were five dropouts: 2/10 in the interval, 1/8 in the control, and 2/10 in the CST PTP group. For the fifth operation, there were 12 dropouts (three interval, five control, four CST PTP; Figure 1). These dropouts occurred because the residents could not complete all five laparoscopic CCEs within their first year as a resident.

As shown in Table 4, there was no difference in difficulty of the presented cases between the three study groups.

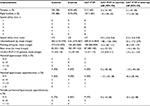

The odds of feedback were 6.6 times lower for the CST PTP group compared to the control group (odds ratio [OR] 6.6, 95% CI 2.1–20.8) and were 7.5 times lower for the CST PTP group compared to the interval group (95% CI 2.6–21.3). The observed feedback rates show that the difference is larger for manual feedback than for verbal feedback (Table 5).

Table 4 Difficulty of presented cases. Abbreviations: SE, standard error; CI, confidence interval; CST PTP, Center for Surgical Technologies Preclinical Training Program.

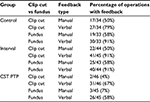

The odds of adverse events were 4.5 times lower for the CST PTP group compared to the control group (OR 4.5, 95% CI 1.3–15.3) and were 3.9 times lower for the CST PTP group compared to the interval group (95% CI 1.5–9.7). The observed adverse event rates show that, for all types of adverse events, the rate in the other groups was about twice that in the CST PTP group (Table 6).
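The phrase "4.5 times lower" and an odds ratio of 4.5 describe the same comparison from opposite directions. The sketch below assumes the reported OR expresses the comparison group relative to the CST PTP group and shows how the reciprocal gives the CST PTP group relative to the comparison group; the function name is hypothetical.

```python
def invert_odds_ratio(or_value: float, ci_low: float, ci_high: float):
    """Re-express an odds ratio and its CI with the reference group swapped.

    Taking reciprocals reverses the order of the interval bounds.
    """
    return 1.0 / or_value, 1.0 / ci_high, 1.0 / ci_low


# Reported values for control vs CST PTP: OR 4.5, 95% CI 1.3-15.3.
or_cst, low_cst, high_cst = invert_odds_ratio(4.5, 1.3, 15.3)
```

Read this way, the odds of an adverse event in the CST PTP group are roughly 0.22 times those in the control group, with an interval of about 0.07 to 0.77.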

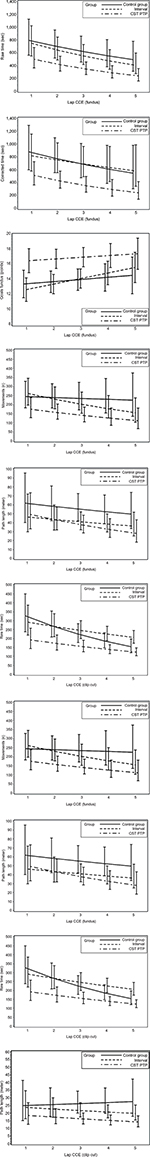

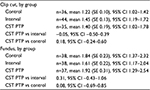

For raw time, corrected time, number of movements, path length, and GOALS, the CST PTP trainees always started at a better level than other groups (except versus the interval group for path length of fundus) and were never outperformed by the other trainees after five operations (Figure 2). An overview of the results of the longitudinal regression analysis is listed in Table 7, which summarizes the models using two quantities: the expected value at the first operation and the percentage improvement per operation (ie, the learning effect). For both quantities, comparisons of the CST PTP group with the other two groups are provided.

Discussion

Several studies have shown that residents often do not feel fully prepared to perform laparoscopy on their own once they have finished their training.30–33 An evidence-based training curriculum can overcome this problem. An ideal training curriculum would tailor the time needed for each individual to be competent in a given skill upon completion of a preclinical training program so that no wasteful hours have to be spent in the operating room with repetitive motions without benefit.34

In this study, we have shown the positive impact of preclinical proficiency-based laparoscopic simulation training on the real-life surgical learning curve of a laparoscopic cholecystectomy.

Interval simulation training did not show a clear benefit over standard apprenticeship training.

Preclinical-trained residents performed the procedures more quickly and more accurately, caused fewer adverse events, and needed fewer takeovers by their supervisor. Furthermore, their learning curve was better: for all parameters, the curve started at a better level, the slope was less steep, and the curve was never crossed by the learning curves of other groups.

Previously, we have shown that CST PTP-trained registrars outperformed their conventionally trained peers with standardized simulation tasks.9

Surgical skill has been shown to be a strong predictor of clinical outcome.35 In the current study too, the preclinical-trained residents not only had better skills but also had fewer intraoperative adverse events.

Why do the preclinical-trained residents perform better?

In both simulation-trained groups (interval and CST PTP), the total time of supervised training was 18 hours. Apart from the CST PTP training being done preclinically, the major difference between the two study groups was that the CST PTP training was proficiency based, structured, and distributed, whereas the interval training was more time based. Both groups were offered deliberate practice; however, only the preclinical-trained residents found the time and motivation to actually perform deliberate practice. Previous research has shown that residents often do not find the time to perform deliberate practice, especially when they are not obliged to.36,37

The key finding of this study is that repeated exposure and competency-based progression (albeit time limited) are superior to the interval method. This is consistent with other works in this field. Training until proficiency, distributed training, and deliberate practice have all been shown to be important factors in gaining the most benefit from a simulation program.15,38–40 However, implementing these fundamentals into a real curriculum during residency is extremely difficult. For this reason, structured preclinical courses are developed. Some trainers may be reluctant or may believe that simulation sessions given preclinically come too early in the curriculum, but the current study clearly shows their benefit.

All groups showed a progressive learning curve over subsequent procedures. This finding shows that simulation cannot replace surgical experience but is a useful adjunct to traditional methods.

The ultimate goal of implementing simulation-based training in surgery is to provide a complementary experience that accelerates the clinical learning curve. Only the preclinical proficiency-based CST PTP group reached this goal, as these trainees had a better clinical start and were never outperformed by their peers.

While most previous studies evaluate a specific simulator and a specific surgical task, our program was more generic. It was not our aim to prepare our trainees for a specific procedure but to help them acquire most of the skills needed to perform general laparoscopic procedures. Therefore, we expect that the learning curves of other laparoscopic procedures will also be accelerated. This is the first study that compares proficiency-based and time-based training and includes a real apprenticeship control group. Furthermore, transfer of skills is measured by evaluating the initial part of the learning curve (rather than one specific procedure) and combining a global rating scale, performance metrics, and operative adverse events as outcome measures in a real-life human operative setting. Therefore, it addresses many shortcomings of earlier studies as identified in a recent review on transfer of skills.2

Our findings should be interpreted in light of some limitations of this study.

It is very difficult to standardize patient cases. However, our inclusion criteria for patients were strict so that we expected only easy cases to be used for the clinical evaluation. Furthermore, the score for difficulty of the cases was not different between the study groups. Trainees had to dissect the complete gallbladder from the liver bed; however, the size of the gallbladders could not be standardized, so the length over which the gallbladder fundus had to be dissected differed between cases. Also, different clip appliers were used in different hospitals. However, we are convinced that these factors did not influence most outcome measurements (GOALS and motion tracking).

Another negative point is the dropout rate. Indeed, we were not capable of registering all five laparoscopic CCEs per trainee. This was not due to participant dropout. The logistics of recording 150 procedures in 20 different hospitals during the first year of residency were significant, and in certain cases, it was impossible to record them all within that first year. Also, only one blinded rater scored all the videos.

A cholecystectomy was used for training (animal) and evaluation (human), but we believe this was not a major drawback, since this training exercise was not open to deliberate practice. Moreover, as mentioned before, the cholecystectomy model was more a tool for training and evaluating general laparoscopic skills.

Because of the small sample size, this study can be considered as a pilot study. A follow-up study on a larger scale across different training centers would be interesting, but logistical difficulties make this kind of study hard to organize.

Apart from these limitations and the fact that we did not study the long-term effects of preclinical training (ie, only the beginning of the learning curve was evaluated), we believe that this study significantly underlines the importance of preclinical structured proficiency-based laparoscopic simulation training, and we suggest adapting the current simulation curriculum based on our findings.

Acknowledgments

Many thanks to Ivan Laermans from the Centre for Surgical Technologies who provided help in organizing these training sessions and evaluations. We thank Professor Broos, head of the Department of General Surgery at the time of this study. We also acknowledge and thank all the residents who took part in this project as well as all the surgical trainers who agreed to let their residents take part in this study protocol (Doctor Bosmans, Doctor Caluwé, Doctor Cardoen†, Doctor Cheyns, Doctor Coninck, Doctor Decoster, Doctor Devos, Doctor Geyskens, Doctor Geys, Doctor Janssens, Doctor Kempeneers, Doctor Lafullarde, Doctor Leman, Doctor Meekers, Doctor Meir, Doctor Mulier, Doctor Pattyn, Doctor Poelmans, Doctor Poortmans, Doctor Servaes, Doctor Sirbu, Doctor Smet, Doctor Topal, Doctor Vanclooster, Doctor Van Der Speeten, Doctor Van De Moortel, Doctor Vierendeels, Doctor Vuylsteke). We would also like to thank Robbi Struyf and Jiri Vermeulen for their help with the design of Figure 1. This study was funded by a grant from KU Leuven, University of Leuven, Educational Research, Development, and Implementation Projects (00I/2005/39). This research was presented at the joint International Surgical Congress of the ASGBI and ICOSET on April 30, 2014–May 2, 2014 in Harrogate, UK, and the abstract has been published in Volume 102, Issue S1, pages 9–118 of British Journal of Surgery in January 2015.

Disclosure

The authors report no other conflicts of interest in this work.

References

Zendejas B, Brydges R, Hamstra SJ, Cook DA. State of the evidence on simulation-based training for laparoscopic surgery: a systematic review.Ann Surg. 2013;257(4):586–593. | ||

Buckley CE, Kavanagh DO, Traynor O, Neary PC. Is the skillset obtained in surgical simulation transferable to the operating theatre? Am J Surg. 2014;207(1):146–157. | ||

Palter VN, Grantcharov TP. Individualized deliberate practice on a virtual reality simulator improves technical performance of surgical novices in the operating room: a randomized controlled trial. Ann Surg. 2014;259(3):443–448. | ||

Singh P, Aggarwal R, Pucher PH, et al. An immersive ‘simulation week’ enhances clinical performance of incoming surgical interns improved performance persists at 6 months follow-up. Surgery. 2015;157(3):432–442. | ||

Palter VN, Orzech N, Reznick RK, Grantcharov TP. Validation of a structured training and assessment curriculum for technical skill acquisition in minimally invasive surgery: a randomized controlled trial. Ann Surg. 2013;257(2):224–230. | ||

Stefanidis D, Arora S, Parrack DM, et al. Association for Surgical Education Simulation Committee. Research priorities in surgical simulation for the 21st century. Am J Surg. 2012;203(1):49–53.

Stefanidis D, Hope WW, Korndorffer JR, Markley S, Scott DJ. Initial laparoscopic basic skills training shortens the learning curve of laparoscopic suturing and is cost-effective. J Am Coll Surg. 2010;210(4):436–440.

Ritter EM, Scott DJ. Design of a proficiency-based skills training curriculum for the fundamentals of laparoscopic surgery. Surg Innov. 2007;14(2):107–112.

De Win G, Van Bruwaene S, Aggarwal R, et al. Laparoscopy training in surgical education: the utility of incorporating a structured preclinical laparoscopy course into the traditional apprenticeship method. J Surg Educ. 2013;70(5):596–605.

De Win G, Van Bruwaene S, Allen C, De Ridder D. Design and implementation of a proficiency-based, structured endoscopy course for medical students applying for a surgical specialty. Adv Med Educ Pract. 2013;4:103–115.

FEHC SH. Schlauchfiguren: Ein Test zur Beurteilung des räumlichen Vorstellungsvermögens. Göttingen: Hogrefe; 1983.

Oldfield RC. The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia. 1971;9(1):97–113.

Scott DJ, Bergen PC, Rege RV, et al. Laparoscopic training on bench models: better and more cost effective than operating room experience? J Am Coll Surg. 2000;191(3):272–283.

Molinas CR, De Win G, Ritter O, Keckstein J, Miserez M, Campo R. Feasibility and construct validity of a novel laparoscopic skills testing and training model. Gynecol Surg. 2008;5(4):281–290.

De Win G, Van Bruwaene S, De Ridder D, Miserez M. The optimal frequency of endoscopic skill labs for training and skill retention on suturing; a randomized controlled trial. J Surg Educ. 2013;70(3):384–393.

Szabo Z, Hunter J, Berci G, Sackier J, Cuschieri A. Analysis of surgical movements during suturing in laparoscopy. Endosc Surg Allied Technol. 1994;2(1):55–61.

Szinicz G, Beller S, Bodner W, Zerz A, Glaser K. Simulated operations by pulsatile organ-perfusion in minimally invasive surgery. Surg Laparosc Endosc. 1993;3(4):315–317.

Molinas CR, Binda MM, Mailova K, Koninckx PR. The rabbit nephrectomy model for training in laparoscopic surgery. Hum Reprod. 2004;19(1):185–190.

Peeraer G, Van Humbeeck B, De Leyn P, et al. The development of an electronic portfolio for postgraduate surgical training in Flanders. Acta Chir Belg. 2015;115:68–75.

Gumbs AA, Hogle NJ, Fowler DL. Evaluation of resident laparoscopic performance using global operative assessment of laparoscopic skills. J Am Coll Surg. 2007;204(2):308–313.

Mutter D, Marescaux J [webpage on the Internet]. Standard laparoscopic cholecystectomy; 2004;4(12). Available from: http://www.websurg.com/doi-vd01en1608e.htm. Accessed May 4, 2016.

Mutter D [webpage on the Internet]. Laparoscopic cholecystectomy for symptomatic cholelithiasis with or without cholangiogram; 2001;1(02). Available from: http://www.websurg.com/doi-ot02en011.htm. Accessed May 4, 2016.

Aggarwal R, Grantcharov T, Moorthy K, Milland T, Darzi A. Toward feasible, valid, and reliable video-based assessments of technical surgical skills in the operating room. Ann Surg. 2008;247(2):372–379.

Moorthy K, Munz Y, Dosis A, Bello F, Darzi A. Motion analysis in the training and assessment of minimally invasive surgery. Minim Invasive Ther Allied Technol. 2003;12(3):137–142.

Zendejas B, Cook DA, Bingener J, et al. Simulation-based mastery learning improves patient outcomes in laparoscopic inguinal hernia repair: a randomized controlled trial. Ann Surg. 2011;254(3):502–509. [discussion 509–511].

Vassiliou MC, Feldman LS, Andrew CG, et al. A global assessment tool for evaluation of intraoperative laparoscopic skills. Am J Surg. 2005;190(1):107–113.

Beyer L, Troyer JD, Mancini J, Bladou F, Berdah SV, Karsenty G. Impact of laparoscopy simulator training on the technical skills of future surgeons in the operating room: a prospective study. Am J Surg. 2011;202(3):265–272.

Ioannidis JP. Why most published research findings are false. PLoS Med. 2005;2(8):e124.

Nuzzo R. Scientific method: statistical errors. Nature. 2014;506(7487):150–152.

De Win G, Everaerts W, De Ridder D, Peeraer G. Laparoscopy training in Belgium: results from a nationwide survey in urology, gynecology and general surgery residents. Adv Med Educ Pract. 2015;6:55–63.

Schijven MP, Berlage JTM, Jakimowicz JJ. Minimal-access surgery training in the Netherlands: a survey among residents-in-training for general surgery. Surg Endosc. 2004;18(12):1805–1814.

Navez B, Penninckx F. Laparoscopic training: results of a Belgian survey in trainees. BGES. Acta Chir Belg. 1999;99(2):53–58.

Qureshi A, Vergis A, Jimenez C, et al. MIS training in Canada: a national survey of general surgery residents. Surg Endosc. 2011;25(9):3057–3065.

Gallagher AG, Ritter EM, Champion H, et al. Virtual reality simulation for the operating room. Ann Surg. 2005;241(2):364–372.

Birkmeyer JD, Finks JF, O’Reilly A, et al; Michigan Bariatric Surgery Collaborative. Surgical skill and complication rates after bariatric surgery. N Engl J Med. 2013;369(15):1434–1442.

Chang L, Petros J, Hess DT, Rotondi C, Babineau TJ. Integrating simulation into a surgical residency program: is voluntary participation effective? Surg Endosc. 2007;21(3):418–421.

van Dongen KW, van der Wal WA, Rinkes IH, Schijven MP, Broeders IA. Virtual reality training for endoscopic surgery: voluntary or obligatory? Surg Endosc. 2008;22(3):664–667.

Korndorffer JR Jr, Dunne JB, Sierra R, Stefanidis D, Touchard CL, Scott DJ. Simulator training for laparoscopic suturing using performance goals translates to the operating room. J Am Coll Surg. 2005;201(1):23–29.

Stefanidis D, Korndorffer JR Jr, Sierra R, Touchard C, Dunne JB, Scott DJ. Skill retention following proficiency-based laparoscopic simulator training. Surgery. 2005;138(2):165–170.

Crochet P, Aggarwal R, Dubb SS, et al. Deliberate practice on a virtual reality laparoscopic simulator enhances the quality of surgical technical skills. Ann Surg. 2011;253(6):1216–1222.

© 2016 The Author(s). This work is published and licensed by Dove Medical Press Limited. The full terms of this license are available at https://www.dovepress.com/terms.php and incorporate the Creative Commons Attribution - Non Commercial (unported, v3.0) License.

By accessing the work you hereby accept the Terms. Non-commercial uses of the work are permitted without any further permission from Dove Medical Press Limited, provided the work is properly attributed. For permission for commercial use of this work, please see paragraphs 4.2 and 5 of our Terms.