Journal of Healthcare Leadership, Volume 7

Use of CAHPS® patient experience survey data as part of a patient-centered medical home quality improvement initiative

Authors Quigley D, Mendel P, Predmore Z, Chen AY, Hays R

Received 27 March 2015

Accepted for publication 6 May 2015

Published 7 July 2015, Volume 2015:7, Pages 41–54

DOI https://doi.org/10.2147/JHL.S69963

Review by Single anonymous peer review

Editor who approved publication: Professor Russell Taichman

Denise D Quigley,1 Peter J Mendel,1 Zachary S Predmore,2 Alex Y Chen,3 Ron D Hays4

1RAND Corporation, Santa Monica, CA, 2RAND Corporation, Boston, MA, 3AltaMed Health Services Corporation, 4Division of General Internal Medicine and Health Services Research, UCLA, Los Angeles, CA, USA

Objective: To describe how practice leaders used Consumer Assessment of Healthcare Providers and Systems (CAHPS®) Clinician and Group (CG-CAHPS) data in transitioning toward a patient-centered medical home (PCMH).

Study design: Interviews conducted at 14 primary care practices within a large urban Federally Qualified Health Center in California.

Participants: Thirty-eight interviews were conducted with lead physicians (n=13), site clinic administrators (n=13), nurse supervisors (n=10), and executive leadership (n=2).

Results: Seven themes were identified on how practice leaders used CG-CAHPS data for PCMH transformation. CAHPS® was used: 1) for quality improvement (QI) and focusing changes for PCMH transformation; 2) to maintain focus on patient experience; 3) alongside other data; 4) for monitoring site-level trends and changes; 5) to identify, analyze, and monitor areas for improvement; 6) for provider-level performance monitoring and individual coaching within a transparent environment of accountability; and 7) for PCMH transformation, but changes to instrument length, reading level, and the wording of specific items were suggested.

Conclusion: Practice leaders used CG-CAHPS data to implement QI, develop a shared vision, and coach providers and staff on performance. They described how CAHPS® helped to improve the patient experience in the PCMH model, including access to routine and urgent care, wait times, providers spending enough time and listening carefully, and courteousness of staff. Regular reporting, review, and discussion of patient-experience data alongside other clinical quality and productivity measures at multiple levels of the organization was critical to maximizing the use of CAHPS® data as PCMH changes were made. In sum, this study found that a system-wide accountability and data-monitoring structure relying on a standardized and actionable patient-experience survey, such as CG-CAHPS, is key to supporting the continuous QI needed for moving beyond formal PCMH recognition to maximizing primary care medical home transformation.

Keywords: PCMH, performance improvement, accountability, CAHPS®

Introduction

The patient-centered medical home (PCMH) has gained momentum as a model for primary care reform and as a response to high costs and suboptimal outcomes of the US health care system.1 The Patient Protection and Affordable Care Act (HR3590) includes funding for federal PCMH demonstration programs, and PCMH implementation is underway in a wide variety of settings.2,3 A comprehensive PCMH model deviates from more traditional models of care by striving to: 1) deliver whole-person, coordinated care to transform primary care into “what patients want it to be”; 2) value clinician–patient relationships and continuity to keep patients healthy between visits; 3) support “team-based care” freeing providers to work to their highest level of training; and 4) align use of information technology to support the triple aim of minimizing cost and maximizing quality and patient experience (refer to: http://www.ncqa.org/Programs/Recognition/Practices/PatientCenteredMedicalHomePCMH.aspx).

Implementation of PCMH requires changes to nearly every aspect of primary care practice, including clinical care, operations, administrative processes, quality measurement, and staff relationships.4,5 Full transformation may take years to achieve6 and requires resources from leaders and staff.7 For most primary care practices, adopting the PCMH model will entail not only significant redesign but also a fundamental shift in orientation and practice culture.1

In March 2014, the National Committee for Quality Assurance (NCQA) released an updated version of its standards for primary care practices seeking formal recognition as a PCMH. The sixth standard, pertaining to performance measurement and quality improvement (QI), requires medical practices to measure patient/family experience and to use these data to implement continuous QI. However, little information is available on how primary care practices approach and use patient-experience data in PCMH transformation.

This paper examines a large, multisite Federally Qualified Health Center (FQHC) in a large metropolitan area that initiated a corporate-wide PCMH transformation effort and administered the visit version of the Consumer Assessment of Healthcare Providers and Systems (CAHPS®) Clinician and Group survey (CG-CAHPS). The analyses explore how the sites used these CG-CAHPS patient-experience data in their PCMH efforts.

Background

A critical element of the medical home is “a commitment to quality and QI by ongoing engagement in activities such as using evidence-based medicine and clinical decision-support tools to guide shared decision making with patients and families, engaging in performance measurement and improvement, measuring and responding to patient experiences and patient satisfaction, and practicing population health management”.8 The NCQA delineates this relationship between QI and the PCMH model in its sixth recognition standard for performance and QI, which includes measuring clinical quality, resource use and care coordination, and patient/family experience; implementing and demonstrating continuous QI (a must-pass element); reporting performance; and using certified electronic health-record technology.

The use of patient-experience surveys for QI is common in the health care industry because such surveys are considered essential for achieving patient-centered care.9–12 Health care organizations have had mixed success implementing patient-centeredness.10,13,14 Patient-experience data can be important to system transformation when physicians and practice administrators use and act on the data.9,12,15,16

CAHPS surveys were designed to use information from the patient’s perspective on care to improve its quality and make it more patient focused.17 The CAHPS surveys are now the US standard for information about patient experience of care because of their reliability, content, and validity.18–20 The CG-CAHPS survey asks patients to report about the quality of care received in physicians’ offices and can provide comparative information on individual clinicians, practice sites, and medical groups, as well as facilitate consumer choice, and inform and guide QI.21,22

Our analyses identify and describe a range of uses of CAHPS patient-experience data among sites within a large FQHC pursuing continuous QI and PCMH transformation.

Methods

Setting

At the time of this study, the FQHC had 26 primary care practices employing more than 100 providers and receiving nearly 1 million patient visits annually. Four years prior to this study, the FQHC’s new chief medical officer introduced two improvement initiatives – one on a robust quality monitoring and feedback system and the other on PCMH practice transformation.

Characteristics of the FQHC Corporate Quality Structure and PCMH Initiative

Quality-monitoring system

The quality-monitoring system marked a corporate-wide shift from a focus on productivity (patients seen, cycle time) to measuring quality performance more broadly and using these data for improvement. The quality metrics are divided into two domains – technical quality and patient experience. In June 2012, the FQHC replaced its homegrown patient-satisfaction survey with the CG-CAHPS visit survey 2.0, supplemented by several additional questions. CG-CAHPS was administered every month to a continuous random sample of patients, with the aim of obtaining 30 completed surveys per physician, and asked patients about their most recent visit. No household was surveyed more than once within a 6-month period. The instrument was administered in English and Spanish to adults (patient or parent respondent) across general medical and pediatric primary care sites. Some sites supplemented the CAHPS data with other patient-experience information, including informal patient feedback, review of complaints, and patient interviews or invitations for patients to participate in site quality and management meetings.
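
The monthly sampling rules described above can be sketched as a simple selection routine. This is a minimal illustration under stated assumptions, not the FQHC's or its survey vendor's actual procedure: the `Visit` record, the `draw_monthly_sample` helper, the per-physician `quota` parameter, and the 182-day approximation of the 6-month household exclusion are all hypothetical.

```python
import random
from collections import Counter
from dataclasses import dataclass
from datetime import date, timedelta

# Assumption: the "6-month" household exclusion is approximated as 182 days.
EXCLUSION_WINDOW = timedelta(days=182)


@dataclass(frozen=True)
class Visit:
    visit_id: str
    household_id: str
    physician_id: str
    visit_date: date


def draw_monthly_sample(visits, last_surveyed, quota, seed=None):
    """Hypothetical helper: pick this month's visits to survey.

    visits        -- primary care visits in the sampling month
    last_surveyed -- dict mapping household_id to the date it was last surveyed
    quota         -- surveys to field per physician (hypothetical; the FQHC aimed
                     for 30 *completed* surveys, so fielding must allow for nonresponse)
    """
    rng = random.Random(seed)
    pool = list(visits)
    rng.shuffle(pool)  # random order, so selection among eligible visits is random

    per_physician = Counter()
    households_this_month = set()
    sample = []
    for v in pool:
        if per_physician[v.physician_id] >= quota:
            continue  # this physician's quota is already filled
        if v.household_id in households_this_month:
            continue  # at most one survey per household this month
        last = last_surveyed.get(v.household_id)
        if last is not None and v.visit_date - last < EXCLUSION_WINDOW:
            continue  # household was surveyed within the exclusion window
        sample.append(v)
        per_physician[v.physician_id] += 1
        households_this_month.add(v.household_id)
    return sample
```

Because the household check runs after the shuffle, which of two visits from the same household gets surveyed is itself random; a real implementation would also need to update `last_surveyed` once surveys are fielded.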

The Healthcare Effectiveness Data and Information Set (HEDIS®) measures, CAHPS data, and productivity indicators are reported monthly by the corporate quality staff for each site and physician, with a comparison to the aggregated total for the previous 6 months. These reports are then reviewed in a series of monthly meetings: at each site with corporate leadership; in regional meetings of site medical directors to benchmark performance and share best practices; in meetings between site medical directors and individual providers; and at leadership and staff meetings at each site.

Accountability is based on quarterly goals and targets, largely determined by corporate leaders using national benchmarks. Sites have relatively broad discretion on how to achieve targets and are able to identify additional areas of low performance they would like to address or particular issues to elevate to corporate attention. The FQHC also provided financial incentives for attaining targets. Provider incentive bonuses are weighted 70% on HEDIS indicators (35% clinical quality measures and 35% CAHPS measures), 20% productivity (number of patients seen), and 10% resource use (eg, imaging orders, ER visits, hospital admissions). The CAHPS portion is based on the overall provider rating and the provider communication composite.
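
The bonus weighting above can be written as a composite score. This is a sketch under assumptions the paper does not state: each component is scored as the fraction $s_k \in [0,1]$ of its target attained, the total is scaled by a hypothetical maximum bonus $B_{\max}$, and $s_{\mathrm{CAHPS}}$ summarizes the overall provider rating and the provider communication composite.

$$
B = B_{\max}\left(0.35\,s_{\mathrm{clin}} + 0.35\,s_{\mathrm{CAHPS}} + 0.20\,s_{\mathrm{prod}} + 0.10\,s_{\mathrm{res}}\right), \qquad s_k \in [0,1]
$$

The weights sum to 1.00. Under this reading, a provider who fully met the clinical quality and CAHPS targets but none of the productivity or resource-use targets would receive $0.35 + 0.35 = 0.70$ of the maximum bonus, and patient experience alone would account for up to 35% of it.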

PCMH transformation program

All primary care sites attained NCQA’s PCMH recognition in 2012 (Level 3, based on the NCQA 2011 standards). Each site prepared its application separately but corporate staff managed the submission process.

The corporate PCMH program focused on several specific PCMH components that existing QI programs did not directly address and that had been identified as general gaps relative to the PCMH model: daily huddles, care management, self-management support, referral tracking, and coordination. To address these components, corporate leadership provided resources and coordinated the use of several new types of staff, including care managers, referral coordinators, and clinical pharmacists. Some of these new staff members were located at individual sites, some were shared among several sites, and some were based at corporate offices. Corporate leadership instituted a centralized call-scheduling function and extended office hours, which at a few sites included certified urgent-care services.

Corporate leadership also recommended that sites institute a daily huddle between each physician and their medical assistants (MAs). Daily huddles are team or cross-functional meetings focused on process status and the identification of issues. Most physicians huddled with their MAs as “team-letts”; however, at some clinics, physicians also huddled with their whole PCMH office team (front-office staff, MAs, providers, the care manager, and sometimes the nurse supervisor), and at two of the large sites, there were also clinic-wide huddles (approximately 10 minutes) before the shift started, after which each team huddled with its provider “team-lett”. The clinic-wide huddle included the front-office staff, back-office staff, providers, and all other staff who were present; participants reviewed the day’s schedule, the volume of patients for each provider, staff who were out, and reports from the previous day.

In all provider team huddles, the “team-lett” reviewed the schedule and prepared for the day. Each huddle had a logistical component, identifying the patients and services scheduled for the day, followed by a more substantive, proactive discussion of patients’ needs (such as laboratory tests, education, immunizations, and HEDIS measures) and a clinical component focused on coordinating care and addressing issues for chronically ill patients. Most physicians held a huddle at the beginning of the day, but a few also described touching base as a “team-lett” in the middle of the day and checking in at the end of the day to prepare for the next day. Huddle practices therefore varied somewhat from provider to provider and across sites. Most huddles were reported to have shortened to 5–10 minutes, although many respondents said they had lasted approximately 15 minutes when first implemented.

The FQHC has continued to track particular functions of the PCMH-related staff (eg, referral-coordination performance, case-management loads) and periodically reviews the PCMH program. Most sites track “team huddle” implementation by having physicians and MAs initial their daily huddle logs, while some sites also survey providers on preferences for scheduling and how to improve patient flow.

Design

Semistructured individual interviews were conducted in October and November 2014. Interview guides were developed using literature on PCMH, continuous QI, and practice transformation in primary care. Four standardized interview guides were developed for 1) lead physicians (site medical directors), 2) site clinic administrators (in charge of practice operations), 3) nurse supervisors (in charge of back-office clinical staff), and 4) corporate executive leadership at the FQHC. Interview content was similar across all four guides, with additional questions on staffing for administrators. To frame the context of the interview, participants were first asked to describe their understanding of the PCMH model of care. They were then guided through a semistructured interview about their experiences implementing the PCMH model at their practice (eg, personal history, changes made, the process of practice transformation, corporate support and resources, challenges) and about how they monitor and collect data, including their opinions on the usefulness of their CG-CAHPS patient-experience survey. The interview ended with a question about lessons learned from the transformation experience.

All interviews were conducted by phone. Individuals consented during the interview process and were given an honorarium ($50 to nonphysicians and $100 to physicians). Because physicians are typically harder to recruit for interviews than nonphysicians, they were offered a higher honorarium to encourage participation. Interviews lasted approximately 50 minutes each. All interviews were audio recorded, and field notes were transcribed.

Analysis

Transcripts were entered into ATLAS.ti, a software package for organizing, coding, and managing complex qualitative data throughout the analytic process. The team developed a code structure using systematic, inductive procedures to generate insights from participant responses.23 A grounded-theory approach was used to analyze the data.24 Grounded theory involves the iterative development of theories about the data, building themes that emerge from the “ground”, that is, from responses to open-ended questions.24,25 Individual team members independently coded early transcripts, noting topics that emerged from the data. In team meetings, members explored the data to reach consensus on emerging topics and codes, identified discrepancies, refined concepts, and defined the preliminary codes for analysis.26 The preliminary codebook was refined and finalized through the same process.27 Coders suggested new codes for the codebook; the full analysis team discussed codebook additions or refinements and decided on them by consensus. The data presented describe the themes that emerged from the analysis.

Results

FQHC practice site characteristics

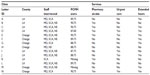

Table 1 shows practice site characteristics, and Table 2 lists staffing. Fourteen primary care practice sites serving adult and pediatric patients were included in this study. Six of these clinics are in one major metropolitan county and eight are in an adjacent county. Two pilot sites started PCMH transformation in 2011; by 2012, all 14 had received NCQA Level 3 PCMH recognition.

Table 1 Practice site characteristics

Of the 14 sites, six have a pharmacy on-site, five provide urgent-care services, and nine offer extended hours. The number of providers per clinic ranges from 2 to 13 (median: 8), and the clinics have 4–29 MAs (median: 18). Eight clinics have an on-site clinical pharmacist and one has access to a telepharmacist. Five have an on-site clinical care coordinator, while five have an assigned clinical care coordinator off-site and four do not have a clinical care coordinator. Every clinic has an assigned referral coordinator and health-information representative (median: 3, range: 1–7); some of these are off-site. Twelve clinics also have a patient-care coordinator, and 13 sites have a health educator. Seven sites employ at least one licensed vocational nurse. Total staff per clinic ranges from 8 to 55, with a median of 30 staff.

Table 3 shows patient characteristics by site. The total number of unique patients per clinic ranges from 3,000 to 16,000 (median: 10,000; data unavailable for one clinic), with pediatric patients comprising 15%–51% (data unavailable for six clinics). The share of Latino patients ranged from 45% to 98% (median: 85%; data unavailable for one clinic). Data for one clinic were unavailable because its site clinic administrator did not provide them.

Summary of central themes

Seven themes emerged on how practices used their patient-experience data for PCMH practice transformation. Each of these themes is reviewed below and includes illustrative quotes.

CAHPS was useful for QI and for selecting the focus of changes for PCMH transformation

In describing the changes and shifts necessary for PCMH transformation, lead physicians, site administrators, and nurse supervisors all mentioned that tracking patient-experience data was useful for monitoring outcomes, changes, and the quality of the patient experience. Multiple respondents at eleven sites also specifically mentioned how CAHPS was used for PCMH transformation.

Steps involved in QI

All the sites indicated using CAHPS data for QI, including identifying areas for improvement, formulating actions, benchmarking performance, and monitoring and assessing progress on the changes made. The ability to recognize areas for improvement grew as clinic staff became more familiar with CAHPS data. A nurse supervisor described the process after receiving monthly CAHPS data:

We review it and then we develop a plan to try to enhance whatever is low. We work on specific QI projects to develop strategies to decrease wait time. But we do look at all of the CAHPS questions with an eye toward wait times and duration – how long did they have to wait, their access to the provider, spend enough time. The important things regarding access are how long they have to wait, resources, availability – things that enhance their patient experience that we can improve now. (#19, Site B)

A lead physician at another site focused on using CAHPS to obtain concrete data that identify low-performing areas for improvement and to track the effects of Plan-Do-Study-Act (PDSA) QI cycles over time:

We see if the things we implement are actually working, as you would in a typical PDSA cycle where you see if a metric goes up or down after a change. For example, we’ve set targets for providers so patients know they’re being taken care of. We use the overall doctor rating, provider explains, listens, easy to understand instructions, knows medical history, respects what you say, spends enough time with you. (#5, MD, Site A)

Most sites (n=12) discussed using open-ended narrative comments in addition to the scores and trends from their CG-CAHPS survey data. These practice leaders indicated that the narrative comments were an important aspect of the patient voice.

We use the patient experience trends and comments for seeing the patient voice, and we use the comments, we review them with the doctors on their individual scores and also review the relevant items for the back office with the back-office staff and primarily use them to help identify areas of improvement. When we sit down with the management team and discuss what to improve on or if there is any course of action for a specific individual person, we use both the trends and comments. All of our data is shared across our entire clinic because we share it with the site leaders, the physicians and also other staff members. (#23, Site F)

A nurse supervisor at another site noted that she reviews the narrative comments to understand patient perception of their experience.

Usually when we get the results, I will look at the comments first to see what their perception of the experience that they had here was. (#17, Site I)

Forty-two percent (15/36) of practice leaders reported that they can now take a more proactive view of patient-experience data and fix problems before they become larger issues. Other practice leaders (17%; 6/36) noted that the CAHPS items “provider spends enough time with you” and being “seen within 15 minutes” are very difficult survey measures to improve. Sixty-one percent (22/36) of the practice leaders noted that making changes in care processes, workflows, and office culture took time, required constant focus, and was best approached as an ongoing process.

PCMH transformation

Eleven sites indicated that they used CAHPS specifically as part of their changes for PCMH. Practice leaders described how CAHPS data helped determine areas of PCMH transformation that needed extra attention. Seventy-seven percent (23/30) of the practice leaders at these eleven sites noted that problems were brought to light by patient-experience survey results. Sites reported most often focusing on improving wait times, time spent with the provider, providers listening carefully, access issues, general customer service, or a culture focused on the patient. Seventy percent (21/30) of the practice leaders talked about how CAHPS helped identify a need to make changes or improve service, patient flow, urgent-care access, specialist access, and provider interaction.

As one site clinic administrator said:

We try to improve processes for patients and there is a QI focus. Do I think we would have done urgent care if we weren’t PCMH? I don’t think so. It’s good that PCMH made us think differently on how to do things. We have always tried to be innovative but PCMH has standards that we now follow and it does help us think about how to do things differently. So PCMH impacts both the process of monitoring, for example, making QI and the review of patient experience data more consistent and hard wired across the organization, but also it has impacted what we chose to do to solve issues. For example, we recently hired people (clinical care coordinator and referral specialist) just for PCMH. Because of PCMH we decided to add services, such as urgent care and specialists. We did that because we looked at the CAHPS data on access, and we looked at the data of how many people are coming to the ER after hours for things they shouldn’t be going there for ear infections. (#26, Site I)

Another site clinic administrator points to how the CG-CAHPS comments impacted their decisions for PCMH changes:

We use patient experience data a lot. In our CG-CAHPS comments, we look at what the patients say. At the beginning of our PCMH process, the comments said they wanted a more peds friendly office so we now actually have video games in the office and more toys for the kids to make it more kid friendly. Also a lot of patients said they wanted more nursing care after hours because we closed at 5:00 pm so we included extended hours within the past year and a half. (#32, Site D)

CAHPS data helped maintain a focus on patient experience

All sites reported that frequent meetings and review of quality data, including CAHPS, enabled them to focus on patient experience while delivering care and making PCMH changes. Practice leaders at each site reported holding fortnightly or monthly meetings to review patient-experience and quality data with providers and other staff. Physician engagement with patient-experience data was a key facilitator of PCMH transformation. Clinics frequently engaged providers with the patient-experience scores and narrative comments at these meetings to obtain buy-in, using successes and positive trends from previous reports to keep providers motivated and engaged in future efforts. Staff receptiveness to patient-experience data varied, but 17% (6/36) of the practice leaders noted that when at least a few providers were enthusiastic about the data, they served as champions and helped bring their colleagues along. One lead physician stated, “Our focus should always be on patient care. The data is a tool we use to accomplish that.” (#4, Site J) Another lead physician described using the patient-experience data:

We just want to make sure staff are supporting each other and continue to pay attention to the patient experience and patient satisfaction that we want them to pay attention to. When we started looking at the CAHPS surveys, the data reports were able to quantify the kind of experience the patients had. (#11, Site L)

A lead physician at a third site emphasized that what mattered was not the CAHPS data collection itself but reviewing the patient-experience data and using it more:

I don’t think the data collection has been the change, but it has brought the patient experience to light. I think we’re just using the patient experience data more than in the past. Our awareness is higher and we are using the data as a coaching tool, a way to meet goals and to identify and make changes. (#13, Site N)

A nurse supervisor pointed out that staff pay attention to the patient-experience data and “listen up” when narrative comments are shared.

The staff do pay attention to the data, especially in the meeting with our medical director, as he goes over some of the comment. So, they do pay attention to the CAHPS results and they always listen up during the comments. (#23, Site F)

The most frequently cited barrier to using patient-experience data was a lack of interest in provider-level CAHPS results among some providers (38%; 5/13) and many nonprovider staff (65%; 15/23). While practice leaders generally reported that the providers at their sites were very receptive to CAHPS scores and trends, 62% (8/13) noted that at least some of their providers, especially older ones, were not comfortable being evaluated on patient experience. Nineteen percent (7/36) of practice leaders emphasized the need to explain the importance of CAHPS survey results and how they relate to daily practice. Sixty-one percent (22/36) of practice leaders indicated that constant attention to, and continual review of, CAHPS data trends was how they encouraged physicians to improve their patient-experience scores.

Patient experience is something that we bring up so much that it’s in the staff’s heads. I think if we just brought it up occasionally, it would be tuned out. It’s such a crucial part of the office that the staff know how we are performing. (#26, Site I)

CAHPS data was used alongside other data, not in isolation

Lead physicians, nurse supervisors, and site clinic administrators all indicated that the patient-experience data were provided by the corporate quality department together with clinical quality and productivity indicators and were reviewed alongside these other data as part of their quality goals and PCMH process. One nurse supervisor said, “With PCMH, we have a lot more meetings to review data, and not just patient-experience data, also measures like HEDIS” (#22, Site E). A lead physician said, “We have a quality meeting once a month with all the staff and review everything – cycle time, no shows, HEDIS measures, CAHPS, hospitalizations, ER visits” (#9, Site E).

One site clinic administrator described how he uses other data to help better understand patient-experience data:

Once I receive the quality report, I do two things; I look at the data (whether it’s new or something we’ve been following) for trends. And then I look at anything else that might be related to that data. For instance, if I’m looking at patient-experience wait time then I also look at our cycle time data. I might also look at how many patients we saw on that day, so I know if I’m not bringing in enough staff that day. Then I check to see what the schedule was like to see if the patient was double-booked. Then I share my observations with my management team and allow them to really dissect it and identify areas where we need to improve. After I do all of that, I look to see what other clinics are doing to see if what’s happening here is also happening at other sites. (#34, Site F)

CAHPS was used for monitoring site-level trends and changes

All sites indicated using CAHPS data for reviewing trends as well as benchmarking and making comparisons to other sites. Forty-four percent (16/36) of the practice leaders identified CAHPS’ standardization, capacity for valid comparison and trending, and its appropriate unit of analysis as its best features for data monitoring.

Site-level trending and performance monitoring

One lead physician related the value of looking at trends.

I’m very open about our site’s patient-experience trends. I say the actual numbers aren’t the overall end-all/be-all. It’s more about whether trends are going in the right direction. That gives us a better idea of whether we need to address certain areas. I tend to focus on the overall provider rating, communication quality, “listens carefully.” Initially a good number of our providers had challenges with “listens carefully,” but they’re doing fine now. The measure I’m working on now is the “provider spends enough time with you”. (#7, Site C)

A site clinic administrator explains using the CAHPS measures and trends for staff performance.

We look at CAHPS on a monthly basis. We look at the trending. At every all-staff meeting, we share the CAHPS data, and then for every back office staff we share the data related to their work – treating patients with respect, listens carefully to what I have to say – those types of things. And with the front office it is more how the clerk/receptionist is treating me, do they treat me with respect, that type of thing. (#29, Site A)

All lead physicians (13/13), 77% (10/13) of site clinic administrators, and 40% (4/10) of nurse supervisors discussed sharing patient-experience and quality data with staff across the practice to identify trends and areas for improvement. One nurse supervisor described using the CAHPS data over time with staff to evaluate progress on patient experience and on their Acknowledge, Introduce, Duration, Explain, and Thank you (AIDET®) training for interacting with patients:

We go over patient experience data in all-staff meetings. The site clinic administrator is really good at going over the experience scores, where we need to improve, what we need to enhance. We use our communication tools like AIDET to develop that better patient experience. We usually address that at least once a month with staff because we figure that is an issue. The whole idea is to provide the patient a good experience. (#19, Site B)

Benchmarking

Respondents at all sites, including all site clinic administrators (13/13), 54% (7/13) of the lead physicians, and half (5/10) of the nurse supervisors, compared their own site’s data with those of other sites within the FQHC and with other groups across the nation. This benchmarking is facilitated by the inclusion of site-comparison data in the reports provided by the corporate quality department, as well as by the monthly regional and site meetings in which comparative trend data can be discussed. A site clinic administrator highlighted benchmarking as a strength of the FQHC’s quality system:

We have goals that are benchmarked across all sites, and the good thing about the new QI data monitoring system using the CAHPS and HEDIS data is we can review everyone’s data and compare ourselves to each other. (#26, Site I)

A lead physician at another site noted the value of viewing trends when benchmarking, particularly for a struggling site:

I look at the “Compared to Other Sites” section of the report. We have been behind for quite some time, but like I said, we’ve had all these challenges with lack of providers and difficulty with access, so what I’m looking for now is incremental increases on a monthly basis. If I see that each month we are going up, it does put a smile on my face, because I know people are trying hard. (#13, Site N)
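The lead physician describes two separate checks: where the site stands relative to the other sites, and whether its score is rising month over month. A minimal Python sketch of those two checks follows. It is an illustration under stated assumptions only: the site names, the scores, and the idea of a single summary score per site per month are hypothetical, and nothing here describes the FQHC's actual reporting system.

```python
from typing import Dict, List


def monthly_changes(scores: List[float]) -> List[float]:
    """Month-over-month differences in one site's score (positive means improvement)."""
    return [later - earlier for earlier, later in zip(scores, scores[1:])]


def is_steadily_improving(scores: List[float]) -> bool:
    """True if each month's score is higher than the month before."""
    return len(scores) >= 2 and all(delta > 0 for delta in monthly_changes(scores))


def rank_sites(latest: Dict[str, float]) -> List[str]:
    """Site names ordered from highest to lowest latest-month score."""
    return sorted(latest, key=lambda site: latest[site], reverse=True)


if __name__ == "__main__":
    # Hypothetical monthly scores (oldest first); not data from the study.
    site_scores = {
        "Site X": [85.0, 84.0, 86.0],
        "Site Y": [80.0, 81.0, 80.5],
        "Site Z": [71.0, 72.5, 74.0],
    }
    latest = {site: scores[-1] for site, scores in site_scores.items()}
    print(rank_sites(latest))                            # ['Site X', 'Site Y', 'Site Z']
    print(is_steadily_improving(site_scores["Site Z"]))  # True
```

Keeping the ranking and the trend check separate mirrors the quote: a site such as the hypothetical Site Z can rank last among its peers and still show the steady monthly gains the physician is looking for.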

CG-CAHPS content was extensively used to identify, analyze, and monitor specific areas for improvement

CAHPS data allowed for multilevel discussions on particular areas that needed improvement and how to address them. One clinic administrator provided an example of focusing on a specific CAHPS item and identifying potential issues and solutions.

We’ve been working on a nine-month QI project using CAHPS data to reduce the time it takes from a patient walking in the door to seeing the doctor. It is aimed specifically at the item on whether the patient sees a provider within 15 minutes. After seeing our low scores on this, we got a lot of feedback from the staff on what slows down their process. And we found that there were specific things in their day that weren’t assigned to anyone. So we tried to create new roles to assign specific people to these miscellaneous tasks. Also every provider wants things done a different way, so we created standard practices, such as the setup of the room. This standardization of practices allowed the medical assistants to do things more quickly and consistently. (#36, Site M)

A nurse supervisor at another site described how CAHPS data can differentiate performance by department or staff role to identify underlying issues within a problem area:

I find the CAHPS data useful because it breaks the data on the patient visit down in different departments, for example it will say “Was the receptionist helpful?,” “Were the nurses helpful?,” “Did you see your provider within 15 minutes?” So, in my case, if nurses scored low, there is obviously a problem in the back that will have to be addressed. It could have been a comment a medical assistant made or just anything, so I use that data to dig in a little bit more with my staff. (#17, Site I)

CAHPS was used for monitoring provider performance and individual coaching within a transparent environment of accountability

All the sites used CAHPS data to discuss individual performance with physicians, and the majority (10/14) also reviewed patient comments with individual physicians. Practice leaders also shared the data and comments with other clinic leaders and staff in other venues. Forty-three percent (6/14) of the sites also described linking the patient-experience data to care teams and to specific points in the patient visit, both to facilitate changes and as part of the feedback and coaching given to provider teams (rather than to the physician alone). The data were structured and shared within an environment of transparency, competition, and recognition intended to stimulate accountability, which sites frequently cited as critical to maintaining momentum during PCMH transformation.

Provider-level performance monitoring

Eighty-five percent (11/13) of the lead physicians described integrating provider-level patient-experience data into their conversations with individual physicians about performance, goals, and areas for improvement. One said:

When I receive our CAHPS data, I track it compared to the previous CAHPS; I look at the comments. I see if there are any complaints or any glaring mistakes. Then I use it as part of my one-on-one meetings. I see my providers for about 40 minutes or so every quarter. (#10, Site F)

Another lead physician described his process:

When I receive the quality reports with our HEDIS and CAHPS metrics, the very first thing I do is I look for me, obviously. After that, I look through “All the Clinic” section. I try to identify the providers who may be having issues. Then I have a monthly rounding with each provider, and we go over the CAHPS scores. I send them out before our meeting so it’s not a surprise. We try to come up with a plan on how we are going to try to improve certain scores. Then, I also review the metrics for our site-level goals and measures, I look at that globally for our site compared to other sites. (#13, Site N)

The clinic administrator at the same site similarly described using CAHPS data to review provider-level performance and give feedback and coaching.

The patient-experience data comes to me directly. I review it and I collate it and sit down with my site medical director and we look at trends; we look at our ratings overall as a clinic and then we dive down into the specific providers. When we identify those that are not doing well, we dive deeper into the questionnaire to find what areas we can help with and coach them on. We keep a running trend so we can see if they are improving overall and if not overall, are they improving on individual questions, so we can coach them. (#38, Site N)

Six of the 14 sites discussed using CAHPS data to review performance with both front-office and back-office staff. One clinic administrator at a site with staff assigned to specific physicians described their process of providing CAHPS data to front-office staff:

I’ve even asked for additional levels so that I can track the two front-office questions about being helpful and respectful. So I’ve asked my quality department to please break out those by provider. So now I sit down with the front-office staff and do the same thing my medical director does with physicians. I look at their scores and say okay, here is your score. Let’s talk about it. Because of the way our staffing is, we are also connecting the CAHPS data straight to the office staff that supports a provider, which also connects to our data on orders and other operational data which helps us understand the process of where things are falling apart for an individual provider. (#35, Site L)
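Breaking out the two front-office items by provider amounts to grouping survey responses by (provider, item) and computing a score for each group. The Python sketch below is illustrative only: it assumes a top-box scoring convention (the share of responses in the most favorable answer category) and a hypothetical record layout (`Response` with `provider`, `item`, `top_box` fields); the quality department's actual report structure is not described in the interviews.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Response:
    provider: str   # hypothetical: provider whose front-office team the patient rated
    item: str       # hypothetical item key, e.g. "front_office_helpful"
    top_box: bool   # True if the answer was in the most favorable category


def top_box_by_provider(responses: List[Response]) -> Dict[Tuple[str, str], float]:
    """Percentage of top-box answers for each (provider, item) pair."""
    tallies: Dict[Tuple[str, str], List[int]] = defaultdict(lambda: [0, 0])  # [hits, total]
    for r in responses:
        tally = tallies[(r.provider, r.item)]
        tally[1] += 1
        if r.top_box:
            tally[0] += 1
    return {key: 100.0 * hits / total for key, (hits, total) in tallies.items()}


if __name__ == "__main__":
    # Hypothetical responses; not data from the study.
    sample = [
        Response("Provider 1", "front_office_helpful", True),
        Response("Provider 1", "front_office_helpful", False),
        Response("Provider 1", "front_office_respectful", True),
        Response("Provider 2", "front_office_helpful", True),
    ]
    for (provider, item), pct in sorted(top_box_by_provider(sample).items()):
        print(f"{provider}  {item}: {pct:.0f}%")
```

Because front-office staff at this site were assigned to specific physicians, a per-provider score can double as a score for the staff who support that provider; at a site without such assignments, that mapping would not hold.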

Incentives, recognition, and accountability

Thirty-eight percent (5/13) of the lead physicians, 69% (9/13) of the site clinic administrators, and 20% (2/10) of the nurse supervisors attributed the attention given to the data to the accountability, incentives, and recognition tied to it. One lead physician explained, “The CAHPS data is tied to physician and their staffs’ bonuses. I also bug the physicians and they have to explain things to me. They don’t really want to do that” (#10, Site F). A site clinic administrator emphasized the nonpunitive incentives, individual recognition, and opportunities for coaching that help draw attention to the CAHPS reports:

People pay attention to these patient experience data reports for various reasons. They want to see how they are doing, but it’s also true that if they are not doing well, they have this opportunity to have coaching to actually improve their scores. It’s an opportunity to get coaching and feedback. And get recognized if they do well. (#28, Site K)

Practice leaders at 43% of the sites (6/14) discussed using CAHPS data in bonus calculations. One site clinic administrator said:

We use it to make decisions, to base provider compensation on. Each provider has his or her own CAHPS scores, which we use to provide compensation. Providers feel like it is valid. We have a rolling 6-month average that we use; patients will drop off, new patients will be added for the next month. (#29, Site A)
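The administrator does not spell out how the rolling 6-month average is computed. One plausible reading, sketched here in Python as an assumption rather than as the FQHC's method, pools a provider's responses from the six most recent months, so the oldest month's patients drop out as each new month's patients are added. The monthly (top-box count, total responses) inputs and the `rolling_top_box` name are hypothetical.

```python
from collections import deque
from typing import Deque, Iterable, List, Optional, Tuple


def rolling_top_box(
    monthly: Iterable[Tuple[int, int]], window: int = 6
) -> List[Optional[float]]:
    """Pooled top-box percentage over the most recent `window` months.

    monthly: (top_box_count, total_responses) for one provider, oldest month first.
    Returns one value per month; None until a full window is available
    (or if the window contains no responses).
    """
    recent: Deque[Tuple[int, int]] = deque(maxlen=window)
    out: List[Optional[float]] = []
    for hits, total in monthly:
        recent.append((hits, total))  # the oldest month falls off automatically
        if len(recent) < window:
            out.append(None)
            continue
        pooled_hits = sum(h for h, _ in recent)
        pooled_total = sum(t for _, t in recent)
        out.append(100.0 * pooled_hits / pooled_total if pooled_total else None)
    return out


if __name__ == "__main__":
    # Hypothetical provider: six months at 8/10 top-box, then a month at 10/10.
    history = [(8, 10)] * 6 + [(10, 10)]
    print(rolling_top_box(history))
```

Pooling responses, rather than averaging six monthly percentages, gives months with more completed surveys proportionally more weight; which convention is fairer for compensation is a design choice the interviews do not settle.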

Sixty-one percent (22/36) of the practice leaders, including most (10/13) of the lead physicians, about half (7/13) of the site clinic administrators, and half (5/10) of the nurse supervisors, pointed to team recognition and its role in maintaining momentum in PCMH transformation. As one site lead physician described:

This past quarter we started to focus on using the data to drive the momentum we’ve been able to establish. We recognize teams that perform well at our monthly all-staff meetings and weekly provider meetings. On a quarterly basis we use information from the quality reports to recognize teams. We’ve been recognizing the provider’s whole team, front office and back office, and inviting them to share their best practices with the rest of the staff. I think it’s been helpful for morale and maintaining the momentum we’ve generated. (#7, Site C)

Importance of transparency

Ninety-two percent (12/13) of the lead physicians and all 13 site clinic administrators indicated that being transparent with quality and patient-experience data, and comparing performance between sites, motivated providers and administrators to improve in areas of low performance. Similarly, providers and administrators at high-performing sites received widespread praise and were motivated to keep their scores high. These high-performing sites were encouraged to share their best practices with other sites, creating many unofficial pilot tests of various techniques for PCMH transformation. One nurse supervisor noted how providers and staff were eager to see the data and use it constructively to improve patient care:

We are very transparent because we all take ownership in regard to physicians or provider teams that are doing extremely well and the ones that aren’t doing well. And it’s not to pull someone out and make them feel bad, it’s more as a positive thing. We say, although these are your numbers, we are also comparing every month and we want to see those numbers go up. The team we have in the back office, they are really dedicated to what they do, they really care and they want to improve. They want to see what they can do better, they want to make sure our patients want to come back to us. They want to know what our patients think about us and if they are saying negative things we want to know why. (#21, Site D)

Site clinic administrators and nurse supervisors at 12 of the 14 sites reported posting patient-experience data on quality boards visible to staff within the site. Sixty-seven percent (8/12) of these sites focused their posts on specific CAHPS items each month, and five (5/12) focused on comparative trends and competition with other sites.

Our CAHPS surveys come to us once a month, all the scores for each individual provider are shared with them individually at one-on-one meetings with our site medical director. We always post at least two of the key questions that are on there: “overall provider rating,” “the staff treats me with courtesy and respect.” And we actually post those on our quality board every month. (#27, Site J)

We have a quality board and put all of our data up on the board. We show improvement, how we compare with other clinics or regions in [Corporate Name]. These measures are always a competition within our own sites and counties. I have great and wonderful staff that is always looking for ways to improve quality and measures for everyone. (#29, Site A)

CG-CAHPS contained relevant content that was applied to PCMH improvements, but changes to instrument length, reading level, and specific items were suggested

Practice leaders’ conception of PCMH transformation in this FQHC focused on the ability to provide more services in one place, enhance access to more specialists, and act as a “one-stop shop” for patients. Practice leaders at all 14 sites indicated that CAHPS contained valuable content that “aligned with their mission of improving the patient experience”, citing as most useful the overall provider rating, the provider communication questions (eg, explains things, listens carefully, gives easy-to-understand instructions, shows respect, and spends enough time), and the supplemental “would recommend” item. Practice leaders at 11 of the 14 sites indicated that CAHPS content areas were relevant to the changes needed to implement PCMH. They specifically noted the usefulness of CAHPS items on office-staff courteousness, wait time, patient access, and provider–patient interactions. Twenty-three percent (3/13) of the lead physicians described the “physician knows medical history” item as particularly relevant to PCMH.

A nursing supervisor in one site explained the process of mapping CAHPS items to the PCMH model.

We make sure that the provider paid attention to the patient. So in the patient-experience survey, it is the questions that pertain to the provider and that we met the patient needs, which would be ones like getting an appointment as soon as you need it, or getting the information you need, or spending enough time with the doctor and that the back office was courteous and friendly. As nurse supervisor, I focus on the back office; the medical director focuses on the providers and they have their meetings regarding those data, but each department focuses on their own areas. But we all focus on things that are low, and the quarterly goals and measures. (#20, Site C)

Thirty-nine percent (14/36) of the practice leaders said that the CAHPS survey was too long for patients to complete and believed that it could be shortened to approximately 20 questions fitting onto two pages. Eleven percent (4/36) of the practice leaders also indicated that the reading level was too high for their patients – a particularly important issue in a safety net population.

Regarding applicability to PCMH, 28% (10/36) of the practice leaders suggested the need to add items related to specific PCMH activities, such as the patient’s experiences with referrals, interactions with clinical pharmacists, access to specialists, and care management and coordination.

Discussion

Several key implications emerged from our analysis of in-depth interviews with different types of leaders in multiple practices concerning QI, patient experience, and PCMH programs in this large urban FQHC. Overall, the analysis showed that practice leaders used the CG-CAHPS patient-experience data to implement continuous QI. They used it primarily to identify areas for improvement, monitor change regularly, trend and benchmark performance, develop a shared vision, incentivize doctors, and coach individual providers and staff on performance. Practice leaders described how they used the CAHPS data to improve important aspects of the patient experience in the PCMH model of care, including access to routine and urgent care, wait times, providers spending enough time and listening carefully, and staff courteousness. Several practice leaders indicated the need to add questions about PCMH activities such as referrals, use of a clinical pharmacist, access to specialists, and care management and care coordination. These areas align with the content areas in the PCMH CAHPS item set,28,29 which was developed as a supplement to the CG-CAHPS 12-month survey and designated by NCQA for the distinction in patient-experience reporting. Practice leaders at sites with MAs assigned to specific physicians described linking the patient-experience data to care teams and to specific points in the patient visit to facilitate QI or PCMH changes and to give feedback and coaching to provider teams. Practice leaders’ experiences demonstrate that the CG-CAHPS data were specific and actionable and could help identify key aspects of patient experience central to PCMH transformation. Practice leaders described the CAHPS data as a tool for sustaining focus on patient experience.

Just less than half (44%) of the practice leaders identified standardization, capacity for valid comparison and trending, and appropriate unit of analysis as the best features of CAHPS for data monitoring. This finding reinforces previous analyses of the effectiveness of patient surveys in QI, namely, that their usefulness depends on design, standardization, construct validity, reliability, and internal/external validity.12,15 Hence, the initial corporate decision to administer the CG-CAHPS survey as a part of the data-monitoring system for PCMH and QI activities enabled the practice leaders at all levels at these FQHC sites to effectively act on their patient-experience data and make changes.

Regular reporting, reviewing, and discussing of patient-experience data alongside other clinical quality and productivity measures at multiple levels of the organization was critical in maximizing the use of the CAHPS data as PCMH changes were made. Davies et al9 found that team leaders considered frequent reports a powerful stimulus to improvement, but that they needed time and support to engage staff and clinicians in changing their behavior. In this study, CG-CAHPS data trends and quarterly goals were reviewed in monthly meetings between each individual site and corporate leadership, in regional meetings of site medical directors to benchmark performance, in meetings between site leadership and their staff, and in meetings between site medical directors and individual providers.

The constant review and presence of the patient-experience data alongside other metrics of productivity and quality reinforced its importance to the organization. Practice leaders were actively engaged in understanding what was impacting the patient experience and how they could improve it, knowing that they would not only be asked about it (at the corporate, regional, and site meetings) but also be compared to others (at the provider and site levels).

Practice leaders identified the regular management-level and staff-level meetings held at the sites to discuss the patient-experience data as facilitating physician and staff buy-in and a shared vision of PCMH, and as providing a forum for the ongoing learning required for continually improving patient-centered care. The transparency of site-level performance, in combination with accountability for reaching targets, explains why physicians and staff paid attention to the patient-experience data.

The findings from this study may not be generalizable to all US practices or to all FQHCs given the size, urban setting, and unique corporate structure of the FQHC under study. In particular, the extensive quality-monitoring system critical to regularly providing the spectrum of clinical, productivity, and patient-experience data at multiple levels of care may not be characteristic of many primary care organizations. However, the features of this case and our research design permitted exploring a rich range of uses for CAHPS patient-experience data with practice-level leadership who have considerable experience in performance measurement for QI and implementing PCMH changes. As shown, there was a strong consensus among these individuals regarding CAHPS and QI and their relationship to PCMH transformation.

In summary, this study identified several key factors shaping the use of patient-experience data as part of the PCMH model central to the success of primary care reform. Practice leaders regularly engaged and used CAHPS scores, trends, and benchmarks alongside other data while making QI and PCMH changes. Regular reporting, reviewing, and monitoring of data at all levels (corporate, regional, and site level) kept a clear focus on patient experience and developed a shared vision among providers and staff. Establishing a forum of open learning and sharing of best practices across sites and regular coaching of individuals on their performance in HEDIS and patient experience spurred change and incremental improvement, while maintaining a high level of awareness on the patient experience. The transparency of scores and performance at the sites, quarterly goals/targets, and staff recognition and reward supported an environment of accountability. Additionally, a system-wide accountability and data-monitoring structure that relied on a standardized, objective, specific, and actionable patient-experience survey tool, such as the CG-CAHPS survey, was a key component to supporting the continuous QI needed for moving beyond attainment of formal PCMH recognition to maximizing primary care medical home transformation. As increasing numbers of US practices seek PCMH recognition and transformation, researchers will need to continue to assess the structures and processes that drive the effective use of patient-experience survey data in improving patient centeredness.

Acknowledgment

Preparation of this manuscript was supported through a cooperative agreement from the Agency for Healthcare Research and Quality (contract number U18 HS016980).

Disclosure

The authors report no conflicts of interest in this work.

References

1. Cronholm PF, Shea JA, Werner RM, et al. The patient centered medical home: mental models and practice culture driving the transformation process. J Gen Intern Med. 2013;28(9):1195–1201.

2. Fields D, Leshen E, Patel K. Analysis and commentary. Driving quality gains and cost savings through adoption of medical homes. Health Aff (Millwood). 2010;29(5):819–826.

3. http://www.pcpcc.org [homepage on the Internet]. Proof in practice: a compilation of patient centered medical home pilot and demonstration projects; 2009. Available from: https://www.pcpcc.org/guide/proof-practice. Accessed March 20, 2015.

4. Nutting PA, Miller WL, Crabtree BF, Jaen CR, Stewart EE, Stange KC. Initial lessons from the first national demonstration project on practice transformation to a patient-centered medical home. Ann Fam Med. 2009;7(3):254–260.

5. Wagner EH, Gupta R, Coleman K. Practice transformation in the safety net medical home initiative: a qualitative look. Med Care. 2014;52(11 Suppl 4):S18–S22.

6. Sugarman JR, Phillips KE, Wagner EH, Coleman K, Abrams MK. The safety net medical home initiative: transforming care for vulnerable populations. Med Care. 2014;52(11 Suppl 4):S1–S10.

7. Stout S, Weeg S. The practice perspective on transformation: experience and learning from the frontlines. Med Care. 2014;52(11 Suppl 4):S23–S25.

8. http://www.ahrq.gov [homepage on the Internet]. Creating capacity for improvement in primary care: the case for developing a quality improvement infrastructure; 2013. Available from: http://www.ahrq.gov/professionals/prevention-chronic-care/improve/capacity-building/pcmhqi1.html. Accessed March 23, 2013.

9. Davies E, Shaller D, Edgman-Levitan S, et al. Evaluating the use of a modified CAHPS survey to support improvements in patient-centred care: lessons from a quality improvement collaborative. Health Expect. 2008;11(2):160–176.

10. Friedberg MW, SteelFisher GK, Karp M, Schneider EC. Physician groups’ use of data from patient experience surveys. J Gen Intern Med. 2011;26(5):498–504.

11. Goldstein E, Cleary PD, Langwell KM, Zaslavsky AM, Heller A. Medicare managed care CAHPS: a tool for performance improvement. Health Care Financ Rev. 2001;22(3):101–107.

12. Patwardhan A, Spencer CH. Are patient surveys valuable as a service improvement tool in health services? An overview. J Healthc Leadersh. 2012;4:33–46.

13. Luxford K, Safran DG, Delbanco T. Promoting patient-centered care: a qualitative study of facilitators and barriers in healthcare organizations with a reputation for improving the patient experience. Int J Qual Health Care. 2011;23(5):510–515.

14. Katz-Navon T, Naveh E, Stern Z. The moderate success of quality of care improvement efforts: three observations on the situation. Int J Qual Health Care. 2007;19(1):4–7.

15. Browne K, Roseman D, Shaller D, Edgman-Levitan S. Analysis and commentary. Measuring patient experience as a strategy for improving primary care. Health Aff (Millwood). 2010;29(5):921–925.

16. Zuckerman KE, Wong A, Teleki S, Edgman-Levitan S. Patient experience of care in the safety net: current efforts and challenges. J Ambul Care Manage. 2012;35(2):138–148.

17. Darby C, Crofton C, Clancy CM. Consumer assessment of health providers and systems (CAHPS): evolving to meet stakeholder needs. Am J Med Qual. 2006;21(2):144–147.

18. Crofton C, Lubalin JS, Darby C. Consumer assessment of health plans study (CAHPS). Foreword. Med Care. 1999;37(3 Suppl):MS1–MS9.

19. Darby C, Hays RD, Kletke P. Development and evaluation of the CAHPS hospital survey. Health Serv Res. 2005;40(6 pt 2):1973–1976.

20. Hays RD, Martino S, Brown JA, et al. Evaluation of a care coordination measure for the consumer assessment of healthcare providers and systems (CAHPS) Medicare survey. Med Care Res Rev. 2014;71(2):192–202.

21. Agency for Healthcare Research and Quality. The CAHPS Connection. Vol 3. Rockville, MD: AHRQ; 2007. [Publication No 07-0005-2-EF].

22. Hays RD, Chong K, Brown J, Spritzer KL, Horne K. Patient reports and ratings of individual physicians: an evaluation of the DoctorGuide and consumer assessment of health plans study provider-level surveys. Am J Med Qual. 2003;18(5):190–196.

23. Bradley EH, Curry LA, Devers KJ. Qualitative data analysis for health services research: developing taxonomy, themes, and theory. Health Serv Res. 2007;42(4):1758–1772.

24. Glaser B, Strauss A. The Discovery of Grounded Theory: Strategies for Qualitative Research. Chicago: Aldine Publishing Company; 1967.

25. Charmaz K. Grounded theory: objectivist and constructivist methods. In: Denzin N, Lincoln Y, editors. Handbook of Qualitative Research. Thousand Oaks: Sage Publications; 2000:509–535.

26. Miller W, Crabtree B. The dance of interpretation. In: Miller W, Crabtree B, editors. Doing Qualitative Research in Primary Care: Multiple Strategies. 2nd ed. Newbury Park: Sage Publications; 1999:127–143.

27. Hill CE, Knox S, Thompson BJ, Williams EN, Hess SA, Ladany N. Consensual qualitative research: an update. J Couns Psychol. 2005;52(2):196–205.

28. Hays RD, Berman LJ, Kanter MH, et al. Evaluating the psychometric properties of the CAHPS patient-centered medical home survey. Clin Ther. 2014;36(5):689–696.e1.

29. Scholle SH, Vuong O, Ding L, et al. Development of and field test results for the CAHPS PCMH Survey. Med Care. 2012;50(Suppl):S2–S10.

© 2015 The Author(s). This work is published and licensed by Dove Medical Press Limited. The full terms of this license are available at https://www.dovepress.com/terms.php and incorporate the Creative Commons Attribution - Non Commercial (unported, v3.0) License.

By accessing the work you hereby accept the Terms. Non-commercial uses of the work are permitted without any further permission from Dove Medical Press Limited, provided the work is properly attributed. For permission for commercial use of this work, please see paragraphs 4.2 and 5 of our Terms.