Advances in Medical Education and Practice, Volume 5

How valid are commercially available medical simulators?

Authors Stunt J, Wulms P, Kerkhoffs G, Dankelman J, van Dijk C, Tuijthof G

Received 4 March 2014

Accepted for publication 12 April 2014

Published 14 October 2014 Volume 2014:5 Pages 385–395

DOI https://doi.org/10.2147/AMEP.S63435

Review by Single anonymous peer review

JJ Stunt,1 PH Wulms,2 GM Kerkhoffs,1 J Dankelman,2 CN van Dijk,1 GJM Tuijthof1,2

1Orthopedic Research Center Amsterdam, Department of Orthopedic Surgery, Academic Medical Centre, Amsterdam, the Netherlands; 2Department of Biomechanical Engineering, Faculty of Mechanical, Materials and Maritime Engineering, Delft University of Technology, Delft, the Netherlands

Background: Since simulators offer important advantages, they are increasingly used in medical education and in the training of medical skills that require physical actions. A wide variety of simulators have become commercially available. It is essential to provide evidence that training on these simulators actually improves clinical performance on live patients. Therefore, the aim of this review is to determine the availability of different types of simulators and the evidence of their validation, to offer insight into which simulators are suitable for use in the clinical setting as a training modality.

Summary: Four hundred and thirty-three commercially available simulators were found, of which 405 (94%) were physical models. One hundred and thirty validation studies evaluated 35 (8%) commercially available medical simulators for levels of validity ranging from face to predictive validity. Only simulators used for surgical skills training were validated for the highest validity level (predictive validity). Twenty-four (37%) of the 65 simulators that give objective feedback had been validated. Studies that tested more powerful levels of validity (concurrent and predictive validity) were methodologically stronger than studies that tested more elementary levels of validity (face, content, and construct validity).

Conclusion: Ninety-two percent of the commercially available simulators are not known to have been tested for validity. Although the importance of (a high level of) validation depends on the difficulty level of skills training and the possible consequences when skills are insufficient, medical professionals, trainees, medical educators, and companies that manufacture medical simulators are advised to critically judge the available medical simulators for proper validation. In this way, adequate, safe, and affordable medical psychomotor skills training can be achieved.

Keywords: validity level, training modality, medical education, validation studies, medical skills training

Introduction

Simulators for medical training have been used for centuries. More primitive forms of physical models were used before plastic mannequins and virtual systems (VS) became available.1 Since then, simulation in medical education has been deployed for a variety of actions, such as assessment skills, injections, trauma and cardiac life support, anesthesia, intubation, and surgical skills (SuS).2,3 These actions require psychomotor skills: physical movements that are associated with cognitive processes.4,5 These include skills that require (hand–eye) coordination, manipulation, dexterity, grace, strength, and speed. Studies show that medical skills training that requires physical actions is best achieved by actually practicing these actions, eg, instrument handling.6 This is because, when psychomotor skills are learned, the brain and body co-adapt to improve the manual (instrument) handling. In this way, the trainee learns which actions are correct and which are not.5

Four main reasons to use simulators instead of traditional training in the operating room have been described.6 Firstly, improved educational experience; when simulators are placed in an easily accessible location, they are available continuously. This overcomes the problem of dependency on the availability of an actual patient case. Simulators also allow easy access to a wide variety of clinical scenarios, eg, complications.6 Secondly, patient safety; simulators allow the trainee to make mistakes, which can equip the resident with a basic skills level that would not compromise patient safety when continuing training in the operating room.7–14 Thirdly, cost efficiency; the costs of setting up a simulation center are in the end often less than the costs of instructors’ training time, and resources required as part of the training.6 Moreover, the increased efficiency of trainees when performing a procedure adds to the return on investment achieved by medical simulators, as Frost and Sullivan demonstrated.15 Lastly, simulators offer the opportunity to measure performance and training progress objectively by integrated sensors that can measure, eg, task time, path length, and forces.6,9,16–18

With increasing development and experience in research settings, a wide variety of simulators have become commercially available. The pressing question is whether improvements in performance on medical simulators actually translate into improved clinical performance on live patients. Commercially available simulators in other industries, such as aerospace, the military, business management, transportation, and nuclear power, have been demonstrated to be valuable for performance in real-life situations.19–23 Similarly, it is essential that medical simulators allow for the correct training of medical skills to improve real-life performance. Lack of proper validation could imply that the simulator at hand does not improve skills or, worse, causes incorrect skills training.24,25

Since validation of a simulator is required to guarantee proper simulator training, the aim of this review is to determine the availability of medical simulators and whether they are validated or not, and to discuss their appropriateness. This review is distinctive as it categorizes simulators based on simulator type and validation level. In this way, it provides a complete overview of all sorts of available simulators and their degree of validation. This will offer hospitals and medical educators, who are considering the implementation of simulation training in their curriculum, guidelines on the suitability of various simulators to fulfil their needs and demands.

Methods

The approach to achieve the study goal was set as follows. Firstly, an inventory was made of all commercially available simulators that allow medical psychomotor skills training. Secondly, categories that represent medical psychomotor skills were identified and each simulator was placed in one of those categories. Each category will be discussed and illustrated with some representative simulators. Thirdly, validity levels for all available simulators were determined. Lastly, study designs of the validity studies were evaluated in order to determine the reliability of the results of the validity studies.

Inventory of medical simulators

The inventory of commercially available medical simulators was performed by searching the Internet using the search engines Google and Yahoo, and the websites of professional associations of medical education (Table 1). The search terms were split into categories to find relevant synonyms (Table 2), and combinations of these categorized keywords were used as the search strategy. Each search engine returned a large number of “hits”. Relevant websites were selected using the following inclusion criteria: the website had to belong to the company that actually manufactures and sells the product; the simulator had to be intended for psychomotor skills training in the medical field (models, mannequins, or software packages that only offer knowledge training or visualization were excluded); if the company’s website offered additional medical simulators, all products that fulfilled the criteria were included separately; and the website had to have had its latest “update” after January 2009, so that the company could be expected to still be actively involved in commercial activities in the field of medical simulators.
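The search strategy (one keyword taken from each category, combined into a query) and the product-level inclusion criteria can be sketched in Python. This is a minimal illustration, not the authors' tooling: the keyword lists are hypothetical placeholders (the actual terms are in Table 2), the product records are invented examples, and reading “after January 2009” as “on or after 1 January 2009” is an assumption.

```python
from datetime import date
from itertools import product

# Hypothetical keyword categories; the actual search terms are listed in Table 2.
KEYWORD_CATEGORIES = {
    "device": ["simulator", "mannequin", "phantom"],
    "field": ["medical", "surgical"],
    "purpose": ["training", "skills"],
}

# Assumption: "after January 2009" is read as "on or after 1 January 2009".
UPDATE_CUTOFF = date(2009, 1, 1)


def build_queries(categories):
    """Return one query per combination of one keyword from every category."""
    return [" ".join(combo) for combo in product(*categories.values())]


def meets_inclusion_criteria(product_page):
    """Check one product against the inclusion criteria described above.

    Each product on a manufacturer's website is checked separately.
    """
    return (
        product_page["sold_by_manufacturer"]          # company makes and sells it
        and product_page["psychomotor_training"]      # not knowledge/visualization only
        and product_page["site_last_update"] >= UPDATE_CUTOFF
    )


# Hypothetical candidate products found through the searches.
candidates = [
    {"name": "A", "sold_by_manufacturer": True, "psychomotor_training": True,
     "site_last_update": date(2012, 5, 1)},
    {"name": "B", "sold_by_manufacturer": False, "psychomotor_training": True,
     "site_last_update": date(2013, 2, 1)},   # reseller page: excluded
    {"name": "C", "sold_by_manufacturer": True, "psychomotor_training": False,
     "site_last_update": date(2012, 1, 1)},   # visualization only: excluded
    {"name": "D", "sold_by_manufacturer": True, "psychomotor_training": True,
     "site_last_update": date(2008, 6, 1)},   # inactive website: excluded
]

included = [p["name"] for p in candidates if meets_inclusion_criteria(p)]
```

With three, two, and two hypothetical keywords, `build_queries` yields 3 × 2 × 2 = 12 queries, which shows why combining categorized synonyms quickly produces a large number of hits per search engine.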

Table 1 List of societies and associations concerning medical education and simulation

Table 2 Search terms

Categorization of simulator type

For our study purpose, medical simulators were categorized based on their distinct characteristics: VS and physical mannequins with or without sensors (Figure 1).14,26 VS are software-based simulators. The software simulates the clinical environment, allowing individual clinical psychomotor skills to be practiced. Most of these simulators have a physical interface and provide objective feedback to users about their performance, with task time as the most commonly used performance parameter.26 The physical mannequins are mostly plastic phantoms simulating (parts of) the human body. The advantage of physical models is that the sense of touch is inherently present, which can provide a very realistic training environment. Most models do not provide integrated sensors or real-time feedback and therefore require an experienced professional to supervise the skills training. Some physical models have integrated sensors and computer software that allow for objective performance assessment.14,27,28 As these simulators take over part of the assessment of training progress, it might be expected that they are validated in a different manner. Therefore, a distinction was made between simulators that provide feedback and simulators that do not.

Categorization of medical psychomotor skills

Skills were divided into the following four categories, as these are the most distinct psychomotor skills that medical professionals learn during their education, starting at BSc level: 1) manual patient examination skills (MES): evaluation of the human body and its functions that requires direct physical contact between physician and patient; 2) injection, needle puncture, and intravenous catheterization (peripheral and central) skills (IPIS): the manual insertion of a needle into human skin tissue for purposes such as taking blood samples, lumbar or epidural punctures, injections, or vaccinations, or the insertion of a catheter into a vein; 3) basic life support skills (BLSS):29,30 maintaining an open airway and supporting breathing and circulation, which can be further divided into the following psychomotor skills: continued circulation, executed by chest compression and cardiac massage; opening the airway, executed by manually tilting the head and lifting the chin; and continued breathing, executed by closing the nose, removing visible obstructions, mouth-to-mouth ventilation, and feeling for breathing;31 4) SuS: indirect tissue manipulation by means of medical instruments, eg, scalpels, forceps, clamps, and scissors, for diagnostic or therapeutic treatment. Surgical procedures can involve broken skin or contact with mucosa or internal body cavities beyond a natural or artificial body orifice, and are subdivided into minimally invasive and open procedures.
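The two classification axes used in this review (simulator type with or without objective feedback, and skill category) can be represented as a small data structure, which makes the per-category tallies reported in the Results straightforward to compute. This is a hypothetical sketch: the class and field names are illustrative, and the inventory entries are invented rather than taken from the Supplementary material.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class SimulatorType(Enum):
    PHYSICAL = "physical model"
    VIRTUAL = "virtual system"


class SkillCategory(Enum):
    MES = "manual patient examination skills"
    IPIS = "injections, needle punctures, and intravenous catheterization skills"
    BLSS = "basic life support skills"
    SUS = "surgical skills"


@dataclass(frozen=True)
class Simulator:
    name: str
    sim_type: SimulatorType
    category: SkillCategory
    objective_feedback: bool  # True if integrated sensors/software assess performance


def tally(simulators):
    """Count simulators per (skill category, simulator type, feedback) cell."""
    return Counter((s.category, s.sim_type, s.objective_feedback) for s in simulators)


# Hypothetical inventory entries, for illustration only.
inventory = [
    Simulator("full-body mannequin X", SimulatorType.PHYSICAL, SkillCategory.BLSS, False),
    Simulator("puncture trainer Y", SimulatorType.PHYSICAL, SkillCategory.IPIS, False),
    Simulator("laparoscopy VR Z1", SimulatorType.VIRTUAL, SkillCategory.SUS, True),
    Simulator("laparoscopy VR Z2", SimulatorType.VIRTUAL, SkillCategory.SUS, True),
]
counts = tally(inventory)
```

Because `Counter` returns 0 for missing keys, empty cells (eg, virtual MES simulators with feedback) need no special handling.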

Inventory of validation and study design quality assessment

For each retrieved simulator, PubMed was searched for scientific evidence of validity using the simulator’s brand name combined with the keyword “simulator”. After screening of the abstracts, validation studies were included and the level of validation of that particular simulator was noted.32 Studies were scored for face validity24,32,33 (the most elementary level), content validity, construct validity,33 concurrent validity,24,32 and the most powerful level, predictive validity.24,32,33
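Because the validity levels form an ordered scale, they map naturally onto an ordered enumeration, and the level recorded for a simulator can be taken as the highest level demonstrated across its studies. The sketch below assumes that convention (each simulator counted once, under its highest level); the study findings are hypothetical.

```python
from enum import IntEnum


class Validity(IntEnum):
    """Validity levels, ordered from most elementary to most powerful."""
    FACE = 1
    CONTENT = 2
    CONSTRUCT = 3
    CONCURRENT = 4
    PREDICTIVE = 5


def highest_validity(levels_found):
    """Highest level demonstrated across a simulator's studies, or None if unvalidated."""
    return max(levels_found, default=None)


# Hypothetical study findings per simulator (brand names omitted).
findings = {
    "simulator 1": [Validity.FACE, Validity.CONSTRUCT],
    "simulator 2": [Validity.CONTENT, Validity.CONCURRENT, Validity.PREDICTIVE],
    "simulator 3": [],
}
summary = {name: highest_validity(levels) for name, levels in findings.items()}
```

Using `IntEnum` keeps the comparison `Validity.FACE < Validity.PREDICTIVE` meaningful, so “higher level” is expressed directly in code rather than by string matching.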

The validation studies were evaluated for their study design using Issenberg’s guidelines for educational studies involving simulators (Table 3).34 Each study was scored for several aspects concerning the research question, participants, methodology, outcome measures, and manner of scoring (Table 4). An outcome measure is considered appropriate when it is clearly defined and measured objectively.
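Scoring a study against a design checklist amounts to counting how many criteria it meets and listing those it misses. The sketch below uses only the criteria that this review mentions explicitly (research question, population, outcome measures, power analysis, standardized and blinded assessment, subject selection); the full ten-item checklist is in Table 3, and the field names and example study are hypothetical.

```python
from dataclasses import dataclass, fields


@dataclass
class DesignChecklist:
    """Subset of the design criteria mentioned in this review (Table 3 has the full list).

    The field names are hypothetical labels for those criteria.
    """
    research_question_described: bool
    population_described: bool
    outcome_measures_described: bool
    power_analysis: bool
    standardized_assessment: bool
    blinded_assessment: bool
    appropriate_subject_selection: bool  # by experience level or random control group

    def score(self) -> int:
        """Number of criteria met (True counts as 1)."""
        return sum(getattr(self, f.name) for f in fields(self))

    def unmet(self) -> list:
        """Names of the criteria that were not met."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]


# Hypothetical study: well described, but no power analysis and unblinded rating.
study = DesignChecklist(True, True, True, False, True, False, True)
```

Listing the unmet criteria, rather than only the total score, keeps the qualitative analysis possible when study designs are too heterogeneous to compare on a single number.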

Table 3 Checklist for the evaluation of validation studies, using Issenberg’s guidelines for educational studies involving simulators

Table 4 Outcome measures used to test the efficacy of the simulator (the parameters indicate psychomotor skills performance)

The validation studies showed substantial heterogeneity in study design; therefore, the data were analyzed qualitatively and trends were highlighted.

Results

Inventory and categorization of medical simulators

In total, 433 commercially available simulators were found (see Supplementary material), offered by 24 different companies. Of these simulators, 405 (93.5%) are physical models and 28 (6.5%) are virtual simulators (Figure 1). The simulators are distributed almost equally across the four defined skills categories (Figure 1), with the SuS category containing by far the largest share of the virtual reality simulators (86%). Objective feedback was provided by the simulator itself in 65 cases (15%).
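The inventory percentages can be recomputed directly from the reported counts. This short check uses only numbers stated in the paragraph above; the helper name `percent` is illustrative.

```python
def percent(part, total):
    """Share of `total` represented by `part`, in percent, rounded to one decimal."""
    return round(100 * part / total, 1)


TOTAL_SIMULATORS = 433
inventory_counts = {
    "physical models": 405,
    "virtual simulators": 28,
    "objective feedback": 65,
}

# Physical and virtual simulators together make up the whole inventory.
assert inventory_counts["physical models"] + inventory_counts["virtual simulators"] == TOTAL_SIMULATORS

shares = {label: percent(n, TOTAL_SIMULATORS) for label, n in inventory_counts.items()}
# shares == {"physical models": 93.5, "virtual simulators": 6.5, "objective feedback": 15.0}
```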

Simulators for patient examination (MES) training allow physical care training, eg, respiratory gas exchange, intubation, and anesthesia delivery.28,35–38 The typical simulators in this category predominantly consist of (full body) mannequins that have anatomical structures and simulate physiological functions such as respiration and peripheral pulses (eg, Supplementary material: simulators 3 and 21).

IPIS simulators provide training on needle punctures and catheterization. Such simulators usually consist of a mimicked body part, eg, an arm or a torso. An example is the Lumbar Puncture simulator (Kyoto Kagaku Co., Kitanekoya-cho Fushimi-ku Kyoto, Japan).39,40 This simulator consists of a life-like lower torso with a removable “skin” that does not show the marks caused by previous needle punctures. Integral to the simulator is a replaceable “puncture block”, which can represent different types of patients (eg, “normal”, “obese”, “elderly”), and which is inserted under the “skin”.41

BLSS simulators allow for emergency care skills training, such as correct head tilt and chin lift, application of cervical collars, splints, and traction, or application to a spine board.42 These simulators predominantly consist of full body mannequins with primary features such as anatomically correct landmarks, articulated body parts that can be moved through the full range of motion, and removable mouthpieces and airways; they permit chest compressions and oral or nasal intubation, and provide a simulated carotid pulse (eg, Supplementary material: simulators 243, 245, and 270).

SuS simulators are used for the skills training required for open or minimally invasive surgery, such as knot tying, suturing, instrument and tissue handling, dissection, and simple and complex wound closure. Both physical and virtual simulators belong to this category. A representative example of a physical simulator is a life-sized human torso with thoracic and abdominal cavities and neck/trachea. Such a model is suited to training an entire open surgical procedure, including preparing the operative area, (local) anesthesia, tube insertion, and closure (eg, Supplementary material: simulators 355 and 357). The torso is covered with a polymer that mimics skin and contains red fluid that mimics blood. Virtual reality systems are becoming increasingly important in training for minimally invasive surgical procedures, especially for hand–eye coordination. The VS provide instrument handles, with or without a phantom limb, and a computer screen on which a virtual scene is presented (eg, the Simbionix simulators 316–322 [Simbionix, Cleveland, OH, USA] and the Simendo simulators 425–426 [Simendo B.V., Rotterdam, the Netherlands] in the Supplementary material). Software provides a “plug-and-play” connection to a personal computer via a USB port.43,44

Inventory of validation and study design quality assessment

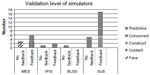

One hundred and thirty validation studies evaluated 35 commercially available medical simulators for levels of validity ranging from face to predictive validity (Figure 2). Of these 35 simulators, two (5.7%) were tested for face validity, four (11.4%) for content validity, seven (20%) for construct validity, 14 (40%) for concurrent validity, and eight (22.9%) for predictive validity (Figure 2). References for the validated simulators are given in brackets in the Supplementary material. Twenty-four (37%) of the 65 simulators that provide objective feedback have been validated: six in the MES category, one in IPIS, and 17 in SuS (Figure 1).
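The validation percentages can likewise be checked against the counts. The sketch assumes, as the sum of the per-level counts suggests, that each of the 35 simulators is counted once, under the highest level of validity it was tested for.

```python
def percent(part, total):
    """Share of `total` represented by `part`, in percent, rounded to one decimal."""
    return round(100 * part / total, 1)


# Validated simulators per highest validity level, as reported above.
validated_by_level = {
    "face": 2,
    "content": 4,
    "construct": 7,
    "concurrent": 14,
    "predictive": 8,
}
n_validated = sum(validated_by_level.values())       # 35
level_shares = {lvl: percent(n, n_validated) for lvl, n in validated_by_level.items()}

# Feedback simulators: 65 in total; 24 validated (6 MES + 1 IPIS + 17 SuS).
feedback_validated = 6 + 1 + 17
feedback_validated_share = percent(feedback_validated, 65)          # 36.9
feedback_unvalidated_share = percent(65 - feedback_validated, 65)   # 63.1
overall_validated_share = percent(n_validated, 433)                  # 8.1
```

The overall share, 35 of 433 simulators, is the roughly 8% given in the Summary.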

The number of validated simulators differed substantially between categories, as did the level of validity (Figure 2). SuS simulators accounted for most of the validated simulators (62.9%) and were most frequently validated at the highest level (Figure 2, predictive validity). MES simulators were primarily tested for content and concurrent validity. The proportion of validated IPIS and BLSS simulators was small (Figure 2).

The quality of the study designs was assessed on ten important aspects. Although all studies clearly described the research question, study population, and outcome measures, few studies met all other criteria on the checklist. Most studies did not perform a power analysis to determine the required number of participants before inclusion. Twelve percent of the 130 studies used a standardized assessment system or performed blind assessment. The majority of the studies (111) selected subjects correctly, either based on experience level or with a randomly selected control group. However, 20 studies did not select their control group randomly or had no control group at all (Table 3; 37 studies tested face or content validity).45–48

Each study used appropriate outcome measures, indicating psychomotor skills performance, to test the efficacy of the simulator. The most commonly used performance measures are listed in Table 4. To assess performance data objectively, the following standardized scoring methods were used: team leadership-interpersonal skills (TLIS) and emergency clinical care scales (ECCS),49 objective structured clinical examination (OSCE),27,50 objective structured assessment of technical skills (OSATS),39,51–55 and global rating scale (GRS).56–59 All other studies used assessment methods developed specifically for that study.

Methodologically speaking, the studies that tested concurrent and predictive validity outperformed the studies that tested face, content, and construct validity.

Discussion

This study reviewed the availability of medical simulators, their validity level, and the reliability of the study designs. Four hundred and thirty-three commercially available simulators were found, of which 405 (94%) were physical models. Evidence of validation was found for 35 (8%) simulators (Figure 2). The number of validated simulators was marginal, particularly in the IPIS and BLSS categories. Only SuS simulators were validated at the highest validity level. Sixty-three percent of the 65 simulators that provide feedback on performance have not been validated, which is remarkable, as these simulators take over part of the supervisor’s judgment. Studies that tested more powerful levels of validity (concurrent and predictive validity) were methodologically stronger than studies that tested more elementary levels of validity (face, content, and construct validity).

These findings can partly be explained: the necessity of high-level validation, and the extent to which simulators need to mimic reality, depend firstly on the type of skills training and secondly on the possible consequences for patients when medical psychomotor skills are insufficient. This could especially be the case for SuS, because minimally invasive surgical skills are presumably the most distinct from the everyday use of psychomotor skills and, as a result, are not well developed. In addition, when these skills are taught incorrectly, the consequences for the patient can be serious, eg, a large hemorrhage resulting from an incorrect incision. To guarantee patient safety, it is important that simulators designed for this type of training demonstrate high levels of validity.60,61 For other types of skills, such as patient examination, a lower validity level can be acceptable, because these skills are more closely related to the everyday use of psychomotor skills and require only a basic level of simulator training, which can quickly be adapted to a real-life situation.45,46 Moreover, determining face validity requires less extensive methodology than determining predictive validity.

Substantial heterogeneity among the studies made it difficult to score all validity studies on equal terms. However, in general, a substantial part of the validation studies showed methodological flaws. For example, many studies did not describe a power analysis, so it was difficult to judge whether they included an adequate number of participants. Furthermore, only 15 of 130 studies used standardized assessment methods and blinded assessors. Unvalidated assessment methods and unblinded ratings are less objective, which affects the reliability and validity of the test.26 This raises the question of whether the presented studies were adequate to determine the validity level of a given simulator. Future validity studies should focus on proper study design in order to increase the reliability of the results.

Our study has several limitations. Firstly, our inventory of commercially available medical simulators was performed solely by searching the Internet. We did not complement our search by contacting manufacturers or visiting conferences, so our list of available simulators may not be complete. Secondly, the level of validity of each simulator was also determined by searching public scientific databases. Manufacturers may well have performed validity tests with a small group of experts but refrained from publishing the results. It is also possible that studies have been rejected for publication or have not been published yet. Therefore, both the total number of simulators and the number of validated simulators may be underestimated. However, this does not undermine the finding that few simulators were validated; evidence for high levels of validity is especially scarce.

Our results should, firstly, make medical trainers aware that only a small number of simulators have actually been tested, whereas validation is truly important. Although unvalidated simulators may provide proper training, validity of a device is a condition for guaranteeing proper acquisition of psychomotor skills,1,6,7–9,18 and lack of validity brings the risk of acquiring improper skills.1,35 Secondly, a simulator that provides feedback independently of a professional supervisor should be validated to guarantee that the feedback is adequate and appropriate in real-life settings.1,63 Thirdly, reliable results of validity studies require proper study design; so far, well-conducted studies have been limited. Lastly, it is necessary to determine which skills educators will offer their trainees with a simulator, and the level of validity required to guarantee adequate training.

Our plea is for researchers to collaborate with manufacturers to develop questionnaires and protocols to test newly developed simulators. Simulators from the same category can be tested simultaneously with a large group of relevant participants.43 When objective evidence for basic levels of validity is obtained, it is important to publish the results so that this information is at the disposal of medical trainers. Before introducing a simulator in the training curriculum, it is recommended to first consider which skills training is needed, and the complexity and possible clinical consequences of executing those skills incorrectly. Subsequently, the minimum required level of validity should be determined for the simulator that allows for that type of skills training. The qualitative results support the concept that the level of validation depends on the difficulty level of skills training and the unforeseen consequences when skills are insufficient or lead to erroneous actions. This combination of selection criteria should guide medical trainers in the proper selection of a simulator for safe and adequate training.

Conclusion

For correct medical psychomotor skills training and to provide objective and correct feedback, a realistic training environment is essential. Scientific testing of simulators is an important way to prove and validate the training method. This review shows that 92% of the commercially available simulators are not known to have been tested for validity, which implies that there is no evidence that they actually improve individual medical psychomotor skills. Many of the validity studies performed for the 35 validated simulators show methodological flaws, which weaken the reliability of their results. It is also advisable for companies that manufacture medical simulators to validate their products and provide scientific evidence to their customers. In this way, a quality system becomes available, which contributes to adequate, safe, and affordable medical psychomotor skills training.

Disclosure

This research was funded by the Marti-Keuning Eckhart Foundation, Lunteren, the Netherlands. The authors report no conflict of interest.

References

Wilfong DN, Falsetti DJ, McKinnon JL, Daniel LH, Wan QC. The effects of virtual intravenous and patient simulator training compared to the traditional approach of teaching nurses: a research project on peripheral iv catheter insertion. J Infus Nurs. 2011;34(1):55–62. | |

Gorman PJ, Meier AH, Krummel TM. Simulation and virtual reality in surgical education: real or unreal? Arch Surg. 1999;134(11):1203–1208. | |

Ahmed R, Naqvi Z, Wolfhagen I. Psychomotor skills for the undergraduate medical curriculum in a developing country – Pakistan. Educ Health (Abingdon). 2005;18(1):5–13. | |

Wolpert DM, Ghahramani Z. Computational principles of movement neuroscience. Nat Neurosci. 2005;3 Suppl:1212–1217. | |

Wolpert DM, Ghahramani Z, Flanagan JR. Perspectives and problems in motor learning. Trends Cogn Sci. 2001;5(11):487–494. | |

Kunkler K. The role of medical simulation: an overview. Int J Med Robot. 2006;2(3):203–210. | |

Cannon WD, Eckhoff DG, Garrett WE Jr, Hunter RE, Sweeney HJ. Report of a group developing a virtual reality simulator for arthroscopic surgery of the knee joint. Clin Orthop Relat Res. 2006;442:21–29. | |

Heng PA, Cheng CY, Wong TT, et al. Virtual reality techniques. Application to anatomic visualization and orthopaedics training. Clin Orthop Relat Res. 2006;442:5–12. | |

Howells NR, Gill HS, Carr AJ, Price AJ, Rees JL. Transferring simulated arthroscopic skills to the operating theatre: a randomised blinded study. J Bone Joint Surg Br. 2008;90(4):494–499. | |

McCarthy AD, Moody L, Waterworth AR, Bickerstaff DR. Passive haptics in a knee arthroscopy simulator: is it valid for core skills training? Clin Orthop Relat Res. 2006;442:13–20. | |

Michelson JD. Simulation in orthopaedic education: an overview of theory and practice. J Bone Joint Surg Am. 2006;88(6):1405–1411. | |

Poss R, Mabrey JD, Gillogly SD, Kasser JR, et al. Development of a virtual reality arthroscopic knee simulator. J Bone Joint Surg Am. 2000;82-A(10):1495–1499. | |

Safir O, Dubrowski A, Mirksy C, Backstein D, Carnahan H. What skills should simulation training in arthroscopy teach residents? Int J CARS. 2008;3:433–437. | |

Tuijthof GJ, van Sterkenburg MN, Sierevelt IN, van OJ, Van Dijk CN, Kerkhoffs GM. First validation of the PASSPORT training environment for arthroscopic skills. Knee Surg Sports Traumatol Arthrosc. 2010;18(2):218–224. | |

Frost and Sullivan. Return on Investment Study for Medical Simulation Training: Immersion Medical, Inc. Laparoscopy Accutouchú System; 2012. Available from: http://www.healthleadersmedia.com/content/138774.pdf. Accessed June 23, 2014. | |

Insel A, Carofino B, Leger R, Arciero R, Mazzocca AD. The development of an objective model to assess arthroscopic performance. J Bone Joint Surg Am. 2009;91(9):2287–2295. | |

Tuijthof GJ, Visser P, Sierevelt IN, van Dijk CN, Kerkhoffs GM. Does perception of usefulness of arthroscopic simulators differ with levels of experience? Clin Orthop Relat Res. 2011;469(6):1701–1708. | |

Horeman T, Rodrigues SP, Jansen FW, Dankelman J, van den Dobbelsteen JJ. Force measurement platform for training and assessment of laparoscopic skills. Surg Endosc. 2010;24(12):3102–3108. | |

Issenberg SB, McGaghie WC, Hart IR, et al. Simulation technology for health care professional skills training and assessment. JAMA. 1999;282(9):861–866. | |

Goodman W. The world of civil simulators. Flight International Magazine. 1978;18:435. | |

Streufert S, Pogash R, Piasecki M. Simulation-based assessment of managerial competence: reliability and validity. Personnel Psychology. 1988;41(3):537–557. | |

Rolfe JM, Staples KJ, editors. Flight simulation. Cambridge University Press; 1988. | |

Keys B, Wolfe J. The Role of Management Games and Simulations in Education and Research. Journal of Management. 1990;16(2):307–336. | |

Schijven MP, Jakimowicz JJ. Validation of virtual reality simulators: Key to the successful integration of a novel teaching technology into minimal access surgery. Minim Invasive Ther Allied Technol. 2005;14(4):244–246. | |

Chmarra MK, Klein S, de Winter JC, Jansen FW, Dankelman J. Objective classification of residents based on their psychomotor laparoscopic skills. Surg Endosc. 2010;24(5):1031–1039. | |

van Hove PD, Tuijthof GJ, Verdaasdonk EG, Stassen LP, Dankelman J. Objective assessment of technical surgical skills. Br J Surg. 2010; 97(7):972–987. | |

Alinier G, Hunt WB, Gordon R. Determining the value of simulation in nurse education: study design and initial results. Nurse Educ Pract. 2004;4(3):200–207. | |

Donoghue AJ, Durbin DR, Nadel FM, Stryjewski GR, Kost SI, Nadkarni VM. Effect of high-fidelity simulation on Pediatric Advanced Life Support training in pediatric house staff: a randomized trial. Pediatr Emerg Care. 2009;25(3):139–144. | |

Monsieurs KG, De RM, Schelfout S, et al. Efficacy of a self-learning station for basic life support refresher training in a hospital: a randomized controlled trial. Eur J Emerg Med. 2012;19(4):214–219. | |

European Society for Emergency Medicine [homepage on the Internet]. Emergency Medicine; 2012. Available from: http://www.eusem.org/whatisem/. Accessed June 23, 2014.

Handley AJ. Basic life support. Br J Anaesth. 1997;79(2):151–158.

Oropesa I, Sanchez-Gonzalez P, Lamata P, et al. Methods and tools for objective assessment of psychomotor skills in laparoscopic surgery. J Surg Res. 2011;171(1):e81–e95.

Carter FJ, Schijven MP, Aggarwal R, et al. Consensus guidelines for validation of virtual reality surgical simulators. Surg Endosc. 2005;19(12):1523–1532.

Issenberg SB, McGaghie WC, Waugh RA. Computers and evaluation of clinical competence. Ann Intern Med. 1999;130(3):244–245.

Martin JT, Reda H, Dority JS, Zwischenberger JB, Hassan ZU. Surgical resident training using real-time simulation of cardiopulmonary bypass physiology with echocardiography. J Surg Educ. 2011;68(6):542–546.

Tan GM, Ti LK, Suresh S, Ho BS, Lee TL. Teaching first-year medical students physiology: does the human patient simulator allow for more effective teaching? Singapore Med J. 2002;43(5):238–242.

Nehring WM, Lashley FR. Current use and opinions regarding human patient simulators in nursing education: an international survey. Nurs Educ Perspect. 2004;25(5):244–248.

Fernandez GL, Lee PC, Page DW, D’Amour EM, Wait RB, Seymour NE. Implementation of full patient simulation training in surgical residency. J Surg Educ. 2010;67(6):393–399.

Bath J, Lawrence P, Chandra A, et al. Standardization is superior to traditional methods of teaching open vascular simulation. J Vasc Surg. 2011;53(1):229–234.

Uppal V, Kearns RJ, McGrady EM. Evaluation of M43B Lumbar puncture simulator-II as a training tool for identification of the epidural space and lumbar puncture. Anaesthesia. 2011;66(6):493–496.

Uppal JK, Varshney R, Hazari PP, Chuttani K, Kaushik NK, Mishra AK. Biological evaluation of avidin-based tumor pretargeting with DOTA-Triazole-Biotin constructed via versatile Cu(I) catalyzed click chemistry. J Drug Target. 2011;19(6):418–426.

Laerdal, helping save lives. 2012. Available from: http://www.laerdal.com/nl/. Accessed August 8, 2014.

Verdaasdonk EG, Stassen LP, Monteny LJ, Dankelman J. Validation of a new basic virtual reality simulator for training of basic endoscopic skills: the SIMENDO. Surg Endosc. 2006;20(3):511–518.

Verdaasdonk EG, Stassen LP, Schijven MP, Dankelman J. Construct validity and assessment of the learning curve for the SIMENDO endoscopic simulator. Surg Endosc. 2007;21(8):1406–1412.

Verma A, Bhatt H, Booton P, Kneebone R. The Ventriloscope® as an innovative tool for assessing clinical examination skills: appraisal of a novel method of simulating auscultatory findings. Med Teach. 2011;33(7):e388–e396.

Wilson M, Shepherd I, Kelly C, Pitzner J. Assessment of a low-fidelity human patient simulator for the acquisition of nursing skills. Nurse Educ Today. 2005;25(1):56–67.

Kruger A, Gillmann B, Hardt C, Doring R, Beckers SK, Rossaint R. Vermittlung von „soft skills“ für Belastungssituationen. [Teaching non-technical skills for critical incidents: Crisis resource management training for medical students]. Anaesthesist. 2009;58(6):582–588. German.

Shukla A, Kline D, Cherian A, et al. A simulation course on lifesaving techniques for third-year medical students. Simul Healthc. 2007;2(1):11–15.

Pascual JL, Holena DN, Vella MA, et al. Short simulation training improves objective skills in established advanced practitioners managing emergencies on the ward and surgical intensive care unit. J Trauma. 2011;71(2):330–337.

Karnath B, Thornton W, Frye AW. Teaching and testing physical examination skills without the use of patients. Acad Med. 2002;77(7):753.

Nadler I, Liley HG, Sanderson PM. Clinicians can accurately assign Apgar scores to video recordings of simulated neonatal resuscitations. Simul Healthc. 2010;5(4):204–212.

Chou DS, Abdelshehid C, Clayman RV, McDougall EM. Comparison of results of virtual-reality simulator and training model for basic ureteroscopy training. J Endourol. 2006;20(4):266–271.

Lucas S, Tuncel A, Bensalah K, et al. Virtual reality training improves simulated laparoscopic surgery performance in laparoscopy naive medical students. J Endourol. 2008;22(5):1047–1051.

Korets R, Mues AC, Graversen JA, et al. Validating the use of the Mimic dV-trainer for robotic surgery skill acquisition among urology residents. Urology. 2011;78(6):1326–1330.

Adler MD, Trainor JL, Siddall VJ, McGaghie WC. Development and evaluation of high fidelity simulation case scenarios for pediatric resident education. Ambul Pediatr. 2007;7(2):182–186.

Schout BM, Ananias HJ, Bemelmans BL, et al. Transfer of cysto-urethroscopy skills from a virtual-reality simulator to the operating room: a randomized controlled trial. BJU Int. 2010;106(2):226–231.

Schout BM, Muijtjens AM, et al. Acquisition of flexible cystoscopy skills on a virtual reality simulator by experts and novices. BJU Int. 2010;105(2):234–239.

Knoll T, Trojan L, Haecker A, Alken P, Michel MS. Validation of computer-based training in ureterorenoscopy. BJU Int. 2005;95(9):1276–1279.

Clayman RV. Assessment of basic endoscopic performance using a virtual reality simulator. J Urol. 2003;170(2 Pt 1):692.

Inspectie voor de Gezondheidszorg [Healthcare Inspectorate; homepage on the Internet]. Rapport ‘Risico’s minimaal invasieve chirurgie onderschat, kwaliteitssysteem voor laparoscopische operaties ontbreekt’ [Report: Risks of minimally invasive surgery underestimated; quality system for laparoscopic operations lacking]; 2012. Available from: http://www.igz.nl/zoeken/document.aspx?doc=Rapport+‘Risico’s+minimaal+invasieve+chirurgie+onderschat%2C+kwaliteitssysteem+voor+laparoscopische+operaties+ontbreekt’&docid=475. Accessed June 23, 2014.

Issenberg SB, McGaghie WC, Petrusa ER, Lee GD, Scalese RJ. Features and uses of high-fidelity medical simulations that lead to effective learning: a BEME systematic review. Med Teach. 2005;27(1):10–28.

Rosenthal R, Gantert WA, Hamel C, et al. Assessment of construct validity of a virtual reality laparoscopy simulator. J Laparoendosc Adv Surg Tech A. 2007;17(4):407–413.

Kuduvalli PM, Jervis A, Tighe SQ, Robin NM. Unanticipated difficult airway management in anaesthetised patients: a prospective study of the effect of mannequin training on management strategies and skill retention. Anaesthesia. 2008;63(4):364–369.

Oddone EZ, Waugh RA, Samsa G, Corey R, Feussner JR. Teaching cardiovascular examination skills: results from a randomized controlled trial. Am J Med. 1993;95(4):389–396.

Jones JS, Hunt SJ, Carlson SA, Seamon JP. Assessing bedside cardiologic examination skills using “Harvey,” a cardiology patient simulator. Acad Emerg Med. 1997;4(10):980–985.

de Giovanni D, Roberts T, Norman G. Relative effectiveness of high- versus low-fidelity simulation in learning heart sounds. Med Educ. 2009;43(7):661–668.

Ende A, Zopf Y, Konturek P, et al. Strategies for training in diagnostic upper endoscopy: a prospective, randomized trial. Gastrointest Endosc. 2012;75(2):254–260.

Ferlitsch A, Glauninger P, Gupper A, et al. Evaluation of a virtual endoscopy simulator for training in gastrointestinal endoscopy. Endoscopy. 2002;34(9):698–702.

Ritter EM, McClusky DA III, Lederman AB, Gallagher AG, Smith CD. Objective psychomotor skills assessment of experienced and novice flexible endoscopists with a virtual reality simulator. J Gastrointest Surg. 2003;7(7):871–877.

Eversbusch A, Grantcharov TP. Learning curves and impact of psychomotor training on performance in simulated colonoscopy: a randomized trial using a virtual reality endoscopy trainer. Surg Endosc. 2004;18(10):1514–1518.

Felsher JJ, Olesevich M, Farres H, et al. Validation of a flexible endoscopy simulator. Am J Surg. 2005;189(4):497–500.

Grantcharov TP, Carstensen L, Schulze S. Objective assessment of gastrointestinal endoscopy skills using a virtual reality simulator. JSLS. 2005;9(2):130–133.

Koch AD, Buzink SN, Heemskerk J, et al. Expert and construct validity of the Simbionix GI Mentor II endoscopy simulator for colonoscopy. Surg Endosc. 2008;22(1):158–162.

Shirai Y, Yoshida T, Shiraishi R, et al. Prospective randomized study on the use of a computer-based endoscopic simulator for training in esophagogastroduodenoscopy. J Gastroenterol Hepatol. 2008;23(7 Pt 1):1046–1050.

Buzink SN, Goossens RH, Schoon EJ, de Ridder H, Jakimowicz JJ. Do basic psychomotor skills transfer between different image-based procedures? World J Surg. 2010;34(5):933–940.

Fayez R, Feldman LS, Kaneva P, Fried GM. Testing the construct validity of the Simbionix GI Mentor II virtual reality colonoscopy simulator metrics: module matters. Surg Endosc. 2010;24(5):1060–1065.

Van Sickle KR, Buck L, Willis R, et al. A multicenter, simulation-based skills training collaborative using shared GI Mentor II systems: results from the Texas Association of Surgical Skills Laboratories (TASSL) flexible endoscopy curriculum. Surg Endosc. 2011;25(9):2980–2986.

Gettman MT, Le CQ, Rangel LJ, Slezak JM, Bergstralh EJ, Krambeck AE. Analysis of a computer based simulator as an educational tool for cystoscopy: subjective and objective results. J Urol. 2008;179(1):267–271.

Ogan K, Jacomides L, Shulman MJ, Roehrborn CG, Cadeddu JA, Pearle MS. Virtual ureteroscopy predicts ureteroscopic proficiency of medical students on a cadaver. J Urol. 2004;172(2):667–671.

Shah J, Montgomery B, Langley S, Darzi A. Validation of a flexible cystoscopy course. BJU Int. 2002;90(9):833–835.

Shah J, Darzi A. Virtual reality flexible cystoscopy: a validation study. BJU Int. 2002;90(9):828–832.

Mishra S, Kurien A, Patel R, et al. Validation of virtual reality simulation for percutaneous renal access training. J Endourol. 2010;24(4):635–640.

Vitish-Sharma P, Knowles J, Patel B. Acquisition of fundamental laparoscopic skills: is a box really as good as a virtual reality trainer? Int J Surg. 2011;9(8):659–661.

Zhang A, Hunerbein M, Dai Y, Schlag PM, Beller S. Construct validity testing of a laparoscopic surgery simulator (Lap Mentor): evaluation of surgical skill with a virtual laparoscopic training simulator. Surg Endosc. 2008;22(6):1440–1444.

Yamaguchi S, Konishi K, Yasunaga T, et al. Construct validity for eye-hand coordination skill on a virtual reality laparoscopic surgical simulator. Surg Endosc. 2007;21(12):2253–2257.

Ayodeji ID, Schijven M, Jakimowicz J, Greve JW. Face validation of the Simbionix LAP Mentor virtual reality training module and its applicability in the surgical curriculum. Surg Endosc. 2007;21(9):1641–1649.

Andreatta PB, Woodrum DT, Birkmeyer JD, et al. Laparoscopic skills are improved with LapMentor training: results of a randomized, double-blinded study. Ann Surg. 2006;243(6):854–860.

Hall AB. Randomized objective comparison of live tissue training versus simulators for emergency procedures. Am Surg. 2011;77(5):561–565.

Dayan AB, Ziv A, Berkenstadt H, Munz Y. A simple, low-cost platform for basic laparoscopic skills training. Surg Innov. 2008;15(2):136–142.

Boon JR, Salas N, Avila D, Boone TB, Lipshultz LI, Link RE. Construct validity of the pig intestine model in the simulation of laparoscopic urethrovesical anastomosis: tools for objective evaluation. J Endourol. 2008;22(12):2713–2716.

Youngblood PL, Srivastava S, Curet M, Heinrichs WL, Dev P, Wren SM. Comparison of training on two laparoscopic simulators and assessment of skills transfer to surgical performance. J Am Coll Surg. 2005;200(4):546–551.

Taffinder N, Sutton C, Fishwick RJ, McManus IC, Darzi A. Validation of virtual reality to teach and assess psychomotor skills in laparoscopic surgery: results from randomised controlled studies using the MIST VR laparoscopic simulator. Stud Health Technol Inform. 1998;50:124–130.

Jordan JA, Gallagher AG, McGuigan J, McClure N. Virtual reality training leads to faster adaptation to the novel psychomotor restrictions encountered by laparoscopic surgeons. Surg Endosc. 2001;15(10):1080–1084.

McNatt SS, Smith CD. A computer-based laparoscopic skills assessment device differentiates experienced from novice laparoscopic surgeons. Surg Endosc. 2001;15(10):1085–1089.

Kothari SN, Kaplan BJ, DeMaria EJ, Broderick TJ, Merrell RC. Training in laparoscopic suturing skills using a new computer-based virtual reality simulator (MIST-VR) provides results comparable to those with an established pelvic trainer system. J Laparoendosc Adv Surg Tech A. 2002;12(3):167–173.

Seymour NE, Gallagher AG, Roman SA, et al. Virtual reality training improves operating room performance: results of a randomized, double-blinded study. Ann Surg. 2002;236(4):458–463.

Grantcharov TP, Kristiansen VB, Bendix J, Bardram L, Rosenberg J, Funch-Jensen P. Randomized clinical trial of virtual reality simulation for laparoscopic skills training. Br J Surg. 2004;91(2):146–150.

Maithel S, Sierra R, Korndorffer J, et al. Construct and face validity of MIST-VR, Endotower, and CELTS: are we ready for skills assessment using simulators? Surg Endosc. 2006;20(1):104–112.

Hackethal A, Immenroth M, Burger T. Evaluation of target scores and benchmarks for the traversal task scenario of the Minimally Invasive Surgical Trainer-Virtual Reality (MIST-VR) laparoscopy simulator. Surg Endosc. 2006;20(4):645–650.

Tanoue K, Ieiri S, Konishi K, et al. Effectiveness of endoscopic surgery training for medical students using a virtual reality simulator versus a box trainer: a randomized controlled trial. Surg Endosc. 2008;22(4):985–990.

Debes AJ, Aggarwal R, Balasundaram I, Jacobsen MB. A tale of two trainers: virtual reality versus a video trainer for acquisition of basic laparoscopic skills. Am J Surg. 2010;199(6):840–845.

Botden SM, de Hingh IH, Jakimowicz JJ. Meaningful assessment method for laparoscopic suturing training in augmented reality. Surg Endosc. 2009;23(10):2221–2228.

Srivastava S, Youngblood PL, Rawn C, Hariri S, Heinrichs WL, Ladd AL. Initial evaluation of a shoulder arthroscopy simulator: establishing construct validity. J Shoulder Elbow Surg. 2004;13(2):196–205.

Brewin J, Nedas T, Challacombe B, Elhage O, Keisu J, Dasgupta P. Face, content and construct validation of the first virtual reality laparoscopic nephrectomy simulator. BJU Int. 2010;106(6):850–854.

Torkington J, Smith SG, Rees B, Darzi A. The role of the basic surgical skills course in the acquisition and retention of laparoscopic skill. Surg Endosc. 2001;15(10):1071–1075.

Schijven MP, Jakimowicz J. The learning curve on the Xitact LS 500 laparoscopy simulator: profiles of performance. Surg Endosc. 2004;18(1):121–127.

Schijven M, Jakimowicz J. Face-, expert, and referent validity of the Xitact LS500 laparoscopy simulator. Surg Endosc. 2002;16(12):1764–1770.

Schijven M, Jakimowicz J. Construct validity: experts and novices performing on the Xitact LS500 laparoscopy simulator. Surg Endosc. 2003;17(5):803–810.

Bajka M, Tuchschmid S, Fink D, Szekely G, Harders M. Establishing construct validity of a virtual-reality training simulator for hysteroscopy via a multimetric scoring system. Surg Endosc. 2010;24(1):79–88.

Bajka M, Tuchschmid S, Streich M, Fink D, Szekely G, Harders M. Evaluation of a new virtual-reality training simulator for hysteroscopy. Surg Endosc. 2009;23(9):2026–2033.

Buzink SN, Botden SM, Heemskerk J, Goossens RH, de Ridder H, Jakimowicz JJ. Camera navigation and tissue manipulation; are these laparoscopic skills related? Surg Endosc. 2009;23(4):750–757.

Buzink SN, Goossens RH, de Ridder H, Jakimowicz JJ. Training of basic laparoscopy skills on SimSurgery SEP. Minim Invasive Ther Allied Technol. 2010;19(1):35–41.

van der Meijden OA, Broeders IA, Schijven MP. The SEP “robot”: a valid virtual reality robotic simulator for the Da Vinci Surgical System? Surg Technol Int. 2010;19:51–58.

Wohaibi EM, Bush RW, Earle DB, Seymour NE. Surgical resident performance on a virtual reality simulator correlates with operating room performance. J Surg Res. 2010;160(1):67–72.

Bayona S, Fernández-Arroyo JM, Martín I, Bayona P. Assessment study of insight ARTHRO VR arthroscopy virtual training simulator: face, content, and construct validities. J Robot Surg. 2008;2(3):151–158.

Hasson HM, Kumari NV, Eekhout J. Training simulator for developing laparoscopic skills. JSLS. 2001;5(3):255–256.

Fichera A, Prachand V, Kives S, Levine R, Hasson H. Physical reality simulation for training of laparoscopists in the 21st century. A multispecialty, multi-institutional study. JSLS. 2005;9(2):125–129.

Hasson HM. Simulation training in laparoscopy using a computerized physical reality simulator. JSLS. 2008;12(4):363–367.

Hung AJ, Zehnder P, Patil MB, et al. Face, content and construct validity of a novel robotic surgery simulator. J Urol. 2011;186(3):1019–1024.

Lerner MA, Ayalew M, Peine WJ, Sundaram CP. Does training on a virtual reality robotic simulator improve performance on the da Vinci surgical system? J Endourol. 2010;24(3):467–472.

Kenney PA, Wszolek MF, Gould JJ, Libertino JA, Moinzadeh A. Face, content, and construct validity of dV-trainer, a novel virtual reality simulator for robotic surgery. Urology. 2009;73(6):1288–1292.

Iwata N, Fujiwara M, Kodera Y, et al. Construct validity of the LapVR virtual-reality surgical simulator. Surg Endosc. 2011;25(2):423–428.

Sethi AS, Peine WJ, Mohammadi Y, Sundaram CP. Validation of a novel virtual reality robotic simulator. J Endourol. 2009;23(3):503–508.

Mahmood T, Darzi A. A study to validate the colonoscopy simulator. Surg Endosc. 2003;17(10):1583–1589.

Ahlberg G, Hultcrantz R, Jaramillo E, Lindblom A, Arvidsson D. Virtual reality colonoscopy simulation: a compulsory practice for the future colonoscopist? Endoscopy. 2005;37(12):1198–1204.

Rowe R, Cohen RA. An evaluation of a virtual reality airway simulator. Anesth Analg. 2002;95(1):62–66.

Broe D, Ridgway PF, Johnson S, Tierney S, Conlon KC. Construct validation of a novel hybrid surgical simulator. Surg Endosc. 2006;20(6):900–904.

Van Sickle KR, McClusky DA III, Gallagher AG, Smith CD. Construct validation of the ProMIS simulator using a novel laparoscopic suturing task. Surg Endosc. 2005;19(9):1227–1231.

Pellen MG, Horgan LF, Barton JR, Attwood SE. Construct validity of the ProMIS laparoscopic simulator. Surg Endosc. 2009;23(1):130–139.

Botden SM, Berlage JT, Schijven MP, Jakimowicz JJ. Face validity study of the ProMIS augmented reality laparoscopic suturing simulator. Surg Technol Int. 2008;17:26–32.

Jonsson MN, Mahmood M, Askerud T, et al. ProMIS can serve as a da Vinci® simulator: a construct validity study. J Endourol. 2011;25(2):345–350.

Chandra V, Nehra D, Parent R, et al. A comparison of laparoscopic and robotic assisted suturing performance by experts and novices. Surgery. 2010;147(6):830–839.

Feifer A, Delisle J, Anidjar M. Hybrid augmented reality simulator: preliminary construct validation of laparoscopic smoothness in a urology residency program. J Urol. 2008;180(4):1455–1459.

Cesanek P, Uchal M, Uranues S, et al. Do hybrid simulator-generated metrics correlate with content-valid outcome measures? Surg Endosc. 2008;22(10):2178–2183.

Neary PC, Boyle E, Delaney CP, Senagore AJ, Keane FB, Gallagher AG. Construct validation of a novel hybrid virtual-reality simulator for training and assessing laparoscopic colectomy; results from the first course for experienced senior laparoscopic surgeons. Surg Endosc. 2008;22(10):2301–2309.

Ritter EM, Kindelan TW, Michael C, Pimentel EA, Bowyer MW. Concurrent validity of augmented reality metrics applied to the fundamentals of laparoscopic surgery (FLS). Surg Endosc. 2007;21(8):1441–1445.

Woodrum DT, Andreatta PB, Yellamanchilli RK, Feryus L, Gauger PG, Minter RM. Construct validity of the LapSim laparoscopic surgical simulator. Am J Surg. 2006;191(1):28–32.

Duffy AJ, Hogle NJ, McCarthy H, et al. Construct validity for the LAPSIM laparoscopic surgical simulator. Surg Endosc. 2005;19(3):401–405.

Verdaasdonk EG, Dankelman J, Lange JF, Stassen LP. Transfer validity of laparoscopic knot-tying training on a VR simulator to a realistic environment: a randomized controlled trial. Surg Endosc. 2008;22(7):1636–1642.

Dayal R, Faries PL, Lin SC, et al. Computer simulation as a component of catheter-based training. J Vasc Surg. 2004;40(6):1112–1117.

Nicholson WJ, Cates CU, Patel AD, et al. Face and content validation of virtual reality simulation for carotid angiography: results from the first 100 physicians attending the Emory NeuroAnatomy Carotid Training (ENACT) program. Simul Healthc. 2006;1(3):147–150.

Willoteaux S, Lions C, Duhamel A, et al. Réalité virtuelle en radiologie vasculaire interventionnelle : évaluation des performances. [Virtual interventional radiology: evaluation of performances as a function of experience]. J Radiol. 2009;90:37–41. French.

Van Herzeele I, Aggarwal R, Neequaye S, Darzi A, Vermassen F, Cheshire NJ. Cognitive training improves clinically relevant outcomes during simulated endovascular procedures. J Vasc Surg. 2008;48(5):1223–1230.

Van Herzeele I, Aggarwal R, Choong A, Brightwell R, Vermassen FE, Cheshire NJ. Virtual reality simulation objectively differentiates level of carotid stent experience in experienced interventionalists. J Vasc Surg. 2007;46(5):855–863.

Patel AD, Gallagher AG, Nicholson WJ, Cates CU. Learning curves and reliability measures for virtual reality simulation in the performance assessment of carotid angiography. J Am Coll Cardiol. 2006;47(9):1796–1802.

Berry M, Reznick R, Lystig T, Lonn L. The use of virtual reality for training in carotid artery stenting: a construct validation study. Acta Radiol. 2008;49(7):801–805.

Tedesco MM, Pak JJ, Harris EJ Jr, Krummel TM, Dalman RL, Lee JT. Simulation-based endovascular skills assessment: the future of credentialing? J Vasc Surg. 2008;47(5):1008–1011.

Sotto JA, Ayuste EC, Bowyer MW, et al. Exporting simulation technology to the Philippines: a comparative study of traditional versus simulation methods for teaching intravenous cannulation. Stud Health Technol Inform. 2009;142:346–351.

Britt RC, Novosel TJ, Britt LD, Sullivan M. The impact of central line simulation before the ICU experience. Am J Surg. 2009;197(4):533–536.

Cavaleiro AP, Guimaraes H, Calheiros F. Training neonatal skills with simulators? Acta Paediatr. 2009;98(4):636–639.

St Clair EW, Oddone EZ, Waugh RA, Corey GR, Feussner JR. Assessing housestaff diagnostic skills using a cardiology patient simulator. Ann Intern Med. 1992;117(9):751–756.

© 2014 The Author(s). This work is published and licensed by Dove Medical Press Limited. The full terms of this license are available at https://www.dovepress.com/terms.php and incorporate the Creative Commons Attribution - Non Commercial (unported, v3.0) License.

By accessing the work you hereby accept the Terms. Non-commercial uses of the work are permitted without any further permission from Dove Medical Press Limited, provided the work is properly attributed. For permission for commercial use of this work, please see paragraphs 4.2 and 5 of our Terms.